Perplexity Sonar Pro: Complete Guide (2025)

Perplexity Sonar Pro is a web-grounded, citation-first API model built for fast, verifiable question answering. It’s optimized for search-style Q&A workloads where source provenance and throughput matter more than purely creative output. This guide translates Sonar Pro into NLP terms: what it is, how it behaves as a model + retrieval stack, how to benchmark and measure it, worked pricing examples, copyable Node/Python snippets, prompt engineering recipes for citation-first answers, production cost controls, and a final buying checklist. Read this to decide whether Sonar Pro is the right model for your product pipeline.

What is Perplexity Sonar Pro?

From an NLP system design perspective, Sonar Pro is a search-aware generative model that integrates web retrieval and answer synthesis into a single API surface. It performs three core functions in most calls:

- Retrieve — Search the live web or a configured source set for relevant documents and snippets.

- Synthesize — Produce a concise answer that synthesizes the retrieved evidence.

- Provenance — Attach numbered citations and source metadata (URLs, titles, publish dates) so downstream UIs can show “where the model got this.”

Think of Sonar Pro as a pre-built retrieval-augmented generation (RAG) stack tuned for speed and citation-first outputs — a useful building block when you need traceable answers rather than purely creative text. The Perplexity docs describe Sonar Pro as an advanced search offering with grounding and pricing tiers specific to input/output tokens.
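The retrieve, synthesize, and provenance steps can be exercised with a single HTTP call. Below is a minimal sketch using only the Python standard library. It makes several assumptions that you should confirm against Perplexity's current API reference:

- the OpenAI-compatible endpoint is `https://api.perplexity.ai/chat/completions`;
- the model id is `sonar-pro`;
- the response carries either a `citations` list of URLs or a `search_results` list with `url`/`title`/`date`.

```python
import json
import urllib.request

# Assumed endpoint and model id; confirm both against Perplexity's API reference.
API_URL = "https://api.perplexity.ai/chat/completions"
MODEL = "sonar-pro"


def ask_sonar_pro(question: str, api_key: str, timeout: float = 30.0) -> dict:
    """Send one chat-completions request and return the parsed JSON body."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": "Answer concisely and cite sources as [n]."},
            {"role": "user", "content": question},
        ],
    }
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def extract_answer_and_sources(body: dict) -> tuple[str, list[dict]]:
    """Pull the answer text and a numbered source list out of a response body.

    Assumes an OpenAI-style `choices` array plus either a `search_results`
    list (objects with url/title/date) or a plain `citations` list of URLs.
    """
    answer = body["choices"][0]["message"]["content"]
    if body.get("search_results"):
        raw = body["search_results"]
    else:
        raw = [{"url": url} for url in body.get("citations", [])]
    # Number sources from 1 so they line up with [1], [2], ... markers in the answer.
    sources = [
        {"n": i, "url": s.get("url"), "title": s.get("title"), "date": s.get("date")}
        for i, s in enumerate(raw, start=1)
    ]
    return answer, sources
```

Usage is `extract_answer_and_sources(ask_sonar_pro("What is RAG?", key))`. The key can come from an environment variable of your choosing. The name `PERPLEXITY_API_KEY` is only a convention we assume here. Splitting the network call from parsing keeps the parsing logic testable offline.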

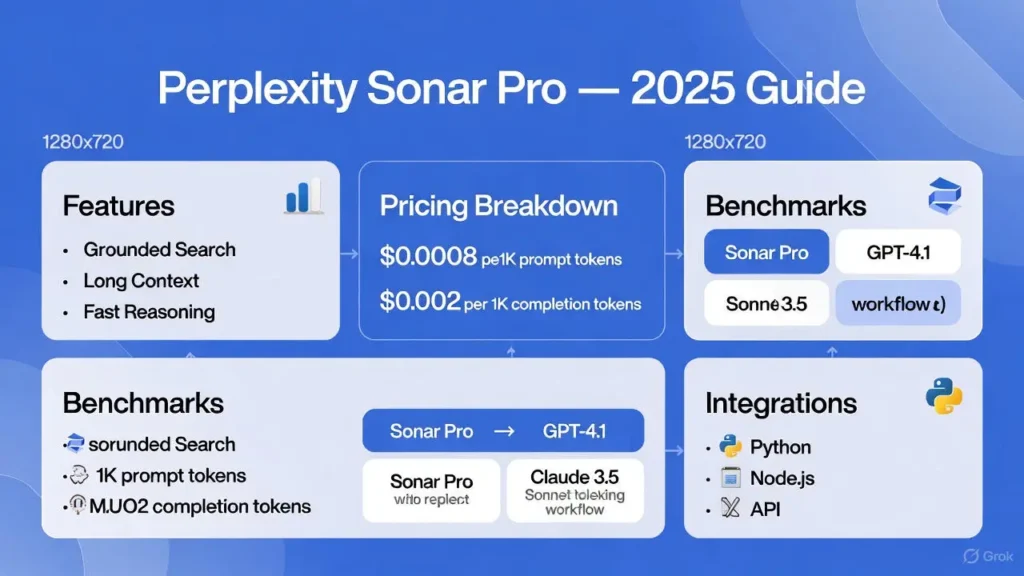

Key features

Below are the Sonar Pro features that matter when you design systems and pipelines.

- Built-in grounding and citations. The model returns numbered citations with URLs and metadata by default, so your frontend can display evidence alongside claims without building a separate citation layer. This reduces RAG integration work.

- Large context and search-aware architecture. Sonar variants support large context windows and can reason across many retrieved passages, enabling multi-document synthesis and follow-up chains. This is valuable when your prompt strategy includes a long user context or multiple documents.

- Throughput & latency engineering. Perplexity emphasizes speed (low latency, high qps). For real-time Q&A apps, Sonar is positioned to deliver faster decoding throughput than many general-purpose LLM offerings. If low TTFU (time to first usable token) is critical, Sonar’s architecture is relevant.

- Multiple Sonar variants. Sonar, Sonar Pro, Sonar Reasoning Pro, and Sonar Deep Research let you trade off cost, reasoning tokens, and search depth. Each has different pricing characteristics.

- Request-level search controls. Many workflows require controlling search depth, result counts, or source filters. Sonar Pro exposes parameters for adjusting search behavior (e.g., number of returned results or context size), which you can tune per request.

Why this matters: to an NLP engineer, Sonar Pro looks like a pre-composed RAG + answer-synthesis pipeline that offloads the heavy lifting of web retrieval and citation assembly to the vendor.
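Per-request search controls can be kept in one payload builder. The request fields below are how we understand Perplexity exposes these controls:

- `web_search_options.search_context_size`
- `search_domain_filter`
- `search_recency_filter`
- `max_tokens`

Treat the field names and their accepted values as assumptions to verify in the API reference. The sketch only builds the JSON body, so it can be unit-tested without network access.

```python
from typing import Optional

# Assumed values for search_context_size; verify in Perplexity's API reference.
CONTEXT_SIZES = {"low", "medium", "high"}


def build_payload(
    question: str,
    context_size: str = "medium",
    domains: Optional[list[str]] = None,
    recency: Optional[str] = None,
    max_tokens: int = 400,
) -> dict:
    """Build a per-request Sonar Pro body with tunable search controls."""
    if context_size not in CONTEXT_SIZES:
        raise ValueError(f"context_size must be one of {sorted(CONTEXT_SIZES)}")
    payload = {
        "model": "sonar-pro",
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,  # caps billable output tokens
        "web_search_options": {"search_context_size": context_size},
    }
    if domains:
        # Restrict retrieval to a source allowlist (assumed field name).
        payload["search_domain_filter"] = list(domains)
    if recency is not None:
        # Passed through unvalidated; check the docs for allowed values.
        payload["search_recency_filter"] = recency
    return payload
```

A route for breaking news might use `build_payload(q, context_size="high", recency="day")`. An evergreen FAQ route can stay on the defaults and a lower `max_tokens`.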

Who should choose Sonar Pro?

Use Sonar Pro when:

- You need verifiable answers (news readers, legal assistants, academic helpers, Q&A UIs where provenance matters).

- Your product’s UX surface shows sources and expects users to click through to original pages.

- You require moderate-to-high throughput for many short queries with citations.

- You prefer out-of-the-box web grounding instead of building a custom retrieval index and citation system.

Don’t choose Sonar Pro when:

- Your primary workload is cheap bulk long-form generation (novels, marketing copy): output-token pricing can be expensive.

- You already have a mature internal retrieval index and want only an LLM for formatting — then a cheaper base LLM + your index might be more affordable.

This is essentially a workload fit decision: If provenance is core, Sonar Pro is purpose-built for it. If cost-per-token is the primary constraint and provenance is non-critical, consider cheaper alternatives or hybrid architectures.

Benchmarks — What to Measure

When benchmarking Sonar Pro (or any retrieval-augmented model), measure the following — they directly affect UX and cost.

- Latency
  - TTFU (time to first usable token): important for perceived responsiveness.
  - Time-to-complete response: affects throughput and SLA planning.
  - Report median and p95 to capture typical and tail behavior.

- Token usage
  - Measure average input tokens and output tokens per query (including prompt tokens + retrieved context when charged).
  - This drives billing (input tokens × input rate + output tokens × output rate).

- Citation quality
  - Sample responses and verify whether citations actually support claims. Mark each claim as supported/unsupported.
  - Track citation recall (does the model cite the primary source?) and citation precision (are cited sources truly relevant?).

- Throughput
  - Requests per second under realistic concurrency.
  - Measure under warm and cold-start scenarios.

- Cost per 1k queries
  - Convert tokens measured into dollars using Perplexity’s published token rates (input & output). This is the real operating cost.

- Robustness
  - Evaluate how the model behaves with ambiguous queries, adversarial prompts, and follow-up chains.
  - Track hallucination rates and citation-mismatch incidents.

These metrics give you both UX and financial visibility, two non-negotiables for production NLP systems.
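The citation-quality metrics can be computed from human-reviewed responses. The sketch below is one way to do it. The `JudgedResponse` record and the metric names are hypothetical. "Recall" here follows this guide's definition: did the response cite the primary source? It is not set-based recall over every relevant document.

```python
from dataclasses import dataclass, field


@dataclass
class JudgedResponse:
    """One human-reviewed response from the benchmark set."""
    cited: set[str]       # URLs the model cited
    relevant: set[str]    # URLs a reviewer judged relevant to the question
    primary: str          # the source a reviewer considers primary
    claims_supported: list[bool] = field(default_factory=list)  # per-claim verdicts


def citation_metrics(rows: list[JudgedResponse]) -> dict[str, float]:
    cited_total = sum(len(r.cited) for r in rows)
    cited_relevant = sum(len(r.cited & r.relevant) for r in rows)
    primary_hits = sum(1 for r in rows if r.primary in r.cited)
    claims = [ok for r in rows for ok in r.claims_supported]
    return {
        # Share of cited sources that reviewers judged relevant.
        "citation_precision": cited_relevant / cited_total if cited_total else 0.0,
        # Share of responses that cite the primary source.
        "primary_source_recall": primary_hits / len(rows) if rows else 0.0,
        # Share of individual claims actually backed by their citation.
        "supported_claim_rate": sum(claims) / len(claims) if claims else 0.0,
    }
```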

Real-world pricing Examples

Perplexity publishes token-based pricing for Sonar models. As per the Perplexity docs, Sonar Pro token rates are approximately $3 per 1M input tokens and $15 per 1M output tokens, plus request fees for certain Pro Search features. Use these numbers for planning, but always re-check the docs before billing.

Quick token primer

- 1 token ≈ 0.75 English words.

- 1,000 tokens ≈ 750 words.

Scenario — Small research bot

- Queries/month: 10,000

- Avg input tokens/query: 150

- Avg output tokens/query: 200

Calculations

- Input tokens/month = 10,000 × 150 = 1.5M → cost = 1.5 × $3 = $4.50

- Output tokens/month = 10,000 × 200 = 2.0M → cost = 2.0 × $15 = $30.00

- Total ≈ $34.50 / month in token costs (illustrative; excludes any request fees).

Scenario — Heavy research pipeline

- Queries/month: 100,000

- Avg input tokens/query: 300

- Avg output tokens/query: 600

Calculations

- Input tokens = 100,000 × 300 = 30M → cost = 30 × $3 = $90

- Output tokens = 100,000 × 600 = 60M → cost = 60 × $15 = $900

- Total ≈ $990 / month in token costs (illustrative; excludes any request fees).

Takeaway: Output-token pricing dominates the bill. If your product generates long answers per user action, Sonar Pro’s per-output-token price will be the main cost lever. For scenario planning and comparisons, tools like Helicone’s LLM cost calculator can help you model variations.
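The scenario arithmetic above can be wrapped in a small function so you can rerun it with your own measurements. Rates default to the $3 and $15 per 1M tokens quoted in this guide. `request_fee_per_k` is a hypothetical knob for per-request fees. It is left at zero because the scenarios above exclude such fees. Re-check all rates before relying on the output.

```python
def monthly_cost(
    queries: int,
    avg_input_tokens: float,
    avg_output_tokens: float,
    input_rate_per_m: float = 3.0,    # $ per 1M input tokens (rate quoted in this guide)
    output_rate_per_m: float = 15.0,  # $ per 1M output tokens
    request_fee_per_k: float = 0.0,   # hypothetical: $ per 1,000 requests, if fees apply
) -> float:
    """Estimated monthly bill in dollars, rounded to cents."""
    input_cost = queries * avg_input_tokens * input_rate_per_m / 1_000_000
    output_cost = queries * avg_output_tokens * output_rate_per_m / 1_000_000
    request_cost = queries * request_fee_per_k / 1_000
    return round(input_cost + output_cost + request_cost, 2)


print(monthly_cost(10_000, 150, 200))   # small research bot
print(monthly_cost(100_000, 300, 600))  # heavy research pipeline
```

In the heavy pipeline, output tokens account for $900 of the $990. This is why capping output length is usually the first lever to pull.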

Production tips

- Add retries with exponential backoff.

- Use streaming (if supported) to improve perceived latency.

- Log request/response tokens and store them for audits (PII-scrubbed).

- Monitor token usage and set daily cost alarms.
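The first tip can be implemented as a minimal retry helper with capped exponential backoff and "full jitter", using only the standard library. Which exceptions count as transient is an assumption. The defaults cover timeouts and dropped connections. With `urllib` you would likely also retry `urllib.error.URLError` and map HTTP 429/5xx responses to a retry. The injectable `sleep` makes the helper testable without real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: tuple[type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying transient failures with capped exponential backoff.

    Full jitter: before retry k (k = 0, 1, ...) wait a uniform random time in
    [0, min(max_delay, base_delay * 2**k)] so many clients don't retry in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```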

Pro tips

- Trim long user inputs client-side — summarize or extract salient parts before sending.

- Instruct the model explicitly to place a citation marker (e.g., [1]) after every claim.

- Use a “short summary + sources” output format to control output length.

- Post-validate citations server-side: fetch the cited URL and confirm relevant evidence exists.

These practices map neatly to a RAG-style pipeline and, when enforced, reduce hallucination risk.
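To make "cite after every claim" enforceable, pair an explicit instruction with a server-side check. The prompt wording below is our own example, not a Perplexity template. The sentence splitter is a heuristic and will mis-split abbreviations such as "e.g.". Flagged sentences can be dropped, re-asked, or shown without an implied claim of support.

```python
import re

# Hypothetical system prompt; the wording is an example, not a vendor template.
CITATION_SYSTEM_PROMPT = (
    "Answer in at most 3 short sentences. "
    "End every factual sentence with a citation marker like [1] that refers "
    "to the numbered sources. Do not state anything you cannot cite."
)

_SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")  # split on whitespace after . ! ?
_MARKER = re.compile(r"\[(\d+)\]")


def uncited_sentences(answer: str, num_sources: int) -> list[str]:
    """Return sentences lacking a valid [n] marker (1 <= n <= num_sources)."""
    bad = []
    for sentence in _SENTENCE_SPLIT.split(answer.strip()):
        if not sentence:
            continue
        refs = [int(n) for n in _MARKER.findall(sentence)]
        if not refs or any(n < 1 or n > num_sources for n in refs):
            bad.append(sentence)
    return bad
```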

Cost Control & Production Best Practices

To operate Sonar Pro economically:

- Compress inputs — Summarize user documents locally and send distilled context only.

- Limit max_tokens — Cap outputs per route; return “Read more” links instead of full, long answers when appropriate.

- Cache aggressively — Cache answers and partial outputs for TTLs; many queries are repeatable.

- Use step-down models — Only call Sonar Pro when you require fresh web grounding. For lower-risk or internal docs, use cheaper models for formatting.

- Batch when possible — If you can batch multiple micro-queries into a single request, you can save overhead.

- Monitor & alert — Track daily token burn and request spikes. Enforce hard limits on production keys.

- Server-side validation — Validate citations before surfacing high-stakes claims to users.

These measures let you keep Sonar Pro where it matters while optimizing for budget where provenance is unnecessary.
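Two of the levers above, caching and step-down routing, fit in one small module. The sketch below makes three assumptions. The TTL cache is in-process only; a shared store such as Redis is the usual production choice. `cheap-formatter-model` is a hypothetical placeholder. `call_model` stands in for whatever client you use.

```python
import hashlib
import time
from typing import Callable, Optional


class TTLCache:
    """Tiny in-process cache; each entry expires ttl_seconds after it is stored."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(text: str) -> str:
        # Lowercase and collapse whitespace so trivially different phrasings share a key.
        normalized = " ".join(text.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, text: str) -> Optional[str]:
        k = self._key(text)
        entry = self._store.get(k)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl:
            del self._store[k]  # expired: evict and report a miss
            return None
        return value

    def put(self, text: str, value: str) -> None:
        self._store[self._key(text)] = (self.clock(), value)


def choose_model(needs_fresh_web_grounding: bool) -> str:
    # "cheap-formatter-model" is a hypothetical placeholder for your cheaper LLM.
    return "sonar-pro" if needs_fresh_web_grounding else "cheap-formatter-model"


def answer(
    query: str,
    needs_web: bool,
    cache: TTLCache,
    call_model: Callable[[str, str], str],
) -> str:
    """Serve from cache when possible; otherwise route to the cheapest adequate model."""
    model = choose_model(needs_web)
    cache_key = f"{model}\n{query}"  # keep answers from different models separate
    cached = cache.get(cache_key)
    if cached is not None:
        return cached
    result = call_model(model, query)
    cache.put(cache_key, result)
    return result
```

Pick the TTL per route. Breaking-news answers go stale in minutes, while definitional answers can often be cached for days.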

Troubleshooting common issues

Citations Are Shallow or Unrelated.

- Action: Increase num_search_results (if supported) and re-run the query. Post-validate citation text by fetching the target page and running a snippet-match.
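Below is a crude version of the snippet-match check. It fetches the cited page, strips markup with the standard-library `html.parser`, and measures how many of the claim's content words appear on the page. This is lexical overlap, not entailment. The stopword list and any pass threshold (say, 0.6) are hypothetical and should be tuned on your labeled claims. Some sites block or alter content for non-browser clients, so a low score can mean a fetch problem rather than a bad citation.

```python
import re
import urllib.request
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects page text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def page_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    return " ".join(parser.parts)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


_WORD = re.compile(r"[a-z0-9]+")
_STOP = {"the", "and", "for", "that", "with", "this", "from", "are", "was", "were", "has", "have"}


def support_score(claim: str, text: str) -> float:
    """Fraction of the claim's content words (3+ chars, not stopwords) found in text."""
    claim_words = {w for w in _WORD.findall(claim.lower()) if len(w) > 2 and w not in _STOP}
    if not claim_words:
        return 0.0
    page_words = set(_WORD.findall(text.lower()))
    return len(claim_words & page_words) / len(claim_words)
```

A typical check is `support_score(claim, page_text(fetch_html(url)))`, with claims below your threshold flagged for review.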

Model Hallucinates Facts.

- Action: Require an inline citation after each factual sentence. Lower the temperature and make the instructions more explicit (for example, answer only from the retrieved sources).

High Latency Spikes.

- Action: Use streaming to reduce TTFU, add retries, and add client-side caching for common queries.
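The sketch below consumes a streamed response and measures TTFU. It assumes Perplexity's `stream: true` mode emits OpenAI-style server-sent events: `data: {...}` lines whose `choices[0].delta.content` carries text, possibly ending with `data: [DONE]`. Verify the chunk format before relying on it.

```python
import json
import time
from typing import Callable, Iterable, Iterator


def iter_deltas(lines: Iterable[str]) -> Iterator[str]:
    """Yield text deltas from OpenAI-style server-sent-event (SSE) lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and non-data SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel, if the server sends one
        chunk = json.loads(data)
        choices = chunk.get("choices") or [{}]
        delta = choices[0].get("delta", {}).get("content")
        if delta:
            yield delta


def consume_with_ttfu(
    lines: Iterable[str],
    start: float,
    clock: Callable[[], float] = time.perf_counter,
) -> tuple[str, float]:
    """Join streamed deltas; return (text, seconds from `start` to first non-empty delta).

    TTFU is NaN if nothing was streamed.
    """
    first_at = None
    parts = []
    for delta in iter_deltas(lines):
        if first_at is None:
            first_at = clock() - start
        parts.append(delta)
    return "".join(parts), (first_at if first_at is not None else float("nan"))
```

With `urllib`, record `start = time.perf_counter()` before calling `urlopen`. Then pass `(line.decode("utf-8") for line in resp)` as `lines`, so TTFU includes connection and search time.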

Excessive Token Use.

- Action: Set max_tokens, compress input, and offload long-form formatting to a cheaper model.

Auditability / Compliance

- Store request/response pairs (with PII redaction) for regulated verticals. Surface source metadata (author, date, domain) in UI to support EEAT.

Sonar Pro vs general-purpose LLMs

| Feature | Sonar Pro | General-purpose LLM (no built-in retrieval) |
| --- | --- | --- |
| Built-in citations | Yes (citation-first outputs) | No (requires custom retrieval) |
| Best use | Research & citation-backed Q&A | Creative writing, generative tasks |
| Pricing | Token-based, premium output pricing | Varies; often cheaper for long text |
| Speed | Engineered for throughput | Varies by provider |
| Integration effort | Lower for web-grounded Q&A | Higher (you must add RAG + citation layer) |

Bottom line: Sonar Pro is a purpose-built choice for provenance-first applications. If your product priorities align with verifiable Q&A, Sonar Pro reduces integration overhead.

Step-by-step benchmarking plan you can run

Test set

- 50 queries: 20 short facts, 20 deep research queries, 10 follow-up chains.

Script

- Use the same prompt templates for each run across models.

- Record full request/response JSON including tokens.

Measure

- Latency: median & p95.

- Tokens used: mean input and output tokens per query.

- Citation count: citations per response.

- Citation correctness: manual validation of 200 claims.

Compute cost

- Convert measured token counts to dollars using Perplexity’s published $/1M rates for Sonar Pro (e.g., $3 input / $15 output). Include request fees when benchmarking Pro Search contexts.

Report

- Median latency, p95 latency, citation accuracy %, and $ per 1k queries.

This gives an apples-to-apples view that you can use to compare to competitors or alternative architectures.
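Below is a sketch of the Report step. Given one record per benchmark call, it computes:

- median and p95 latency (nearest-rank percentile);
- citation accuracy;
- token cost per 1k queries.

The `RunRecord` fields are hypothetical. Fill them from the logged request/response JSON and your manual claim review. Request fees are not included.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class RunRecord:
    """One benchmark call, as logged from the request/response JSON."""
    latency_ms: float
    input_tokens: int
    output_tokens: int
    claims_checked: int
    claims_supported: int


def nearest_rank(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def report(runs: list[RunRecord], in_rate_per_m: float = 3.0,
           out_rate_per_m: float = 15.0) -> dict[str, float]:
    latencies = [r.latency_ms for r in runs]
    checked = sum(r.claims_checked for r in runs)
    supported = sum(r.claims_supported for r in runs)
    mean_in = statistics.fmean(r.input_tokens for r in runs)
    mean_out = statistics.fmean(r.output_tokens for r in runs)
    per_query = (mean_in * in_rate_per_m + mean_out * out_rate_per_m) / 1_000_000
    return {
        "median_latency_ms": statistics.median(latencies),
        "p95_latency_ms": nearest_rank(latencies, 95),
        "citation_accuracy_pct": 100 * supported / checked if checked else 0.0,
        "usd_per_1k_queries": per_query * 1_000,  # token costs only
    }
```

Running the same `report` over each model's records yields the apples-to-apples table described above.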

Final verdict — who should buy Sonar Pro

Buy Sonar Pro if:

- Your product must provide evidence for claims (legal research, news, academic tools).

- You value fast, citation-first answers and are willing to manage output-token costs.

- You’ll implement caching, token controls, and server-side validation.

Consider alternatives if:

- Your workload is mostly long-form content where citations aren’t necessary.

- You already operate your own retrieval index and prefer a cheaper base LLM for formatting.

FAQs

Q1: Is Sonar Pro the same as Sonar Reasoning Pro?

A1: No. Sonar Pro prioritizes citation-backed Q&A. Sonar Reasoning Pro incorporates explicit reasoning tokens and different behavior for structured “thinking” outputs; it has different pricing and response formats.

Q2: Can I combine Sonar Pro with my own internal retrieval?

A2: Yes. You can combine Sonar Pro’s web grounding with your internal retrieval by sending internal docs or combining outputs. Many teams use hybrid retrieval: a local index for private docs and Sonar Pro for web grounding.

Q3: How accurate is Sonar Pro?

A3: Perplexity publishes factuality claims and benchmarks, but accuracy varies by domain. Run a validation suite on representative queries for your vertical before committing to production.

Q4: How can I keep Sonar Pro costs down?

A4: Summarize inputs, cap outputs, cache aggressively, and step down to cheaper models when citations aren’t required. Use cost calculators such as Helicone’s to model scenarios.

Q5: Where can I find current pricing?

A5: Perplexity’s official docs publish token rates and model pages; re-check these pages before launching.

Closing

- Run the 50-case benchmark (metrics: median/p95 latency, token usage, citation accuracy).

- Confirm pricing on the Perplexity docs page and model page.

- Implement server-side citation validation for regulated verticals.

- Add caching + step-down model routing to control costs.

- Prepare the UI to display source metadata (author, date, domain) to support EEAT.