Introduction

When Perplexity announced R1-1776 in February 2025, it was more than another model checkpoint — it was an explicit statement about reproducibility, guardrails, and product design choices. Perplexity R1-1776 is a post-trained derivative of DeepSeek-R1, published with the stated goal of making certain application-level content filters less restrictive so researchers could inspect model behavior without product-layer censorship. For NLP engineers and applied researchers, that meant an opportunity to study model-level behavior and a responsibility to manage the increased risk surface that comes from fewer application-level filters. This guide collects what practitioners need to know: official source links, practical specs, reproducible benchmark methodology, deployment recipes (local and hosted), quantization & inference guidance, and a prioritized safety checklist to follow before any production usage. Read this as a hands-on runbook for controlled experimentation and responsible deployment.

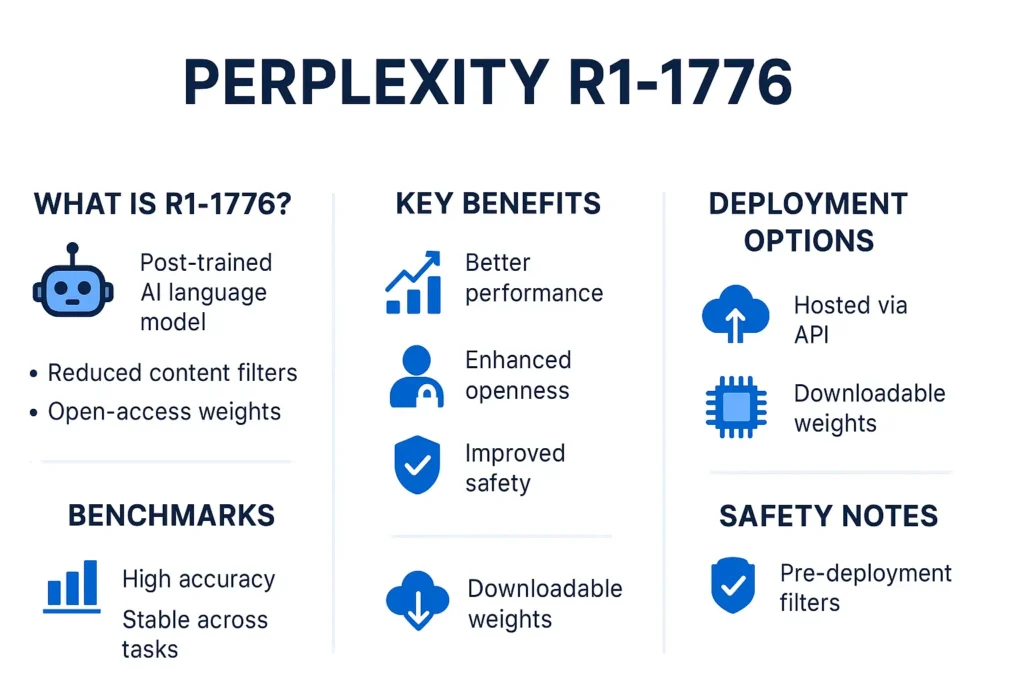

What is Perplexity R1-1776?

High Level:

R1-1776 is a post-trained variant of DeepSeek-R1 released by Perplexity on February 18, 2025. Perplexity framed it as an open-sourced version tuned to remove or relax certain application-level censorship behaviors while preserving the model’s reasoning capabilities. The announcement emphasized researcher access, downloadable weights on Hugging Face, and hosted options through Perplexity’s Sonar API for experimentation.

Why The Name “1776”?

The name drew attention because 1776 evokes freedom and independence in some cultural contexts, most obviously the United States. Observers noted that the choice amplified public debate about openness and values in AI, and community commentary on the naming and its motivations fed further media coverage.

Short Timeline:

- Jan 2025: DeepSeek-R1 draws scrutiny for internal and application-level censorship mechanisms; security and bias investigations follow.

- Feb 18, 2025: Perplexity publishes "Open-sourcing R1 1776"; weights become available on Hugging Face, and hosted access through the Sonar API is announced.

- Spring–Summer 2025: Community benchmarks and anecdotal reports appear, showing mixed results across tasks and safety probes.

- Later 2025: Perplexity removes R1-1776 from the user-facing UI selector to focus engineering on newer model lines; hosted and offline access patterns change accordingly.

Key Specs & Where To Get The Model:

Where To Download:

- Perplexity blog announcement: the official release post, "Open-sourcing R1 1776". Use this as the canonical announcement and for any official clarifications.

- Hugging Face repository: perplexity-ai/r1-1776 contains model weights, the model card, and related assets. Always read the model card and license before deployment.

- Perplexity Sonar API: Perplexity offered a hosted option (Sonar) for experimentation; check current docs and rate limits if using a hosted path.

Notable Practical Specs:

- Model family: Post-trained variant of DeepSeek-R1 (reasoning model lineage).

- Behavior: Tuned to reduce certain application-level censorship signals; this makes outputs more direct for some sensitive prompts compared to DeepSeek-R1. (Note: behavior varies with prompt phrasing, temperature, and decoding.)

- Context window: Varies by release and mirror; verify the exact limits in the Hugging Face repo or the specific fork you plan to use. Do not assume context size is identical across community forks.

- License: Model card contains license terms and usage constraints — double-check before commercial use because model cards can be updated post-release.
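
One way to act on the "verify the exact limits" advice is to read the declared context length straight from the configuration file that ships with the weights you downloaded. The sketch below is illustrative only: it assumes the snapshot directory contains a Hugging Face style `config.json`, and the key names in `CONTEXT_KEYS` are assumptions that may not match every fork.

```python
import json
from pathlib import Path
from typing import Optional

# Assumption: the context length is declared under one of these keys.
# Forks may use a different key, so extend this tuple as needed.
CONTEXT_KEYS = ("max_position_embeddings", "max_sequence_length", "n_positions")


def read_context_window(model_dir: str) -> Optional[int]:
    """Return the context length declared in config.json, or None if no known key is present."""
    config_path = Path(model_dir) / "config.json"
    config = json.loads(config_path.read_text(encoding="utf-8"))
    for key in CONTEXT_KEYS:
        value = config.get(key)
        if isinstance(value, int):
            return value
    return None
```

Running this against each mirror or fork before benchmarking makes differences in declared context size visible early, instead of surfacing later as truncated prompts.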

How Perplexity R1-1776 Differs From DeepSeek-R1

High-level Differences:

- Behavioral tuning / post-training: R1-1776 was post-trained with the explicit aim of removing or relaxing application-level content filters that DeepSeek-R1 carried in production. That alters the model’s response surface on geopolitically sensitive or otherwise restricted topics.

- Open weights: Perplexity provided weights for offline download; this enables local quantization, forks, and independent evaluation. That openness facilitates reproducible research but also shifts responsibility for safety onto deployers.

- Product lifecycle: Perplexity later removed R1-1776 from its UI selector to reduce engineering maintenance and concentrate on newer model lines. Removal from a UI does not necessarily mean the weights vanish; hosted or offline options may persist, but with reduced product support.

Practical implication:

- Expect more direct answers to some sensitive prompts, which can be useful for certain research aims (e.g., probing alignment) but increases risk for consumer-facing products without additional layers of filtration and human review.

Safety, Moderation & Legal Considerations

Main Risk:

R1-1776 was explicitly post-trained to remove certain content filters, which raises the risk of producing content that violates laws, policies, or platform rules. Treat it as higher risk than conservative, product-tuned models.

Multi-Layered Safety Checklist

- Pre-prompt filtering: Reject or rewrite clearly disallowed inputs (illicit instructions, detailed wrongdoing requests) before sending to the model.

- Model output classifiers: Run every model output through up-to-date classifiers for toxicity, illegal instructions, and PII leakage. Use ensembles where feasible.

- Human-in-the-loop: Escalate high-risk or ambiguous outputs to moderators before release.

- Regional gating & geo-fencing: Disable or restrict capabilities in jurisdictions with legal constraints.

- Logging & forensics: Maintain immutable logs for incident investigation (inputs, outputs, model config, timestamps).

- Continuous red-teaming: Run automated jailbreak tests plus periodic manual adversarial testing. Treat findings as defects with owners and SLAs.
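
The first few layers of the checklist above can be sketched as a single wrapper around the model call. This is a minimal illustration, not a production moderation system: `BLOCKED_INPUT`, `toy_risk_score`, and the 0.5 review threshold are hypothetical stand-ins for maintained policy lists and trained classifiers, and `generate` is whatever function you use to call the model.

```python
import json
import re
import time
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical pre-filter patterns, for illustration only.
BLOCKED_INPUT = [re.compile(r"\bhow to (build|make) a bomb\b", re.I)]


@dataclass
class Decision:
    status: str  # "blocked_input", "needs_review", or "released"
    text: str


def toy_risk_score(text: str) -> float:
    """Stand-in for an output classifier; returns a score in [0, 1]."""
    flagged = ("password", "ssn", "explosive")
    hits = sum(word in text.lower() for word in flagged)
    return min(1.0, hits / 2)


def moderate(prompt: str, generate: Callable[[str], str], log: List[str],
             review_threshold: float = 0.5) -> Decision:
    # Layer 1: pre-prompt filtering, applied before the model is called.
    if any(pattern.search(prompt) for pattern in BLOCKED_INPUT):
        decision = Decision("blocked_input", "")
    else:
        output = generate(prompt)
        # Layer 2: score the model output.
        score = toy_risk_score(output)
        # Layer 3: route risky outputs to a human instead of releasing them.
        status = "needs_review" if score >= review_threshold else "released"
        decision = Decision(status, output)
    # Layer 4: record every decision, including blocked inputs.
    log.append(json.dumps({
        "ts": time.time(),
        "prompt": prompt,
        "status": decision.status,
        "output": decision.text,
    }))
    return decision
```

In practice the in-memory `log` list would be replaced by append-only storage, and anything marked `needs_review` would be held back until a moderator approves it.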

Legal & Compliance

- Export and jurisdictional rules: Confirm whether running a less-filtered model is lawful in your target countries; regulators have scrutinized models in the DeepSeek family.

- License compliance: Recheck the model card before commercial use; terms can change.

Perplexity R1-1776 vs Alternatives: Quick Technical Comparison

| Model / Option | Strengths | Weaknesses | When to choose |
| --- | --- | --- | --- |
| R1-1776 | Open weights; fewer application-level filters; useful for open research and reproducibility. | Requires strong safety engineering; mixed community signals on long-chain reasoning; UI deprecated. | Research projects, internal tooling with strong human review, and alignment studies. |
| DeepSeek-R1 (original) | Conservative on sensitive topics; had product-level guardrails. | May block legitimate research prompts; less open for offline evaluation. | Public-facing chatbots that need strict compliance. |
| Other open models | Active ecosystem, forks, and tooling; diverse license choices. | Varied tradeoffs in quality and safety; metadata and support differ. | When community tooling or permissive licensing is a priority. |

How to Run a Quick, Reproducible Benchmark

- Pick a harness: Start from an existing benchmark template (lm-eval, custom scripts) and place everything in version control.

- Install deps: bitsandbytes, transformers, and evaluation libraries. Pin versions.

- Download weights: Get perplexity-ai/r1-1776 from Hugging Face and confirm checksums.

- Prepare test suites: GSM8K, MMLU subset, TruthfulQA probes, plus a curated red-team set (e.g., 50–200 targeted prompts).

- Quantization parity: Run the same suite at FP16 and at 8-bit, repeating each run. Capture outputs and metadata.

- Run seeds: 3–5 seeds minimum; save outputs to structured CSV/JSON with metadata.

- Publish: Push raw outputs, configs, and a short README to GitHub; include an open DOI or permanent link if possible.
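
The "confirm checksums" step above can be sketched with the standard library alone. The helper names are illustrative, and the expected digests are assumed to come from a source you trust, such as the hashes published alongside the weight files; no real digests are listed here.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large weight shards are not loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_files(expected: Dict[str, str], root: str) -> List[str]:
    """Return the relative paths that are missing or whose SHA-256 does not match."""
    failures = []
    for rel_path, want in expected.items():
        target = Path(root) / rel_path
        if not target.is_file() or sha256_of(str(target)) != want.lower():
            failures.append(rel_path)
    return failures
```

An empty list from `verify_files` means every listed file is present and matches its digest; storing the `expected` mapping in version control next to the benchmark configs lets others check the same files.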

Pro tip: Add a Dockerfile or Docker Compose file to capture exact runtime dependencies and reduce friction for third-party reproducibility.
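
The "quantization parity" and "run seeds" steps in the list above can be sketched as a small driver that runs the same evaluation at two precisions and saves a structured report. This is a sketch under stated assumptions: `evaluate` is a hypothetical callable you supply (for example, a wrapper around your harness) that takes a precision label and a seed and returns a score, and the labels `"fp16"` and `"int8"` are placeholders.

```python
import json
import statistics
from typing import Callable, Dict, List


def run_seeds(evaluate: Callable[[str, int], float], precision: str,
              seeds: List[int]) -> Dict:
    """Run one precision setting across all seeds and summarize the scores."""
    scores = [evaluate(precision, seed) for seed in seeds]
    return {
        "precision": precision,
        "seeds": seeds,
        "scores": scores,
        "mean": statistics.mean(scores),
        # stdev needs at least two samples; report 0.0 for a single seed.
        "stdev": statistics.stdev(scores) if len(scores) > 1 else 0.0,
    }


def parity_report(evaluate: Callable[[str, int], float], seeds: List[int],
                  baseline: str = "fp16", candidate: str = "int8") -> Dict:
    """Compare a quantized candidate against the baseline on identical seeds."""
    base = run_seeds(evaluate, baseline, seeds)
    cand = run_seeds(evaluate, candidate, seeds)
    return {
        "baseline": base,
        "candidate": cand,
        "mean_delta": cand["mean"] - base["mean"],
    }


def save_report(report: Dict, path: str) -> None:
    """Write the report as stable, diff-friendly JSON."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(report, handle, indent=2, sort_keys=True)
```

Keeping per-seed scores in the report, not only the mean, lets readers judge whether a gap between precisions is larger than the seed-to-seed spread.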

Pros & Cons of Perplexity R1-1776

Pros

- Open weights enable reproducible research and local evaluation.

- Fewer application-level filters can reveal model behavior previously hidden by product guardrails.

- Hosted Sonar API allowed quick prototyping without heavy infrastructure.

Cons

- Increased moderation & legal risk because some safety filters were relaxed.

- Community reports show mixed performance on long-chain reasoning; results depend on prompt engineering and numeric precision (for example, FP16 vs 8-bit).

- Product deprecation reduces direct product support and can complicate integration.

Perplexity R1-1776 FAQs

Q: Is R1-1776 the same model as DeepSeek-R1?

A: Not exactly. R1-1776 is a post-trained variant of DeepSeek-R1 with behavioral changes intended to reduce certain application-level filters; weights were published on Hugging Face.

Q: Can I use R1-1776 in production?

A: Only after extensive task-specific benchmarking, legal review, and strong safety layers (pre-filters, output classifiers, human review). Treat it as higher-risk than conservative models.

Q: Why was R1-1776 removed from Perplexity's UI?

A: Perplexity announced a deprecation in the UI to reduce engineering overhead and to focus on newer models; hosted or offline options may still be available for research.

Q: Is R1-1776 better than DeepSeek-R1?

A: It depends on the task: R1-1776 often responds more directly to sensitive questions (by design) but may show varied performance on long-chain reasoning. Benchmark your workload.

Q: Where can I download the weights?

A: On Hugging Face at perplexity-ai/r1-1776. Always review the model card and license before use.

Conclusion

R1-1776 is a consequential release for those focused on openness, reproducibility, and alignment research. By publishing post-trained weights, Perplexity enabled an empirical study of model behavior that product-tuned models previously obscured. That transparency is valuable, but it brings responsibilities: safety engineering, legal checks, and continuous adversarial testing. For engineers and researchers, the practical path is straightforward — download weights, reproduce standardized tests, start with 8-bit quantization, add layers of output filtering, and enforce strong human review for high-risk outputs. For consumer products, prefer conservative models and hosted, monitored integrations where governance and logging are robust. Publication of benchmark scripts, raw outputs, and clear runbooks helps the community validate claims and fosters safer collective practices. Treat R1-1776 as a research asset and an operational experiment — a tool for understanding tradeoffs between openness and safety, not a drop-in replacement for production chat models.