Introduction

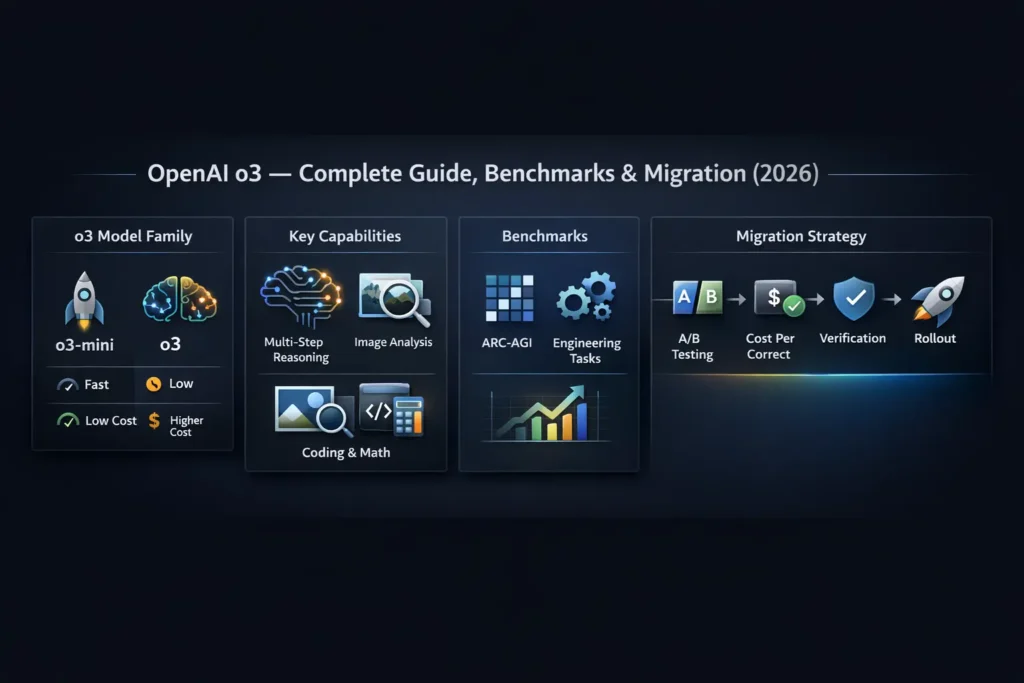

Many teams make avoidable AI errors that waste time, money, and resources. This guide explains what OpenAI o3 actually changes, shows how to avoid the common pitfalls, and provides a practical playbook for making more reliable AI decisions.

OpenAI o3 is a reasoning-first family of models engineered for extended deliberation and structured multimodal understanding. It is especially useful for NLP and vision+language tasks that require multi-step inference, program-like reasoning, or inspection of visual context. The family separates into three practical variants that let engineers trade cost against correctness: o3-mini for high-volume, low-cost inference; o3 for balanced multimodal reasoning; and o3-pro for mission-critical, high-accuracy synthesis that justifies more compute.

o3’s biggest product-level shifts are (1) explicit image-region operations (crop/zoom/rotate) integrated into the model’s internal reasoning trace, and (2) longer internal deliberation passes that raise correctness on multi-hop problems at the cost of compute and sometimes latency.

Vendor and early independent benchmarks show strong gains on visual reasoning sets like ARC-AGI and on many engineering tasks, but dataset overlap, compute configuration, and prompt style materially change outcomes, so reproduce the results on your own holdouts. Migrate with a careful A/B plan: inventory high-value prompts, record baseline metrics, run staged traffic experiments (10–25%), measure cost-per-correct-answer, add RAG and verifier passes, and gate high-risk outputs with human review.

Why This OpenAI o3 Guide Matters — Who Can’t Afford to Miss It

If you build applied NLP systems, production assistants, or developer tools, or if you embed model-driven decision flows in your product, OpenAI o3 should be on your shortlist in 2026. o3 reframes the engineering tradeoffs for tasks that are inherently multi-step, visual, or programmatic: think symbolic math, code debugging with screenshots, diagram QA, and multi-document scientific synthesis. This guide describes o3 in NLP terms: how it changes inference paradigms, what to measure in evaluation, how to run reproducible benchmarks, and which migration steps let you adopt the family safely and efficiently.

What Is OpenAI o3 — The AI Reasoning Game-Changer

- Deliberative inference: o3 is tuned to perform additional internal reasoning passes (deliberation) before emitting answers. Conceptually, this is similar to enabling an internal chain of thought that the model leverages to reduce incorrect conclusions on multi-hop problems.

- Image-region-aware reasoning: Rather than treating images as flat blobs or monolithic tensors, o3 can request and condition on regional visual observations (crop, zoom, rotate) as part of its reasoning trace. For vision+language tasks, this allows the model to perform localized visual inspection and then fold those observations into symbolic reasoning steps.

- Stratified family for routing: The family includes o3-mini, o3, and o3-pro to allow operational routing based on task criticality and price sensitivity.

- Designed for verification-first workflows: Because reasoning-strength doesn’t equal perfect accuracy, o3 is intended to be paired with retrievers, verifiers, and automated checks in production NLP pipelines.

In practice, o3 aims at code & math reasoning, diagram understanding, and long-horizon synthesis — exactly the workflows where the cost of human review is high and structured verification is feasible.

Core Technical Behavior of OpenAI o3

Internal Deliberation & “Thinking Passes”

o3 is optimized to perform multiple internal compute passes that refine intermediate latent representations before producing an output. This yields better multi-step logical consistency, but at the cost of higher compute per request. From an engineering perspective, treat the extra compute as a tunable knob: more passes = higher precision, but longer latency and greater billed cost.

Image-Region Operations as Evidence Tokens

Instead of a single visual embedding for an entire image, o3 exposes region-based observations that the model can select and reference. Each crop/zoom/rotation becomes an evidence token in the model’s internal reasoning sequence — effectively blending symbolic observations with textual tokens.

Structured outputs and syntactic constraints

o3 performs well when asked to produce structured outputs (JSON, stepwise proofs, unit-testable patches). Use explicit output schemas to make automatable verifiers practical (unit tests, symbolic checks, property assertions).

Prompt Engineering for verifier pipelines

Because o3 is deliberate, prompts that demand stepwise justification and include checkable constraints (e.g., “provide steps A→B and a final compact answer; then produce a JSON of facts used”) improve downstream automated verification.
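One way to make such prompts checkable is to validate the reply against a small schema before anything downstream consumes it. The sketch below uses only the standard library; the field names (`steps`, `answer`, `facts_used`) are illustrative assumptions for this guide, not an output format defined by o3.

```python
import json

# Hypothetical schema for this example: field name -> required Python type.
REQUIRED_FIELDS = {"steps": list, "answer": str, "facts_used": list}


def validate_reply(raw: str) -> list:
    """Return a list of problems; an empty list means the reply is usable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            problems.append(f"{field} must be of type {expected.__name__}")
    # A justification with zero steps cannot be checked, so treat it as an error.
    if isinstance(data.get("steps"), list) and not data["steps"]:
        problems.append("steps must not be empty")
    return problems
```

A reply that fails validation can be retried or routed to review instead of being passed to a verifier that assumes well-formed input.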

OpenAI o3 Quick Specs at a Glance

- Model family: o3-mini, o3, o3-pro

- Strengths: Multi-step reasoning, visual reasoning (region-level), coding & math

- Context support: Large windows suitable for long prompts & multimodal inputs

- Best fit: Debugging, diagram QA, research synthesis, high-risk triage with human gates

- Operation model: Route by cost/accuracy—mini for scale, pro for high assurance

What’s New vs o1 and Why It Matters

Integrated Image Reasoning

o1 treated images as single embeddings. o3 treats them as manipulable evidence. For NLP workflows that ingest screenshots, architecture drawings, or scanned documents, this is transformational: you can ask the model to inspect a region of a circuit diagram and then reference the inspected pixels in subsequent inference steps.

Longer internal passes

o3’s extra computation is akin to deeper beam-search or internal chain-of-thought sampling that is not exposed as verbose output by default. This reduces superficial hallucinations on multi-hop reasoning but requires rethinking latency budgets.

Operational stratification

Introducing distinct cost-performance variants (mini, standard, pro) forces teams to adopt model routing strategies as a first-class architectural concern — and to instrument metrics that reflect business value, not just token counts.

OpenAI o3 Benchmarks — What the Numbers Really Mean

- Dataset overlap matters — If your evaluation examples or domain-specific tests leak into pretraining, scores will be inflated. Use locked-down holdouts or new adversarial splits.

- Compute mode matters — High-compute vendor runs are not the same as production latency configurations. Compare both.

- Measure business metrics — Prefer cost per correct answer, human review reduction, and MTTR (mean time to rectify incorrect outputs) over raw accuracy.

- Reproducibility is mandatory — publish the exact prompt templates, context windows, and computational budgets used in your benchmarks.
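To make the reproducibility point concrete, a benchmark run can carry a fingerprint of its exact configuration, so that two results are only compared when their fingerprints match. This is a minimal sketch; the configuration keys and values (`compute_mode`, `holdout_set`, the token limit) are hypothetical placeholders, not settings taken from any vendor documentation.

```python
import hashlib
import json


def benchmark_fingerprint(config: dict) -> str:
    """Hash a benchmark configuration so two runs can be compared exactly."""
    # sort_keys makes the serialization independent of dict insertion order.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Illustrative configuration; every value here is an assumption.
run_config = {
    "model": "o3",
    "prompt_template": "Answer step by step:\n{question}",
    "max_context_tokens": 32000,
    "compute_mode": "production",
    "holdout_set": "internal-holdout-v1",
}
print(benchmark_fingerprint(run_config))
```

Storing the fingerprint next to each score makes it obvious when a reported gain comes from a changed prompt template or compute budget rather than from the model.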

Comparing the OpenAI o3 Family: Mini, Standard & Pro

| Variant | Best for | Latency | Typical cost posture |
| --- | --- | --- | --- |
| o3-mini | High-volume low-cost inference (chat UIs, autocomplete) | Low | Low |
| o3 | Balanced reasoning + multimodal tasks | Medium | Mid |
| o3-pro | Mission-critical synthesis, research, legal, or long chains | Higher (may be much larger on hard prompts) | High |

Routing heuristics: Use heuristics or classifiers to route traffic. For example, a domain classifier can tag tasks as low, medium, or high criticality and route accordingly, or a confidence estimator can re-route low-confidence outputs to o3-pro with a verifier.
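A routing layer of this kind can be very small. The sketch below assumes your own classifier has already produced a criticality tag and a confidence score; the `Task` type, the function names, and the 0.7 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Criticality tag -> first-choice variant, following the comparison table.
ROUTES = {"low": "o3-mini", "medium": "o3", "high": "o3-pro"}


@dataclass
class Task:
    text: str
    criticality: str  # "low", "medium", or "high", produced by your own classifier


def pick_variant(task: Task) -> str:
    """Route by criticality; unknown tags fall back to the balanced variant."""
    return ROUTES.get(task.criticality, "o3")


def escalate_if_unsure(variant: str, confidence: float, threshold: float = 0.7) -> str:
    """Re-route low-confidence outputs to o3-pro (the threshold is an assumption)."""
    if confidence < threshold and variant != "o3-pro":
        return "o3-pro"
    return variant
```

Keeping the mapping in one table makes the routing policy easy to audit and to change as cost or accuracy data comes in.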

When to pick which

- o3-mini: Low-latency front-line UIs, autocorrect, and conversational assistants where occasional errors are tolerable.

- o3: Backend pipelines requiring multimodal context or stepwise reasoning with some verification.

- o3-pro: Batch jobs, legal or medical triage with human-in-loop approvals, or when the marginal value from reduced review time outweighs cost.

Practical integration & migration checklist

Copy this into your engineering playbook.

Inventory & Baseline

- Export top 20 high-value prompts and rank by business impact.

- For each prompt, record: current model, correctness rate, hallucination incidents, average tokens, average latency, and average human review time.

Safety gates & Risk policy

- Classify outputs by risk (low/medium/high).

- For high-risk outputs, mandate human review or structured verification.

A/B test & Rollout plan

- Route 10–25% of traffic to o3/o3-mini initially.

- Minimum sample size: 1,000+ requests per variant (adjust for variance).

- Duration: 2–4 weeks
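A staged rollout needs a stable way to decide which requests go to the new model. One common approach, sketched here under the assumption that each request carries a user identifier, is to hash the identifier into a bucket so that the same user always lands in the same arm. The function name and the salt string are hypothetical.

```python
import hashlib


def assign_arm(user_id: str, treatment_share: float = 0.10, salt: str = "o3-rollout") -> str:
    """Deterministically place a user in 'treatment' or 'control'."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and scale it into the unit interval.
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "treatment" if bucket < treatment_share else "control"
```

Raising `treatment_share` from 0.10 toward 0.25 keeps earlier treatment users in treatment, because each user's bucket value does not change. Changing the salt reshuffles assignments for a fresh experiment.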

Metrics to collect

- Human correctness score (blind human rating).

- Hallucination rate (binary).

- Latency distribution.

- Cost per verified correct answer.

- Human hours saved.
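The metrics above can be rolled up per experiment arm. The following sketch shows one possible definition of cost per verified correct answer (model spend plus review spend, divided by answers that passed human rating); the `ArmStats` fields and the choice to include review cost are assumptions you may want to adapt.

```python
from dataclasses import dataclass


@dataclass
class ArmStats:
    requests: int
    verified_correct: int     # answers that passed blind human rating
    hallucinations: int       # binary flag per request, summed
    model_cost_usd: float     # total API spend for this arm
    review_hours: float
    reviewer_rate_usd: float  # hourly cost of a reviewer (your own figure)


def cost_per_verified_correct(s: ArmStats) -> float:
    """Total spend (model + review) divided by verified-correct answers."""
    if s.verified_correct == 0:
        return float("inf")
    total = s.model_cost_usd + s.review_hours * s.reviewer_rate_usd
    return total / s.verified_correct


def hallucination_rate(s: ArmStats) -> float:
    """Share of requests flagged as hallucinations."""
    return s.hallucinations / s.requests if s.requests else 0.0
```

For example, an arm with 1,000 requests, 800 verified-correct answers, $40 of model spend, and 10 review hours at $60 per hour costs (40 + 600) / 800 = $0.80 per verified correct answer.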

Image-Enabled Testing

- Re-run any image-processing tasks through o3 with region requests and measure uplift.

Fallbacks & Circuit Breakers

- If cost or latency exceeds thresholds, automatically shift traffic to cheaper variants or cached responses.
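A latency circuit breaker of this kind can be approximated with a rolling window. This is a sketch only: the class name, the window size, the minimum sample count, and the use of a p95 trigger are all assumptions, and a production breaker would usually also need a recovery policy.

```python
from collections import deque


class LatencyBreaker:
    """Trips when the rolling p95 latency exceeds a threshold."""

    def __init__(self, threshold_s: float, window: int = 100, min_samples: int = 20):
        self.threshold_s = threshold_s
        self.min_samples = min_samples
        self.samples = deque(maxlen=window)  # keeps only the most recent latencies

    def record(self, latency_s: float) -> None:
        self.samples.append(latency_s)

    def tripped(self) -> bool:
        n = len(self.samples)
        if n < self.min_samples:
            return False  # too little data to judge
        ordered = sorted(self.samples)
        # Nearest-rank p95 using integer arithmetic: rank = ceil(0.95 * n).
        rank = (95 * n + 99) // 100
        return ordered[rank - 1] > self.threshold_s


def choose_variant(preferred: str, fallback: str, breaker: LatencyBreaker) -> str:
    """Use the cheaper fallback while the breaker is tripped."""
    return fallback if breaker.tripped() else preferred
```

The same pattern works for cost: record spend per request instead of latency and trip on a budget threshold.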

Monitoring & Logging

- Log model version, token counts, region requests, inference duration, and structured output schema compliance.
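The fields listed above fit naturally into one structured log line per request. The record layout below is a hypothetical example; in particular, how you count region requests depends on what your integration can observe.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class InferenceLog:
    model_version: str
    prompt_tokens: int
    completion_tokens: int
    region_requests: int  # number of crop/zoom/rotate operations observed
    duration_s: float
    schema_valid: bool    # did the output pass structured-schema validation?


def to_log_line(entry: InferenceLog, timestamp: Optional[float] = None) -> str:
    """Serialize one request as a single JSON line for log aggregation."""
    record = asdict(entry)
    record["ts"] = time.time() if timestamp is None else timestamp
    return json.dumps(record, sort_keys=True)
```

One JSON object per line keeps the logs easy to filter by model version when comparing variants after a rollout.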

Security & Governance

- Sanitize PII in images and text.

- Set retention policies and contractually limit persisted content.

Deployment & UX

- Communicate to end-users when high-assurance models are used.

- Provide traceability for decisions (structured reasons, citations).

Minimal A/B Test plan

- Duration: 2 weeks minimum

- Traffic: 10–20% to the new model (scale up gradually)

- Requests: ≥ 1,000 per arm

- Metrics: human-scored correctness, hallucination rate, latency, cost-per-verified-answer

- Success threshold (example): 15% uplift in correctness or 10% reduction in review time at acceptable cost delta

- Actions: If successful, expand to 50%, then 100%. If it fails, roll back and analyze failure modes.
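The success threshold is easier to apply consistently when it is encoded as a function. The sketch below covers only the correctness criterion, reads the 15% uplift as a relative improvement (an assumption, since the plan does not say relative or absolute), and adds a two-proportion z-test so that small samples do not trigger an expansion by chance. The function names and the 0.05 significance level are illustrative.

```python
import math


def two_proportion_z(correct_a: int, n_a: int, correct_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in correctness rates."""
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both arms all-correct or all-wrong: no evidence of a difference
    z = (correct_b / n_b - correct_a / n_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


def rollout_decision(correct_a, n_a, correct_b, n_b, min_uplift=0.15, alpha=0.05):
    """Return 'expand' when arm B shows a significant relative uplift, else 'rollback'."""
    rate_a, rate_b = correct_a / n_a, correct_b / n_b
    if rate_a > 0:
        uplift = (rate_b - rate_a) / rate_a
    else:
        uplift = float("inf") if rate_b > 0 else 0.0
    _, p_value = two_proportion_z(correct_a, n_a, correct_b, n_b)
    return "expand" if uplift >= min_uplift and p_value < alpha else "rollback"
```

With 700 of 1,000 correct in the baseline arm and 820 of 1,000 in the new arm, the relative uplift is about 17% and the difference is highly significant, so the function returns `"expand"`. With 7 of 10 versus 9 of 10, the uplift looks larger but is not significant, so it returns `"rollback"`.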

Cost & Performance Tradeoffs

- A higher-cost model that halves human review can reduce net cost.

- Track total cost = model compute + human review + downstream costs (e.g., errors that cause refunds or rework).

- Use model routing to optimize: cheap variants for routine or low-risk, balanced for unknown complexity, pro for high-impact.
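The total-cost formula above can be checked with a few lines of arithmetic. The monthly figures here are invented for illustration and are not measured data; the point is only that a pricier model can win once review hours are counted.

```python
def total_cost(model_usd, review_hours, reviewer_rate_usd, downstream_usd=0):
    """Total cost = model compute + human review + downstream costs."""
    return model_usd + review_hours * reviewer_rate_usd + downstream_usd


# Illustrative monthly figures (assumptions, not benchmarks).
cheap = total_cost(model_usd=500, review_hours=200, reviewer_rate_usd=60, downstream_usd=1000)
pricey = total_cost(model_usd=2500, review_hours=100, reviewer_rate_usd=60, downstream_usd=1000)
print(cheap, pricey)  # 13500 9500
```

In this example the model bill rises fivefold, yet halving review time lowers the total from 13,500 to 9,500.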

Safety, Limitations & Common Failure Modes

- Confident hallucinations: A long chain-of-thought can produce plausible but incorrect conclusions.

- Image-context misreads: Cropping or missing visual context leads to incorrect inferences.

- Over-optimization: Outputs may appear rigorous yet fail on out-of-distribution inputs.

- Latency spikes: High-compute runs for difficult inputs can suddenly increase tail latency.

Practical Mitigations

- RAG (retrieval-augmented generation): ground the model with retrieved documents and force citations.

- Verifier pass: run a cheaper deterministic checker or an automated test suite to validate structured outputs.

- Human-in-the-loop gates: human approval for high-risk outcomes.

- Structured outputs: require machine-parseable outputs and run property checks (unit tests, symbolic evaluation).

- Monitoring & rollback: automated triggers on anomalies (spike in hallucinations, cost, or latency).
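As a small illustration of layering a deterministic verifier with a human gate, the sketch below checks a model's claimed rational roots of a polynomial by exact substitution and then applies a risk rule. It uses the standard-library `fractions` module as a stand-in for a full CAS, and the function names and return labels are hypothetical. Note that it confirms the claimed values are roots; it does not prove that no roots are missing.

```python
from fractions import Fraction


def eval_poly(coeffs, x):
    """Evaluate a polynomial (highest-degree coefficient first) with Horner's rule."""
    result = Fraction(0)
    for c in coeffs:
        result = result * x + c
    return result


def verify_roots(coeffs, claimed_roots) -> bool:
    """Deterministic check: every claimed rational root must evaluate to exactly 0."""
    try:
        return all(eval_poly(coeffs, Fraction(r)) == 0 for r in claimed_roots)
    except (ValueError, ZeroDivisionError):
        return False  # malformed number in the model output


def gate(coeffs, claimed_roots, risk: str) -> str:
    """Return 'reject', 'human_review', or 'accept' for a model answer."""
    if not verify_roots(coeffs, claimed_roots):
        return "reject"
    return "human_review" if risk == "high" else "accept"
```

For x² − 5x + 6, `gate([1, -5, 6], ["2", "3"], "low")` accepts the answer, while a claimed root of `"4"` is rejected before any reviewer sees it.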

Practical real-world NLP & multimodal use cases

- Code generation + bug triage

- Input: failing unit tests + stack traces + minimal repo context.

- o3 provides prioritized fixes and candidate patches; use unit tests as automated verifiers. Validate candidate patches with quick tests using CI before human review.

- Visual diagram Q&A

- Input: architecture diagram or circuit screenshot.

- Ask: “List the top 5 single points of failure with region references.” Use o3’s crop ops to point to relevant components.

- Scientific literature synthesis

- Chunk papers using retrieval, feed evidence to o3-pro for final synthesis with citations and confidence scores, and gate through researcher review.

- Math & symbolic tutoring

- Prompt for step-by-step solutions and require symbolic verification via SymPy or a CAS to confirm final results.

- Legal document triage

- Have o3 identify risky clauses and generate redlines; always require lawyer sign-off.

- Data extraction from images

- Use o3 to find table regions in invoice images and extract JSON fields; apply confidence thresholds to flag low-confidence extractions for human review.

- UX/design critique from screenshots

- Feed mockups and ask for prioritized accessibility issues with actionable fixes.

- Product analytics narrative

- Feed charts + metrics and ask for a one-page narrative with 3 prioritized actions; include a footnoted list of evidence retrievals.
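Several of these use cases end with the same step: accept what is confident and send the rest to a person. For the invoice-extraction case, that step might look like the sketch below. It assumes each extracted field arrives with a confidence score between 0 and 1 from your extraction layer; the field names, values, and the 0.9 threshold are invented for illustration.

```python
def triage_fields(fields, threshold=0.9):
    """Split extracted fields into auto-accepted values and names needing review.

    `fields` maps a field name to a (value, confidence) pair.
    """
    accepted, needs_review = {}, []
    for name, (value, confidence) in fields.items():
        if confidence >= threshold:
            accepted[name] = value
        else:
            needs_review.append(name)
    return accepted, needs_review


# Hypothetical extraction result for one invoice image.
invoice = {
    "invoice_number": ("INV-0042", 0.99),
    "total": ("1280.50", 0.97),
    "due_date": ("2026-03-01", 0.62),  # e.g. a blurry region
}
accepted, needs_review = triage_fields(invoice)
print(needs_review)  # ['due_date']
```

Tuning the threshold against a labelled sample shows how much review effort each setting actually saves.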

Comparison: o3 vs GPT-Family Models

- o3: Designed for reproducible multi-step reasoning and image-aware chain-of-thought. Best for tasks that require explicit internal deliberation and visual inspection.

- GPT-family (GPT-4.x/GPT-5): Generalist models optimized for broad conversational fluency and diverse tasks. May be better for open-ended creative tasks or general chat UIs.

- Decision rule: Choose the tool that aligns with the task’s failure-mode and value: visual/multi-step correctness → o3; open-ended, conversational tasks → GPT family.

Pros & Cons

Pros

- Strong multi-step reasoning for code, math, and visual tasks.

- Family approach allows cost/accuracy tradeoffs.

- Early benchmark gains are promising on visual reasoning tasks.

Cons

- Higher cost and potential latency, especially for pro runs.

- Reasoning does not guarantee accuracy; verification is still required.

- Benchmarks vary by compute mode; reproduce results before trusting them.

FAQs

Q: When was OpenAI o3 released?

A: OpenAI announced the o3 family between 2024 and 2025; for exact dates, consult OpenAI’s official release notes.

Q: Should I switch my production workloads to o3 right away?

A: Do not flip the switch blindly. Run controlled A/B tests on your top prompts, measure accuracy and cost-per-verified-answer, and roll out incrementally if metrics justify it. Use o3-mini for scale-first experiments.

Q: Is o3 always better than GPT-family models?

A: Not always. o3 excels at visual and multi-step reasoning; GPT-family models may be superior for other classes of tasks. Benchmark your own workflows.

Q: How do I reduce the risk of hallucinations?

A: Use layered mitigations: grounding (RAG), verifier passes, human review, and strict monitoring. Treat model output as a decision support tool, not the final authority.

Q: How much does o3 cost?

A: Pricing changes often; refer to OpenAI’s official pricing and API docs for current rates.

Conclusion

OpenAI o3 is a practical pivot toward models that deliberately reason and can reason about images inside their inference process. For teams, the right approach is measured: A/B test on high-value prompts, compute cost-per-correct-answer, and add RAG/verifier layers plus human gates. Use o3-mini for scale, o3 for general multimodal tasks, and o3-pro where downstream value justifies the cost. Pair any published guide with reproducible benchmarks and a downloadable migration checklist to increase credibility and adoption.