Introduction

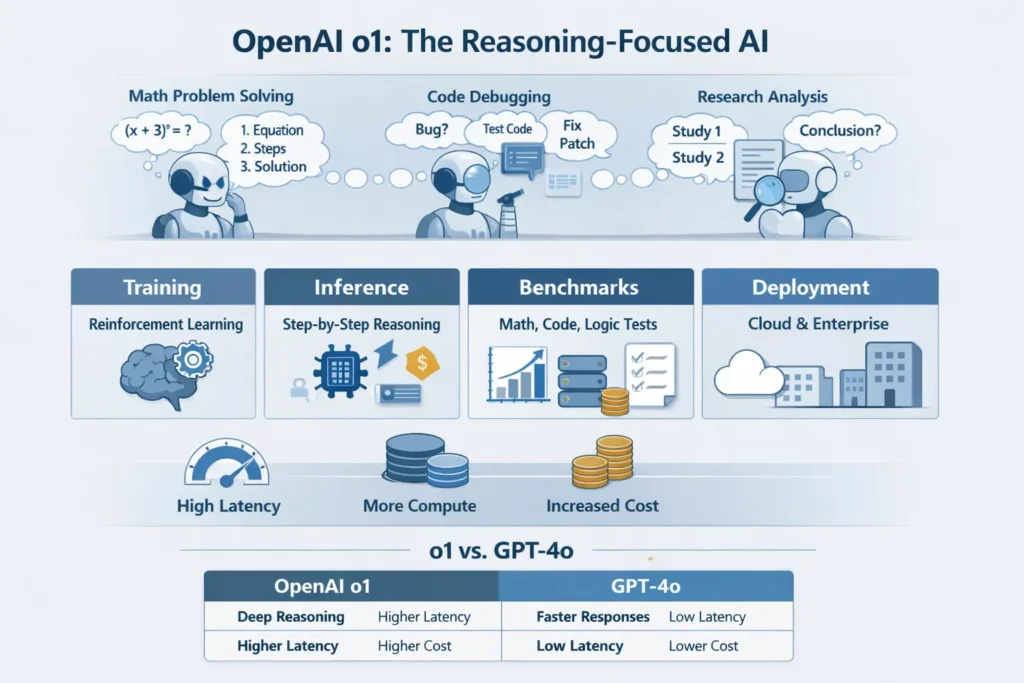

OpenAI o1 is being treated like just another model update — and that’s exactly why most people are missing what actually changed. At first glance, it looks familiar, almost predictable. But the reactions from early users don’t match the surface-level story. Some are impressed, some are confused, and some are quietly adjusting how they use AI altogether. This page doesn’t repeat release notes or marketing claims. It breaks down what feels different about OpenAI o1, why expectations don’t align with reality, and what this shift really means going forward. OpenAI o1 represents an evolution in large language model design that prioritizes deliberate multi-step reasoning at inference time.

Rather than producing a single fluent response immediately, o1 is engineered to allocate additional inference compute to construct and internally evaluate stepwise reasoning traces before emitting a final answer. In natural language processing terms, o1 trades throughput for improved structured reasoning — helping in domains like mathematics, program synthesis, formal logic, and multi-step scientific explanation where intermediate steps are crucial to correctness.

First previewed in 2024 and documented in system and safety notes later that year, o1 sits alongside faster, multimodal models such as GPT-4o. Use o1 when task fidelity and correctness matter more than latency; use faster models for interactive, low-latency, or multimodal applications. This pillar guide reframes O1 in NLP terminology, outlines reproducible benchmarking methodology, provides integration and deployment patterns, and describes guardrails for safe, reliable usage. If you want runnable benchmark scripts and CSVs to publish on GitHub, I can generate them next.

OpenAI o1 — What Is This AI Everyone Can’t Stop Questioning?

In terms, o1 is a family of models optimized for chain-of-thought-style inference: the runtime encourages or enforces the generation of internal intermediate representations (implicit or explicit reasoning traces) that improve downstream answer accuracy on compositional and sequential tasks. Architecturally and operationally, o1 applies extra inference-time compute (more decoding steps, internal verification passes, or verifier modules) to reduce shortcut generalization and brittle surface-level correctness.

Timeline — How Did This AI Evolve So Fast?

- September 2024 (preview): o1-preview debuted as a reasoning-focused model family.

- December 2024: OpenAI released the o1 System Card and safety documentation, clarifying training objectives, evaluation methodology, and known risks.

- 2025 onwards: O1 became available through major cloud vendors and enterprise integrations (e.g., Azure). Availability, pricing, and compute tiers matured across providers.

Why Some Professionals Can’t Ignore It

- Automated grading and evaluation for open-ended math or logic.

- Critical code synthesis, bug diagnosis, and formal verification assistance.

- Research assistants who synthesize multi-stage arguments from primary literature.

- Scientific workflows require traceable reasoning and reproducibility.

OpenAI o1 — What’s Going On Inside Its “Thinking” Brain?

Core Idea:

From an/ML viewpoint, o1 is trained and/or fine-tuned with objectives that reward coherent multi-step reasoning and penalize shallow heuristic shortcuts. This may include:

- Reinforcement learning from human feedback (RLHF) variants that explicitly score intermediate reasoning traces.

- Auxiliary losses that encourage latent representations to encode intermediate subgoals.

- Training curricula that present multi-step synthetic tasks to expose compositional structure.

At inference time, o1’s runtime stacks additional compute: longer decoding horizons, internal sampling/verification passes, or explicit internal chain-of-thought generation that is then condensed into a final answer. In other words, o1 increases the effective depth of inference.

Why this Matters

Standard LLMs excel at surface fluency but often fail on compositional tasks where intermediate steps cannot be skipped. By promoting intermediate representation fidelity, o1 reduces the probability of spurious-but-plausible outputs (surface-level hallucinations) on problems that require multi-step inference: formal mathematics, algorithmic reasoning, multi-turn code refactors, or logic puzzles.

Tradeoffs

- Latency: Inference takes longer — from hundreds of milliseconds to multiple seconds, depending on compute budget.

- Cost: Per-query compute consumption typically increases, raising the billing.

- Stability: Longer internal reasoning can increase variance across runs; repeatability requires seed controls and deterministic decode settings.

- Calibration: For some tasks, o1’s confidence probabilities may be better calibrated; for others, more elaborate verification is still necessary.

OpenAI o1 — How Do Experts Really Measure Its Genius?

What Good Benchmarks Require

- Fixed prompt templates and input corpus — eliminates prompt drift.

- Controlled compute settings — run with baseline (cheap model, default compute) and scaled settings (o1 with extra compute).

- Multiple seeds — stochastic decoders (top-k, top-p, temperature) can affect outcomes.

- Evaluation metrics beyond accuracy — include latency distributions, tokens consumed, and cost-per-correct-answer.

- Human evaluation for subjective outputs like justification quality.

Example Benchmark Setup

- Datasets: curated subsets ideal for stepwise reasoning:

- MATH (complex problem sets)

- HumanEval (code generation & unit test pass/fail)

- MMLU / reasoning subsets

- Prompt template: Show your full reasoning steps, then give the final answer. (used as a control to elicit stepwise traces).

- Runs: 3 seeds per prompt; compute conditions: baseline vs high-compute (o1).

- Metrics: Exact match/accuracy, median latency, p95 latency, tokens emitted, USD cost per correct.

What Community Benchmarks Indicate

Community trackers show o1 outperforming certain faster baselines on reasoning metrics, but outcomes depend strongly on:

- Prompt phrasing and rubric.

- Whether the benchmark rewards chain-of-thought outputs or final-answer-only metrics.

- The extent to which verification/unit tests were used.

OpenAI o1 — What’s the Secret Behind Reproducible AI Results?

Controlled variables

- Prompt template: Exact strings, including system messages.

- Decoder settings: Temperature, top_p, max_tokens.

- Compute mode: Default vs high-compute (e.g., more internal iterations).

- Random seeds: Record seeds if possible; use deterministic decode if available.

OpenAI o1 — What Secrets Are Hidden in Its Performance Data?

Store Structured Logs with:

- Prompt ID and exact prompt text (redact sensitive user data if needed).

- Model name, compute tier, and param settings.

- Latency timestamps (request sent, first token, final token).

- Token counts (input, output, total).

- Cost estimate per request.

- Ground truth & predicted answers.

- Pass/fail and human evaluation notes when applicable.

Statistical Rigor

- Use confidence intervals for accuracy metrics.

- Report median and p95 latency, not just mean (latency distributions are heavy-tailed).

- When comparing models, use paired tests (same prompts across models) and report significance.

OpenAI o1 — How Fast, Costly, and Practical Is It Really?

Cost Modeling

If o1 uses 2–4× more compute and has 2–6× longer latency than a cheap baseline, per-call cost rises in proportion to compute time and tokens. But evaluate cost per useful outcome:

- If o1 reduces downstream human review or follow-up calls, the effective cost per correct answer may fall.

- Model selection should hinge on cost-of-error in your domain: high-cost errors justify higher per-call compute.

Latency strategies

- Adaptive serving (escalation policy): start with a low-cost baseline model; if the baseline’s confidence is below a threshold or the task is classified as reasoning-heavy, escalate to o1.

- Parallel pipeline: use cheap models for retrieval, formatting, or pre-processing; reserve o1 for core reasoning.

- Timeouts & graceful fallbacks: cap o1 runtime for user-facing flows. If the cap is reached, return a partial result plus a rationale that human review is needed.

- Batch processing for offline tasks: for bulk tasks (grading, audits), queue requests for o1 in background workers to avoid user-facing latency.

What SLOs Ensure This AI Doesn’t Go Off Track?

Instrument:

- Accuracy drift (task-specific).

- Hallucination rate measured via unit tests or back-checkers.

- Latency percentiles (p50, p95, p99).

- Cost per correct (USD per verified output).

Set SLOs for p95 latency and budget for cost-per-output. Trigger alerts on sudden cost spikes or accuracy degradation.

What Guardrails Prevent Costly Mistakes?

Post checks

- Symbolic checking: Where possible, use deterministic provers or unit tests (e.g., run the generated code).

- Semantic checks: Use separate models to verify claims (e.g., ask a verifier to recount key facts).

- Constraint enforcement: Ff output should satisfy invariants (e.g., sum to a value), and enforce them programmatically.

Logging & privacy

- Redact PII before storing prompts.

- Retention policies: Align logs with legal requirements and organizational data governance.

Human-in-the-loop

For high-risk decisions, require human confirmation of model outputs. Use the model to prepare a justification to speed human review.

Safety & security: what OpenAI tested and what to watch for

OpenAI’s o1 system card and safety notes describe training, evaluations, and red-team results. From an NLP risk lens, key concerns include:

Persuasion & Misuse

Models that reason well can produce highly persuasive arguments. This raises risks in misinformation, persuasive deception, and automated influence campaigns. Mitigations:

- Use watermarking/traceability.

- Limit or monitor outputs that propose persuasive tactics.

- Human review for high-impact content.

Hallucinations

While o1 reduces some classes of hallucination (especially those removable by stepwise checks), hallucinations persist. Always verify with symbolic or external sources for high-stakes outputs.

Domain-specific red-Teaming

Run adversarial tests tailored to your domain: finance, healthcare, legal — adversarial prompts can reveal failure modes unique to your use-case.

Best practice Guardrails

- Human review for high-risk outputs.

- Use verifiers, unit tests, and symbolic checks.

- Keep explainability logs (prompts, intermediate summaries, final answers) with proper privacy controls.

OpenAI o1 — When Should You Avoid It and Why?

Don’t choose o1 if:

- You require sub-100ms responses (real-time bidding, gaming).

- The task is simple classification or tagging at high throughput.

- You need strong multimodal capabilities, where GPT-4o may be better.

- You must run entirely on-prem without cloud access.

Alternatives

- GPT-4o / GPT-4o-mini — faster, multimodal friendly.

- o1-mini — lower-cost reasoning tradeoff.

- Domain-specific small models — for privacy-sensitive on-prem demands.

OpenAI o1 vs GPT-4o — Which One Should You Actually Use?

| Feature / Metric | OpenAI o1 | GPT-4o |

| Primary strength | Deep multi-step reasoning | Multimodal, low-latency |

| Typical latency | Higher (seconds) | Lower (sub-second to ~1s) |

| Typical cost per query | Higher | Lower |

| Best for | Complex STEM problems, code correctness | Interactive chat, multimodal apps |

| Safety notes | Persuasion risk; needs monitoring | Different failure modes; broadly tested |

OpenAI o1 — How Is This AI Solving Real Problems Today?

Automated Grading for STEM

Problem: Short-answer graders often fail because they do shallow pattern matching.

o1 approach: Ask the model to produce a numbered solution trace and compare student steps against a gold trace. Flag mismatches for human review.

Outcome: Reduced false negatives and fewer manual corrections.

Critical code patch Generation

Problem: Auto-generated patches introduce regressions.

o1 approach: Use o1 to propose a patch and generate stepwise reasoning plus unit tests. Run unit tests automatically; humans only inspect failing tests.

Outcome: Faster triage, fewer subtle regressions.

Research Assistant for Literature Reviews

Problem: Synthesizing argument chains from many papers is labor-intensive.

o1 approach: Prompt o1 to produce numbered reasoning steps mapping citations to claims; validate claims with citation-checkers and domain-specific verifiers.

Outcome: More coherent syntheses with audit trails.

FAQs

A: OpenAI released o1 as a preview and later published system docs. Access is via OpenAI’s API and cloud vendors (e.g., Azure OpenAI). Check provider signup and quota pages.

A: No. o1 is optimized for deep reasoning and extra inference computation. GPT-4o is broader, lower-latency, and multimodal.

A: It reduces some hallucination classes where stepwise logic helps, but it doesn’t eliminate hallucinations. Always verify high-stakes outputs.

A: Not always. For short snippets, cheaper GPT models may be faster and adequate. Benchmark on your codebase and use unit tests.

A: Yes — Azure announced O1 availability in Azure OpenAI Service and Azure AI tools.

Conclusion

OpenAI o1 advances the practical frontier of models that explicitly prioritize multi-step reasoning by increasing inference-time computation and incentivizing internally structured reasoning traces. For practitioners, this translates to a tool that is particularly valuable when the cost of an incorrect output is high and when correctness derives from compositional, multi-stage logic. However, the operational costs — higher latency, more compute consumption, and the need for robust verification — mean o1 is not a universal replacement for faster, multimodal models.

The pragmatic strategy is hybrid: use lightweight models for routine/formatting tasks and escalate to o1 when the problem requires deep, verifiable reasoning. Instrument your pipelines with deterministic benchmarks, unit tests, verifiers, and human review for high-risk domains.