Introduction

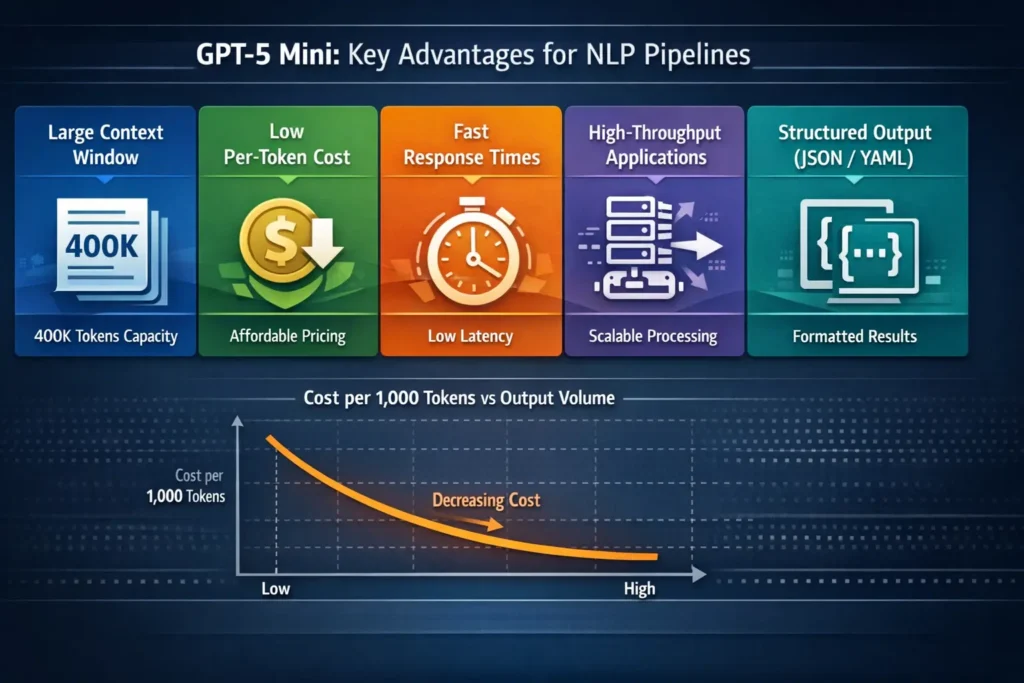

Large-context workflows get expensive quickly. GPT-5 Mini is a low-cost, production-oriented model aimed at cutting token spend and processing time in high-volume pipelines, fast chat, and structured outputs such as JSON or YAML. The headline figures of up to 90% savings and 10× faster runs depend on your workload and on the model you compare against, so confirm them with your own benchmarks.

If you design, engineer, or operate AI-driven systems (chatbots, document pipelines, summarizers, triage classifiers, or content-generation networks), choosing between model variants is always a multi-dimensional tradeoff among latency, cost, context, safety, and the practical accuracy you actually need. GPT-5 Mini is positioned as the cost- and throughput-optimized member of the GPT-5 family: it is scaled to deliver large-context support and rapid token throughput while giving up some of the top-end reasoning headroom reserved for flagship Pro tiers.

This guide reframes the original marketing and product-level description into NLP terms: token accounting, throughput math, prompt and instruction engineering, benchmark methodology, production rollout processes, monitoring signals, and concrete migration steps. You’ll get step-by-step pricing arithmetic, reproducible prompt templates formatted for programmatic insertion, integration examples for a developer pipeline, and a migration checklist organized as lab, canary, and rollout phases.

GPT-5 Mini — The Secret Behind 10× Faster Pipelines

GPT-5 Mini is a scaled variant within the GPT-5 family that is optimized for throughput-per-dollar and deterministic structured outputs. From an NLP systems perspective, think of it as a high-throughput transformer with a large attention capacity that — for many structured tasks — matches or exceeds older generation minis while enabling aggressive token-budgeting strategies.

Key Architectural Insights & Usage Assumptions You Must Know

- Useful niche: High-frequency, well-scoped tasks such as summarization, classification, template-based text generation, and structured extraction.

- Token finance: Lower per-token pricing that enables aggressive A/B testing, batch classification, and multi-step coarse-to-fine pipelines.

- Context capacity: Supports very large context windows, enabling single-request ingestion of long transcripts and multi-document contexts.

- Latency vs accuracy tradeoff: Designed to return lower-latency outputs at lower cost while retaining practically useful instruction-following behavior for most enterprise use cases.

Why choose Mini from a systems standpoint? It lets you adopt token-aware UI and pipeline patterns (chunk-and-summarize, coarse-to-fine, caching) to drastically reduce run costs while preserving throughput needed for production SLAs.

GPT-5 Mini — Core Specs & What They Really Mean

| Spec | Value / Notes |
| --- | --- |
| Model family | GPT-5 (mini), scaled variant |
| Context window | 400,000 tokens (reported); enables single-request large-document workflows |
| Max output tokens | 128,000 (config dependent) |
| Input types | Text and images |
| Typical positioning | Cost- and latency-optimized for high-volume pipelines |

Implications: Large context windows reduce the need for complex external chunking strategies for many workflows. However, even with 400k tokens, good token budgeting (system messages + examples) remains critical to minimize cost and control output variance.
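The budgeting point can be made concrete with a small pre-flight check. This is a minimal sketch, not an official calculation: the two limits come from the spec table above, the function name `fits_budget` is hypothetical, token counts are assumed to be measured by whatever tokenizer you already use, and it assumes the reserved output counts against the same window, which you should verify for your configuration.

```python
CONTEXT_WINDOW = 400_000      # reported context window (from the spec table)
MAX_OUTPUT_TOKENS = 128_000   # reported output cap (config dependent)


def fits_budget(system_tokens, example_tokens, document_tokens, reserved_output_tokens):
    """Return (fits, headroom) for a planned request.

    Assumption: input and reserved output share one context window.
    """
    if reserved_output_tokens > MAX_OUTPUT_TOKENS:
        raise ValueError("reserved output exceeds the reported output cap")
    planned = system_tokens + example_tokens + document_tokens + reserved_output_tokens
    headroom = CONTEXT_WINDOW - planned
    return headroom >= 0, headroom


# A 300k-token document with a short system message and a few examples.
fits, headroom = fits_budget(2_000, 3_000, 300_000, 8_000)
print(fits, headroom)
```

A negative headroom is the signal to pre-summarize or split the document before sending it.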

GPT-5 Mini Pricing — Exact Costs, Step-by-Step Math & Real Examples

OpenAI lists pricing as cost per 1,000,000 tokens, with separate rates for input and output. This guide uses the following published rates for GPT-5 Mini:

- Input: $0.25 per 1,000,000 tokens.

- Output: $2.00 per 1,000,000 tokens.

Below, we convert those into constants you can drop into spreadsheets and production calculators.

Cost per 1,000 tokens

Input cost per 1,000 tokens

- Rate = $0.25 / 1,000,000 tokens

- 1,000 tokens = 1,000 ÷ 1,000,000 = 0.001 of a million

- Cost = $0.25 × 0.001 = $0.00025 per 1k input tokens

Output cost per 1,000 tokens

- Rate = $2.00 / 1,000,000 tokens

- 1,000 tokens = 0.001 of a million

- Cost = $2.00 × 0.001 = $0.00200 per 1k output tokens

Combined cost for 1,000 input tokens plus 1,000 output tokens = $0.00025 + $0.00200 = $0.00225
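The conversion above can be reproduced in a few lines. This sketch uses `Decimal` to avoid floating-point rounding in a pricing calculator; the constant and function names are illustrative, and the rates are the ones quoted in this guide rather than values fetched from any API.

```python
from decimal import Decimal

# Rates quoted in this guide, in USD per 1,000,000 tokens.
INPUT_RATE_PER_MILLION = Decimal("0.25")
OUTPUT_RATE_PER_MILLION = Decimal("2.00")


def rate_per_thousand(rate_per_million):
    """Convert a per-million-token rate into a per-thousand-token rate."""
    return rate_per_million * Decimal(1_000) / Decimal(1_000_000)


input_per_1k = rate_per_thousand(INPUT_RATE_PER_MILLION)
output_per_1k = rate_per_thousand(OUTPUT_RATE_PER_MILLION)
combined_per_1k = input_per_1k + output_per_1k  # 1k input plus 1k output

print(input_per_1k, output_per_1k, combined_per_1k)
```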

Practical example — per-request cost arithmetic

Request consuming 500 input tokens and producing 1,500 output tokens:

- Input cost = (500 / 1,000,000) × $0.25 = 0.0005 × $0.25 = $0.000125

- Output cost = (1,500 / 1,000,000) × $2.00 = 0.0015 × $2.00 = $0.003

- Total per-request = $0.003125

If you issue 1,000 such requests per day, a 30-day month comes to 30,000 requests × $0.003125 = $93.75 per month.

Engineering tip: In dashboards, represent per-request cost as:

cost = input_tokens * input_rate_per_token + output_tokens * output_rate_per_token

Where input_rate_per_token = 0.25 / 1e6 and output_rate_per_token = 2.00 / 1e6.
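As a sketch of how that formula might look in a dashboard or calculator, the snippet below wraps it in two small functions. The function names and the 30-day month are assumptions for illustration; the rates are the ones quoted in this guide.

```python
INPUT_RATE_PER_TOKEN = 0.25 / 1e6    # USD per input token
OUTPUT_RATE_PER_TOKEN = 2.00 / 1e6   # USD per output token


def request_cost(input_tokens, output_tokens):
    """Cost in USD of one request, using the per-token rates above."""
    return input_tokens * INPUT_RATE_PER_TOKEN + output_tokens * OUTPUT_RATE_PER_TOKEN


def monthly_cost(requests_per_day, input_tokens, output_tokens, days=30):
    """Projected monthly spend for a feature with a stable token profile."""
    return requests_per_day * days * request_cost(input_tokens, output_tokens)


# The worked example from this section: 500 input and 1,500 output tokens.
print(f"per request: ${request_cost(500, 1_500):.6f}")
print(f"per month:   ${monthly_cost(1_000, 500, 1_500):.2f}")
```

Because output tokens cost eight times as much as input tokens at these rates, the output side usually dominates, which is why capping output length matters more than trimming prompts.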

When Should You Really Choose GPT-5 Mini?

| Use case | Choose GPT-5 Mini? | Why |
| --- | --- | --- |
| High-volume content generation | ✅ | Cheaper per token and designed for throughput |
| Low-latency multi-user chat | ✅ | Faster responses than flagship variants for routine tasks |
| Complex multi-step legal reasoning | ❌ | Use Pro/Full for highest-fidelity reasoning |
| Very long single-document ingest | ✅ | Large context windows simplify engineering |
| Cost-sensitive at scale (≥1M requests/mo) | ✅ | Significant OPEX savings vs Pro models |

Rule of thumb: Use Mini for pipelines where deterministic structure, speed, and cost matter more than marginal gains on the hardest reasoning tasks.

GPT-5 Mini — Benchmarks, Real-World Results & Surprising Performance

Public and community benchmarks indicate GPT-5 Mini outperforms many older “mini” models on instruction-following tasks and practical classification. However, Pro variants outperform in multi-step reasoning, emergent complex planning, and certain code synthesis benchmarks.

How to benchmark for your project

- Define representative prompts: Pick 50–100 prompts that reflect real production usage, varied by length, type, and domain.

- Token profile for both models: Measure input and output tokens per prompt to compute cost-per-pass.

- Run batch experiments: Run both Mini and the current model under identical seeds where possible; capture latency, token counts, and raw outputs.

- Human-in-the-loop scoring: Measure accuracy, style-match, and pass/no-pass on objective criteria.

- Optimize for cost-per-pass: Compute average cost per request ÷ pass_rate (equivalently, total spend ÷ number of passing outputs) and prioritize pipelines by that metric rather than raw accuracy alone.
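To make the last step concrete, here is a minimal sketch of a cost-per-pass calculation. It interprets cost-per-pass as total spend divided by the number of passing outputs; the `RunResult` type and its field names are hypothetical stand-ins for whatever your evaluation harness records.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    cost_usd: float   # cost of this single request
    passed: bool      # outcome of human or automated scoring


def cost_per_pass(results):
    """Total spend divided by the number of passing outputs."""
    passes = sum(1 for r in results if r.passed)
    if passes == 0:
        return float("inf")  # nothing passed, so no finite cost per pass
    return sum(r.cost_usd for r in results) / passes


mini_runs = [RunResult(0.5, True), RunResult(0.5, True),
             RunResult(0.5, False), RunResult(0.5, True)]
print(cost_per_pass(mini_runs))
```

A cheaper model with a lower pass rate can still win on this metric, which is the reason to compare models on it rather than on accuracy alone.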

Benchmarks to track:

- Top-line accuracy or pass rate per prompt class

- Average tokens (input/output)

- Latency (p50, p95)

- Cost-per-pass and cost-per-correct-output

- Failure-mode inventory (hallucinations, truncation, format errors)
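For the latency metric, a nearest-rank percentile is often enough for a benchmark report. The sketch below is one simple way to compute p50 and p95 from recorded latencies; the function name is illustrative, and other percentile definitions (for example interpolated ones) will give slightly different numbers on small samples.

```python
def latency_percentile(latencies_ms, percent):
    """Nearest-rank percentile; percent is an integer from 1 to 100."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    ordered = sorted(latencies_ms)
    # Integer ceiling of percent/100 * n, avoiding floating-point rounding.
    rank = (percent * len(ordered) + 99) // 100
    return ordered[rank - 1]


samples = list(range(1, 101))  # stand-in for measured latencies in ms
print(latency_percentile(samples, 50), latency_percentile(samples, 95))
```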

Integration patterns:

- Streaming: Use streaming responses to reduce perceived latency in UIs. Stream tokens to UI and render progressively.

- max_output_tokens: Set conservative caps to control cost and avoid unexpected verbosity.

- Batching: Translate or summarize multiple items per request when semantics permit to reduce per-item overhead.

- Caching: Cache templated responses and use deterministic seeds for re-runs where possible.

- Rate limiting & circuit breakers: Implement per-feature budgets with hard caps and graceful fallbacks.
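The batching pattern above can be sketched without any API dependency. The helper names and the numbered-list prompt layout are assumptions for illustration; the right batch size depends on your output cap and on how reliably the model keeps items separate.

```python
def make_batches(items, batch_size):
    """Split items into consecutive batches of at most batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def build_batch_prompt(instruction, batch):
    """Render one prompt that asks the model to handle every item in the batch."""
    lines = [instruction]
    lines.extend(f"{number}. {text}" for number, text in enumerate(batch, start=1))
    return "\n".join(lines)


strings = ["hello", "goodbye", "thank you", "please", "welcome"]
for batch in make_batches(strings, 2):
    print(build_batch_prompt("Translate each numbered item to French:", batch))
```

Numbering the items makes it easier to check that the response contains one result per input before splitting it back apart.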

GPT-5 Mini — Proven Patterns to Slash Costs & Boost Speed

- Limit max_output_tokens: Cap outputs according to UI needs.

- Chunk & summarize: Pre-summarize earlier context before sending to Mini if the earlier text is redundant.

- Coarse → fine pipeline: Use smaller/cheaper models for outlines; reserve Mini for drafts; reserve Pro for final polish affecting legal/medical accuracy.

- Cache & reuse: Store outputs for repeated queries like FAQs.

- Batch operations: Combine multiple tasks into one request (e.g., translate 50 strings in a single request).

- Token-aware UI: Defer long outputs behind a “Read more” link and ask for longer text only when necessary.

- Streaming: Stream to the client to improve perceived latency.

- Monitor token spikes: Alert when token usage deviates more than 20% from baseline.

- Human-in-the-loop for high-stakes outputs: Add automatic verification for error-prone outputs and route high-stakes ones to a human reviewer.
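The cache-and-reuse pattern from the list above might look like the sketch below. `ResponseCache` is a hypothetical in-memory helper, and `generate` stands in for whatever function actually calls the model; a production version would likely use a shared store and an expiry policy.

```python
import hashlib


class ResponseCache:
    """In-memory cache keyed by a hash of the normalized prompt."""

    def __init__(self, generate):
        self._generate = generate   # callable: prompt -> response text
        self._store = {}

    @staticmethod
    def _key(prompt):
        # Normalize case and whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt):
        key = self._key(prompt)
        if key not in self._store:
            self._store[key] = self._generate(prompt)  # only pay for a miss
        return self._store[key]
```

Usage is a single call, `cache.get(prompt)`, in place of the direct model call; repeated FAQ-style questions then cost tokens only once.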

GPT-5 Mini Migration — Step-by-Step Checklist to Upgrade from GPT-4.1

Pre-migration

- Baseline evaluation: Run 50 representative prompts on the current model and Mini; compare outputs for accuracy and token usage.

- Token profiling: Instrument each feature to capture input + output tokens to compute estimated monthly spend.

- Metric mapping: Define pass/fail thresholds (e.g., 95% acceptance on QA tests).
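A pass/fail threshold like the one in the last item can be encoded as a simple gate. This is a sketch with a hypothetical function name; it uses integer arithmetic so that a result sitting exactly on the threshold is not affected by floating-point rounding.

```python
def meets_acceptance(passed, total, threshold_percent=95):
    """True when the pass rate is at least threshold_percent."""
    if total <= 0:
        raise ValueError("total must be positive")
    return passed * 100 >= threshold_percent * total


# 48 of 50 representative prompts accepted is 96%, which clears a 95% bar.
print(meets_acceptance(48, 50))
```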

Prompt & infra changes

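- Prompt adaptation: Consolidate stable boilerplate into one concise system message instead of repeating it in every user turn. System messages still count as input tokens, so trimming them is what reduces cost.

- Rate-limit & concurrency testing: Stress-test throughput for expected loads and spike scenarios.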

- Latency SLAs: Measure p95 latency and compare streaming vs non-streaming.

Safety & rollout

- Safety tests: Run adversarial prompts to find hallucination and framing vulnerabilities.

- Canary rollout: 0% → 5% → 25% → 100% with rollback hooks.

- Monitoring: Track token consumption, error rates, and quality metrics.
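One common way to implement the staged canary is deterministic hash bucketing, so each user stays on the same side of the split as the percentage grows. The sketch below assumes a string user ID and uses hypothetical function names; rollback is simply setting the percentage back to an earlier stage.

```python
import hashlib

ROLLOUT_STAGES = [0, 5, 25, 100]  # percentages from the checklist above


def user_bucket(user_id):
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route_to_mini(user_id, rollout_percent):
    """True if this user should be served by the new model at this stage."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return user_bucket(user_id) < rollout_percent


for stage in ROLLOUT_STAGES:
    routed = sum(route_to_mini(f"user-{i}", stage) for i in range(1_000))
    print(f"{stage}% stage routes {routed} of 1000 sample users to Mini")
```

Because the bucket depends only on the user ID, anyone routed to Mini at 5% is still routed to Mini at 25%, which keeps per-user behavior stable during the rollout.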

Post-rollout

- Telemetry: Daily token & cost dashboards by feature.

- Alerts: Budget overspend alerts & automatic fallbacks to cached content if spend spikes.

- Sampling audits: Have humans review 5% of outputs weekly.
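Selecting the weekly audit sample can be automated. This is a minimal sketch assuming outputs are identified by IDs held in a list; the function name and the fixed seed are illustrative, and the seed is there only so that a given week's sample can be reproduced.

```python
import random


def sample_for_audit(output_ids, percent=5, seed=0):
    """Pick roughly `percent` of the outputs (at least one) for human review."""
    ids = list(output_ids)
    if not ids:
        return []
    sample_size = max(1, len(ids) * percent // 100)
    return random.Random(seed).sample(ids, sample_size)


week_outputs = [f"out-{i}" for i in range(200)]
audit_batch = sample_for_audit(week_outputs)
print(len(audit_batch), "outputs selected for review")
```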

GPT-5 Mini in Action — Real Workflows & Practical Examples

SaaS onboarding email generator

Flow: user data → outline (Mini) → draft (Mini) → quick human edit → final send.

Why it works: low per-email token cost and fast turnaround allow thousands of drafts to be generated and curated by humans.

Customer support triage

Flow: incoming ticket → classification + priority (Mini) → auto-reply draft (Mini) → agent review.

Why it works: Mini is fast at classification and templated responses; humans handle corner cases.
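The triage flow can be sketched as plain function composition. Everything here is hypothetical scaffolding: `classify` and `draft` stand in for your own model-calling functions, and the result type simply records that an agent still reviews every draft before it is sent.

```python
from dataclasses import dataclass


@dataclass
class TriageResult:
    category: str
    priority: str
    draft_reply: str
    needs_agent_review: bool = True  # the flow keeps a human in the loop


def triage_ticket(ticket_text, classify, draft):
    """Run classification, then drafting, and hand the result to an agent.

    classify: callable(ticket_text) -> (category, priority)
    draft:    callable(ticket_text, category) -> reply text
    """
    category, priority = classify(ticket_text)
    reply = draft(ticket_text, category)
    return TriageResult(category, priority, reply)
```

Keeping the model calls behind two injected callables makes it easy to swap models per step or to replay stored tickets during benchmarking.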

Content farm / large editorial networks

Flow: idea pool → Mini generates multiple outlines → automated scoring → section drafts → human editor → publish.

Why it works: reduces editor time and costs while keeping editorial control at the human review stage.

Monitoring note: For hallucination-sensitive outputs (legal/medical), always include human verification and explicit sources.

GPT-5 Mini — Smart Monitoring & Cost Control Strategies

- Daily token dashboard by feature and model.

- Alerts for token spikes (+20% vs baseline).

- Per-feature budgets with hard caps.

- Automated fallbacks to cached replies on spend spikes.

- Sampling audits: have humans review 5% of outputs weekly.

- Rate-limited endpoints to protect the budget during surges.
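Two of these controls, the spike alert and the per-feature hard cap, are simple enough to sketch directly. The names below are hypothetical and the state is kept in memory for illustration; a real deployment would persist spend and reset it per billing period.

```python
def is_token_spike(today_tokens, baseline_tokens, threshold=0.20):
    """True when usage exceeds the baseline by more than the threshold."""
    return today_tokens > baseline_tokens * (1 + threshold)


class FeatureBudget:
    """Hard spending cap for one feature."""

    def __init__(self, cap_usd):
        self.cap_usd = cap_usd
        self.spent_usd = 0.0

    def try_spend(self, cost_usd):
        """Record the spend and return True, or return False if it would exceed the cap."""
        if self.spent_usd + cost_usd > self.cap_usd:
            return False  # caller should fall back to a cached reply
        self.spent_usd += cost_usd
        return True


budget = FeatureBudget(cap_usd=1.0)
print(budget.try_spend(0.5), budget.try_spend(0.75), budget.try_spend(0.5))
print(is_token_spike(130_000, 100_000))
```

When `try_spend` returns False, the calling code serves the cached or fallback reply instead of issuing a new request.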

FAQs

Q: Can GPT-5 Mini handle long documents?

A: Yes. OpenAI reports a 400k token context window and large max-output tokens, so Mini works well for long transcripts and single-document workflows; always test with your format.

Q: How much does GPT-5 Mini cost per 1,000 tokens?

A: Using the published pricing (Input $0.25/1M, Output $2.00/1M), 1,000 input tokens plus 1,000 output tokens cost $0.00225 combined.

Q: When should I use Mini instead of Pro?

A: Use Mini when throughput and cost matter more than the absolute best reasoning performance. Use Pro/Full for the hardest legal, medical, or multi-step reasoning tasks. Run an A/B test on representative prompts.

Q: Does GPT-5 Mini hallucinate less than older mini models?

A: Community benchmarks show improvements, but hallucination risk depends on prompt engineering, domain, and verification steps. Always include checks for sensitive outputs.

Q: Does GPT-5 Mini support streaming?

A: Yes. Streaming is supported in the API and can improve perceived latency for chat UIs. Check the API docs for your SDK.

Conclusion

GPT-5 Mini is a production-focused option best suited for teams that prioritize scale and cost efficiency. Its large context window and lower per-token costs let you design simpler pipelines and long-document workflows. That said, a careful migration requires A/B testing, token profiling, and safety audits. Use Mini where structured outputs and throughput matter; reserve Pro for the highest-stakes reasoning tasks.