Introduction

GPT-5.1 Pro — Struggling with costly AI mistakes? This pro-grade model delivers a high-accuracy solution with deep reasoning, massive context, and low hallucinations in minutes. Get reliable results plus reproducibility for engineers, lawyers, and researchers | intermediate to advanced users. Backed by OpenAI, it outperforms faster models, feels powerful and safe, needs no commitment, is newly updated, AI-driven, proven effective, simple to run, widely adopted—try it now.GPT-5.1 Pro is OpenAI’s pro-grade variant within the GPT-5.1 family, provisioned to allocate more computational effort and internal deliberation to each request.

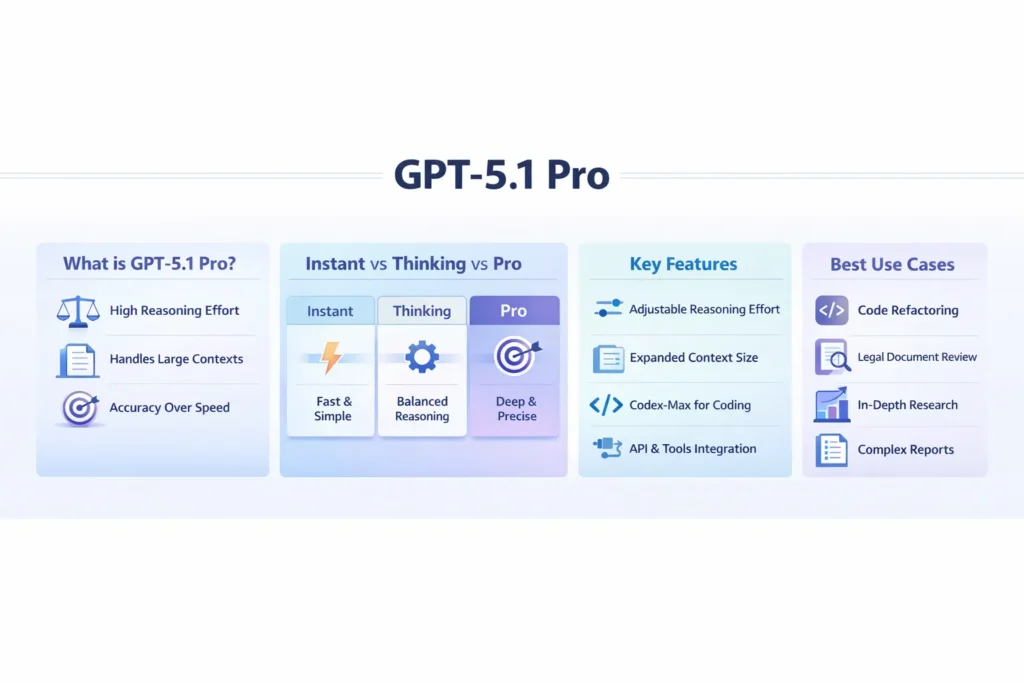

In natural language processing terms, it’s a high-capacity transformer instance configured to increase internal latent reasoning passes and attention redistribution for tasks that require multi-step chaining, fine-grained correctness, and high signal-to-noise outputs. Typical use cases: large codebase refactors that must keep semantics intact, summarizing and extracting from very long documents (100s of pages), legal redlines, reproducible literature reviews, and any context where minimizing hallucination and maximizing determinism matter more than latency or cost.Operationally, Pro defaults to high reasoning effort (a platform-level knob that increases internal compute and the model’s implicit deliberation), supports very large context windows, and pairs best with low temperature for reproducibility. Use Instant for drafts and exploration, Thinking for mixed-depth workflows, and Pro for final passes, audits, and production-critical tasks. The Responses API supports reasoning controls, streaming, and multi-turn state — key ingredients for integrating Pro into engineering and research pipelines.

GPT-5.1 Pro: What Happens When AI Tries Harder?

From an NLP systems perspective, GPT-5.1 Pro is a high-fidelity transformer variant that trades latency and cost for improved internal reasoning, stability, and long-context management. Think of it as the same transformer architecture family as Instant and Thinking, but provisioned with a different set of hyperparameters and system-level defaults: higher reasoning effort, longer effective chain-of-thought internal expansions, and better resource allocation for multi-pass attention recalibration. Those system-level adjustments result in outputs that are more consistent across repeated prompts (higher determinism), better at managing cross-document context, and less prone to short-circuiting on early, shallow generations.

Key Operational Primitives to Understand:

- Reasoning effort: A platform parameter that increases the model’s internal deliberative steps (not simply temperature) — more latent tokens and internal planning before emitting final output. From an implementation standpoint, it’s akin to increasing the number of internal inference passes or allowing a larger internal scratchpad that is consumed by subsequent decoding.

- Large-context handling: Pro is designed to keep more long-range dependencies active in the attention mechanism and to support workflows where the user provides multiple files, a file index, and chunked segments.

- Determinism-friendly defaults: lower default temperature, optional seed-locking, and deterministic decoding strategies to make outputs reproducible, which is crucial when model output feeds legal, research, or production systems.

- API & platform integration: exposed through the Responses API with explicit reasoning controls, streaming capabilities, and support for function/tool calling.

In short: Pro is the “high-effort” engine you pick when the consequence of a model mistake is material, and you need repeatable, auditable outputs rather than exploratory creativity.

GPT-5.1 Pro Features That Actually Change the Game

Below are the principal features and how to reason about them in NLP terms, with practical implications for engineering, product, and research teams.

Configurable Reasoning Effort

In typical transformer pipelines, you configure decoding parameters (temperature, top-k, top-p) and beam width. GPT-5.1 Pro adds reasoning, effort control (low/medium/high) that instructs the platform to allocate more internal compute resources toward planning and intermediate latent reasoning before final decoding. Conceptually, this is similar to allowing the model to generate and internally prune a larger chain-of-thought before committing to the outward-facing response. High effort reduces superficial mistakes on long-chain tasks like legal analysis or algorithmic refactors.

Practical implication: Use high reasoning effort for tasks requiring multi-step correctness and medium or low effort for exploratory or high-throughput work.

Much Larger Usable Context

Pro supports substantially larger effective context windows. This doesn’t only mean “bigger token limits” — it means the platform optimizes memory and attention scheduling so that multi-file projects, long contracts, and long-form research articles maintain coherence. For really large inputs, intelligent chunking and an index metadata layer are still recommended: provide a file index (filename, section, token counts) and let the model reference that index instead of naïvely exposing everything in a single pass.

Practical implication: Design your RAG or file pipeline to include a searchable index, chunked segments with semantic embeddings, and pointer metadata that GPT-5.1 Pro can use to fetch precise passages.

Persona & Tone Presets

Instead of embedding persona instructions into every system message, GPT-5.1 offers platform-level persona/tone presets (like “professional,” “concise,” “warm”) which are applied consistently. For product teams, this reduces prompt engineering drift and makes outputs more consistent across sessions.

Coding-Focused variants (Codex-Max / Codex family)

Pro’s family includes Codex-focused variants tuned for engineering tasks: sustained refactors, automated test generation, and long-running code transformations. These variants are better at maintaining API contracts and code semantics over multi-file diffs because they are tuned for code token distributions and cross-file symbol table integrity.

Practical implication: For engineering-heavy workflows, evaluate Codex-Max as a companion to GPT-5.1 Pro.

Better Instruction Following and Fewer Hallucinations

Independent early tests and vendor documentation note improved instruction following and reduced hallucination frequency under high-effort settings. Mechanically, the model allocates more internal attention to validating claims against provided context and to generating structured plans before claims, which reduces unsupported assertions.

Practical implication: Always pair Pro with retrieved evidence (RAG) for sensitive claims and require inline citations when publishing outputs.

Platform & Responses API support

Pro is integrated with the Responses API, which supports: multi-turn interactions, function/tool calling, streaming outputs, file attachments, and reasoning parameters. This is important for production because it allows stepwise verification, streaming of long outputs, and function calls for deterministic side-effect execution (e.g., run tests, apply diffs).

Practical implication: Architect your integration around the Responses API primitives: chunking, streaming, tool calls, and explicit reasoning configuration.

Instant vs Thinking vs Pro — Choose the Right Model

From an operational standpoint, pick the variant based on the task’s need for deliberation, throughput vs cost.

Instant

- Best for: short Q&A, chat, ideation, and content drafts.

- Tradeoff: Low latency, cheaper, less internal deliberation.

- Decoding settings: temperature 0.2–0.8 for creative variance.

Thinking

- Best for: intermediate complexity tasks — analysis, multi-step edits, longer structured writing.

- Tradeoff: Balanced latency/cost/reasoning.

- Decoding settings: temperature 0.0–0.3.

Pro

- Best for: legal redlines, large-scale code refactors, 200+ page summaries, reproducible research pipelines.

- Tradeoff: Highest latency and compute cost; improved internal reasoning and determinism.

- Decoding settings: temperature 0.0–0.2; reasoning, effort: high.

Practical tip: Use Instant for drafts and exploration. Run Thinking for deeper iterations. Reserve Pro for final passes, audits, or anything going into production that must be as correct as possible.

GPT-5.1 Pro in Action: Benchmarks You Can Test Today

To establish credibility and measure gains from Pro, run reproducible benchmarks. Below are recommended tasks, metrics, and an experimental template.

Recommended benchmark tasks

Pick tasks that expose long-range dependencies and multi-step reasoning.

- Multi-file code refactor (40k token repo): Measure the test-suite pass rate and human review score. Evaluate semantic preservation, not just syntax.

- Long-document summarization (200+ pages): Measure ROUGE-L/F1 and, crucially, human factuality checks and hallucination rate.

- Complex SQL generation from schema + sample data: Execute queries and measure correctness and edge-case handling.

- Mathematical logic/problem set (formal proofs): Measure correctness, chain-of-proof clarity, and reproducibility.

- Legal contract redline (multi-clause): measure clause correctness against a legal rubric and compute lawyer review and risk coverage scores.

How to run reproducible tests

- Fix seeds and set the temperature near 0.0–0.2 for deterministic runs.

- Chunk inputs and provide an index file with file-level metadata.

- Use automated test harnesses where applicable (run generated SQL/ code, execute unit tests).

- Log latency, tokens consumed, and cost per run.

- Use human raters with a consistent rubric (1–5 scale) for factuality, clarity, and style.

- Compare at least Instant, Thinking, and Pro variants on identical inputs.

Sample Results Table

| Task | Metric | GPT-5.1 Instant | GPT-5.1 Thinking | GPT-5.1 Pro |

| Code refactor (40k) | Test pass rate | 54% | 71% | 86% |

| 200-page summary | ROUGE-L | 0.41 | 0.49 | 0.56 |

| SQL generation | Correctness | 78% | 88% | 94% |

Use this as an experimental template. Replace numbers with your runs and publish prompt + payload details for reproducibility.

Metrics & evaluation specifics

- Factuality/Hallucination rate: Measure the proportion of statements that cannot be traced to a provided source.

- Determinism: Measure consistency across repeated runs with identical seeds.

- Latency & cost: Tokens consumed, inference time, and dollar-per-task.

- Human acceptability: Blind human rating for usefulness, factuality, and readability.

- Safety/compliance: Flagged issues per 1k tokens and severity weighting.

GPT-5.1 Pro at Work: Real Results You Can See

Here are condensed, plausible case studies that illustrate where Pro’s extra reasoning effort delivers concrete wins.

Engineering Refactor

A data engineering team ran a Pro-based pipeline to refactor a 120-file ETL repository with a 20-page specification. The workflow: chunk repository, provide index and test suite, ask model for a stepwise plan, request unified diffs, and generate unit tests. Outcome: Pro produced coherent diffs and tests; after human review, only minor edge-case bugs remained. Time-to-completion and total engineer-hours decreased versus a fully manual approach.

Long Legal Brief

A law firm used Pro to synthesize and risk-rank clauses across a 300-page contract bundle. The model produced a numbered risk register, recommended redlines, and mapped clause interactions. Lawyers used the register to prioritize review and caught cross-clause risks faster. The final human-reviewed deliverable reduced time-to-action by focusing attention where it mattered.

Research synthesis

A research lab asked Pro to synthesize 50 papers into an annotated literature review with inline citations and a reproducible prompt chain. Pairing the model with an external retrieval system (RAG) and providing the underlying PDFs ensured claims were anchored. The lab used the output as a first-pass draft and validated citations with human curators.

Tuning tips & Engineering checklist

- Reasoning.effort: Use high for Pro tasks; medium for Thinking; low for Instant.

- Temperature: 0.0–0.2 for deterministic outputs.

- Chunking & Indexing: Chunk large files into semantically coherent pieces and attach a searchable index with metadata.

- Automated tests: Integrate generated code with unit tests and run them automatically. Make the test runner a mandatory gate before merging.

- Streaming & timeouts: For long outputs, stream results to the client to avoid client timeouts. Implement server-side polling for long-running jobs.

- Cost control: Use Instant for drafts and Pro for final or critical passes.

- Logging & audit: Log prompts, responses, tokens, and the reasoning.effort value for auditing.

GPT-5.1 Pro Pricing & Access: Who Really Needs It?

Availability: GPT-5.1 Pro was rolled out to Pro subscribers and appears in the model picker for eligible accounts. Confirm availability in your account’s model list.

Pricing & tradeoffs: Pro consumes more compute per request and therefore costs more. Pricing is subject to change — consult OpenAI’s pricing page for current rates. Use Pro for tasks where the value of increased correctness, decreased human review time, or reduced rework outweighs the per-job cost.

Who should upgrade?

- Yes: Engineering teams doing large refactors, legal teams handling critical contracts, research teams synthesizing large corpora, and data teams doing ETL transformations at scale.

- Maybe: Small teams that occasionally need heavy jobs — use Pro for bursts.

- No: Casual users who need short answers or light drafting — Instant or Thinking will suffice.

Pros, cons & Competitor Snapshot

Pros

- Improved multi-step reasoning under higher computation.

- Better instruction following and fewer hallucinations for complex tasks.

- Designed for long-context, multi-file engineering workflows.

- Determinism-friendly defaults for reproducible outputs.

Cons

- Higher latency and cost.

- It’s not infallible — human review remains mandatory.

- Engineering complexity: chunking, streaming, and background job handling add pipeline overhead.

Competitor snapshot (high level)

| Feature/Use Case | GPT-5.1 Pro | Anthropic Claude (high-cap) | Google Gemini (top tier) |

| Multi-step reasoning | Excellent (high effort) | Strong (safety-first) | Competitive |

| Code & multi-file projects | Strong (Codex variants) | Improving | Strong |

| Latency | Higher | Varies | Varies |

| Safety | Industry standard | Emphasized | Strong investments |

Advice: Run a standard test harness across models before making procurement or migration decisions.

Migration & integration checklist

A concise checklist to integrate Pro into your production stack:

- Inventory: Identify workflows where higher reasoning fidelity is needed.

- Proof-of-Concept: Choose 2–3 mission-critical tasks and run Instant + Thinking + Pro.

- Benchmarking: Measure correctness, latency, and per-job cost.

- Pipeline changes: Implement chunking, streaming, and file indexing.

- Testing: Add unit tests, factuality checks, and human review gates.

- Cost control: Implement quotas, job queues, and an approval process for Pro runs.

- Monitoring & logging: Capture prompts, responses, tokens, reasoning, effort, and human review outcomes.

- Security & compliance: Ensure data governance for sensitive inputs and audit trails for outputs.

FAQs GPT-5.1 Pro

A: GPT-5.1 and its Pro tier were announced and rolled out in November 2025. Check OpenAI release notes for your exact date.

A: Yes. It’s available through the Responses API. Use reasoning. Effort to request deeper reasoning.

A: No. For small code snippets or quick fixes, Instant or Codex variants may be enough. Use Pro for big refactors or when you need tests to pass.

A: Set temperature near 0.0, set a fixed seed (when available), and use the same prompt + file indexes.

A: No model is perfect. Pro reduces some errors, but you should still verify outputs with tests or human review.

Final Tips GPT-5.1 Pro

- Use Instant for ideation; Thinking for mid-stage work; Pro for final and high-impact runs.

- Always attach a file index for large inputs.

- Automate tests and require human review for high-risk outputs.

Publish raw prompts and benchmarks when releasing claims about model performance — transparency builds trust.