Introduction

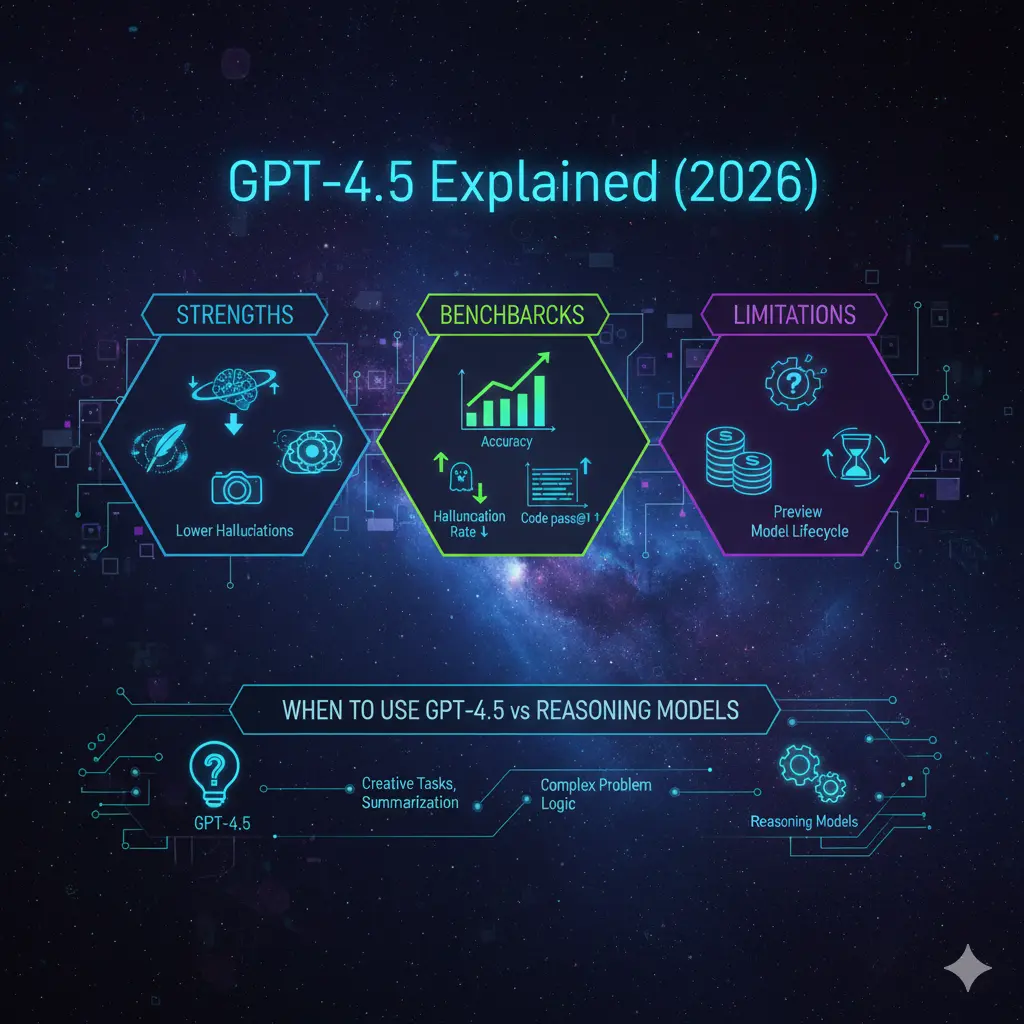

GPT-4.5 is a research-preview family of transformer-based autoregressive chat models from OpenAI, first released in February 2025. It emphasizes polished natural language generation (NLG), improved pattern recognition on multimodal inputs, and a reduced incidence of factual errors (hallucinations) in many public evaluations. In practice, use it for creative NLG, dialogue agents, and general-purpose code generation backed by unit tests. For tasks requiring deep, formal stepwise reasoning (long proofs, formal verification), prefer a reasoning-specialist model or an architecture that exposes chain-of-thought traces. In production, instrument reproducible benchmarks (automatic metrics plus human annotations), decouple model endpoints behind an adapter layer, and design cheap, robust fallbacks.

Why You Need This GPT-4.5 Guide Now

You’re reading this because you want a single, replicable, and methodical guide for deciding whether a model in the GPT-4.5 family belongs in your stack. This document reframes product, engineering, and research questions in NLP-centric language so you can:

- Understand what GPT-4.5 optimizes for (fluency, style transfer, pattern recognition, calibrated confidences).

- See how to measure it on closed-book datasets, open-ended generation tasks, and human-judged metrics.

- Copy reproducible benchmark code, instrumentation, and CSV schemas to publish.

- Make deployment and migration decisions while explicitly accounting for lifecycle risks (preview snapshots can be deprecated).

What Makes GPT-4.5 Different in 2026

At an abstract level, GPT-4.5 is an autoregressive transformer variant trained with large-scale unsupervised pretraining and supervised and RL-based fine-tuning (policy updates and alignment tuning). Key operational characteristics:

- Decoding behaviour: Tuned to produce coherent text with fewer low-probability lexical leaps. For constrained outputs, use a lower temperature with nucleus (top-p) sampling; raise the temperature when you want more creative variation.

- Representation quality: Embeddings and internal activations show improved separability for certain multimodal alignment tasks (text+image token fusion), helping downstream classification or grounding.

- Calibration & confidence: Outputs are more conservatively calibrated on many public tests; in independent trend analyses, probability estimates (or proxy “confidence” signals) correlate better with factuality than those of earlier general-purpose chat models.

- No guarantee of transparent chain-of-thought: By default, GPT-4.5 returns concise answers rather than exposing lengthy internal reasoning sequences — it was optimized for succinct, user-ready outputs rather than raw introspective traces.

- Preview lifecycle risk: Some snapshots (e.g., gpt-4.5-preview) are experimental and have scheduled deprecation windows — plan to isolate such variants in your stack.
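The temperature and nucleus-sampling knobs mentioned under decoding behaviour can be illustrated with a small, self-contained sketch. It applies temperature scaling and then top-p filtering to a toy dictionary of logits. The function name and the toy values are hypothetical, and hosted APIs apply these parameters server-side, so this shows the general technique only, not how GPT-4.5 decodes internally.

```python
import math
import random


def sample_top_p(logits, temperature=0.7, top_p=0.9, rng=None):
    """Sample one token using temperature scaling, then nucleus (top-p) filtering.

    logits: dict mapping token -> unnormalized score (toy stand-in for model output).
    temperature <= 0 is treated as greedy decoding.
    """
    if temperature <= 0:
        return max(logits, key=logits.get)
    rng = rng or random.Random()

    # Temperature-scaled softmax; subtracting the max keeps exp() from overflowing.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    max_score = max(scaled.values())
    weights = {tok: math.exp(s - max_score) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)

    # Nucleus: smallest set of top tokens whose cumulative probability reaches top_p.
    nucleus, cumulative = [], 0.0
    for tok, p in ranked:
        nucleus.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Sample within the nucleus (implicitly renormalized by its total mass).
    r = rng.random() * cumulative
    for tok, p in nucleus:
        r -= p
        if r <= 0:
            return tok
    return nucleus[-1][0]  # guard against floating-point leftovers
```

With the toy logits `{"the": 5.0, "a": 3.0, "zebra": -2.0}`, `temperature=0.7` and `top_p=0.9` always yield `"the"`, because that token alone holds about 95% of the probability mass; raising either parameter widens the set of candidate tokens.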

GPT-4.5: Strengths, Limits, and What to Watch

Strengths

- Surface-level coherence & style adaptation: Excellent at generating marketing copy, dialogue turns, and controlled stylistic transfers (formal → casual).

- Multimodal pattern recognition: Better at extracting structured information from short documents and images when image/text ingestion is enabled.

- Lower measured hallucination: Many independent test suites report fewer fabrications on closed factual QA relative to some previous chat models.

- Human-like conversational grounding: Effective for assistant-style use cases that need a consistent persona and context preservation.

Limitations

- Deep symbolic/stepwise reasoning: Models specialized for chain-of-thought remain preferable when formal stepwise proof is needed.

- Cost & throughput: Higher compute per token; weigh the tradeoff when throughput or cost per request is a priority.

- Preview coupling risk: Do not hard-code preview snapshot endpoints in critical workflows.

Why Reproducible Benchmarks Are Crucial for GPT-4.5

Benchmarks are only useful when replicable. Differences in tokenization, prompt templates, sampling seeds, and evaluation scripts can yield divergent claims. For meaningful decisions:

- Use closed-book question sets with ground truth for exact-recall tests.

- Use open-ended generation datasets with human evaluation buckets (usefulness, safety, factuality).

- Record random seeds, tokenizer versions, prompt templates, sampling parameters (temperature, top-p, top-k, beam width).

- Publish raw model outputs, rater guidelines, and aggregated human judgements.

Why the Above Matters:

Differences in pretraining corpora, fine-tuning curricula, and prompt wording can drastically alter hallucination rates and apparent accuracy, so results are only comparable when the full evaluation setup is recorded.
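One way to make sure seeds, tokenizer versions, prompt templates, and sampling parameters are actually recorded is to write a small run manifest next to every results file. The sketch below assumes a Python harness; `RunManifest`, its field names, and the 12-character fingerprint are hypothetical choices, not a standard schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class RunManifest:
    """Configuration needed to re-run one benchmark pass (field names are illustrative)."""
    model_snapshot: str        # exact snapshot name, not a floating alias
    tokenizer_version: str
    prompt_template: str       # frozen system + user template text
    temperature: float
    top_p: float
    seed: int
    eval_script_version: str   # e.g. a git commit hash of the scoring code
    extra_sampling: dict = field(default_factory=dict)  # top-k, beam width, etc. if used

    def to_json(self) -> str:
        # sort_keys gives a stable serialization, so identical configs hash identically.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        # Short content hash to stamp onto every output row.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()[:12]
```

Writing `fingerprint()` into every row of the raw-output CSV lets readers match each output to the exact configuration that produced it, and publishing `to_json()` alongside the CSV documents that configuration.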

GPT-4.5: Key Benchmark Categories You Can’t Ignore

- Factual QA / Closed-book knowledge
  - Metric: exact-match accuracy, F1.
  - Datasets: Natural Questions, curated domain-specific FAQs.

- Hallucination & safe-answering
  - Metric: hallucination rate (binary + severity).
  - Protocol: include out-of-domain or adversarial queries; enforce an “I don’t know” policy for uncertain answers.

- Code generation
  - Metric: pass@k (unit-test-based), syntactic error rate.
  - Protocol: run generated code in sandboxed unit tests (HumanEval-like).

- Reasoning / Multi-hop
  - Metric: stepwise correctness, final-answer accuracy.
  - Protocol: include chain-of-thought prompts and evaluate whether intermediate steps are correct.

- Real-world user tasks
  - Metric: human usefulness rating (1–5), time-to-complete.
  - Protocol: sample real user queries and run a blind A/B test against a baseline.

- Latency & Cost
  - Metric: ms/token, tokens/sec, $/1k tokens consumed.
  - Protocol: measure under production-like batching and concurrency.
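For the code-generation category, pass@k is usually computed with the unbiased estimator introduced alongside HumanEval (Chen et al., 2021): generate n >= k samples per problem, count the c that pass the unit tests, and estimate pass@k = 1 - C(n - c, k) / C(n, k). A minimal Python sketch using the numerically stable product form of that ratio; the function names are illustrative.

```python
from statistics import mean


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them passed the tests."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Any k-sample subset must include at least one passing sample.
        return 1.0
    # C(n-c, k) / C(n, k) == prod_{i=n-c+1}^{n} (1 - k/i), which avoids huge binomials.
    fail_all = 1.0
    for i in range(n - c + 1, n + 1):
        fail_all *= 1.0 - k / i
    return 1.0 - fail_all


def benchmark_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return mean(pass_at_k(n, c, k) for n, c in results)
```

For k = 1 the estimator reduces to c / n, the fraction of passing samples, so `pass_at_k(10, 3, 1)` is 0.3 up to floating-point rounding.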

Sample Evaluation Checklist for Reliably Testing GPT-4.5:

- Freeze tokenizer and model snapshot names.

- Use reproducible seeds and deterministic sampling where possible.

- Publish notebooks and raw CSVs to GitHub or a reproducible artifact store.

- Keep human rater instructions fixed and publish them with results.

- Use multi-rater majority vote for binary labels; report inter-rater agreement (Cohen’s kappa for two raters, Fleiss’ kappa for three or more).
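For three or more raters, Fleiss’ kappa can be computed directly from the per-item labels. The sketch below implements the standard formula (mean observed per-item agreement compared with chance agreement from the overall label proportions); the input format, a list of label lists with the same number of raters per item, is an assumption of this example.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for items that were each labeled by the same number of raters.

    ratings: list of per-item label lists, e.g. [["halluc", "ok", "halluc"], ...].
    """
    if not ratings:
        raise ValueError("no items")
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(item) != n_raters for item in ratings):
        raise ValueError("every item needs the same number (>= 2) of ratings")

    counts = [Counter(item) for item in ratings]
    categories = {label for item in ratings for label in item}
    n_items = len(ratings)

    # Observed agreement per item, averaged over items.
    p_bar = sum(
        (sum(c[cat] ** 2 for cat in categories) - n_raters) / (n_raters * (n_raters - 1))
        for c in counts
    ) / n_items

    # Chance agreement from the overall share of each label.
    total = n_items * n_raters
    p_e = sum((sum(c[cat] for c in counts) / total) ** 2 for cat in categories)

    if p_e == 1.0:
        return float("nan")  # only one label ever used: kappa is undefined
    return (p_bar - p_e) / (1 - p_e)
```

Values near 1 indicate strong agreement; values at or below 0 mean raters agree no more than chance would predict, which usually signals that the rater instructions need tightening.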

Sample Benchmark Table for GPT-4.5 (Illustrative Values):

| Task | Metric | GPT-4 | GPT-4.5 (example) | Notes |
|---|---|---|---|---|
| Simple factual QA | Accuracy (%) | 78.2 | 84.1 | closed-book auto-eval |
| Hallucination tests | Hallucination rate (%) | 61.8 | 37.1 | fewer fabrications on many public tests |
| Coding (HumanEval) | pass@1 (%) | 64.0 | 68.5 | run 100 samples with unit tests |
| Chain-of-thought math | Accuracy (%) | 72.0 | 65.0 | prefer a reasoning-specialist model here |
| Latency | ms/token | 18 | 20 | example only; infra-dependent |

Avoiding GPT-4.5 Mistakes: Factuality Checks You Must Do

Definition (operational): Hallucination rate = the fraction of responses that the judge, comparing against ground truth, labels as containing one or more factual errors.

Design a Robust Hallucination Evaluation:

- Use closed-book queries with objective ground truth.

- Enforce an uncertainty-safe policy: instruct the model to output an explicit “I don’t know” when its confidence falls below a chosen threshold.

- Collect severity labels for errors: minor drift vs. complete fabrication.

- Use 3+ human raters and compute the majority label; report inter-rater agreement.
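The majority-vote step and the operational hallucination-rate definition can be combined in a few lines. A sketch, assuming a hypothetical three-level label set (`ok`, `minor_drift`, `fabrication`) that mirrors the severity split above. Ties are broken toward the more severe label, which is a deliberately conservative choice, not a standard rule.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical severity scale; higher = worse.
SEVERITY = {"ok": 0, "minor_drift": 1, "fabrication": 2}


@dataclass
class RatedResponse:
    response_id: str
    rater_labels: list[str]  # one label per rater, drawn from SEVERITY


def majority_label(labels: list[str]) -> str:
    """Most common label; ties go to the more severe label (a conservative choice)."""
    counts = Counter(labels)
    top = max(counts.values())
    tied = [label for label, n in counts.items() if n == top]
    return max(tied, key=SEVERITY.__getitem__)


def hallucination_report(responses: list[RatedResponse]) -> dict:
    """Hallucination rate = share of responses whose majority label is not 'ok'."""
    finals = Counter(majority_label(r.rater_labels) for r in responses)
    n = sum(finals.values())
    if n == 0:
        raise ValueError("no rated responses")
    return {
        "n": n,
        "hallucination_rate": (n - finals["ok"]) / n,
        "minor_drift_rate": finals["minor_drift"] / n,
        "fabrication_rate": finals["fabrication"] / n,
    }
```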

Mitigations:

- Constrained decoding: Reduce temperature or use top-p/top-k to minimize improbable tokens.

- Answer-verification loop: Run an internal consistency check (e.g., verify named entities or numerical facts against a knowledge base).

- Retrieval augmentation: Hybrid retrieval-augmented generation (RAG) — fetch documents and condition outputs explicitly on retrieved text with citation snippets.

- Calibrated abstention: Implement a policy where the model must abstain if the retrieval confidence is low.
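The calibrated-abstention policy can be expressed as a thin gate in front of generation. In this sketch the retrieval scores, the 0.6 threshold, and the `generate` callable are all assumptions; the threshold in particular should be tuned on held-out data where you know which answers were correct.

```python
from dataclasses import dataclass
from typing import Callable

ABSTAIN_MESSAGE = "I don't know based on the available sources."


@dataclass
class Passage:
    text: str
    score: float  # retrieval confidence in [0, 1]; assumed to be calibrated


def answer_or_abstain(
    question: str,
    passages: list[Passage],
    generate: Callable[[str, list[str]], str],
    min_score: float = 0.6,  # hypothetical threshold; tune on held-out data
) -> dict:
    """Generate only from sufficiently confident passages; abstain otherwise."""
    supporting = [p.text for p in passages if p.score >= min_score]
    if not supporting:
        return {"answer": ABSTAIN_MESSAGE, "abstained": True, "citations": []}
    # `generate` stands in for your model call; it should condition on `supporting`.
    answer = generate(question, supporting)
    return {"answer": answer, "abstained": False, "citations": supporting}
```

Returning the supporting passages as `citations` keeps the retrieved text available for the citation snippets described under retrieval augmentation.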

GPT-4.5 vs GPT-4 / Reasoning Models Decision Matrix

| Use case | Prefer GPT-4.5 | Prefer GPT-4 / reasoning-specialist |
|---|---|---|
| Marketing copy, storytelling | ✅ | ❌ |
| Short-to-medium code generation | ✅ | ❌ |
| Customer-facing conversations | ✅ | ❌ |
| Formal proofs, long math reasoning | ❌ | ✅ |
| Ultra-low-cost trivial tasks | ❌ | ✅ (smaller models) |

Why: GPT-4.5 optimizes for fluent, pragmatic outputs; reasoning-specialist models are architected and trained for explicit multi-step symbolic reasoning.

GPT-4.5: Access, Costs, and Model Lifecycle Explained

Availability: GPT-4.5 launched as a preview family; early access rolled out to paid developer/pro tiers and some managed partners.

Pricing & Compute Tradeoffs:

- Expect higher inference cost per token compared to smaller families.

- Measure cost with an explicit formula (see the monthly cost formula in the next section).

Lifecycle Note:

Preview snapshots (e.g., gpt-4.5-preview) can be deprecated. If you rely on preview endpoints, prepare migration tooling and automated tests to validate model switches.

GPT-4.5: Calculating Cost vs Performance Effectively

Monthly Cost Formula:

Monthly cost = (Avg_tokens_per_request * requests_per_month / 1000) * price_per_1k_tokens + fixed_subscription + infra_costs

Worked Example:

- Avg tokens (in+out) = 1,200

- Requests/month = 50,000

- Price per 1k tokens = $0.12

- Subscription fee = $200

Token bill = (1,200 * 50,000 / 1,000) * $0.12 = 60,000 * $0.12 = $7,200

Total ≈ $7,400/month ($7,200 token bill + $200 subscription, assuming no additional infra costs)
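The monthly cost formula translates directly into a helper for a budgeting notebook. The first function reproduces the worked example above. The second is an assumed extension for providers that price input and output tokens separately; any prices you pass to it are your own placeholders, not published rates.

```python
def monthly_cost(avg_tokens_per_request, requests_per_month, price_per_1k_tokens,
                 fixed_subscription=0.0, infra_costs=0.0):
    """Monthly cost with a single blended price per 1k tokens (input + output)."""
    token_bill = avg_tokens_per_request * requests_per_month / 1000 * price_per_1k_tokens
    return round(token_bill + fixed_subscription + infra_costs, 2)


def monthly_cost_split(avg_input_tokens, avg_output_tokens, requests_per_month,
                       input_price_per_1k, output_price_per_1k,
                       fixed_subscription=0.0, infra_costs=0.0):
    """Variant for pricing that charges input and output tokens at different rates."""
    token_bill = requests_per_month / 1000 * (
        avg_input_tokens * input_price_per_1k + avg_output_tokens * output_price_per_1k
    )
    return round(token_bill + fixed_subscription + infra_costs, 2)
```

`monthly_cost(1_200, 50_000, 0.12, fixed_subscription=200)` returns `7400.0`, matching the worked example; pass a non-zero `infra_costs` once you know your hosting and monitoring spend.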

Recommendations:

- Cache deterministic responses.

- Use smaller models for cheap or short tasks.

- Batch inputs if your latency constraints allow.

- Monitor cost per conversion (business metric).

GPT-4.5: How to Build a Reproducible Benchmark That Works

- Assemble gold datasets: 10–100 high-quality examples per critical flow.

- Define metrics: automated (accuracy, pass@1) and human (usefulness 1–5).

- Standardize prompt templates: freeze system and user messages.

- Deterministic runs: use seeds; record sampling parameters.

- Human evals: run blind A/B tests (randomize order, de-identify model tags).

- Failure corpus: save and tag the top 100 worst outputs for analysis.

- Pilot & iterate: run a small human-in-the-loop (HITL) pilot before full rollout.

- Publish artifacts: CSVs, notebooks, and rater instructions.
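Blind A/B evaluation mostly comes down to bookkeeping: randomize which model's output appears on each side, hide model names from raters, and keep a separate answer key. A sketch under the assumption that each row holds one prompt and two candidate outputs; the row schema and function names are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class BlindedItem:
    item_id: str
    prompt: str
    left: str   # raters see only "left" and "right", never model names
    right: str


def build_blinded_pairs(rows, seed=1234):
    """Randomize side assignment and presentation order; return (items, answer_key).

    rows: dicts with keys 'item_id', 'prompt', 'output_a', 'output_b' (hypothetical schema).
    Keep answer_key away from raters until all ratings are collected.
    """
    rng = random.Random(seed)  # seeded so the blinding itself is reproducible
    items, answer_key = [], {}
    for row in rows:
        a_left = rng.random() < 0.5
        left, right = ((row["output_a"], row["output_b"]) if a_left
                       else (row["output_b"], row["output_a"]))
        items.append(BlindedItem(row["item_id"], row["prompt"], left, right))
        answer_key[row["item_id"]] = {"left": "A" if a_left else "B",
                                      "right": "B" if a_left else "A"}
    rng.shuffle(items)  # also randomize the order in which items are shown
    return items, answer_key


def unblind(preferences, answer_key):
    """Map rater choices {'item_id': 'left' | 'right'} back to 'A' / 'B'."""
    return {item_id: answer_key[item_id][side] for item_id, side in preferences.items()}
```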

Migration and Deployment Tips You Can’t Ignore

- Do not swap blindly. Stage rollouts and run A/B tests.

- Stagger releases. Start with non-critical flows (marketing drafts).

- HITL for high-risk flows. Legal and medical outputs should have mandatory human checks.

- Adapter layer pattern: create an abstraction that normalizes output schemas, confidence fields, and tokenization differences. This enables model switching with minimal upstream changes.

- Automated regression tests: run end-to-end tests after any model switch.

- Fallbacks: embed a policy to route low-confidence or high-risk queries to a more conservative baseline.
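The adapter-layer and fallback patterns can be sketched together: each model client is wrapped so it returns the same normalized response type, and a router decides when to fall back to the conservative baseline. The class names, the `confidence` field, and the 0.7 threshold are illustrative assumptions; how you derive a confidence score (log-probabilities, a verifier, retrieval scores) is model- and product-specific.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class NormalizedResponse:
    text: str
    confidence: float  # normalized to [0, 1]; derivation is model-specific
    model_id: str


class ModelAdapter(Protocol):
    """Anything that turns a prompt into a NormalizedResponse."""
    def complete(self, prompt: str) -> NormalizedResponse: ...


@dataclass
class StaticAdapter:
    """Test double standing in for a real client in regression tests."""
    model_id: str
    confidence: float
    fail: bool = False

    def complete(self, prompt: str) -> NormalizedResponse:
        if self.fail:
            raise RuntimeError(f"{self.model_id} unavailable")
        return NormalizedResponse(f"[{self.model_id}] {prompt}", self.confidence, self.model_id)


class Router:
    """Primary model first; conservative baseline for high-risk, low-confidence, or failed calls."""

    def __init__(self, primary: ModelAdapter, fallback: ModelAdapter, min_confidence: float = 0.7):
        self.primary = primary
        self.fallback = fallback
        self.min_confidence = min_confidence  # hypothetical threshold

    def complete(self, prompt: str, high_risk: bool = False) -> NormalizedResponse:
        if high_risk:
            return self.fallback.complete(prompt)
        try:
            response = self.primary.complete(prompt)
        except Exception:
            # e.g. a retired preview snapshot: degrade to the baseline instead of failing
            return self.fallback.complete(prompt)
        if response.confidence < self.min_confidence:
            return self.fallback.complete(prompt)
        return response
```

Because callers only see `NormalizedResponse`, swapping the primary model means writing one new adapter; test doubles such as `StaticAdapter` let the routing rules run in automated regression tests without network calls.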

GPT-4.5 in Action

Marketing Brief Generation

System: You are a senior marketing strategist. Provide a 2-week campaign brief for a B2B SaaS product targeting mid-market HR teams.

User: [product details]

Why it works: GPT-4.5’s tone adaptation and clarity produce usable drafts that require light edits rather than full rewrites.
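To keep this brief reproducible across runs, the system and user messages can be frozen into a versioned template. A minimal sketch, assuming the common chat-message format of role/content dictionaries; the version tag, the `Product details:` prefix, and the `build_brief_messages` helper are hypothetical, and no API call is made here.

```python
from string import Template

# Frozen prompt template for the marketing-brief flow; version it alongside results.
BRIEF_TEMPLATE_VERSION = "marketing-brief-v1"  # hypothetical version tag
SYSTEM_PROMPT = (
    "You are a senior marketing strategist. Provide a 2-week campaign brief "
    "for a B2B SaaS product targeting mid-market HR teams."
)
USER_TEMPLATE = Template("Product details:\n$product_details")


def build_brief_messages(product_details: str) -> list[dict[str, str]]:
    """Return chat messages in role/content form, ready to send to a chat endpoint."""
    if not product_details.strip():
        raise ValueError("product_details must not be empty")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_TEMPLATE.substitute(product_details=product_details)},
    ]
```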

Case study: Code Generation & Review

- Use pass@1 and unit tests; always run the generated code inside CI.

- Save failing cases and tag whether failure is syntax, logic, or a missing edge-case.
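Running generated code against its unit tests and tagging the failure type can be automated up to a point. The sketch below, built around a hypothetical `run_and_tag` helper, separates syntax errors from assertion failures and other runtime errors. It runs the code in a child Python process, which isolates it from your harness but is not a security sandbox, so untrusted code should still run in a container or a locked-down CI job.

```python
import ast
import subprocess
import sys
import tempfile
from pathlib import Path


def run_and_tag(generated_code: str, test_code: str, timeout_s: float = 10.0) -> str:
    """Return "pass", "syntax", "logic" (assertion failed), "runtime", or "timeout".

    A missing edge case surfaces here as "logic"; telling the two apart still
    needs a human tag.
    """
    try:
        ast.parse(generated_code)  # catch syntax errors without executing anything
    except SyntaxError:
        return "syntax"
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate_test.py"
        script.write_text(generated_code + "\n\n" + test_code, encoding="utf-8")
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True, text=True, timeout=timeout_s, cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            return "timeout"
    if proc.returncode == 0:
        return "pass"
    return "logic" if "AssertionError" in proc.stderr else "runtime"
```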

Protecting Data with GPT-4.5: Security and Compliance Tips

- Run domain-specific red-team tests (PII handling, privacy leakage).

- For regulated domains (medical/legal), require HITL and explicit “do not rely solely” disclaimers.

- Keep logs and an incident response process for when the model fabricates or exposes sensitive content.

- Use system cards and safety docs as a baseline, but add domain-specific controls.
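A first-pass PII leakage check for red-team runs can scan model outputs for obvious patterns and redact them before they reach your logs. The two regular expressions here (email addresses and US-style phone numbers) are deliberately simple assumptions for illustration; real deployments need locale-aware rules or a dedicated PII detection service.

```python
import re

# Deliberately simple patterns (illustrative only).
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return {pattern_name: matches} for every pattern that matches the text."""
    found = {name: pattern.findall(text) for name, pattern in PII_PATTERNS.items()}
    return {name: matches for name, matches in found.items() if matches}


def redact(text: str) -> str:
    """Replace matches with typed placeholders before the text is written to logs."""
    for name, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED_{name.upper()}]", text)
    return text
```

A non-empty `find_pii` result on a red-team output is a natural trigger for the incident response process, while `redact` keeps the stored log line itself free of the leaked values.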

GPT-4.5 Features and How to Use Them Effectively

| Feature | GPT-4.5 | GPT-4 (stable) | Recommendation |
|---|---|---|---|
| Creative writing | Excellent | Good | Use GPT-4.5 for drafts & ideation |
| Chain-of-thought math | Fair | Better (reasoning model) | Use specialized reasoning models |
| Code generation | Very Good | Good | Use with unit tests/CI |
| Hallucination (measured) | Lower on many tests | Higher | Always validate domain data |
| Cost (inference) | Higher | Variable | Budget and test costs first |

Pros & Cons

Pros

- Strong creative & conversational generation.

- Lower hallucinations on many public tests.

- Better for multimodal workflows when file and image ingestion is available.

Cons

- Not a substitute for reasoning-specialist models in formal proofs.

- Higher compute cost and tiered access.

- Preview API lifecycle requires migration planning.

FAQs

A: It depends. GPT-4.5 improves creative fluency and often shows lower hallucination rates on public tests. But for stepwise formal proofs, reasoning-specialist models can still be better. Test on your data.

A: Preview variants were available to developers, but preview snapshots (like gpt-4.5-preview) have deprecation schedules. Avoid hard-coupling to preview endpoints for critical services; plan for fallbacks.

A: No. GPT-4.5 is strong for conversational and creative tasks, but reasoning-specialist models still outperform on formal multi-step problems.

A: Use closed-book QA with ground truth, require “I don’t know” for uncertainty, and use multi-rater human evaluation (3+ raters). Publish scripts and CSV files so that others can reproduce your work.

Final verdict

GPT-4.5 is a pragmatic, production-relevant family for many teams. It delivers cleaner creative outputs, improved multimodal pattern recognition, and measurable reductions in hallucination on many public evaluations. However, it is not a universal replacement for reasoning-specialist models. Treat GPT-4.5 as a powerful tool in your architecture — abstract model endpoints, run reproducible tests, and maintain migration plans.