Introduction

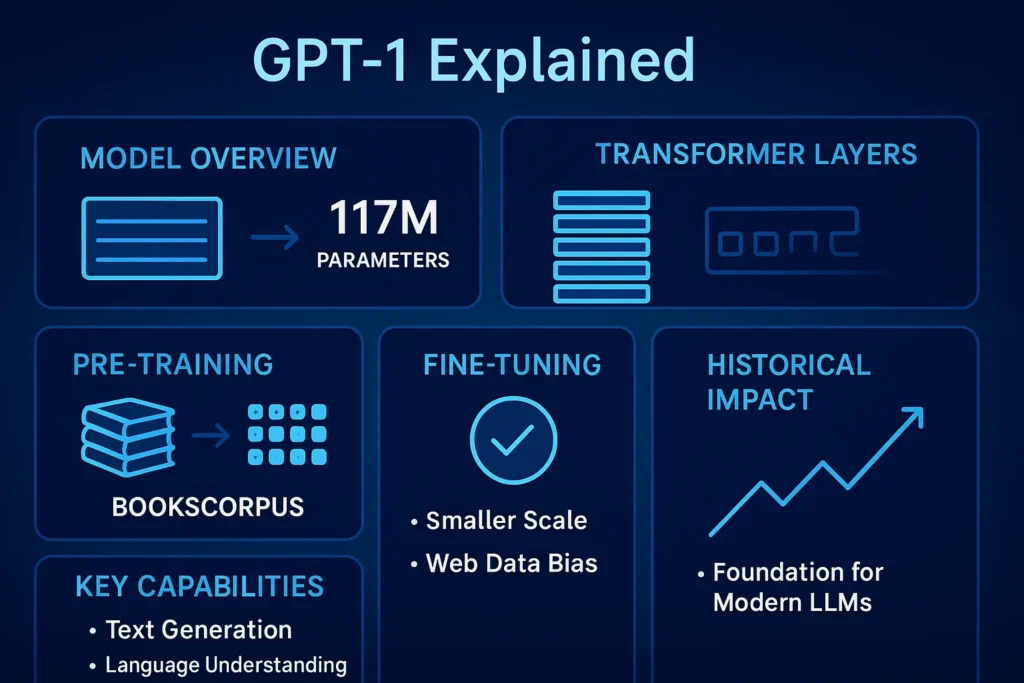

GPT-1 (Generative Pre-Trained Transformer 1) is OpenAI’s 2018 demonstration that unsupervised pre-training followed by supervised fine-tuning is a practical and powerful paradigm for natural language processing (NLP). It established a pipeline that decouples representation learning from task-specific adaptation. First, learn contextual token representations by optimizing a generative objective on a large unlabeled corpus. Then adapt those parameters to downstream tasks using modest labeled datasets. GPT-1 used a decoder-only Transformer trained with a causal language modeling objective. Although it is small by modern standards (~117 million parameters), GPT-1’s methodological contribution, the pre-train → fine-tune recipe, helped seed years of scaling experiments, instruction tuning methods, retrieval augmentation strategies, and efficient adaptation techniques.

This guide explains GPT-1 in NLP terms: architecture, optimization objective, dataset considerations, fine-tuning mechanics, limitations, and how researchers and practitioners build on the recipe today. It includes practical code-level pseudocode, fine-tuning heuristics, and publishing/SEO suggestions for a pillar article.

GPT-1 Quick Facts — At a Glance

- Paper: Improving Language Understanding by Generative Pre-Training (OpenAI, 2018)

- Release: June 2018 (paper + blog)

- Model family: GPT (decoder-only Transformer, autoregressive)

- Parameters: ≈ 117 million (GPT-1 base configuration)

- Objective: Causal (autoregressive) language modeling, i.e. maximize $\prod_t P(x_t \mid x_{<t})$

- Core idea: Learn transferable contextualized representations by pre-training a generative LM, then fine-tune for discriminative tasks.

- Primary contribution: Practical validation that transfer learning in NLP via unsupervised pre-training yields strong performance across many tasks.
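To make the objective concrete, here is a minimal sketch (not OpenAI’s code) that scores a token sequence under a causal model and returns the average of $-\log P(x_t \mid x_{<t})$. This is the cross-entropy loss minimized during pre-training. `toy_next_token_probs` is a hypothetical stand-in for a trained Transformer. It looks only at the previous token, whereas GPT-1 conditions on up to 512 preceding tokens.

```python
import math
from typing import Callable, Dict, List, Sequence


def toy_next_token_probs(prefix: Sequence[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a trained causal LM: returns P(next | prefix).

    A real GPT-1 runs the whole prefix through its decoder stack; this toy
    lookup table only uses the last token of the prefix.
    """
    table = {
        "<s>": {"the": 0.6, "a": 0.4},
        "the": {"cat": 0.5, "dog": 0.5},
        "a": {"cat": 0.3, "dog": 0.7},
        "cat": {"sat": 1.0},
        "dog": {"sat": 1.0},
    }
    return table[prefix[-1]]


def causal_lm_loss(tokens: List[str],
                   model: Callable[[Sequence[str]], Dict[str, float]]) -> float:
    """Mean of -log P(x_t | x_<t) over the predicted positions.

    tokens[0] is a start token that is given, not predicted. Each prediction
    sees only tokens[:t], which is what the causal attention mask enforces.
    """
    nll = 0.0
    for t in range(1, len(tokens)):
        probs = model(tokens[:t])           # condition on the past only
        nll -= math.log(probs[tokens[t]])   # add -log P(x_t | x_<t)
    return nll / (len(tokens) - 1)


print(round(causal_lm_loss(["<s>", "the", "cat", "sat"], toy_next_token_probs), 4))  # 0.4013
```

The logarithm turns the product into a sum. Minimizing this average over a corpus is therefore equivalent to maximizing $\prod_t P(x_t \mid x_{<t})$.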

Why GPT-1 Matters

Before GPT-1, many NLP systems used task-specific supervised learning pipelines with handcrafted features or architectures tuned per task. GPT-1 reframed the problem: learn a universal language model that captures syntax, semantics, and pragmatic cues from raw text, and reuse its internal representations across tasks. This approach reduced the dependence on large labeled datasets for every new task and unified multiple tasks under a single parameterized model.

From an NLP research lens, GPT-1’s value is methodological rather than purely performance-driven. It demonstrated that:

- Representation learning works for language. A generative objective yields features that, after fine-tuning, are useful for classification, entailment, question answering, and semantic similarity.

- Transfer learning reduces labeled-data needs. Fine-tuned models can achieve competitive performance with less annotated data than training from scratch.

- Simple architectures + strong pre-training are powerful. A decoder-only Transformer trained with an autoregressive loss yields broadly useful features.

GPT-1, therefore, catalyzed subsequent scaling experiments (GPT-2, GPT-3) and methodological evolutions (e.g., instruction tuning, retrieval augmentation, LoRA/adapters).

The original paper — core idea

GPT-1 proposes a two-stage training pipeline:

- Pre-training (unsupervised representation learning): Train a causal language model $p_\theta(x_t \mid x_{<t})$ over very large unlabeled corpora. The model learns to compress distributional statistics of syntax, semantics, and world knowledge into its parameters.

- Fine-tuning (supervised adaptation): Attach a task-specific head and continue gradient-based training on labeled datasets for downstream tasks. In the paper, this head is a single linear output layer. Structured inputs such as premise/hypothesis pairs are serialized into one token sequence with start, delimiter, and extract tokens. The language-modeling loss is kept as an auxiliary objective (weight λ = 0.5). A common modern variant uses a lower learning rate for pre-trained weights and a higher learning rate for newly initialized task heads.
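For reference, the GPT-1 paper writes the two stages as separate objectives. The notation below is lightly adapted. $\mathcal{U} = \{u_1, \dots, u_n\}$ is the unlabeled corpus, $k$ is the context window, $\Theta$ are the model parameters, and $\mathcal{C}$ is a labeled dataset of token sequences $x^1, \dots, x^m$ with labels $y$:

```latex
\begin{aligned}
L_1(\mathcal{U}) &= \sum_i \log P(u_i \mid u_{i-k}, \dots, u_{i-1}; \Theta) \\
P(y \mid x^1, \dots, x^m) &= \operatorname{softmax}(h_l^m W_y) \\
L_2(\mathcal{C}) &= \sum_{(x, y)} \log P(y \mid x^1, \dots, x^m) \\
L_3(\mathcal{C}) &= L_2(\mathcal{C}) + \lambda \, L_1(\mathcal{C}), \qquad \lambda = 0.5
\end{aligned}
```

Here $h_l^m$ is the final decoder block’s activation at the last input token. $W_y$, together with the embeddings for the delimiter tokens, are the only new parameters added for a task. The paper reports that keeping $L_1$ as an auxiliary term during fine-tuning improves generalization and speeds convergence.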

Intuitively, pre-training builds a function approximator that maps token contexts to rich representations; fine-tuning shapes those representations to produce task-specific outputs.

Architecture & training, in brief

Model family and block structure

- Architecture: Transformer decoder-only stack (autoregressive self-attention with masking to prevent future token access).

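- Blocks: 12 Transformer decoder layers (masked multi-head self-attention + position-wise feed-forward network), with 768-dimensional hidden states, 12 attention heads, and a 3,072-dimensional feed-forward inner layer.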

- Parameterization: ≈117M parameters in total, spread across embedding matrices, attention projections, MLP weights, and layer norm parameters.

- Positional encoding: Learned positional embeddings over a 512-token context, used instead of the sinusoidal encodings of the original Transformer.
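The ≈117M figure can be checked with a back-of-the-envelope count. The sketch assumes the sizes listed above: 12 layers, 768-dimensional states, a 3,072-dimensional feed-forward layer, and 512 positions. It adds three further assumptions: a 40,478-token vocabulary (the size used by the Hugging Face `openai-gpt` checkpoint), biases on every projection, and an output layer tied to the token embedding. `GPT1Config` and `count_params` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GPT1Config:
    # Assumed sizes; vocab matches the Hugging Face "openai-gpt" checkpoint.
    n_layer: int = 12
    d_model: int = 768
    d_ff: int = 3072
    n_ctx: int = 512
    vocab: int = 40_478


def count_params(cfg: GPT1Config) -> int:
    d, f = cfg.d_model, cfg.d_ff
    embeddings = cfg.vocab * d + cfg.n_ctx * d     # token + learned position embeddings
    attn = (d * 3 * d + 3 * d) + (d * d + d)       # fused Q/K/V projection + output projection
    mlp = (d * f + f) + (f * d + d)                # expand to d_ff, then project back
    layer_norms = 2 * (2 * d)                      # two LayerNorms (gain + bias) per block
    per_block = attn + mlp + layer_norms
    # The LM output layer is tied to the token embedding, so it adds nothing here.
    return embeddings + cfg.n_layer * per_block


print(f"{count_params(GPT1Config()):,}")  # 116,534,784 (about 117M)
```

The 12 attention heads do not appear in the count. Splitting 768 dimensions into 12 heads of 64 reshapes the projections without adding weights. Roughly a quarter of the total (about 31.1M) sits in the token embedding matrix.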

Training objective

- Loss: Cross-entropy for next-token prediction (causal LM).

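- Optimization: The paper used Adam with a maximum learning rate of 2.5e-4. The rate was warmed up linearly over the first 2,000 updates, then annealed to zero on a cosine schedule. Training ran for 100 epochs on minibatches of 64 contiguous 512-token sequences. Gradient accumulation is a common way to reach large effective batch sizes on smaller hardware.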

- Regularization: Dropout at a rate of 0.1 on residual, embedding, and attention layers, plus a modified L2 weight decay (w = 0.01 on non-bias, non-gain weights). During fine-tuning, careful hyperparameter choices also help preserve pre-trained knowledge.
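The warmup-plus-cosine schedule can be written as a small function. Peak learning rate and warmup length follow the values the paper reports. `total_steps` is an assumption you set from your own corpus size and batch size. `gpt1_lr` is a hypothetical helper, not a library API.

```python
import math


def gpt1_lr(step: int, total_steps: int,
            max_lr: float = 2.5e-4, warmup_steps: int = 2000) -> float:
    """Linear warmup from 0 to max_lr, then cosine annealing down to 0."""
    if step < warmup_steps:
        return max_lr * step / warmup_steps
    # Fraction of the post-warmup phase completed, clamped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return max_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


for s in (0, 1000, 2000, 51_000, 100_000):
    print(s, f"{gpt1_lr(s, total_steps=100_000):.3e}")
```

PyTorch’s `LambdaLR` multiplies the optimizer’s base rate by a factor. To use this schedule there, pass `lambda s: gpt1_lr(s, total) / 2.5e-4` with the base rate set to 2.5e-4.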

Data and corpora

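- Training data: GPT-1 was pre-trained on BooksCorpus, over 7,000 unpublished books. Its long stretches of contiguous text help the model learn long-range dependencies. Text was tokenized with byte-pair encoding (BPE) using 40,000 merges. Later GPT models moved to web-scale corpora, where filtering heuristics and deduplication become essential.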

- Compute: Large for the time, but modest compared to later work. OpenAI reported that pre-training took about a month on 8 GPUs, which was within reach for well-resourced labs in 2018.
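BPE builds a vocabulary by repeatedly merging the most frequent adjacent symbol pair. The toy merge learner below works in that style. It is not OpenAI’s tokenizer code. The `</w>` end-of-word marker and the tie-breaking rule (first pair encountered) are assumptions of this sketch.

```python
from collections import Counter
from typing import Dict, List, Tuple


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> List[Tuple[str, str]]:
    """Learn BPE merge rules from a word-frequency table (toy version)."""
    # Each word starts as its characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        pairs: Counter = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq                 # count adjacent pairs, weighted by frequency
        if not pairs:
            break                                   # every word is already a single symbol
        best = max(pairs, key=pairs.get)            # ties: first pair encountered wins
        merges.append(best)
        new_vocab: Dict[Tuple[str, ...], int] = {}
        for symbols, freq in vocab.items():
            out, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    out.append(symbols[i] + symbols[i + 1])   # apply the merge
                    i += 2
                else:
                    out.append(symbols[i])
                    i += 1
            new_vocab[tuple(out)] = new_vocab.get(tuple(out), 0) + freq
        vocab = new_vocab
    return merges


corpus = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
print(learn_bpe(corpus, 3))
```

GPT-1’s vocabulary came from 40,000 such merges. Production tokenizers add text normalization and fast merge application on top of this basic loop.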

Fine-tuning approach & practical mechanics

- Task head design: Attach small architectures for the downstream objective: a linear classification head, a token-level classifier for sequence labeling, or a fine-tuned decoder for generation tasks.

- Learning rates: Use a lower learning rate for pre-trained weights and a higher learning rate for task heads. This helps preserve generic linguistic representations while letting the new head adapt.

- Regularization & early stopping: With small labeled datasets, overfitting is a major risk. Use early stopping on a validation set and dropout. Add gradient clipping to keep updates stable.

- Batching & sequence length: Maintain tokenization consistency with pre-training and choose sequence length appropriate for the task (longer for document tasks; shorter for sentence classification).

- Evaluation: Use held-out validation sets, calibration for probabilistic outputs, and task-specific metrics (accuracy, F1, exact match).

- Catastrophic forgetting mitigation: If preserving general language understanding across many tasks is required, consider a lower LR, fewer fine-tuning steps, an auxiliary language-modeling loss (as GPT-1 used), or techniques like Elastic Weight Consolidation (EWC).
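For task head design, the GPT-1 paper avoids task-specific architecture. Structured inputs are serialized into token sequences that the pre-trained model already knows how to read. The sketch below mirrors that idea for three task types. The special-token strings are placeholders: the paper adds randomly initialized start, delimiter, and extract tokens. Inputs are shown as word lists rather than BPE ids for readability, and the function names are hypothetical.

```python
from typing import List

# Placeholder special tokens (illustrative strings, not the real vocabulary entries).
START, DELIM, EXTRACT = "<s>", "$", "<e>"


def entailment_input(premise: List[str], hypothesis: List[str]) -> List[str]:
    # One sequence; the linear head reads the hidden state at the final EXTRACT token.
    return [START, *premise, DELIM, *hypothesis, EXTRACT]


def similarity_inputs(a: List[str], b: List[str]) -> List[List[str]]:
    # The two texts have no natural order, so both orderings are encoded.
    # The paper adds their final representations element-wise before the head.
    return [[START, *a, DELIM, *b, EXTRACT], [START, *b, DELIM, *a, EXTRACT]]


def multiple_choice_inputs(context: List[str], answers: List[List[str]]) -> List[List[str]]:
    # One sequence per candidate (context = document + question);
    # the per-candidate scores are then softmax-normalized.
    return [[START, *context, DELIM, *ans, EXTRACT] for ans in answers]


print(entailment_input(["a", "man", "sleeps"], ["someone", "rests"]))
```

Because every task reduces to "read a sequence, classify at the last token," the same pre-trained weights serve all of them. Only the small output layer and the special-token embeddings are new.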

Key contributions — Distilled

- Pre-train + fine-tune works. Transfer from generative pre-training improves downstream task performance.

- Decoder-only setups are effective. Autoregressive models capture rich contextual statistics useful for classification tasks after adaptation.

- Simplicity scales. A simple training recipe provides a roadmap for scaling parameter counts, data, and compute.

- Generalizable features. Learned representations transfer across syntactic and semantic tasks and outperform the same architecture trained from random initialization.

Limitations & practical caveats

- Model size & capability: At ≈117M parameters, GPT-1 lacks the representational capacity that later, larger models bring to complex multi-step reasoning and long coherent generation.

- Bias and dataset noise: Pre-training on large uncurated text corpora exposes the model to social biases, toxic language, and factual errors. Without explicit curation or mitigation, these biases manifest in downstream outputs.

- Single-modality: GPT-1 is text-only; multimodal modeling requires additional architectural and data changes.

- Context window constraints: GPT-1’s 512-token context window limits performance on document-level tasks.

- Compute & reproducibility: While smaller than GPT-2/3, pre-training still demands significant compute and careful hyperparameter tuning for reproducible behavior.

Practical Lessons for Modern Practitioners

- Curate labeled fine-tuning data. Clean, representative data minimizes bias amplification and improves generalization.

- Use retrieval when needed. For factuality or long-tailed knowledge, couple the model with retrieval-augmented generation instead of relying purely on parametric memorization.

- Prefer efficient adaptation techniques for large models. Methods like LoRA, adapters, and prompt tuning reduce fine-tuning compute and storage costs for large backbones.

- Monitor for undesirable behavior. Evaluate safety metrics and bias tests before production deployment.
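To see why adaptation methods like LoRA (low-rank adaptation) save compute and storage, here is a framework-free sketch applied to one linear layer. The pre-trained weight W stays frozen. Only a low-rank update BA is trained, scaled by alpha/r. Following the LoRA paper’s initialization, A is random and B starts at zero, so the adapted layer initially reproduces the frozen layer exactly. `LoRALinear` is a hypothetical class for illustration; real projects would use a library such as Hugging Face PEFT on top of framework layers.

```python
import random
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B are trainable."""

    def __init__(self, W: Matrix, r: int, alpha: float = 1.0, seed: int = 0):
        d_out, d_in = len(W), len(W[0])
        rng = random.Random(seed)
        self.W = W                                                # frozen pre-trained weight
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in
        self.B = [[0.0] * r for _ in range(d_out)]                # d_out x r, zero init
        self.scale = alpha / r

    def __call__(self, x: List[float]) -> List[float]:
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))                 # low-rank update path
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1)
print(layer([1.0, 1.0]), layer.trainable_params())
```

Trainable parameters per layer are r × (d_in + d_out). For a 768 × 768 attention projection in a GPT-1-sized model with r = 8, that is 12,288 values instead of 589,824, about 2%.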

How GPT-1 Compares to GPT-2 and GPT-3 — Structured Comparison

| Feature | GPT-1 (2018) | GPT-2 (2019) | GPT-3 (2020) |
| --- | --- | --- | --- |
| Parameters | ~117M | 1.5B | 175B |
| Core idea | Pre-train → fine-tune | Scale + generative quality | Massive scale → few-/zero-shot abilities |
| Use cases | NLU via fine-tuning | Strong text generation | Broad productization: chat, code, few-shot tasks |
| Training data | BooksCorpus (~7,000 unpublished books) | WebText (~8M web pages, ~40 GB) | Filtered Common Crawl plus WebText2, books, and Wikipedia |
| Production readiness | Educational / research | Useful for generation tasks | Commercial API usage; widely deployed |

Takeaway: GPT-1 validated the recipe; GPT-2 demonstrated benefits of scale for generation; GPT-3 showed emergent few-shot capabilities at extreme scale.

Head-to-Head Feature Breakdown

- Flexibility: GPT-3 > GPT-2 > GPT-1

- Resource needs: GPT-1 < GPT-2 < GPT-3

- Fine-tuning ease: GPT-1/GPT-2 straightforward; GPT-3 often leverages prompt engineering or specialized fine-tuning.

- Real-world deployment: GPT-3 and later models are production-focused; GPT-1 is mainly historical/educational.
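The resource ordering follows directly from parameter count. The sketch below estimates the memory needed just to hold the weights, assuming 4 bytes per parameter in fp32 and 2 in fp16. Activations, optimizer state, and KV caches are ignored, so real requirements are higher, especially for training. The parameter counts are the approximate values from the comparison table above.

```python
# Approximate parameter counts from the comparison table.
PARAMS = {"GPT-1": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}


def weight_memory_gb(n_params: float, bytes_per_param: int = 4) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


for name, n in PARAMS.items():
    fp32 = weight_memory_gb(n)        # 4 bytes per parameter
    fp16 = weight_memory_gb(n, 2)     # 2 bytes per parameter
    print(f"{name}: {fp32:.2f} GB fp32, {fp16:.2f} GB fp16")
```

That works out to roughly 0.47 GB for GPT-1, 6 GB for GPT-2, and 700 GB for GPT-3 in fp32. GPT-1 therefore fits comfortably on a single consumer GPU or even a CPU for inference, while GPT-3’s weights must be spread across many accelerators.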

Notes: Keep tokenization consistent with pre-training; use gradient clipping and validation checks to prevent overfitting.

Fine-Tuning Checklist — Best Practices

| Step | Why it matters |
| --- | --- |
| Clean & filter labeled data | Reduces bias, label noise, and spurious correlations. |
| Lower LR for base model | Prevents catastrophic forgetting of pre-trained representations. |
| Add a small task head | Efficient adaptation with fewer parameters to learn. |
| Early stopping | Avoid overfitting on small datasets. |
| Evaluate on holdout | Honest performance estimation and generalization check. |
| Calibration | Improve probability estimates for downstream decision systems. |
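One common post-hoc way to handle the calibration step is temperature scaling. The GPT-1 paper does not use it. The method divides the fine-tuned classifier’s logits by a single scalar T fitted on the validation set. Dividing by any T > 0 leaves the argmax unchanged, so accuracy stays the same and only confidence is adjusted. The grid search and its 0.5 to 5.0 range are assumptions of this sketch; gradient-based fitting is also common.

```python
import math
from typing import Optional, Sequence


def mean_nll(logits: Sequence[Sequence[float]], labels: Sequence[int], T: float) -> float:
    """Mean negative log-likelihood of the labels under softmax(logits / T)."""
    total = 0.0
    for row, y in zip(logits, labels):
        scaled = [z / T for z in row]
        m = max(scaled)                                    # subtract max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scaled))
        total += log_sum_exp - scaled[y]                   # -log softmax(scaled)[y]
    return total / len(labels)


def fit_temperature(logits: Sequence[Sequence[float]], labels: Sequence[int],
                    grid: Optional[Sequence[float]] = None) -> float:
    """Return the temperature from the grid that minimizes validation NLL."""
    if grid is None:
        grid = [0.5 + 0.05 * i for i in range(91)]         # 0.5 to 5.0 (assumed range)
    return min(grid, key=lambda T: mean_nll(logits, labels, T))


# Illustrative validation logits from an overconfident 2-class model:
# three confident correct predictions and one confident mistake.
val_logits = [[10.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 0.0]]
val_labels = [0, 0, 1, 1]
print(fit_temperature(val_logits, val_labels))  # a value above 1 softens the probabilities
```

At inference time, divide the logits by the fitted T before the softmax.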

Short Real-World Examples

- Sentiment classification: Pre-train on large unlabeled text, then fine-tune on a labeled sentiment corpus. Expected result: typically better generalization and faster convergence than training from scratch.

- Paraphrase / NLI: Fine-tune pre-trained representations on GLUE-style tasks to reach competitive baselines in entailment and paraphrase detection.

- Domain chatbots (prototype): Fine-tune a small GPT model on domain transcripts for quick prototypes; for production, modern practice often combines larger backbones + retrieval.

Research Timeline & Influence

- 2018: GPT-1 proposes the pre-train → fine-tune recipe.

- 2019: GPT-2 demonstrates scaled generative quality (1.5B params).

- 2020: GPT-3 shows few-shot capabilities at extreme scale (175B params).

- 2020s: Proliferation of techniques: instruction tuning, RLHF, retrieval augmentation, parameter-efficient tuning (LoRA/adapters), multimodal models.

Pros & Cons of GPT-1

Pros

- Validated transferable pre-training for NLP.

- Simple decoder-only architecture that’s conceptually easy to explain.

- Lower compute footprint compared to later models — useful for teaching.

Cons

- Small capacity relative to modern LLMs.

- Vulnerable to biases inherited from large, uncurated pre-training text.

- Not competitive for complex generation or advanced reasoning tasks by today’s standards.

Should you use GPT-1 Today?

- Education & teaching: Yes — GPT-1 remains an excellent pedagogical example of transfer learning in NLP.

- Production: Generally no — prefer modern models, retrieval-augmented systems, or parameter-efficient adaptations of larger backbones.

- Edge/offline deployments: Maybe — distilled or smaller GPT-1–sized variants can be useful when compute is extremely constrained and high fidelity is not required.

Example Fine-Tuning checklist

- Prepare a clean, labeled dataset.

- Tokenize with the same tokenizer used during pre-training.

- Load pre-trained weights.

- Add task head.

- Use lower LR for base model, higher LR for head.

- Early stop on validation metrics.

- Evaluate & calibrate outputs.
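The early-stopping step can be sketched as a small framework-agnostic helper. `EarlyStopping` here is a hypothetical class written for this guide; Keras and PyTorch Lightning ship their own callbacks with similar `patience`/`mode` semantics. The accuracy values in the usage lines are made up for illustration.

```python
from typing import Optional


class EarlyStopping:
    """Signal a stop when the validation metric fails to improve for `patience` checks."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0, mode: str = "max"):
        if mode not in ("max", "min"):
            raise ValueError("mode must be 'max' or 'min'")
        self.patience, self.min_delta, self.mode = patience, min_delta, mode
        self.best: Optional[float] = None
        self.best_step: Optional[int] = None
        self.bad_checks = 0

    def _improved(self, value: float) -> bool:
        if self.best is None:
            return True
        if self.mode == "max":
            return value > self.best + self.min_delta
        return value < self.best - self.min_delta

    def step(self, value: float, step: int) -> bool:
        """Record one validation result; return True when training should stop."""
        if self._improved(value):
            self.best, self.best_step, self.bad_checks = value, step, 0
            # In a real loop, save a model checkpoint here and restore it at the end.
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


stopper = EarlyStopping(patience=2)
val_acc = [0.71, 0.78, 0.80, 0.79, 0.80, 0.77]   # illustrative values only
for epoch, acc in enumerate(val_acc):
    if stopper.step(acc, epoch):
        break
print(epoch, stopper.best, stopper.best_step)
```

Restoring the best checkpoint, rather than keeping the last weights, is what makes early stopping an effective guard against overfitting on small datasets.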

GPT-1 FAQs

What is GPT-1?

GPT-1 is OpenAI’s 2018 model and paper that demonstrated training a language model on raw text and then fine-tuning it for specific tasks. It showed that pre-training helps many tasks.

How many parameters does GPT-1 have?

The original GPT-1 model had about 117 million parameters.

Can I still use GPT-1 today?

Technically, yes. But for most real tasks, modern models (or retrieval-augmented systems) give much better results. Use GPT-1 for learning and small demos.

How did GPT-1 influence later models?

It popularized the pre-train + fine-tune approach. Later models scaled that idea and added instruction tuning and alignment methods like RLHF.


Visual & UX suggestions

- Feature image: 1280×720 timeline graphic showing GPT-1 → GPT-4. Filename: gpt-timeline-infographic-1280x720.png (ASCII "x" keeps the URL clean).

- Expandable blocks: Collapsible technical appendix for math and extra tables.

- Schema: Add FAQ schema and Article schema with author + publish date.

- Downloadables: Offer an infographic and a one-page cheat sheet (PDF) as gated assets for email capture.

Conclusion GPT-1

GPT-1 is a historically pivotal model: compact, conceptually clear, and influential. It shifted NLP toward representation learning via unsupervised pre-training followed by supervised adaptation. For practitioners, the recipe remains foundational: learn powerful representations with large unlabeled corpora, then adapt efficiently to downstream tasks using principled fine-tuning or parameter-efficient methods. For content creators, a pillar piece on GPT-1 should emphasize the method, provide technical appendices, include practical code, and offer shareable assets to attract developer and research audiences.