Introduction

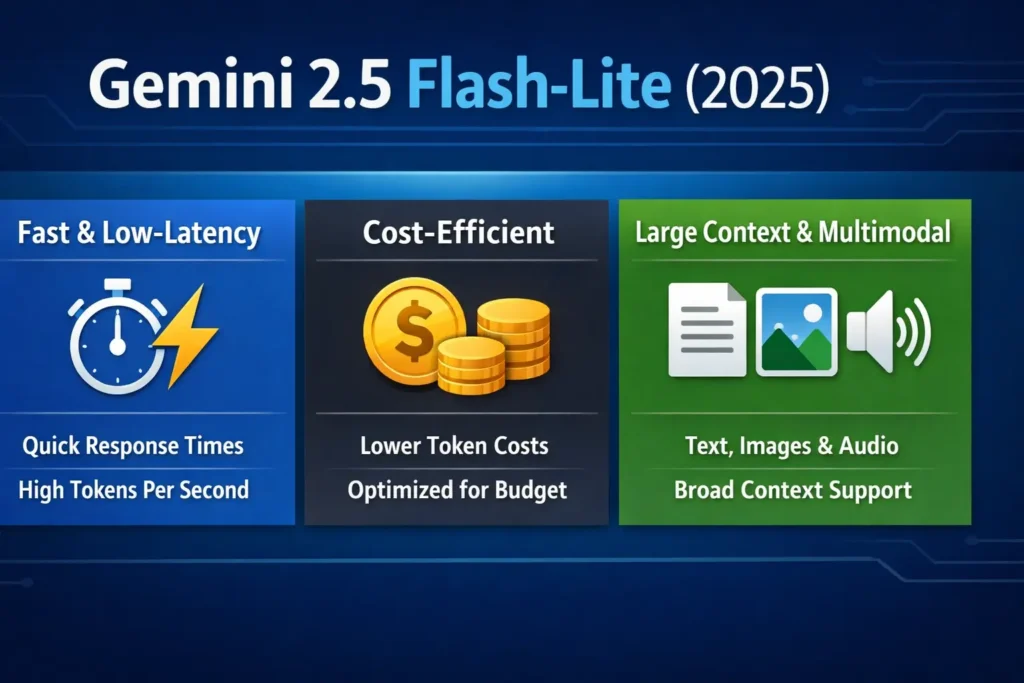

Gemini 2.5 Flash-Lite is Google’s latency-first, cost-aware member of the Gemini 2.5 family. From an NLP and production-engineering perspective, it’s a model variant tuned for minimal time-to-first-token (TTFB), high tokens/second throughput, and low per-token billing for workloads dominated by short requests. It preserves large context support and multimodal capability while trading some headroom in final-answer reasoning fidelity, so you get better responsiveness at lower cost. Flash-Lite is the pragmatic choice for routing/classification, streaming captioning, micro-summaries, and any high-QPS inference tier where latency and cost matter more than the last bit of deliberative reasoning.

Gemini 2.5 Flash-Lite — Migration Tips Google Won’t Tell You

From an NLP engineering vantage, Gemini 2.5 Flash-Lite is a production-focused variant of the Gemini 2.5 family designed for inference efficiency under traffic dominated by short requests. Architecturally, it remains in the same Transformer-based lineage and supports the same generative API patterns (causal decoding, streaming, multimodal inputs), but it has been tuned (at the optimizer/configuration level and possibly via parameter and layer sparsity/quantization tradeoffs) so that wall-clock latency and throughput per dollar are optimized for small to medium token exchanges.

Key Implications:

- Low TTFB makes applications feel more responsive, which is important for streaming and conversational use.

- High tokens/sec gives better sustained QPS economics when requests are short.

- Large context retention means you can still use retrieval-augmented generation (RAG) and long prompt contexts without forced chunking.

- Multimodality remains supported; you can send short image/audio + text pairs and receive fast replies.

- Tradeoffs: Slightly reduced peak reasoning quality compared to higher-capacity siblings (Flash, Pro). That tradeoff shows up as small lapses on long-chain reasoning, multi-step programming tasks, or subtle factuality where extra capacity matters.

Why this Matters: When your product has thousands to millions of tiny interactions per second—think intent classification, routing, short summarization, or live captions—Flash-Lite can materially reduce both latency and billing costs while maintaining acceptable output quality.

Quick Snapshot: Core Benefits

- Latency-first, cost-aware: Tuned to minimize TTFB and token cost for short inputs/outputs.

- Production-ready & widely available: Deployable through Google AI Studio, the Gemini API, and Vertex AI as gemini-2.5-flash-lite.

- Large context & multimodal: Retains long context windows and supports text, images, and audio—suitable for RAG and hybrid pipelines.

Release, Availability & Pricing

Flash-Lite is part of the Gemini 2.5 product family and is available through Google's cloud surfaces (AI Studio, the Gemini API) and the Vertex AI model catalog. In practice, you will see the model listed as gemini-2.5-flash-lite; on Vertex AI the full model path also includes your project and region. Check your Vertex model list in production to get the exact model path for your deployment.
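To make the region part concrete, here is a minimal sketch that assembles the Vertex AI publisher-model resource name and a regional REST URL. The project ID and region are placeholders, and the URL layout follows Vertex AI's publisher-model REST convention as we understand it; treat it as an assumption and confirm against your own Vertex model list.

```python
MODEL_ID = "gemini-2.5-flash-lite"

def vertex_model_path(project: str, region: str, model: str = MODEL_ID) -> str:
    """Vertex AI publisher-model resource name for a given project and region."""
    return f"projects/{project}/locations/{region}/publishers/google/models/{model}"

def vertex_generate_url(project: str, region: str, model: str = MODEL_ID) -> str:
    """Regional REST URL for a non-streaming generateContent call.

    Assumption: this covers regional endpoints only; the "global" location
    uses a different host and is not handled here.
    """
    host = f"https://{region}-aiplatform.googleapis.com/v1"
    return f"{host}/{vertex_model_path(project, region, model)}:generateContent"

if __name__ == "__main__":
    # "my-project" and "us-central1" are placeholder values.
    print(vertex_generate_url("my-project", "us-central1"))
```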

Important note on pricing: Cloud pricing has many moving pieces—regions, streaming vs batch, context caching, enterprise discounts—so treat published sample rates as planning guidance and verify via your Cloud Billing console for authoritative numbers.

Gemini 2.5 Flash-Lite Core Specs and Capabilities

What Flash-Lite targets

Flash-Lite targets low latency, high throughput, and low per-token cost. It is tuned for short, high-frequency inference and streaming scenarios where latency and tokens/sec dominate the cost/UX equation rather than the absolute top accuracy on complex reasoning tasks.

Context Window & Multimodality

Flash-Lite preserves the Gemini 2.5 family's large context window, allowing you to embed long transcripts or long message histories without forced chunking. Multimodal support (text+image, text+audio) is also preserved; however, to protect latency, preprocess images and audio on the client (resize, compress, transcode) and send only the metadata you need.

“Thinking” and step-by-step outputs

Gemini exposes optional thinking traces (intermediate reasoning tokens). While Flash-Lite supports them, verbose chain-of-thought increases token usage and undermines the low-latency goals. If your product requires a heavy, explicit chain-of-thought for correctness/audit, prefer the Flash or Pro variant.
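A minimal sketch of keeping thinking off for a latency-critical call via the Gemini API REST interface. It only builds the request (nothing is sent) and sets generationConfig.thinkingConfig.thinkingBudget to 0. The field names follow the public generateContent schema as we understand it; the API key, prompt, and output cap are placeholders, and you should confirm current defaults and allowed budget ranges for Flash-Lite in the official docs.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"
MODEL_ID = "gemini-2.5-flash-lite"

def build_request(prompt: str, api_key: str, thinking_budget: int = 0) -> urllib.request.Request:
    """Build (but do not send) a generateContent request.

    thinking_budget=0 asks the model to skip thinking tokens, which keeps
    latency and output-token spend low for short, classification-style calls.
    """
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "temperature": 0.0,          # deterministic-leaning decoding
            "maxOutputTokens": 64,       # illustrative cap for a short answer
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }
    return urllib.request.Request(
        url=f"{API_ROOT}/{MODEL_ID}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )

req = build_request("Classify the intent: 'where is my order?'", api_key="YOUR_API_KEY")
# Sending it would be: urllib.request.urlopen(req)
```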

Real-World Benchmarks

Why Independent Benchmarks Matter

Vendor numbers illustrate potential, but true production decisions must be based on reproducible, workload-specific benchmarks. Network jitter, region proximity, decoding strategy (streaming vs batched), prompt engineering differences, and client implementations create large variance. Benchmarking is the only way to quantify the latency/accuracy/cost tradeoffs for your workload.

Key Metrics to Measure

- Time to First Token (TTFB): measured from when the request is sent to when the first streamed token arrives. Critical for perceived latency.

- End-to-end latency: time until the final token arrives.

- Throughput (tokens/sec): steady-state decoding throughput.

- Requests/sec (RPS): useful when responses are short.

- p50 / p95 / p99 latencies: percentile analysis for SLA planning.

- Cost per useful response: combines token cost and accuracy (e.g., correct classifications per $1).

Pseudo-script:

- Warm up N requests.

- For each batch size, send M requests, log start/first/end timestamps, and token counts.

- Aggregate percentiles and compute tokens/sec and cost estimates.
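The pseudo-script can be sketched in Python as follows. To keep it self-contained, fake_stream is a hypothetical stand-in for a real streaming client (it just sleeps and yields tokens); swap in your SDK's streaming call and keep the timing logic. The concurrency levels play the role of "batch size", and percentiles come from the standard library's statistics.quantiles.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Iterator, List

def fake_stream(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model call; replace with your SDK."""
    time.sleep(random.uniform(0.001, 0.003))   # simulated time to first token
    for i in range(random.randint(5, 15)):
        time.sleep(0.0002)                     # simulated per-token decode time
        yield f"tok{i}"

def timed_call(stream_fn: Callable[[str], Iterable[str]], prompt: str) -> dict:
    """Log start / first-token / end timestamps and the token count."""
    start = time.perf_counter()
    first = None
    n_tokens = 0
    for _ in stream_fn(prompt):
        if first is None:
            first = time.perf_counter()
        n_tokens += 1
    end = time.perf_counter()
    if first is None:                          # empty response: treat TTFB as end-to-end
        first = end
    return {"ttfb": first - start, "e2e": end - start, "tokens": n_tokens}

def benchmark(stream_fn, prompts: List[str], concurrency: int = 1, warmup: int = 5) -> dict:
    for p in prompts[:warmup]:                 # warm-up calls are not recorded
        timed_call(stream_fn, p)
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        runs = list(pool.map(lambda p: timed_call(stream_fn, p), prompts))
    cuts = statistics.quantiles([r["e2e"] for r in runs], n=100)  # cuts[k - 1] ~ pk
    # Decode throughput: tokens after the first, over time after the first token.
    decode_tokens = sum(max(r["tokens"] - 1, 0) for r in runs)
    decode_time = sum(r["e2e"] - r["ttfb"] for r in runs)
    return {
        "requests": len(runs),
        "ttfb_p50": statistics.median(r["ttfb"] for r in runs),
        "e2e_p50": cuts[49],
        "e2e_p95": cuts[94],
        "e2e_p99": cuts[98],
        "decode_tokens_per_sec": decode_tokens / decode_time if decode_time > 0 else 0.0,
    }

for level in (1, 4):                           # sweep concurrency levels
    print(level, benchmark(fake_stream, [f"q{i}" for i in range(50)], concurrency=level))
```

Multiply the recorded token counts by your billing rates to turn the same logs into cost estimates.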

What to Expect

For Flash-Lite, community snapshots often report low TTFB (sub-second) and high tokens/sec under favorable conditions. Those numbers depend on the environment, so always reproduce them for your traffic pattern and deployment topology.

Head-to-Head: Gemini 2.5 Flash-Lite vs Flash vs Pro

| Feature | Gemini 2.5 Flash-Lite | Gemini 2.5 Flash | Gemini 2.5 Pro |
|---|---|---|---|
| Target workload | High-volume, low-latency | Balanced throughput + quality | Highest reasoning & accuracy |
| Time-to-first-token | Lowest / best | Low | Moderate |
| Tokens/sec | Highest (short requests) | Medium | Lower |
| Cost per token (example) | Lowest (planning rates) | Mid | Highest |
| Multimodal | Yes | Yes | Yes |
| Best for | Routing, classification, streaming | Large summarization, balanced tasks | Complex reasoning, code, deep analysis |

Note: Numerical values vary by test environment; use reproducible benchmarks.

Best Use Cases & Practical Workflows

Best fits for Flash-Lite

- Realtime classification & routing — micro-prompts for intent detection and routing are cheap and fast. Cache deterministic outputs.

- Streaming captioning & translation — low TTFB surfaces tokens quickly for real-time UI. Combine with client-side partial rendering and server post-editing.

- High-volume micro-summaries — many short summaries of messages, notifications, or text snippets.

- Edge/hybrid proxy flows — do an initial cheap pass in Flash-Lite and route low-confidence items to Flash/Pro for costly follow-up.

Example Workflows

Fast Routing Pipeline

- Short prompt → Flash-Lite classification (fast).

- If confidence < threshold → route to Flash/Pro.

- Log both outputs for continuous quality analysis and to retrain routing thresholds.
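The routing pipeline, sketched in Python under stated assumptions: lite and strong are hypothetical callables wrapping Flash-Lite and Flash/Pro that return a (label, confidence) pair, where the confidence comes from whatever calibrated signal you have available. The stubs exist only so the sketch runs. Each decision is logged with both outputs whenever escalation happens, which is the data you would later use to retune the threshold.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Classifier = Callable[[str], Tuple[str, float]]   # returns (label, confidence)

@dataclass
class Decision:
    label: str
    confidence: float
    model: str                                     # which tier produced the final label

def route(text: str, lite: Classifier, strong: Classifier,
          threshold: float, log: List[dict]) -> Decision:
    label, conf = lite(text)                       # fast first pass
    entry = {"text": text, "lite": (label, conf)}
    if conf < threshold:                           # low confidence: escalate
        s_label, s_conf = strong(text)
        entry["strong"] = (s_label, s_conf)
        decision = Decision(s_label, s_conf, "strong")
    else:
        decision = Decision(label, conf, "lite")
    log.append(entry)                              # keep outputs for threshold tuning
    return decision

# Hypothetical stubs standing in for real Flash-Lite and Flash/Pro calls.
def lite_stub(text: str) -> Tuple[str, float]:
    return ("order_status", 0.95) if "order" in text else ("other", 0.40)

def strong_stub(text: str) -> Tuple[str, float]:
    return ("billing", 0.90)

log: List[dict] = []
print(route("where is my order?", lite_stub, strong_stub, 0.7, log))
print(route("why was I charged twice?", lite_stub, strong_stub, 0.7, log))
```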

Live Streaming Captions

- The client performs minimal preprocessing and sends short audio chunks; the server forwards them to the Flash-Lite streaming endpoint; the client renders tokens as soon as they arrive; the server post-edits if necessary.
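A sketch of the client-side chunking step, assuming raw 16 kHz, 16-bit mono PCM audio and 500 ms chunks. Both numbers are illustrative choices, not Flash-Lite requirements. At that format one second of audio is 16,000 × 2 = 32,000 bytes, so each 500 ms chunk is 16,000 bytes.

```python
from typing import Iterator

SAMPLE_RATE_HZ = 16_000      # assumed capture rate
BYTES_PER_SAMPLE = 2         # 16-bit PCM
CHANNELS = 1                 # mono

def chunk_pcm(pcm: bytes, chunk_ms: int = 500) -> Iterator[bytes]:
    """Split raw PCM into fixed-duration chunks aligned to whole sample frames."""
    frame_bytes = BYTES_PER_SAMPLE * CHANNELS
    chunk_bytes = SAMPLE_RATE_HZ * frame_bytes * chunk_ms // 1000
    chunk_bytes = max(frame_bytes, chunk_bytes - chunk_bytes % frame_bytes)  # never split a frame
    for offset in range(0, len(pcm), chunk_bytes):
        yield pcm[offset:offset + chunk_bytes]

one_and_a_half_seconds = bytes(48_000)
print([len(c) for c in chunk_pcm(one_and_a_half_seconds)])   # [16000, 16000, 16000]
```

Shorter chunks surface the first caption tokens sooner but mean more requests per second of audio, so the chunk length is itself a latency/cost knob.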

Hybrid Caching + Retrieval

- Use embeddings + approximate nearest neighbor (ANN) retrieval for static context; Flash-Lite decodes fast on the retrieved context; cache top responses for repeated queries in Redis.
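A minimal, dependency-free sketch of this cache-aside pattern. An in-process dict stands in for Redis, brute-force cosine similarity stands in for an ANN index, and embed and generate are hypothetical callables you would back with your embedding model and Flash-Lite. The cache key hashes the normalized query, so trivial variations in case and whitespace share one entry.

```python
import hashlib
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]

def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

class CachedRAG:
    def __init__(self, docs: List[Tuple[str, Vector]], embed: Callable[[str], Vector],
                 generate: Callable[[str], str], k: int = 2):
        self.docs, self.embed, self.generate, self.k = docs, embed, generate, k
        self.cache: Dict[str, str] = {}          # stand-in for Redis
        self.misses = 0

    @staticmethod
    def cache_key(query: str) -> str:
        normalized = " ".join(query.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def answer(self, query: str) -> str:
        key = self.cache_key(query)
        if key in self.cache:                    # hit: no model call at all
            return self.cache[key]
        self.misses += 1
        qv = self.embed(query)
        top = sorted(self.docs, key=lambda d: cosine(qv, d[1]), reverse=True)[: self.k]
        context = "\n".join(text for text, _ in top)
        result = self.generate(f"Context:\n{context}\n\nQuestion: {query}")
        self.cache[key] = result
        return result

# Toy embedding and generator, for illustration only.
def toy_embed(text: str) -> List[float]:
    return [float(text.count("refund")), float(text.count("ship")), 1.0]

docs = [("Refunds take 5 days.", toy_embed("refund")), ("We ship worldwide.", toy_embed("ship"))]
rag = CachedRAG(docs, toy_embed, generate=lambda prompt: prompt.splitlines()[1], k=1)
print(rag.answer("How long does a refund take?"))
print(rag.answer("how long does a   REFUND take?"))   # served from the cache
print(rag.misses)
```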

Limitations & When Not to Use Flash-Lite

- You need nuanced multi-step reasoning or very high-fidelity factuality.

- You are doing deep code generation where final-answer quality matters more than latency.

- You need verbose chain-of-thought output for debugging or audit; thinking traces increase token burn.

- You rely on maximum hallucination resistance for high-stakes legal, medical, or financial outputs (use specialized safety pipelines and human review).

Tip: Hybrid routing (80–95% of traffic to the cheap model, 5–20% to a stronger one) often achieves the best overall cost/accuracy balance.

Gemini 2.5 Flash-Lite Migration Checklist & Optimization Recipes

Migration checklist

- Inventory request types: Label requests by token size, criticality, and latency sensitivity.

- Shadow traffic split: Shadow 5–20% of live traffic to Flash-Lite to compare latency, accuracy, and failure modes.

- Routing logic: Set thresholds for confidence and length to decide whether to escalate to Flash/Pro.

- Budget & throttles: Configure automatic throttles and budget alarms.
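A sketch of two checklist items, assuming you control the request path: a deterministic hash-based shadow sampler (a given request ID always lands in or out of the shadow set, so comparisons are reproducible) and a token-bucket throttle paired with a simple budget alarm. The function and class names, the 10% default, and the alarm fraction are illustrative, not values from Google.

```python
import hashlib
import time

def in_shadow(request_id: str, fraction: float = 0.10) -> bool:
    """Deterministically select about `fraction` of requests for shadowing."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64   # roughly uniform in [0, 1)
    return bucket < fraction

class TokenBucket:
    """Allow `rate` requests/sec on average, with bursts up to `capacity`."""
    def __init__(self, rate: float, capacity: float):
        self.rate, self.capacity = rate, capacity
        self.tokens = capacity
        self.updated = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class BudgetGuard:
    """Accumulate spend and report when it crosses an alarm fraction of the budget."""
    def __init__(self, budget_usd: float, alarm_at: float = 0.8):
        self.budget, self.alarm_at, self.spent = budget_usd, alarm_at, 0.0

    def record(self, cost_usd: float) -> bool:
        self.spent += cost_usd
        return self.spent >= self.alarm_at * self.budget   # True means raise an alarm

share = sum(in_shadow(f"req-{i}") for i in range(10_000)) / 10_000
print(f"shadowed share: {share:.3f}")
```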

Optimization Recipes

- Micro-prompt engineering: Shorten system instructions and normalize text. Precompute embeddings where possible.

- Context trimming: Send only essential slices or use retrieval + condensed context.

- Confidence gating: When confidence is low, escalate to Flash/Pro. Use multiple lightweight signals (logit entropy, softmax peak, calibrated probabilities).

- Hybrid caching + retrieval: Store top-K responses in Redis; call the model only on cache misses.

- Preprocess media: Resize images, transcode audio; avoid sending unnecessary metadata.

- Decode strategy: Prefer greedy or low-temperature sampling for deterministic cases; use higher temperature or nucleus (top-p) sampling for creative outputs (but weigh the cost/latency tradeoffs).
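The confidence-gating signals can be computed from a probability distribution over candidate labels. How you obtain that distribution is an assumption here (for example, token log-probabilities where your endpoint exposes them, or a calibrated classifier score), and the thresholds are illustrative values to tune on shadow-traffic logs. The same block also shows what nucleus (top-p) filtering does to a distribution, to make the decode-strategy tradeoff concrete.

```python
import math
from typing import Dict

def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    m = max(logits.values())                       # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}

def entropy_bits(probs: Dict[str, float]) -> float:
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def should_escalate(probs: Dict[str, float], min_peak: float = 0.7,
                    max_entropy_bits: float = 1.0) -> bool:
    """Escalate to Flash/Pro if the top label is weak or the distribution is flat."""
    return max(probs.values()) < min_peak or entropy_bits(probs) > max_entropy_bits

def top_p_filter(probs: Dict[str, float], p: float = 0.9) -> Dict[str, float]:
    """Keep the smallest most-probable set whose mass reaches p, then renormalize."""
    kept, mass = {}, 0.0
    for label, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[label] = prob
        mass += prob
        if mass >= p:
            break
    return {k: v / mass for k, v in kept.items()}

confident = softmax({"billing": 4.0, "shipping": 1.0, "other": 0.5})
uncertain = softmax({"billing": 1.1, "shipping": 1.0, "other": 0.9})
print(should_escalate(confident), should_escalate(uncertain))   # False True
```

Greedy decoding corresponds to always taking the single most probable item; top-p keeps more candidates in play, which adds variety but also makes outputs less repeatable.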

Pricing & Worked Cost Model

Example request: 40 input tokens + 100 output tokens = 140 tokens total.

Sample Planning Rates

- Input: $0.10 per 1,000,000 tokens

- Output: $0.40 per 1,000,000 tokens

Cost math (per request):

- Input cost = 40 / 1,000,000 * $0.10 = $0.000004

- Output cost = 100 / 1,000,000 * $0.40 = $0.00004

- Total ≈ $0.000044 per request
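The same arithmetic as a small function, using the sample planning rates above as defaults (planning figures only; substitute the rates from your Cloud Billing console). Decimal keeps the result free of floating-point noise.

```python
from decimal import Decimal

PER_MILLION = Decimal(1_000_000)

def request_cost(input_tokens: int, output_tokens: int,
                 input_rate: Decimal = Decimal("0.10"),
                 output_rate: Decimal = Decimal("0.40")) -> Decimal:
    """Cost in USD for one request; rates are USD per 1M tokens."""
    return (input_tokens * input_rate + output_tokens * output_rate) / PER_MILLION

print(f"{request_cost(40, 100):.6f}")   # 0.000044
```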

If you serve 1,000 QPS sustained:

- Requests per month ≈ 1,000 * 3600 * 24 * 30 ≈ 2.59 billion

- Multiplying by the cost per request gives gross model spend (2.59 billion * $0.000044 ≈ $114,000 per month at the sample rates); add networking, data egress, storage, retries, and observability costs to estimate the full bill.

Practical Advice: Simulate with real traffic, include cache hit rates, and model the percentage of requests escalated to stronger models.
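A rough monthly model that follows this advice. It applies a cache hit rate (hits are treated as free, which ignores the cost of the cache itself) and an escalation fraction, where escalated requests pay for both the Flash-Lite pass and a stronger-model call. The stronger-model per-request cost used below is a placeholder, not a published price.

```python
SECONDS_PER_MONTH = 3600 * 24 * 30

def monthly_spend(qps: float, lite_cost: float, strong_cost: float,
                  cache_hit_rate: float = 0.0, escalation_rate: float = 0.0) -> float:
    """Estimated monthly model spend in USD (model tokens only)."""
    requests = qps * SECONDS_PER_MONTH
    model_calls = requests * (1 - cache_hit_rate)          # only misses reach the model
    per_call = lite_cost + escalation_rate * strong_cost    # escalations pay for both calls
    return model_calls * per_call

lite_only = monthly_spend(1_000, lite_cost=0.000044, strong_cost=0.0)
with_cache_and_escalation = monthly_spend(
    1_000, lite_cost=0.000044, strong_cost=0.0005,          # placeholder strong-model cost
    cache_hit_rate=0.30, escalation_rate=0.10)
print(f"Lite only: ${lite_only:,.0f}/month")
print(f"30% cache hits, 10% escalation: ${with_cache_and_escalation:,.0f}/month")
```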

Short case study

Several engineering teams used Flash-Lite during preview for latency-sensitive flows. Examples (abstracted):

- Telemetry parsing: Satellite telemetry messages were parsed with Flash-Lite for immediate anomaly detection; suspected anomalies were escalated to Flash/Pro for triage. Result: lower inference cost and faster detection windows.

- Multi-language video translation: Per-chunk streaming translation leveraged Flash-Lite to seed captions quickly; more expensive models post-processed for grammar and fluency.

These real usage patterns highlight the sweet spot: a cheap, fast initial pass with selective escalation.

Pros & Cons

Pros

- Extremely low TTFB for short requests.

- Low per-token planning cost.

- Retains large context + multimodal support.

Cons

- Slightly lower reasoning/complex-task accuracy vs Pro.

- Public benchmarks vary—must validate with your prompts.

- Billing caveats and regional differences require careful accounting.

FAQs

A: At launch, Flash-Lite's sample rates were the lowest per-token rates in the Gemini 2.5 family for many text workloads (e.g., $0.10 input / $0.40 output per 1M tokens), but billing tiers, streaming vs. batch, and discounts can change the effective price. Always check Cloud Billing for authoritative prices.

A: Yes — Flash-Lite retains multimodal capabilities of the Gemini 2.5 family. Performance depends on input preprocessing and endpoint configuration. Test in your pipeline.

A: For very long, high-quality summaries requiring deep multi-step reasoning and careful factuality, prefer Flash or Pro. Flash-Lite is best for many short/medium tasks where latency and cost matter.

A: Shorten prompts, cache frequent responses, batch small requests, and route complex prompts to larger models. Monitor cost per useful response, not just raw token spend.

Conclusion

Gemini 2.5 Flash-Lite is a production-grade, latency-optimized model that shines for high-volume, short-request NLP workloads. It is not a universal replacement for higher-capacity models; rather, it is a deliberate tradeoff: faster, cheaper inference for simple, short interactions, with the option to escalate challenging cases to stronger models. The best approach is empirical: run reproducible benchmarks, use hybrid routing, and adopt aggressive caching and careful engineering to maximize the cost and latency gains.