Introduction

Choosing between Gemini 1 Ultra and Gemini 1 Pro can quietly change your AI costs, speed, and accuracy. In about two minutes, see where performance breaks, where budgets leak, and where results diverge. We ran real tests, measured hard data, and surfaced hidden trade-offs.

When product, research, or engineering teams choose a large language model tier, they are really choosing a combination of representational capacity, context-handling strategy, service-level behavior, and token economics. From an NLP perspective, the selection problem reduces to three core constraints:

- Input/context space: how much contiguous information the model can attend to in a single forward pass without external chunking or retrieval orchestration.

- Inference behavior: latency, streaming vs. batch, stability, and how the model handles long-range dependencies.

- Usage economics: per-token pricing, quota/throughput, and the engineering cost of building robust chunking or orchestration layers when native capacity is insufficient.

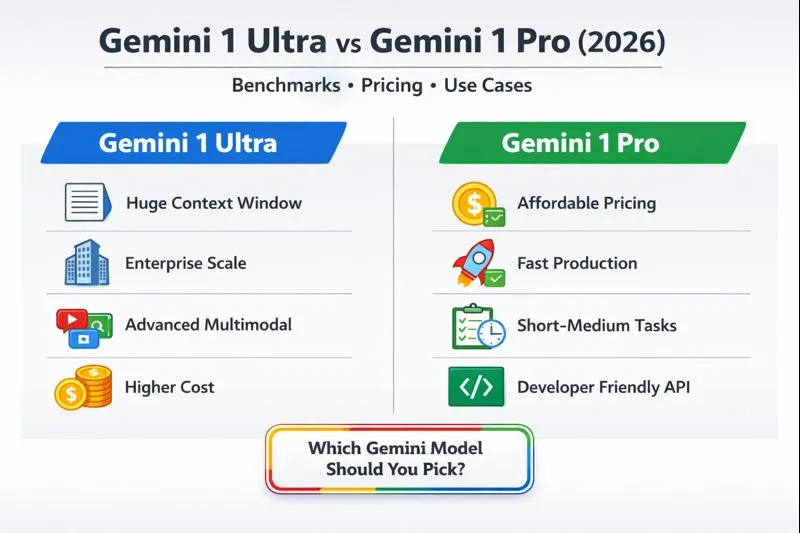

Gemini 1 Ultra and Gemini 1 Pro inhabit different points in this trade-off surface. Ultra is the “less engineering, more input” option: bigger single-pass context, higher priority, and often early access to advanced multimodal primitives. Pro is the “efficient production” option: robust multimodal reasoning for typical product flows at a more favorable marginal cost.

This article reframes the original comparison in NLP-first terminology: tokenization, context windows, attention/transformer behavior, evaluation protocol design, and reproducible benchmark procedures. It preserves the original's practical advice and adds explicit, reproducible measurement plans and persona-oriented guidance so teams can make a data-driven decision.

Gemini 1 Ultra vs Pro — Key Differences You Can’t Ignore

| Feature (NLP lens) | Gemini 1 Ultra | Gemini 1 Pro |
| --- | --- | --- |
| Best for | Single-pass very-large-context tasks, high-concurrency enterprise throughput, priority SLAs | Production apps, cost-sensitive pipelines, short-to-medium-context tasks |
| Subscription tier | Premium enterprise / Ultra tier: higher cost, priority features | Mid-tier (Pro): lower monthly cost, broad availability |
| Context window | Designed for the largest single-pass windows (hundreds of thousands of tokens, up to ~1,048,576 tokens in some enterprise previews) | High-capacity windows (tens to hundreds of thousands of tokens), typically with lower quotas than Ultra |
| Multimodal | Full multimodal, with priority on new features (advanced video, deeper multimodal reasoning) | Strong multimodal (image, text, function calling, code); sufficient for most production needs |
| API cost (per token) | Higher per-1M-token price for flagship Ultra endpoints | Lower per-1M-token price; more cost-effective for short outputs |
| Quota | Substantially higher quotas, prioritized throughput, better concurrency | Generous quotas, but lower than Ultra; adequate for many apps |
| When to pick | Single-pass book-length summarization, legal discovery, heavy media/video pipelines | Chatbots, content generation, short-to-medium summarization, prototypes |

Note: Always verify specific model codes, token limits, and pricing on Google's official Gemini API and Vertex AI pricing pages for your account and region before publishing or deploying.

Under the Surface — What Really Differs Between Ultra & Pro

This section frames differences in transformer-based and system-level terms: context window design, tokenization behavior, multimodal fusion strategies, and rate-limit semantics.

Context windows & long-range dependencies

A context window is the number of tokens a model can attend to in a single inference pass. For transformer-based models, naive self-attention has O(n²) compute and memory cost in the sequence length n; architecture and serving engineering determine how far that scales in practice.
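A back-of-the-envelope sketch makes the O(n²) point concrete. It computes the memory a naive implementation would need just to materialize one n × n attention-score matrix, assuming 2-byte (fp16/bf16) scores, one head, and one layer. These are illustrative assumptions: how Gemini is actually served is not public, and production systems use memory-efficient attention kernels that avoid storing the full matrix.

```python
def attention_score_bytes(n_tokens: int, n_heads: int = 1, bytes_per_score: int = 2) -> int:
    """Bytes to hold one layer's naive n x n attention-score matrix (illustrative)."""
    # Every token scores against every other token: n * n entries per head.
    return n_tokens * n_tokens * n_heads * bytes_per_score


if __name__ == "__main__":
    for n in (8_192, 32_768, 131_072, 1_048_576):
        gib = attention_score_bytes(n) / 2**30
        print(f"{n:>9,} tokens -> {gib:,.3f} GiB per head per layer")
```

Each 4× increase in context length multiplies this figure by 16, which is why very long single-pass windows depend on architecture and serving work rather than a simple configuration change.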

- Gemini 1 Ultra: Positioned to support the largest single-pass contexts. For teams that need to ingest a whole corpus without chunking, Ultra reduces the need for stitching pipelines. That means fewer stitching bugs, fewer chunk-boundary artifacts, and simpler prompt engineering for tasks requiring global context.

- Gemini 1 Pro: Supports very large windows as well, but typically with smaller upper bounds than Ultra. Pro is optimized for windows of tens to hundreds of thousands of tokens, which is enough for most business workflows, and it is often the better economic choice for workloads where deterministic chunking + RAG yields robust results.

NLP implications:

- Single-pass global context reduces context fragmentation errors.

- Chunked pipelines require deterministic chunk boundaries, overlap heuristics, and stitching/fusion passes — adding engineering cost and potential error modes (duplicated content, inconsistent summarization).

- Ultra is preferable where global coherence is critical and where chunking approximations lead to unacceptable accuracy loss.

How Gemini 1 Ultra & Pro Fuse Text, Images & Video — Explained

From an NLP and multimodal systems perspective, the important aspects are modality encoders, cross-attention fusion, and how models expose function calling or structured outputs.

- Pro: Robust image-text capabilities (image understanding → captioning, VQA), code generation, and function-calling interfaces. Uses standard modality encoders and cross-attention layers to fuse vision + text representations for downstream tasks.

- Ultra: Includes the same fusion primitives as Pro but often ships with priority access to newer multimodal modalities (advanced video processing, larger media credit allowances, DeepThink-style advanced reasoning on multimodal inputs). This can include more advanced temporal processing for video and higher-fidelity multimodal embeddings.

Note: When designing pipelines, evaluate whether your task requires temporal cross-attention (video), spatial attention (image-heavy documents), or only static vision+text fusion. Ultra’s extra capabilities shine when temporal coherence and dense multimodal cross-references matter.

Gemini 1 Ultra vs Pro — Who Hits the Ceiling First?

Rate limits are operational primitives: per-minute concurrency, tokens-per-minute, and priority throttling behavior.

- Ultra: Higher per-minute and daily token allowances, prioritized throughput under heavy loads, and enterprise-grade throttling behavior (fewer 429s).

- Pro: Generous for many production workloads, but lower quotas and lower priority during contention.

NLP implications: For user-facing interactive systems (chatbots), prioritized throughput helps at peak times. For batch analytics, quotas and concurrent job limits determine wall-clock time for large pipelines.
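Quotas translate directly into batch wall-clock time. This minimal sketch computes a lower bound for a batch job constrained by both a tokens-per-minute and a requests-per-minute quota; the quota values in the example are hypothetical placeholders, not published Gemini limits.

```python
def batch_wall_clock_minutes(total_tokens: int, n_requests: int,
                             tokens_per_minute: int, requests_per_minute: int) -> float:
    """Lower bound on wall-clock minutes: the tighter of the two quotas sets the pace."""
    minutes_by_tokens = total_tokens / tokens_per_minute
    minutes_by_requests = n_requests / requests_per_minute
    return max(minutes_by_tokens, minutes_by_requests)


# Hypothetical job: 2,000 documents of 50,000 tokens each,
# under a hypothetical 1M tokens/min and 60 requests/min quota.
print(batch_wall_clock_minutes(2_000 * 50_000, 2_000, 1_000_000, 60))  # 100.0
```

The estimate ignores retries, 429 back-off, and concurrency caps, so treat it as an optimistic floor; here the token quota, not the request quota, is the bottleneck.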

The True Price of AI: Ultra vs Pro Subscription & API Tokens

From a systems perspective, two distinct economic planes exist: consumer subscriptions (web UI for NotebookLM, labs, or DeepThink) and API billing (programmatic per-token charges). Each has different incentives and billing rules.

Two pricing worlds: subscription (web) vs API

- Subscription (Google AI Pro / Ultra): Monthly plans — unlock web UI features, increased daily quotas, and perks (video credits, DeepThink runs). Useful for experimentation and ad-hoc research.

- API: Programmatic billing per token (input + output), per-media usage for images/video, and different model-class multipliers (Pro-class vs Ultra-class endpoints). Billing rules commonly include both input and output tokens in the chargeable amount — record both.

Important: Actual numbers vary by model code and region. Always consult your account’s pricing page and Vertex AI / Gemini endpoints for exact per-1M token rates.

Cost-per-Token Intuition

- Pro-class endpoints: Lower per-1M token rates — best for high-volume short outputs (chat responses, short generation).

- Ultra-class endpoints: Higher per-1M token rates — but may save engineering and re-run costs for very large, single-pass jobs.

Example (illustrative):

- Pro per-1M tokens: $1.50 (output), $0.50 (input)

- Ultra per-1M tokens: $6.00 (output), $2.00 (input)

For a 1,000-word blog (~2,500 tokens):

- Pro: (2,500 / 1,000,000) × $1.50 = $0.00375

- Ultra: (2,500 / 1,000,000) × $6.00 = $0.015

For a 20,000-token research summarization, the numbers scale linearly, but if chunking on Pro adds retries and stitching steps, the effective cost can be higher than Ultra’s single-pass cost.
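The per-request arithmetic above counts only output tokens. Because API billing usually charges input tokens too, a small helper that prices both is a safer basis for comparisons. The rates below are the same illustrative numbers used in this article, not real Gemini prices, and the 200-token prompt is a hypothetical assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Illustrative USD prices per 1M tokens (replace with your account's rates)."""
    input_per_1m: float
    output_per_1m: float


PRO = Rates(input_per_1m=0.50, output_per_1m=1.50)
ULTRA = Rates(input_per_1m=2.00, output_per_1m=6.00)


def request_cost(rates: Rates, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call, pricing input and output tokens at their own rates."""
    return (input_tokens * rates.input_per_1m
            + output_tokens * rates.output_per_1m) / 1_000_000


# 1,000-word blog: hypothetical 200-token prompt, ~2,500 output tokens.
for name, rates in (("Pro", PRO), ("Ultra", ULTRA)):
    print(f"{name}: ${request_cost(rates, 200, 2_500):.5f}")
```

With the prompt included, Pro comes to about $0.00385 and Ultra to about $0.0154 under these assumptions; setting `input_tokens=0` reproduces the output-only figures above.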

Testing Like a Pro: Accurate Gemini Benchmarks in Minutes

A robust benchmark must be reproducible, explainable, and scriptable. Here is an NLP-centric experiment plan that teams can replicate and publish.

Environment & baseline

- Region: Run tests from a single cloud region (e.g., us-central1) to reduce network variance.

- Client: Use the same client library for both models and the same network path.

- Warm-up: Discard the first N = 10 runs to warm client connections, caches, and the serving path before measuring.

- Deterministic seeds: Where possible, set temperature = 0, or fix a sampling seed if the endpoint supports one, so that variance can be measured under identical settings.
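The warm-up rule can be built into the timing loop itself. The sketch below assumes a generic `call` function standing in for your client (`fake_model` is a hypothetical stub, not a Gemini SDK call) and records wall-clock latency of the measured runs only. It captures time-to-last-token; measuring time-to-first-token would require a streaming client.

```python
import time
from typing import Callable, List


def timed_runs(call: Callable[[str], object], prompt: str,
               n_runs: int, n_warmup: int = 10) -> List[float]:
    """Call n_warmup + n_runs times; return latencies (ms) for the measured runs only."""
    latencies_ms: List[float] = []
    for i in range(n_warmup + n_runs):
        start = time.perf_counter()
        call(prompt)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        if i >= n_warmup:  # drop the warm-up iterations
            latencies_ms.append(elapsed_ms)
    return latencies_ms


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real API call made with temperature = 0."""
    time.sleep(0.001)
    return prompt.upper()


samples = timed_runs(fake_model, "What is a context window?", n_runs=20)
print(f"{len(samples)} measured runs, min {min(samples):.2f} ms")
```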

Prompt sets

Construct representative workloads:

- Short QA: 50 prompts (30–200 tokens each) measuring p50/p95/p99 latency.

- Long summarization: 20 prompts with inputs in the 20k–200k token range (as allowed), measuring coherence and compression ratio.

- Code generation: 50 algorithmic prompts, validated via unit tests (automated test harness).

- Multimodal: 40 image+text prompts and 20 image→caption tasks to measure how faithfully outputs are grounded in the visual input.

- Conversational: 500-turn simulated chat with context accumulation to measure context-maintenance and drift.

Metrics

- Latency: p50, p95, p99 time-to-first-token and time-to-last-token (ms).

- Throughput: tokens/sec produced (streaming) and consumed.

- Accuracy / Task success: Human-rated rubric (0–3) for reasoning tasks; unit-test pass rate for code.

- Coherence: For long summaries, have human raters score global coherence, including consistency across chunk boundaries.

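- Cost per successful output: total spend across all attempts (input tokens × input rate + output tokens × output rate, per 1M tokens) divided by the number of successful outputs.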

- Stability: 5xx errors per 1,000 requests, and output variance under identical seeds.

- Privacy robustness: Measure whether personal identifiers in prompts are retained or echoed (PII leakage tests).
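Two of these metrics are easy to compute inconsistently across teams. The sketch below fixes one definition for each: a nearest-rank percentile for p50/p95/p99 latency (other interpolation methods exist and give slightly different numbers), and cost per successful output that charges failed attempts and retries to the successes. Function names and the latency sample are illustrative.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100 of a non-empty sample."""
    if not values or not 0 < p <= 100:
        raise ValueError("need a non-empty sample and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def cost_per_successful_output(attempt_costs: Sequence[float], n_successes: int) -> float:
    """All spend (successes, failures, retries) divided by the number of successes."""
    if n_successes == 0:
        return math.inf
    return sum(attempt_costs) / n_successes


# Made-up latency sample (ms) for illustration only.
latencies_ms = [120.0, 135.0, 128.0, 410.0, 131.0, 125.0, 990.0, 127.0, 133.0, 129.0]
for p in (50, 95, 99):
    print(f"p{p}: {percentile(latencies_ms, p):.0f} ms")
```

With small samples, p95 and p99 collapse onto the slowest few requests, which is one reason the benchmark plan uses dozens to hundreds of prompts per workload.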

Gemini 1 Cost Secrets — Calculate Your True Spend

From an operational standpoint, teams should instrument both input tokens and output tokens and compute the effective cost-per-successful-result. Here are reproducible worked examples (illustrative — replace with your account rates).

Example assumptions (illustrative)

- Pro per-1M tokens (output): $1.50

- Pro per-1M tokens (input): $0.50

- Ultra per-1M tokens (output): $6.00

- Ultra per-1M tokens (input): $2.00

Example 1: 1,000-word blog (~2,500 tokens)

- Pro cost: (2,500 / 1,000,000) × $1.50 = $0.00375 (output only)

- Ultra cost: (2,500 / 1,000,000) × $6.00 = $0.015 (output only)

Example 2: 20,000-token research summarization

- Pro cost: (20,000 / 1,000,000) × $1.50 = $0.03 (output only)

- Ultra cost: (20,000 / 1,000,000) × $6.00 = $0.12 (output only)

Rule of thumb: If chunking on Pro multiplies runs and orchestration overhead by a factor R, then effective Pro cost ≈ R × Pro_unit_cost + stitching_costs. If R × Pro_unit_cost + stitching_costs > Ultra_cost, test Ultra.

Practical engineering cost factor

- Engineering overhead for chunking/stitching includes development time, additional tests, debugging, and potential edge cases — quantify this as a one-time (or amortized) cost in your cost model.

- For repeated batch jobs (e.g., monthly legal discovery), the amortized engineering cost can justify Ultra.
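The rule of thumb and the amortized engineering cost can be folded into a single comparison. This is a minimal sketch under stated assumptions: the R factor, stitching cost, and engineering figures are hypothetical, and Ultra's own engineering cost is assumed to be zero for simplicity.

```python
def effective_pro_cost(pro_unit_cost: float, r_factor: float, stitching_cost: float,
                       engineering_cost: float = 0.0, runs_amortized: int = 1) -> float:
    """Per-job Pro cost: R x unit cost + stitching + one-time engineering spread over runs."""
    return r_factor * pro_unit_cost + stitching_cost + engineering_cost / runs_amortized


def should_test_ultra(pro_effective: float, ultra_cost: float) -> bool:
    """Test Ultra when the effective Pro cost exceeds Ultra's single-pass cost."""
    return pro_effective > ultra_cost


# 20,000-token summarization from the example: Pro $0.03, Ultra $0.12 per job.
# Hypothetical: chunking triples the calls (R = 3) and stitching costs $0.01.
tokens_only = effective_pro_cost(0.03, 3, 0.01)
# Hypothetical: $600 of chunking/stitching engineering amortized over 12 monthly runs.
with_engineering = effective_pro_cost(0.03, 3, 0.01, engineering_cost=600, runs_amortized=12)
print(should_test_ultra(tokens_only, 0.12), should_test_ultra(with_engineering, 0.12))
```

On token spend alone Pro wins in this example, but once the engineering effort is amortized it dominates the comparison, which is the situation described for repeated batch jobs.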

Who Should Really Use Ultra vs Pro? Persona Insights

This section translates the personas into concrete NLP use cases and recommended strategies.

Pick Gemini 1 Ultra for:

- Legal/research teams requiring single-pass ingestion and reasoning over hundreds of thousands of tokens (example: whole-case summarization, cross-document citation resolution).

- Media pipelines where you process long video + transcript corpora in one forward pass (temporal multimodal reasoning).

- Enterprise analytics with predictable, large, and latency-tolerant batch jobs that benefit from priority quotas and SLA guarantees.

- Teams avoiding chunking complexity: when chunked pipelines introduce unacceptable inconsistency or require extremely high developer effort for deterministic stitching.

Pick Gemini 1 Pro for:

- Startups building chatbots and typical content-generation pipelines where short-to-medium outputs are the norm.

- High-volume pipelines that can be decomposed into small tasks (per-message generation, templated content).

- Prototyping and tuning: Cheaper per-call costs make it easier to iterate quickly.

- Hybrid flows: Pro for live traffic and Ultra for periodic heavy analytics (cost-effective mix).

Maximize ROI: Smart Ways to Mix Ultra and Pro

A hybrid approach often gives the best ROI. Here are reproducible patterns.

Development & Tuning on Pro

- Use Pro endpoints for exploration and prompt engineering.

- Instrument token usage and retries.

- When you have a stable prompt set, run cost vs retries analysis.

Final Runs on Ultra

- For finalizing large-batch, high-coherence jobs, run Ultra for the final single-pass stitch.

- Use Pro for indexing, retrieval, and pre-processing passes; Ultra for final summarization or reasoning on concatenated context.
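The split above can be expressed as a small routing function. Stage names and the Pro input limit are hypothetical, and one choice goes slightly beyond the text: a final pass whose input fits within Pro's window stays on Pro, since only oversized single-pass contexts clearly justify Ultra's price.

```python
from enum import Enum


class Tier(Enum):
    PRO = "pro"
    ULTRA = "ultra"


# Hypothetical stage names for the hybrid pipeline described above.
PRO_STAGES = {"index", "retrieve", "preprocess"}
FINAL_STAGES = {"final_summary", "final_reasoning"}


def route(stage: str, input_tokens: int, pro_max_input_tokens: int) -> Tier:
    """Pick a tier per pipeline stage; final passes go to Ultra only when Pro cannot fit them."""
    if stage in PRO_STAGES:
        return Tier.PRO
    if stage in FINAL_STAGES:
        return Tier.ULTRA if input_tokens > pro_max_input_tokens else Tier.PRO
    raise ValueError(f"unknown stage: {stage!r}")


# Hypothetical Pro input limit; check the documented limit for your model code.
print(route("final_summary", input_tokens=400_000, pro_max_input_tokens=128_000))
```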

Chunking + Deterministic stitching (pattern)

- Deterministic chunking: fixed-size windows with overlap O tokens to preserve boundary context.

- For each chunk, run Pro to produce chunk summaries + metadata (entity links, anchors).

- Use Ultra to run a final stitch pass over chunk summaries (and selected anchors) to produce the global artifact.
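The deterministic chunking step can be sketched as follows. It operates on an already-tokenized list (how you split text into tokens is left open), and the window size and overlap are parameters you would tune; the values in the example are arbitrary.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def chunk_with_overlap(tokens: Sequence[T], size: int, overlap: int) -> List[List[T]]:
    """Fixed-size windows; consecutive windows share `overlap` tokens at the boundary."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    stride = size - overlap
    chunks: List[List[T]] = []
    for start in range(0, len(tokens), stride):
        chunks.append(list(tokens[start:start + size]))
        if start + size >= len(tokens):  # last window reached the end
            break
    return chunks


print([len(c) for c in chunk_with_overlap(list(range(10_000)), size=4_000, overlap=200)])
# [4000, 4000, 2400]
```

Because boundaries depend only on `size` and `overlap`, re-running the pipeline on the same input yields the same chunks, which keeps per-chunk Pro summaries and the final Ultra stitch reproducible.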

Token Accounting instrumentation

- Log both input and output tokens per request.

- Build dashboards to show monthly token burn by model code and flow.

- Use anomaly detection (alerts) for unexpected token surges.
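A minimal sketch of the accounting side, assuming usage records you log yourself with the input and output token counts the API reports: it aggregates token burn per month, model code, and flow, and flags surges with a simple z-score rule. The record layout, model codes, and 3-sigma threshold are assumptions.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Assumed log record: (month, model_code, flow, input_tokens, output_tokens).
Record = Tuple[str, str, str, int, int]


def monthly_burn(records: Iterable[Record]) -> Dict[Tuple[str, str, str], int]:
    """Total billed tokens (input + output) per (month, model_code, flow)."""
    totals: Dict[Tuple[str, str, str], int] = defaultdict(int)
    for month, model_code, flow, input_tokens, output_tokens in records:
        totals[(month, model_code, flow)] += input_tokens + output_tokens
    return dict(totals)


def is_surge(history: List[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than z_threshold standard deviations above the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return latest > mean
    return (latest - mean) / spread > z_threshold


usage = [
    ("2024-05", "pro-model-code", "chat", 1_200, 300),  # hypothetical model codes
    ("2024-05", "pro-model-code", "chat", 800, 250),
    ("2024-05", "ultra-model-code", "legal-summary", 400_000, 6_000),
]
print(monthly_burn(usage))
```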

Seamless Migration Secrets — Ultra vs Pro Integration

From an engineering perspective, the practical items to implement:

Model Codes & Endpoints

- Always use exact model codes from Gemini API docs. Model code determines pricing class, token limits, and supported features.

Token Billing Rules

- Google commonly bills for both input + output tokens — instrument both and attribute to feature flags in your telemetry.

Backoff & idempotency

- Implement exponential backoff and idempotency keys for critical jobs to avoid double-billing or duplicated outputs.
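The backoff-plus-idempotency pattern can be sketched as below. It assumes your `send` function raises a hypothetical `RetryableError` on transient failures such as HTTP 429 or 5xx, and that idempotency is enforced client-side by hashing the request payload and checking a job store (an in-memory dict here); it does not assume the Gemini API itself accepts idempotency keys. Delays use capped exponential backoff with full jitter.

```python
import hashlib
import json
import random
import time
from typing import Any, Callable, Dict


class RetryableError(Exception):
    """Hypothetical error your client raises for transient failures (e.g. 429, 5xx)."""


def idempotency_key(payload: Dict[str, Any]) -> str:
    """Stable key for a request body: same payload -> same key, regardless of dict order."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def call_with_backoff(send: Callable[[Dict[str, Any]], Any], payload: Dict[str, Any],
                      completed: Dict[str, Any], max_attempts: int = 5,
                      base_delay_s: float = 1.0, max_delay_s: float = 32.0) -> Any:
    """Send once per idempotency key, retrying transient failures with jittered backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    key = idempotency_key(payload)
    if key in completed:  # job already finished: reuse result instead of paying again
        return completed[key]
    for attempt in range(max_attempts):
        try:
            result = send(payload)
        except RetryableError:
            if attempt == max_attempts - 1:
                raise
            # Full jitter: sleep a random time up to the capped exponential delay.
            time.sleep(random.uniform(0, min(max_delay_s, base_delay_s * 2 ** attempt)))
        else:
            completed[key] = result
            return result
    raise AssertionError("unreachable: the loop either returns or re-raises")
```

In production, `completed` would be a durable store (a database table keyed by the hash), so a crashed and restarted batch job skips work it already paid for.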

Streaming vs Batch

- Streaming reduces perceived latency, but every streamed token is still billed. For huge offline jobs, batch endpoints (Vertex AI batch pipelines) might be more cost-effective.

Pros and cons

Gemini 1 Ultra — Pros

- Best single-pass, large-context handling.

- Priority quotas and enterprise SLAs.

- Early access to advanced multimodal features.

Gemini 1 Ultra — Cons

- Higher per-1M token cost in many endpoints.

- Overkill for small/short tasks.

Gemini 1 Pro: Pros

- Cost-effective for short-to-medium tasks.

- Robust multimodal features.

- Broad availability and faster iteration cycles.

Gemini 1 Pro — Cons

- Lower quotas than Ultra.

- Requires chunking for very long inputs, which adds engineering overhead.

Operational gotchas

- Token leakage/privacy: Never log full PII-containing texts. Use redaction and privacy filters.

- Billing surprises: Regions and enterprise discounts matter — verify account-specific rates.

- Hallucination: Neither model is perfect. Retrieval grounding and human review remain crucial.
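For the privacy point, a crude regex-based redactor shows where redaction sits in a logging path. The patterns are illustrative and will both miss real PII and over-match (for example, some dates look like phone numbers); production systems typically rely on a dedicated PII-detection service rather than hand-written regexes.

```python
import re

# Illustrative patterns only; order matters (SSN is checked before the broader phone rule).
_PATTERNS = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\+?\d[\d\s().-]{7,}\d"), "[PHONE]"),
]


def redact(text: str) -> str:
    """Replace matches of each pattern with its placeholder before the text is logged."""
    for pattern, placeholder in _PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


print(redact("Contact jane.doe@example.com or 555-123-4567"))
```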

FAQs

Is Ultra always better than Pro?

No. Ultra is better for massive, single-pass, high-priority workflows. Pro is usually a better value for short-to-medium jobs.

Are input tokens billed as well as output tokens?

Often yes. Google's API docs explain token accounting in detail; log both input and output tokens.

Can I use both Pro and Ultra in the same project?

Yes. Many teams prototype on Pro and move heavy runs to Ultra as needed.

How do I know when to switch from Pro to Ultra?

Measure retries, correctness, and end-to-end cost on Pro. If retries × Pro cost > Ultra cost for your task, test Ultra.

Where do I find exact token limits and prices?

Check the Gemini API pricing page and Vertex AI model docs for model-specific token limits and per-1M-token rates.

Conclusion

- Decision rule: If your expected retries × Pro_Cost + stitching_costs > Ultra_cost, run Ultra. Otherwise, run Pro.

- Practical approach: Start on Pro for prompt design and instrumentation. When prompt-set and metrics are stable, run small Ultra experiments for heavy runs to validate cost trade-offs.

Must-do before launch: Run a reproducible benchmark with your actual prompt set, log token usage, measure p95/p99 latencies, and compute real cost-per-successful-output.