Introduction

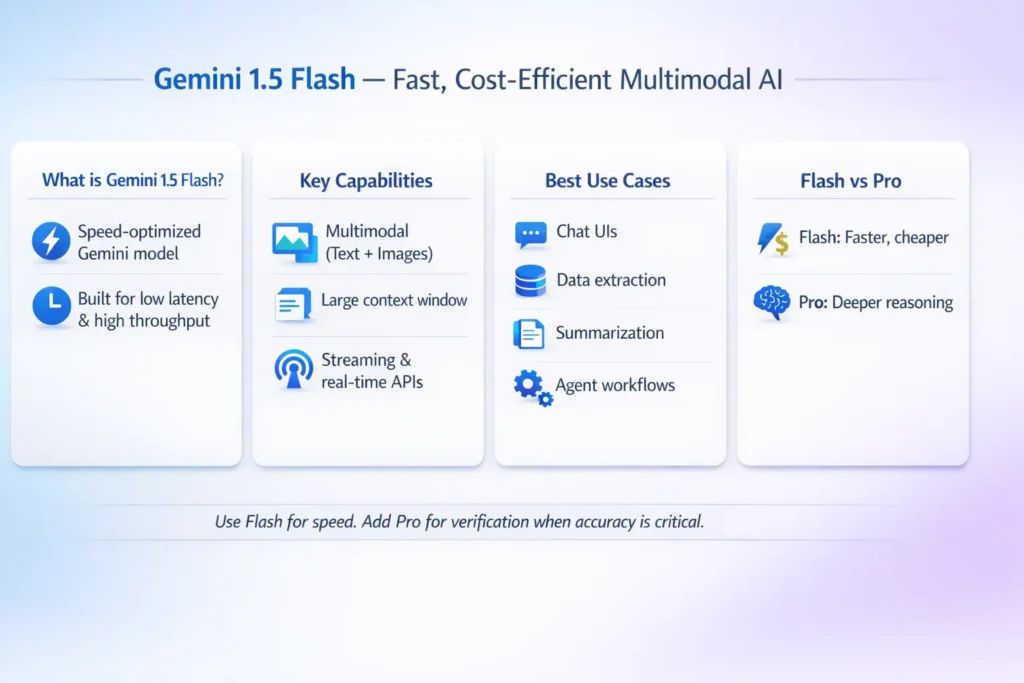

Gemini 1.5 Flash is Google’s speed-and-efficiency-focused member of the Gemini family: multimodal (text + images, with other modalities depending on endpoint), tuned for low latency and high throughput, and aimed at production use where responsiveness and cost-per-call matter more than absolute top-tier reasoning. Use Flash for fast chat UIs, high-volume extraction, and agent tasks — combine with retrieval (RAG) and/or Pro verification when correctness is critical.

Why This Guide Exists

You need one authoritative, practical reference that explains what Gemini 1.5 Flash is in NLP terms, how it behaves inside real systems, how to benchmark and measure it, and how to choose Flash vs Pro for a product. This guide gives step-by-step recipes, copy-ready prompt patterns, cost math, integration pseudocode, production checklists, and a benchmarking template so you can ship with confidence. Where numbers are sensitive (pricing, quotas, context windows), I point to official docs so you can update figures before publishing.

What is Gemini 1.5 Flash?

Gemini 1.5 Flash is a variant in Google’s Gemini family optimized for throughput and latency. From an NLP perspective, it’s a multimodal conditional generator that is engineered (via architecture choices, quantization, and runtime optimizations) to return useful textual outputs quickly and cheaply. Flash models are explicitly tuned to trade some top-end reasoning performance for speed and cost-efficiency, making them well suited to short-to-medium-length tasks like conversational responses, extraction, classification, summarization, and many agentic steps.

- Multimodal input: Primarily text + images; some endpoints/variants may accept audio/video or other structured signals. The model maps visual features into its internal token space and conditions generation on those encoded signals.

- Fast decoding path: Engineering choices (smaller variants such as the 8B option, optimized kernels, request-level batching, low-latency streaming) reduce time-to-first-byte (TTFB).

- Large-context support: Flash family variants support very large context windows (several hundred thousand tokens to ~1,000,000 tokens for some variants), enabling long-document summarization and memory-like behavior. Always confirm the exact variant’s max tokens in the official docs.

Key Features & Specs

Below are core items to surface in a technical CMS snippet. Always verify live numbers before publishing.

- Modalities: Text + images (some variants expose audio/video or other structured inputs through API-specific endpoints).

- Primary goal: Low-latency, high-throughput, cost-optimized inference for production systems.

- Context window: Variant-dependent; public snapshots report large windows (up to ~1,000,000 tokens in some Flash variants). Verify exact limits for your tenant/region.

- Pricing (example snapshot): Public snapshots have reported input/output token costs like $0.15 / $0.60 per 1M tokens as illustrative examples, but pricing varies by region, endpoint (Vertex vs Gemini API), and time. Replace with live values from Google before publishing.

- API modes: REST, streaming, and real-time-like options exist depending on the provisioning and the API surface (Gemini API / Vertex AI).

Quick Spec Table

When to Choose Flash vs Pro

Pick Flash When:

- You require sub-second or ~1s responses for front-end elements.

- You have hundreds to millions of short/medium requests per day, and cost directly impacts your margin or feasibility.

- The task is extraction, classification, short summarization, or high-frequency conversational flows.

- You want streaming outputs to show progressive results in the UI.

Pick Pro when:

- You need the “last mile” of reasoning quality (research-level synthesis, legal/medical conclusions, complex code reasoning).

- Outputs are mission-critical and even small hallucinations would be catastrophic.

- Benchmarks (e.g., high-complexity QA, long-form multi-hop reasoning) show Pro materially outperforms Flash for your test suite.

Hybrid Pattern

- Use Flash as the fast front-line model.

- Route high-stakes or low-confidence responses to Pro for verification or regeneration.

- Use RAG + tool verification to lower Pro usage to essential cases only.
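A minimal Python sketch of the hybrid pattern above. The `call_flash` and `call_pro` callables, the `Draft` type, and the 0.7 threshold are hypothetical placeholders rather than part of any Google SDK; how you derive a confidence score (validator results, heuristics, a classifier) depends on your system.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Draft:
    text: str
    confidence: float  # hypothetical score in [0, 1] produced by your own checks


def route(
    prompt: str,
    call_flash: Callable[[str], Draft],
    call_pro: Callable[[str], str],
    high_stakes: bool = False,
    min_confidence: float = 0.7,  # assumed threshold; tune on your own data
) -> Tuple[str, str]:
    """Return (answer, model_used). Flash answers first; Pro regenerates when needed."""
    draft = call_flash(prompt)
    if high_stakes or draft.confidence < min_confidence:
        # Escalate: regenerate with the slower, stronger model.
        return call_pro(prompt), "pro"
    return draft.text, "flash"
```

Logging the returned `model_used` value makes it easy to track what share of traffic escalates to Pro, which feeds directly into cost forecasting.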

How to Benchmark Gemini 1.5 Flash

Public claims are fine for orientation, but run workload-specific tests. Below is an actionable benchmarking plan you can copy/paste.

Metrics To Collect

- Latency: Median, 95th, 99th percentiles for short and medium prompts.

- Throughput: Tokens/sec and concurrent request capacity.

- Accuracy: Precision/recall/F1 on labeled extraction tasks.

- Multimodal correctness: For image+text tasks, measure correctness against labeled answers.

- Cost per useful response: Account for Flash + any Pro verification + infra + retries.

Concrete Recommended Tests

Latency Test

- 1,000 short prompts (10–50 tokens)

- 1,000 medium prompts (500–2,000 tokens)

- Measure: TTFB median / 95th / 99th
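One way to collect and summarize these numbers, as a sketch in Python. `stream_fn` is a hypothetical callable that returns an iterator of streamed chunks for a prompt; substitute whatever streaming client you actually use.

```python
import statistics
import time


def measure_ttfb_ms(stream_fn, prompt):
    """Time from issuing the request to receiving the first streamed chunk, in ms."""
    start = time.perf_counter()
    stream = iter(stream_fn(prompt))
    next(stream, None)  # block until the first chunk arrives (or the stream ends)
    return (time.perf_counter() - start) * 1000.0


def latency_summary(samples_ms):
    """Median, 95th and 99th percentile of a list of latency samples."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns the 99 cut points p1..p99, so index 94 is p95.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "median": statistics.median(samples_ms),
        "p95": cuts[94],
        "p99": cuts[98],
    }
```

Run `measure_ttfb_ms` once per prompt in each bucket (short and medium) and pass the collected samples to `latency_summary` separately, so the two distributions are not mixed.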

Throughput Test

- Stream 50–200 concurrent long outputs (about 500 tokens each); measure tokens/sec and how latency degrades as concurrency rises.
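A rough harness for this test, sketched with `asyncio`. `stream_fn` is a hypothetical async generator function that yields output chunks for a prompt, and the sketch assumes one chunk is roughly one token, which will not hold for every client.

```python
import asyncio
import time


async def run_throughput(stream_fn, prompts, concurrency):
    """Stream all prompts with at most `concurrency` in flight; report tokens/sec."""
    sem = asyncio.Semaphore(concurrency)

    async def consume(prompt):
        async with sem:
            count = 0
            async for _chunk in stream_fn(prompt):
                count += 1  # assumption: one chunk is about one token
            return count

    start = time.perf_counter()
    counts = await asyncio.gather(*(consume(p) for p in prompts))
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    total = sum(counts)
    return {"tokens": total, "seconds": elapsed, "tokens_per_sec": total / elapsed}
```

Repeating the run at several `concurrency` values (for example 50, 100, 200) shows where tokens/sec stops scaling.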

Extraction Accuracy

- Labeled dataset ≥500 documents; compute precision/recall/F1 for schema extractions.
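A sketch of field-level scoring for schema extractions. It assumes each prediction and each gold label is a flat dict of field name to value and that a field counts as correct only on exact equality; nested schemas or fuzzy matching would need a different comparison.

```python
def extraction_prf(predictions, gold):
    """Micro-averaged precision, recall and F1 over extracted fields."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    tp = fp = fn = 0
    for pred, ref in zip(predictions, gold):
        for key, value in pred.items():
            if key in ref and ref[key] == value:
                tp += 1  # field present and correct
            else:
                fp += 1  # field wrong or not expected
        for key, value in ref.items():
            if key not in pred or pred[key] != value:
                fn += 1  # expected field missing or wrong
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

Note that a wrong value counts as both a false positive and a false negative here, which is a common but not universal convention.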

Multimodal check

- 200 image+question pairs, measure exact match / partial match accuracy.

Cost Simulation

Template Table for Results

| Test | Setup | Metric | Why it matters |
| --- | --- | --- | --- |
| Latency (short) | 1k queries, 10–50 tokens | Median, 95th, 99th TTFB | UX responsiveness |
| Throughput | 100 concurrent streams | tokens/sec | Server sizing |
| Extraction F1 | Labeled set (500) | Precision / Recall / F1 | Automation accuracy |
| Multimodal accuracy | 200 image+Q | Accuracy | Feature parity check |
| Cost sim | Real average token use | $ per 1k responses | Budget forecasting |

Note: Always compare Flash vs Pro on the same workload and same runtime settings (temperature, streaming vs batch, tokenization options) to make fair decisions.

Integration & Production RAG pattern

Flash is fast but not infallible. RAG (retrieval-augmented generation) grounds responses and reduces hallucinations.

Why RAG with Gemini 1.5 Flash?

RAG provides evidence and reduces risk: rather than relying solely on parametric knowledge, you retrieve relevant context and let the model synthesize. For Flash, this is the common pattern: Flash for the initial answer, Pro for verification if needed.

Production Flow

1. User request

2. Vector DB retrieval (top-k)

3. Assemble prompt

4. Call Gemini 1.5 Flash

5. Post-process and verify (rules, or a Pro check if flagged)

6. Return result

RAG Tips & Tricks

- Chunk large docs into 3–5k token pieces → summarize each with Flash → synthesize.

- Include snippet metadata (source ID, URL, offset, score) in the assembled prompt so the model can surface evidence.

- Use low temperature (0–0.2) for extraction and JSON outputs.

- Two-stage verification (Flash → Pro) reduces cost while preserving correctness for critical queries.
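To illustrate the metadata tip, here is one possible prompt assembler. The snippet dict keys (`id`, `url`, `offset`, `score`, `text`) and the instruction wording are assumptions made for this sketch, not a required format.

```python
def assemble_prompt(question, snippets, max_snippets=5):
    """Build a grounded prompt from retrieved snippets, highest score first."""
    ranked = sorted(snippets, key=lambda s: s["score"], reverse=True)[:max_snippets]
    lines = [
        "Answer using only the sources below and cite source IDs in square brackets.",
        "",
    ]
    for s in ranked:
        # Metadata header lets the model (and your logs) point back to evidence.
        lines.append(
            f"[{s['id']}] (url={s['url']}, offset={s['offset']}, score={s['score']:.2f})"
        )
        lines.append(s["text"])
        lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)
```

Keeping the source ID in a fixed bracketed form makes it straightforward to check afterwards that every cited ID really appeared in the prompt.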

Long-Document Hierarchical Summarization

- Chunk the document into pieces of at most 3–5k tokens.

- Flash: Summarize each chunk to ~100–200 tokens.

- Concatenate chunk summaries.

- Pro (optional): Synthesize the concatenated summary for higher fidelity. This two-stage pipeline trades latency for final quality when needed.
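The pipeline above can be sketched as follows. `summarize_chunk` and `synthesize` are hypothetical callables standing in for your Flash and Pro calls, and whitespace splitting is used as a crude stand-in for real token counting.

```python
def chunk_words(words, chunk_size):
    """Split a list of words into consecutive pieces of at most chunk_size."""
    return [words[i:i + chunk_size] for i in range(0, len(words), chunk_size)]


def hierarchical_summary(text, summarize_chunk, synthesize=None, chunk_size=4000):
    """Summarize each chunk, concatenate, then optionally synthesize a final pass."""
    words = text.split()  # assumption: word count approximates token count
    chunks = [" ".join(piece) for piece in chunk_words(words, chunk_size)]
    partials = [summarize_chunk(chunk) for chunk in chunks]  # Flash stage
    combined = "\n".join(partials)
    if synthesize is None:
        return combined
    return synthesize(combined)  # optional Pro stage
```

The chunk summaries are independent of each other, so the Flash stage can be parallelized if latency matters more than request-rate limits.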

Common Failure Modes & Practical Mitigations

Focus on pragmatic engineering fixes you can operationalize.

Hallucinations

Mitigations: RAG grounding, structured output (JSON schema), low temperature, post-validation (regex, DB cross-checks), and fallbacks to Pro for verification.
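As an example of post-validation, the sketch below checks a model's JSON output against a small invoice-style schema. The field names and rules are invented for illustration; replace them with your own schema.

```python
import json
import re

DATE_RE = re.compile(r"^\d{4}-\d{2}-\d{2}$")
REQUIRED = ("invoice_id", "date", "total")  # hypothetical schema


def validate_extraction(raw):
    """Parse model output and return (record, errors); record is None if unparseable."""
    try:
        record = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return None, ["expected a JSON object"]
    errors = [f"missing field: {name}" for name in REQUIRED if name not in record]
    date = record.get("date")
    if "date" in record and not (isinstance(date, str) and DATE_RE.match(date)):
        errors.append("date must be YYYY-MM-DD")
    if "total" in record:
        total = record["total"]
        is_number = isinstance(total, (int, float)) and not isinstance(total, bool)
        if not is_number or total < 0:
            errors.append("total must be a non-negative number")
    return record, errors
```

A non-empty `errors` list is a natural trigger for a retry, a Pro verification call, or a human review queue.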

Context Truncation/Forgetting

Mitigations: chunking + hierarchical summarization; micro-memory stores (a vector store of recent session snippets) instead of forcing whole transcripts into the context window; and prioritizing relevance over recency when trimming.
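A small sketch of relevance-first trimming under a token budget. The `relevance` and `tokens` fields are assumed to come from your retriever and tokenizer respectively; the greedy selection is one simple policy among many.

```python
def select_context(snippets, budget_tokens):
    """Greedily keep the most relevant snippets that fit in the token budget."""
    chosen = []
    used = 0
    for snippet in sorted(snippets, key=lambda s: s["relevance"], reverse=True):
        if used + snippet["tokens"] <= budget_tokens:
            chosen.append(snippet)
            used += snippet["tokens"]
    return chosen
```

Because selection is by relevance, an older but pertinent snippet can displace recent small talk, which is the behavior the mitigation describes.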

Rate Limits & Throttling

Mitigations: Exponential backoff with jitter, request batching for non-interactive workloads, provisioned throughput (Vertex) if available, monitor 95th/99th percentile latency, and scale horizontally.
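Exponential backoff with jitter can be sketched like this. `RateLimitError` is a placeholder exception defined here for illustration; in practice you would catch whatever your client raises for HTTP 429 or quota errors. The delays are assumed defaults, and the "full jitter" variant (a random delay between zero and the exponential cap) is one common choice.

```python
import random
import time


class RateLimitError(Exception):
    """Placeholder for the throttling error raised by your API client."""


def call_with_backoff(fn, max_attempts=5, base_delay=0.5, max_delay=30.0,
                      sleep=time.sleep, rng=random.random):
    """Call fn(), retrying on RateLimitError with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng() * cap)  # full jitter: uniform delay in [0, cap)
```

The `sleep` and `rng` parameters exist only so the function can be tested without real waiting.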

Cost Examples & Quick Math

Important: prices change. Replace example numbers with live values from your Google account or the Gemini API pricing page before publishing.

Example

- Input tokens: 30

- Output tokens: 120

- Example prices: Input $0.15 / 1M, Output $0.60 / 1M.

Cost per call = (30 / 1,000,000) × $0.15 + (120 / 1,000,000) × $0.60

= $0.0000045 + $0.000072 = $0.0000765 per call

Cost per 1M calls ≈ $76.50 (illustrative) — highly dependent on average output length and verification overhead. Always measure real traffic distribution.
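The same arithmetic as a small Python helper, so you can plug in live prices and your own token counts. The `blended_cost_per_call` formula is an assumption about how retries and Pro verification add up (a fraction of calls retried on Flash, a fraction re-checked by Pro); adjust it to your actual pipeline.

```python
def cost_per_call(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Dollar cost of one call given per-1M-token prices."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


def blended_cost_per_call(flash_cost, pro_cost, verify_rate=0.0, retry_rate=0.0):
    """Expected cost per request when some calls are retried or verified by Pro."""
    return flash_cost * (1.0 + retry_rate) + pro_cost * verify_rate


# Reproduces the illustrative example: 30 input tokens, 120 output tokens.
example = cost_per_call(30, 120, 0.15, 0.60)
print(f"per call: ${example:.7f}, per 1M calls: ${example * 1_000_000:.2f}")
```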

Flash in Real Systems:

Below are three production-ready patterns you can reuse.

Fast chat UI

Flow: Frontend → API Gateway → Flash (streaming) → client rendering.

Add vector DB + RAG for context. For high-risk queries, asynchronously route to Pro to update a “verified” status or flag. This keeps the UI snappy while preserving correctness.

Document Extraction Pipeline

Flow: Ingest PDFs → OCR → chunk → Flash extraction (JSON) → validation rules → store in DB.

Use post-validation rules (regex, cross-check values) and a small human-in-the-loop queue for low-confidence extractions.

Agentic Workflows

Flow: Agent controller → Flash for fast plan generation → if plan is high-risk, send steps to Pro for verification → execute safe actions.

This pattern keeps Flash on the front line for speed and uses Pro conservatively.

Flash vs Pro:

| Area | Gemini 1.5 Flash | Gemini 1.5 Pro |
| --- | --- | --- |
| Primary goal | Low latency, low cost | Top reasoning, benchmark performance |
| Best use cases | Chat UIs, extraction, high-volume tasks | Complex synthesis, high-risk decisions |
| Typical latency | Lower / faster | Higher / slower |
| Pricing | Lower per token (example) | Higher per token (example) |

Full Production Checklist

- Run a 72-hour load test with a realistic prompt mix.

- Measure token distribution and average output length.

- Implement response caching and request deduplication for static or repeated prompts.

- Add a verification layer for high-risk outputs (Pro or external check).

- Instrument latency, throughput, error rates, and cost per successful outcome.

- Add versioned model selection (Flash for normal, Pro for critical).
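For the caching item in the checklist, a minimal in-memory sketch is shown below. `call_model` is a hypothetical callable taking `(model, prompt)`; the whitespace normalization is an assumption that suits static prompts but may be wrong where spacing is meaningful, and a production cache would also need expiry and a shared store.

```python
import hashlib


class ResponseCache:
    """Cache responses keyed by model name and whitespace-normalized prompt."""

    def __init__(self, call_model):
        self._call_model = call_model
        self._store = {}
        self.hits = 0

    @staticmethod
    def _key(model, prompt):
        normalized = " ".join(prompt.split())  # collapse runs of whitespace
        return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()

    def get(self, model, prompt):
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        response = self._call_model(model, prompt)
        self._store[key] = response
        return response
```

Including the model name in the key keeps Flash and Pro answers separate, which matters once versioned model selection is in place.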

FAQs

Gemini 1.5 Flash is multimodal: it supports text + images, and some endpoints support expanded modalities. Always check the model docs for exact input types per variant.

Flash is often the right choice over Pro when speed and cost matter most. If some queries need top-tier reasoning, route them to Pro or an external verifier. Hybrid systems work well.

To reduce hallucinations, use RAG (grounding), structured outputs (JSON), low temperature, and verification layers (Pro or external checks).

For current pricing and limits, check the official Gemini API pricing and docs (Google AI for Developers, Vertex AI). Public snapshots like LLM-Stats help for planning, but verify live.

Flash is designed to be more cost-efficient per token than Pro. Exact pricing varies by variant and region. Use live pricing pages for exact numbers.

Conclusion

Gemini 1.5 Flash is a pragmatic LLM for teams that need speed, low cost, and multimodal capability. For many chat UIs, extraction pipelines, and agentic use cases, Flash is the sensible default. However, Flash isn’t the final answer for high-risk or high-reasoning needs — build a hybrid system where Flash handles the fast path and Pro or external checks handle verification. Run workload-specific benchmarks, measure cost per successful outcome, and monitor production metrics closely.