Introduction

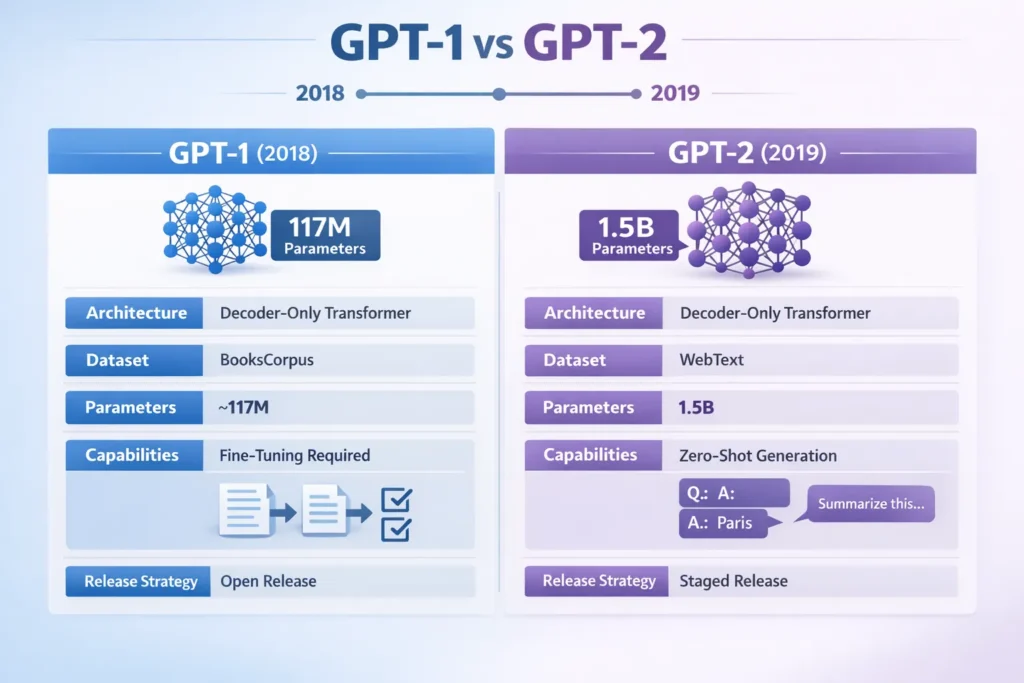

GPT-1 vs GPT-2 can reveal how scale transforms AI capabilities. In just 10 minutes, understand a 10× improvement in outputs and zero-shot tasks. Explore a quick 10-second test and see real results. Problem: inconsistent results. Hint: subtle context tweaks unlock better performance. GPT-1 and GPT-2 are base waypoints in the development of modern autoregressive language models. GPT-1 closed a decisive methodological shift for natural language processing: pretrain a decoder-only Transformer on large unlabeled corpora, then adapt that pre-trained model to target tasks via managed fine-tuning. This pipeline replaced or augmented many task-specific architectures and ushered in a more unified approach to solving tasks.

GPT-2 scaled this recipe quite a bit more layers, larger hidden dimensions, more attention heads, and far greater compute and dataset scale. That scaling was not merely a digital milestone: it produced a qualitative transformation in model behavior. GPT-2, trained on a large selected crawl dubbed WebText, displayed an ability to follow natural-language prompts for many tasks without other gradient-based fine-tuning — a capability now described as zero-shot or few-shot prompting depending on how many in-context examples were given. This emergent behavior elevated prompt design from an ad-hoc trick to a sane workflow.

Beyond technical capability, GPT-2’s staged release strategy sparked a chat about release practices, model misuse, and responsible stewardship of powerful generative models. Together, these two models illustrate how build choices, pretraining objectives, dataset composition, and scale interact to produce new capabilities. This article dives deeply into the change between GPT-1 and GPT-2 — from architecture and hyperparameters to training pipelines, behavior on next tasks, practical recipes for when to prompt versus fine-tune, safety lessons, and concrete code-ready prompts and workflows you can use today. Wherever possible, explanations use language familiar to NLP practitioners: tokens, perplexity, cross-entropy, context windows, mime, and in-context learning.

GPT-1 vs GPT-2 — What Really Changed?

- GPT-1 (2018) Set up the pretrain then fine-tune approach; used a decoder-only Transformer with about 117 million parameters; released the standard academic version.

- GPT-2 (2019): scaled the same core architecture up to 1.5B parameters (publicly released largest checkpoint); trained on WebText (a large, diverse web-derived corpus); exhibited strong zero-shot and few-shot behavior; released via a staged approach to consider misuse risk.

GPT Evolution — Key Milestones You Can’t Miss

- June 2018 — GPT-1: Paper Improving Language Understanding by Generative Pre-Training (Radford et al., OpenAI) described training a multi-layer decoder-only Transformer on large unlabeled text, then fine-tuning with supervised data on tasks like classification and question answering. The idea: pretraining on generative next-token prediction induces representations that transfer effectively after supervised fine-tuning.

- February 2019 — GPT-2 announced: Paper Language Models are Unsupervised Multitask Learners presented a family of models scaled to a 1.5B-parameter configuration. GPT-2 results suggested that large-scale language modeling can produce direct task behavior with natural-language prompts.

- 2019 (May → November) — staged release: OpenAI released smaller model checkpoints and analysis early, then gradually released larger checkpoints up to the full 1.5B weights after community and internal safety analyses.

Why do These Two Matter

- GPT-1 checked the process: If you need consistent results on tasks where you have labeled examples, it’s better to first train a model on a lot of data and then adjust it for your specific task.

- GPT-2 showed how scaling up helps: When models have enough parameters and are trained on varied data, they start to understand patterns well enough to handle many tasks just by looking at the input context — this allows for creating instructions that let the model work efficiently, often making extra labeled data and fine-tuning steps unnecessary during early testing.

Peek Under the Hood — GPT-1 vs GPT-2 Design

High-Level Snapshot

| Feature | GPT-1 | GPT-2 |

| Year announced | 2018 | 2019 |

| Core architecture | Decoder-only Transformer; pretrain → fine-tune | Decoder-only Transformer; scaled; some hyperparameter and training tweaks |

| Parameter count | ~117M (base) | Up to 1.5B (largest public) |

| Training dataset | BooksCorpus + curated corpora | WebText — web pages from outbound links on Reddit / curated crawl |

| Objective | Autoregressive next-token prediction (cross-entropy) | Same autoregressive objective; larger compute & dataset |

| Release approach | Standard academic paper & code | Staged release with safety analysis |

The Surprising Changes Between GPT-1 and GPT-2

Scale (parameters & compute): GPT-2 increased depth (# layers), width (hidden state dimensionality), and number of attention heads. Given Transformer scaling laws, this increase reduced model perplexity and increased the model’s capacity to memorize and generalize patterns. Scale also improved the model’s ability to perform tasks from instruction-like prompts.

Dataset breadth & diversity: GPT-2’s WebText incorporated far more varied tokens and linguistic phenomena from web pages, improving coverage of names, topical vocabulary, code snippets, and conversational turns. Broader data improved robustness to out-of-domain signals when compared to smaller in-domain corpora.

Same loss, different emergent behavior: Both models used next-token prediction as the primary objective. Yet GPT-2’s scale and dataset caused emergent in-context capabilities: the model could follow natural-language instructions and answer questions when given the instructions and optionally a few examples in the context window.

Hyperparameters & training tricks: Subtle adjustments — learning rate schedules, batch sizes, initialization, regularization — accompanied by scale. While the objective remained the same, training stability at 1.5B parameters required meticulous engineering.

GPT-1 vs GPT-2 — What They Can Really Do

Benchmarks & Task Behavior

- Language modeling: Larger GPT-2 variants achieved lower perplexity on held-out language modeling evaluations.

- Zero/few-shot performance: GPT-2 delivered usable performance on summarization, cloze tasks, and some QA examples using prompting alone.

- Generation quality: GPT-2 produced longer, more coherent continuations with fewer local contradictions and more consistent topicality than GPT-1.

Concrete Implications:

- GPT-2 is better at maintaining the same style and topic across several sentences than GPT-1.

- When you need a quick, accurate answer and have labeled data, fine-tuning remains the better choice for consistent results.

- If you’re working quickly or don’t have labeled data, using prompts with GPT-2 is often faster and still works well enough.

The Ultimate Comparison Table: GPT-1 vs GPT-2

Area — GPT-1 vs GPT-2 (large), with notes:

- Parameter count: ~117M vs 1.5B — roughly a 10× jump.

- Primary dataset: BooksCorpus + curated datasets vs WebText — web signals gave breadth.

- Objective: Autoregressive LM for both — same loss function.

- Zero-shot ability: Limited vs much stronger — prompts work more often on GPT-2.

- Release: Standard paper & code vs staged release with safety review.

- Main impact: Validation of pretrain→fine-tune vs impetus for prompt engineering and release policy debates.

Risks, Bias & Safety Lessons from GPT Models

Scale improves fluency but does not eliminate core problems.

Common failure modes

- Hallucination: Confident generation of incorrect facts.

- Bias & toxicity: Outputs reflect statistical biases present in training data.

- Memorization & leakage: Large models may reproduce verbatim sequences from training data (privacy risk).

- Degeneration in long generations: Repeated loops or generic phrases sometimes appear in long continuations.

GPT-2 release & safety lesson

OpenAI’s staged release of GPT-2 exemplified an early attempt to balance openness and safety. By releasing smaller checkpoints and safety analyses first, they invited community evaluation while limiting initial access to the full model. The process highlighted the need for:

- empirical misuse assessments,

- investment in detection and mitigation tools,

- transparency about dataset composition and model capabilities.

Deciding Between Workflows — Fine-Tune or Not?

Use fine-tuning when:

- You have labeled data (≥ a few hundred examples).

- You need repeatability and strict output formatting.

- Hosting and latency budgets can accommodate a dedicated fine-tuned model.

Use prompting/few-shot when:

- No labeled data is available, and you want fast prototyping.

- You need rapid experimentation across many tasks.

- You can tolerate output variability.

Hybrid: Prototype with prompting; if the task stabilizes and requires reliability, fine-tune on a curated set derived from prompt outputs.

Production Considerations & Deploy-Time Tradeoffs

- Cost: Fine-tuning and hosting a dedicated model can be more costly but yields predictable latency and outputs. Prompting a large multi-task model may have a lower development cost but a higher per-call inference cost if using large models.

- Latency: Smaller fine-tuned models can be deployed closer to users for lower latency; large shared models may introduce higher latency.

- Maintenance: Fine-tuned models must be retrained or updated for data drift; prompt-based approaches shift much of the maintenance to prompt engineering and example selection.

Case Studies That Reveal Model Impact

Case: Customer support classification

Problem: Classify user messages into intents (billing, tech, cancel).

Approach: Prototype with few-shot prompts to discover label schema. If outputs are stable, collect the best prompt outputs and human-verified labels (≈2k) and fine-tune for production.

Outcome: Faster iteration, higher final accuracy after supervised fine-tuning.

Case: Content summarization tool

Problem: Produce short summaries of long user-submitted articles.

Approach: Few-shot prompts for prototype; if consistent short summaries are required, fine-tune on curated pairs.

Outcome: Fine-tuned model gives consistent length and style; prompts are useful for on-device interactive demos.

Pros & Cons

GPT-1 — Pros

- Proved the effectiveness of pretrain → fine-tune.

- Easier to host than later huge models.

- Simpler to reproduce with limited compute.

Cons

- Limited zero-shot capability; often requires fine-tuning for reasonable performance on many tasks.

GPT-2 — Pros

- Strong zero-shot and few-shot performance; a single model can handle many tasks.

- Sparked prompt-engineering practices and important research on scaling laws.

Cons

- Larger compute and hosting costs.

- Increased security and misuse concerns; potential for memorization and biased outputs.

FAQs

A: GPT-1 was ~117M parameters. GPT-2’s largest public model was 1.5B parameters.

A: OpenAI used a staged release to study misuse risks and allow community analysis before releasing the largest model.

A: No — both used autoregressive language modeling. The big changes were scale and data.

A: If you have labeled data and need repeatability, fine-tune. If you’re prototyping or lack labels, start with prompting/few-shot.

Conclusion

GPT-1 and GPT-2 are two urgent steps in modern . GPT-1 approved the pretrain-then-fine-tune workflow that disentangled and bound together how specialists approach numerous tasks; GPT-2 illustrated how scaling parameters and in-dataset assortment can drive rising capabilities such as zero-shot and few-shot in-context learning. The down-to-earth takeaway for specialists is direct: model with an incentive to investigate thoughts rapidly and cheaply; if results stabilize and reproducibility gets to be basic, collect names and fine-tune a dedicated model.