Introduction

Gemini 3 Pro can speed up a content workflow, but the size of the gain depends on your workload, so treat headline figures such as “10× faster drafts” as claims to measure rather than guarantees. The implementation checklist later in this guide gives you the steps to test it on your own content.

Gemini 3 Pro is the top-tier member of Google DeepMind’s multimodal model family, designed for teams that need deep symbolic and statistical reasoning across text, images, and video. This guide approaches the model in terms of architecture and modality fusion, reproducible evaluation protocols, token-cost modeling, robust prompting patterns, and operational patterns for safe production use. It includes a 10-test reproducible suite, worked pricing examples, practical prompt recipes you can copy-paste, and an implementation checklist. Wherever official materials are referenced, verify the numbers against Google’s pricing and API docs for your tier.

What This Gemini 3 Pro Guide Reveals (Most Users Miss This)

This document reframes product-level claims into NLP engineering artifacts you can run, measure, and ship. If you’re evaluating Gemini 3 Pro for a project, you need: (1) clear functional tests; (2) metrics to measure accuracy/robustness/latency/cost; (3) prompt templates and strict output schemas to reduce hallucination; (4) an integration pattern (RAG, tool verification, caching) that reduces repeated token spend and improves factuality; and (5) a deployment checklist that ties telemetry to budgeting and rollback strategies.

Gemini 3 Pro Explained — Not What You Think

What is Gemini 3 Pro?

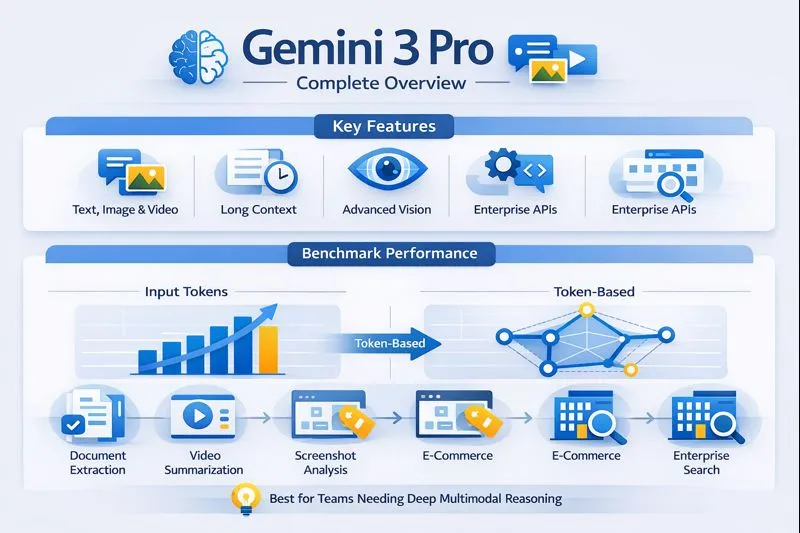

Gemini 3 Pro is a multimodal transformer-class model tuned for high-complexity reasoning and integrated visual understanding. In NLP/ML terms: it is a large-scale autoregressive or mixture-of-experts style model (depending on the exact offering) that supports cross-modal embeddings, long-context attention (extended context windows), and is optimized for tasks that combine symbolic reasoning with perceptual inputs. It’s positioned as the high-capacity option for applications that need:

- fused text + vision representations (cross-attention between modalities),

- long-range context retention (transformer blocks with extended window and likely retrieval-friendly interfaces),

- and production hygiene: streaming APIs, model variants, and pricing tiers for interactive vs batch use.

Practically, it’s accessible via consumer subscriptions (Gemini app tiers) and programmatic endpoints (API / Vertex AI). For procurement-level decisions, treat vendor claims as hypotheses to be validated with your data and workload.

Gemini 3 Pro Features You’ll Notice Instantly

In NLP engineering language, the headline capabilities are:

- Multimodal fusion: Ability to ingest tokenized text and encoded visual frames. Architecturally, this implies modality-specific encoders and cross-modal attention modules that align vision and language embeddings in a joint latent space.

- Improved spatial & layout analyses: Enhancements in spatial transformers or ViT-like encoders allow better parsing of document layouts, tables, diagrams, and spatial puzzles — important for document intelligence and analytic reasoning.

- Large context windows & retrieval hooks: Long-sequence support (thousands to tens of thousands of tokens), with optional retrieval-augmented generation and context caching to avoid repeatedly resending static context.

- Multiple access points: Consumer app subscriptions (interactive experience and desktop/phone clients), an AI Studio or Vertex-style console, and a dedicated API for server-side calls.

- Safety & provenance primitives: Tooling for provenance signals (SynthID/C2PA-like artifacts), token-level attributions, and optional grounding connectors to search or knowledge stores.

Reading Gemini 3 Pro Benchmarks the Right Way

Why Reproduce Benchmarks?

Benchmarks published by vendors are a useful signal but are not procurement-grade evidence. Reasons to reproduce:

- Cherry-picking: Experiments often use tasks or data aligned to a model’s strengths.

- Prompt sensitivity: Chain-of-thought prompting, temperature, and system-role changes can alter results drastically.

- Deployment constraints: Leaderboards ignore latency, concurrency, and token cost.

- Domain shift: General-purpose benchmarks don’t reflect domain-specific noise (OCR artifacts, domain jargon, corrupted images).

Therefore, set up a reproducible evaluation harness that measures accuracy, latency percentiles, token consumption, and operational failure modes.
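A minimal sketch of such a harness follows, assuming each test case is a `(prompt, expected)` pair scored by exact match and that your client can be wrapped in a function returning the response text plus input and output token counts. The names `run_suite`, `percentile`, and `echo_model` are hypothetical; `echo_model` is only a stand-in for a real API call.

```python
import time
from math import ceil

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    ordered = sorted(values)
    rank = max(1, ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def run_suite(cases, call_model):
    """Run (prompt, expected) cases and collect accuracy, latency, tokens, failures.

    call_model(prompt) must return (text, input_tokens, output_tokens).
    A call that raises counts as a failure and as an incorrect answer.
    """
    if not cases:
        raise ValueError("no test cases supplied")
    latencies, correct, tokens_in, tokens_out, failures = [], 0, 0, 0, 0
    for prompt, expected in cases:
        start = time.perf_counter()
        try:
            text, n_in, n_out = call_model(prompt)
        except Exception:
            failures += 1
            continue
        latencies.append(time.perf_counter() - start)
        tokens_in += n_in
        tokens_out += n_out
        correct += int(text.strip() == expected)
    return {
        "accuracy": correct / len(cases),
        "p50_s": percentile(latencies, 50) if latencies else None,
        "p95_s": percentile(latencies, 95) if latencies else None,
        "input_tokens": tokens_in,
        "output_tokens": tokens_out,
        "failures": failures,
    }

def echo_model(prompt: str) -> tuple[str, int, int]:
    """Hypothetical stand-in for a real API call; replace with your client."""
    if "fail" in prompt:
        raise RuntimeError("simulated API error")
    return prompt.upper(), len(prompt.split()), 1

if __name__ == "__main__":
    print(run_suite([("a", "A"), ("b", "X"), ("fail", "")], echo_model))
```

Exact match is the simplest possible scorer; for free-form answers you would likely swap in a task-specific comparison while keeping the same report fields.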

What public benchmarks say (short)

Public materials report strong results on high-difficulty reasoning sets and multimodal leaderboards. Treat those as starting points—your domain and prompt engineering will determine procurement suitability.

Gemini 3 Pro Pricing Breakdown — What You’ll Actually Pay

Pricing matters. In token-based billing, accurate cost estimates depend on real telemetry (true input+output tokens, not approximations). There are extra costs for context caching, grounding services, or managed features.

Pricing primer (quick)

- Input tokens — tokens you send, including system role, examples, and encoded images if the API encodes images as tokens or charges an image-specific fee.

- Output tokens — tokens produced by the model.

- Streaming vs batch — streaming may reduce perceived latency, but counts the same output tokens for cost.

- Context caching — storing embeddings or contexts reduces repeated input tokens but may have storage/ingestion fees.

- Grounding/search — using vendor-provided grounding connectors could incur extra calls or per-retrieval fees.

Always fetch the current per-1M-token rates from the vendor and plug them into your calculator.

Worked Example

(We keep the structure; replace with the official vendor numbers when preparing procurement docs.)

Assume example rates:

- Input: $0.30 / 1M tokens

- Output: $2.50 / 1M tokens

Monthly volume:

- Requests: 10,000

- Avg input tokens/request: 400

- Avg output tokens/request: 1,200

Compute tokens:

- Input tokens/month = 10,000 × 400 = 4,000,000 = 4M

- Output tokens/month = 10,000 × 1,200 = 12,000,000 = 12M

Costs:

- Input cost = 4 × $0.30 = $1.20

- Output cost = 12 × $2.50 = $30.00

- Total = $31.20 (plus caching/grounding fees if used)

Important: Replace those per-1M numbers with official pricing.
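The arithmetic above can be sketched as a small function. This is an illustrative calculator, not a vendor tool: the function name `monthly_cost` and its parameters are assumptions, and the rates passed in are the example rates from this section, not official pricing.

```python
def monthly_cost(requests: int, avg_input_tokens: int, avg_output_tokens: int,
                 input_rate_per_m: float, output_rate_per_m: float) -> dict:
    """Estimate monthly token spend from per-1M-token rates (example values only)."""
    input_millions = requests * avg_input_tokens / 1_000_000
    output_millions = requests * avg_output_tokens / 1_000_000
    input_cost = input_millions * input_rate_per_m
    output_cost = output_millions * output_rate_per_m
    return {
        "input_cost": round(input_cost, 2),
        "output_cost": round(output_cost, 2),
        "total": round(input_cost + output_cost, 2),
    }

if __name__ == "__main__":
    # Same inputs as the worked example: 10,000 requests, 400 in / 1,200 out.
    print(monthly_cost(10_000, 400, 1_200, 0.30, 2.50))
```

Caching and grounding fees are deliberately left out; add them as separate terms once you know how your tier bills them.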

Subscription vs API

- Subscriptions (AI Pro/Ultra): Best for interactive consumer-facing features and exploratory work. May include bundled credits or higher interactive limits.

- API / Vertex: Better for server-side production workflows, batch processing, and integration with cloud MLOps tooling.

Pick based on SLA needs, concurrency, and regulatory requirements.

Streaming & latency tips

- Use streaming API options to improve perceived latency (rendering can start sooner).

- Batch small requests to amortize per-call overhead, but watch the token ceiling.

- Send warm-up requests shortly before expected traffic peaks to reduce cold-start latency.

Gemini 3 Pro Integrations & Tooling Patterns You’ll Actually Use

- RAG (Retrieval-Augmented Generation): index your corpus, retrieve relevant chunks into the prompt, and use a strict grounding instruction such as “Cite the source with [DOC_ID: line].”

- Embeddings caching: persist embeddings and use approximate nearest neighbor indices (FAISS, Annoy) to reduce retrieval cost.

- Agent orchestration: model suggests tool calls; orchestrator executes (DB, search, test runner), returns structured outputs to the model for verification.

- Verification hooks: run model outputs through deterministic checks (unit tests, regex validators, type checks).

- Observability: collect metrics — tokens/request, p95 latency, error rates, hallucination incidents, and verification failure counts.
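The grounding prompt and a deterministic verification hook can be sketched together. This is a minimal illustration under stated assumptions: retrieved chunks are held as a dict mapping a document ID to its lines, and the helper names `build_grounded_prompt` and `verify_citations` are hypothetical. The check confirms only that each citation points at a real line, not that the line supports the claim.

```python
import re

# Matches citations such as [policy_7: 2]; the line part must be numeric.
CITATION = re.compile(r"\[([A-Za-z0-9_-]+):\s*(\d+)\]")

def build_grounded_prompt(question: str, chunks: dict[str, list[str]]) -> str:
    """Number every retrieved line so the model can cite it as [DOC_ID: line]."""
    parts = ["Answer using only the sources below. "
             "Cite the source with [DOC_ID: line]."]
    for doc_id, lines in chunks.items():
        for number, line in enumerate(lines, start=1):
            parts.append(f"[{doc_id}: {number}] {line}")
    parts.append(f"Question: {question}")
    return "\n".join(parts)

def verify_citations(answer: str, chunks: dict[str, list[str]]) -> list[str]:
    """Return a list of problems; an empty list means every citation resolves."""
    problems = []
    citations = CITATION.findall(answer)
    if not citations:
        problems.append("no citations found")
    for doc_id, line_no in citations:
        lines = chunks.get(doc_id)
        if lines is None:
            problems.append(f"unknown document {doc_id}")
        elif not 1 <= int(line_no) <= len(lines):
            problems.append(f"{doc_id} has no line {line_no}")
    return problems

if __name__ == "__main__":
    sources = {"policy_7": ["Refunds are issued within 14 days.",
                            "Shipping is free over $50."]}
    print(build_grounded_prompt("How long do refunds take?", sources))
    print(verify_citations("Refunds take 14 days [policy_7: 1].", sources))
```

Answers that fail the check can be retried, routed to human review, or counted as verification failures in your telemetry.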

Gemini 3 Pro in Action — Top 10 Use Cases & ROI Insights

- E-commerce product enrichment — images + specs → SEO descriptions. ROI: faster listings, higher conversion.

- Document intelligence (legal/compliance) — clause extraction, due dates, redactions. ROI: reduce manual review hours.

- Support screenshot triage — analyze UI screenshots → triage steps. ROI: faster first-response times.

- Media summarization — chaptered lecture/podcast summaries. ROI: easier content repurposing.

- Healthcare research (compliant flows) — structured extraction from reports (non-PHI or with compliant pipelines). ROI: faster cohort creation for studies.

- Enterprise cross-media search — unified search over docs, images, and video. ROI: reduced time-to-insight.

- Robotics & spatial reasoning — interpret maps, images → action steps. ROI: safer, faster navigation.

- Design → code — screenshot → responsive UI skeleton. ROI: faster prototypes.

- Insurance/forensics — auto-fill claims from images + PDFs. ROI: reduced processing times.

- Education — transform recordings into quizzes/flashcards. ROI: faster course creation.

Choose the use case where multimodal reasoning adds disproportionate value relative to cost.

Gemini 3 Pro vs competitors

High-level comparison (qualitative):

- Multimodal vision + long video: Gemini 3 Pro — strong. GPT-family — improving but varies; Anthropic — focused on safety, improving multimodal.

- Reasoning benchmarks: Gemini 3 family reports top-tier performance on some leaderboards; reproduction is essential.

- Pricing complexity: token-based with caching/grounding complexity; comparable to other vendors, but specifics differ.

Recommendation: Use Gemini 3 Pro when you need fused multimodal reasoning and can tolerate higher token spend in exchange for improved performance. For text-only large-scale low-latency workloads, consider lighter models or specialized classifiers.

When not to use Gemini 3 Pro (limitations & safety)

- Ultra-low latency microservices (50–200ms) — lightweight specialized models may be cheaper/faster.

- Very high-volume trivial tasks — simple classifiers or distilled models will be more cost-effective.

- On-premise-only mandates — unless the vendor offers private hosting, cloud-only models may not meet requirements.

- Zero-hallucination settings — always pair with RAG, tool verification, or humans in the loop for high-risk outputs.

Implementation checklist

- Run the 10-test suite on real samples.

- Build a 3–6 month cost projection using actual telemetry.

- Add RAG + tool verification + human review where needed.

- Implement streaming and fallback to lighter models for spiky traffic.

- Canary deploy and monitor p95 latency, tokens/request, error rates, and hallucination incidents.

- Audit for PII/PHI compliance and regional data residency laws.

- Build rollback and surge scaling strategies.
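The fallback item in the checklist can be sketched as a small routing wrapper. This assumes both models are exposed as plain callables that take a prompt and return text; `call_with_fallback` and the stub functions are hypothetical names, not part of any SDK.

```python
from typing import Callable

def call_with_fallback(prompt: str,
                       primary: Callable[[str], str],
                       fallback: Callable[[str], str],
                       max_attempts: int = 2) -> tuple[str, str]:
    """Try the primary model up to max_attempts times, then the lighter fallback.

    Returns (text, model_used) so telemetry can record which path served the call.
    """
    for _ in range(max_attempts):
        try:
            return primary(prompt), "primary"
        except Exception:
            continue  # retry; a real system would log the error and back off
    try:
        return fallback(prompt), "fallback"
    except Exception as exc:
        raise RuntimeError("primary and fallback models both failed") from exc

if __name__ == "__main__":
    def overloaded(prompt: str) -> str:  # stub simulating a saturated endpoint
        raise TimeoutError("simulated timeout")

    def light_model(prompt: str) -> str:  # stub for a cheaper model
        return "short answer to: " + prompt

    print(call_with_fallback("Summarize the report", overloaded, light_model))
```

Recording the `model_used` value alongside tokens/request makes it easy to see how often spiky traffic pushes calls onto the fallback path.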

Practical publishing assets

To outrank competitors, publish reproducible assets:

- Reproducible test-suite CSV with prompts, inputs, and expected outputs.

- An interactive pricing calculator that takes token counts and returns monthly costs.

- Prompt pack: 20 tested prompts per vertical.

- Comparative decision table with apples-to-apples results.

- Case studies with quantified ROI and methodology.

- Downloadable templates: JSON schemas, prompt templates, and telemetry dashboard.

These assets address reproducibility, pricing clarity, and vertical prompts, which are often missing from vendor pages.
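One possible layout for the test-suite CSV is sketched below using Python's standard `csv` module. The column names in `FIELDS` are an assumption chosen to match "prompts, inputs, and expected outputs"; adjust them to your own suite.

```python
import csv
import io

# Hypothetical column layout: one row per test case.
FIELDS = ["test_id", "prompt", "input_ref", "expected_output"]

def write_suite(rows: list[dict], handle) -> None:
    """Write test cases with a header row so the file is self-describing."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)

def read_suite(handle) -> list[dict]:
    """Load test cases and fail loudly if a required column is missing."""
    reader = csv.DictReader(handle)
    missing = set(FIELDS) - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    return [dict(row) for row in reader]

if __name__ == "__main__":
    cases = [{
        "test_id": "T01",
        "prompt": 'Extract the due date, formatted as "YYYY-MM-DD"',
        "input_ref": "contracts/sample_01.pdf",
        "expected_output": "2025-03-31",
    }]
    buffer = io.StringIO()
    write_suite(cases, buffer)
    buffer.seek(0)
    print(read_suite(buffer))
```

When writing to a real file rather than a `StringIO` buffer, open it with `newline=""` as the `csv` module documentation recommends.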

Gemini 3 Pro Pricing: Compare Scenarios Before You Buy

| Scenario | Requests/month | Avg input tokens | Avg output tokens | Est. monthly cost (example rates) |
| --- | --- | --- | --- | --- |
| Small pilot | 5,000 | 300 | 800 | ≈ $15–$45 |
| Medium app | 50,000 | 400 | 1,200 | ≈ $300–$1,200 |
| Enterprise | 1,000,000 | 400 | 1,200 | ≈ $6,000–$24,000+ |

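These ranges are illustrative and do not follow from the worked example’s rates, which would give lower totals. Replace them with calculations using official per-1M-token prices, and include caching/grounding fees if used.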
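To turn the scenario rows into computed figures, a short loop is enough. The sketch below reuses the volumes from the table and takes the rates as arguments; `SCENARIOS` and `scenario_costs` are hypothetical names. With the worked example's rates ($0.30 input, $2.50 output per 1M tokens) it gives about $10.45, $156, and $3,120, which is below the table's ranges, so those ranges presumably assume higher rates.

```python
# (requests/month, avg input tokens, avg output tokens), taken from the table.
SCENARIOS = {
    "Small pilot": (5_000, 300, 800),
    "Medium app": (50_000, 400, 1_200),
    "Enterprise": (1_000_000, 400, 1_200),
}

def scenario_costs(input_rate_per_m: float, output_rate_per_m: float) -> dict:
    """Monthly cost per scenario for the given per-1M-token rates."""
    costs = {}
    for name, (requests, avg_in, avg_out) in SCENARIOS.items():
        per_request = avg_in * input_rate_per_m + avg_out * output_rate_per_m
        costs[name] = round(requests * per_request / 1_000_000, 2)
    return costs

if __name__ == "__main__":
    # Example rates only; substitute official pricing for your tier.
    for name, cost in scenario_costs(0.30, 2.50).items():
        print(f"{name}: ${cost:,.2f}")
```

Running it with a low and a high rate pair is a quick way to produce a defensible range for each row.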

Pros & Cons

Pros

- State-of-the-art multimodal reasoning and vision.

- Rich ecosystem (Gemini app, AI Studio, Vertex).

- Published benchmark leadership on complex reasoning.

Cons

- Higher cost and latency relative to compact models.

- Need to independently reproduce vendor benchmarks.

- Some advanced tiers may be region-limited or gated.

FAQs

Q: How much does Gemini 3 Pro cost?

A: Token-based pricing varies by tier; consult the official pricing page for exact per-1M-token rates and any grounding or caching fees.

Q: Is Gemini 3 Pro the right choice for every task?

A: No. Use it when multimodal reasoning and long-context vision matter; for low-cost text-only tasks, lighter models may be better.

Q: How do I reduce hallucinations?

A: Use strict output schemas, retrieval-augmented generation, verification steps, and human review for high-risk fields.

Q: How do I access Gemini 3 Pro?

A: Via Google consumer subscriptions (AI Pro / Ultra) and programmatic APIs (API / Vertex). Verify availability for your region and tier.

Conclusion

Gemini 3 Pro represents a significant step for multimodal NLP systems: fused vision-language embeddings, extended context, and improved reasoning. For procurement, convert vendor claims into reproducible tests: run the 10-test suite, compute real token costs from telemetry, and instrument verification layers. Start with a focused pilot on one high-value use case, iterate on prompts and caching strategies, and scale with canary releases and fallback models.