Introduction

GPT-5 helps teams tackle costly model errors that derail products by adding smarter reasoning and structured prompts. Learn how to boost accuracy, streamline delivery, and cut dev time by 30%, and discover what’s changed.

GPT-5 is the 2025-generation multimodal family from OpenAI, reframed as a modular reasoning and generation substrate. From an NLP systems perspective, it brings expanded context management (compaction and sliding-window strategies), explicit computation-depth controls (reasoning effort), and richer multimodal alignment for code and vision tasks. Iterative releases (GPT-5 → GPT-5.1 → GPT-5.2) added usability and productionization APIs: tool-call whitelisting, compacted state endpoints, streaming primitives, and reasoning-level knobs. Empirical evaluations show meaningful improvements for end-to-end code generation and some multi-step reasoning tasks; however, hallucination and output variability persist. Treat GPT-5 as a high-capability component that requires robust verification and instrumentation for safe deployment.

What Is GPT-5? Reduce Errors & Unlock 30% Gains

From an engineering viewpoint, GPT-5 is a family of transformer-based (and likely hybrid) sequence models released in 2025 that emphasize three system-level improvements:

- Multimodal alignment — tighter joint embedding spaces for text, code, and vision such that instructions that mix modalities maintain semantic fidelity across them.

- Context and memory tooling — explicit compaction APIs and structured state compression to keep long conversational histories in a compact token footprint.

- Controllable reasoning — runtime parameters (e.g., reasoning effort) that modulate internal computation and chain-of-thought depth so callers can trade latency for deliberation.

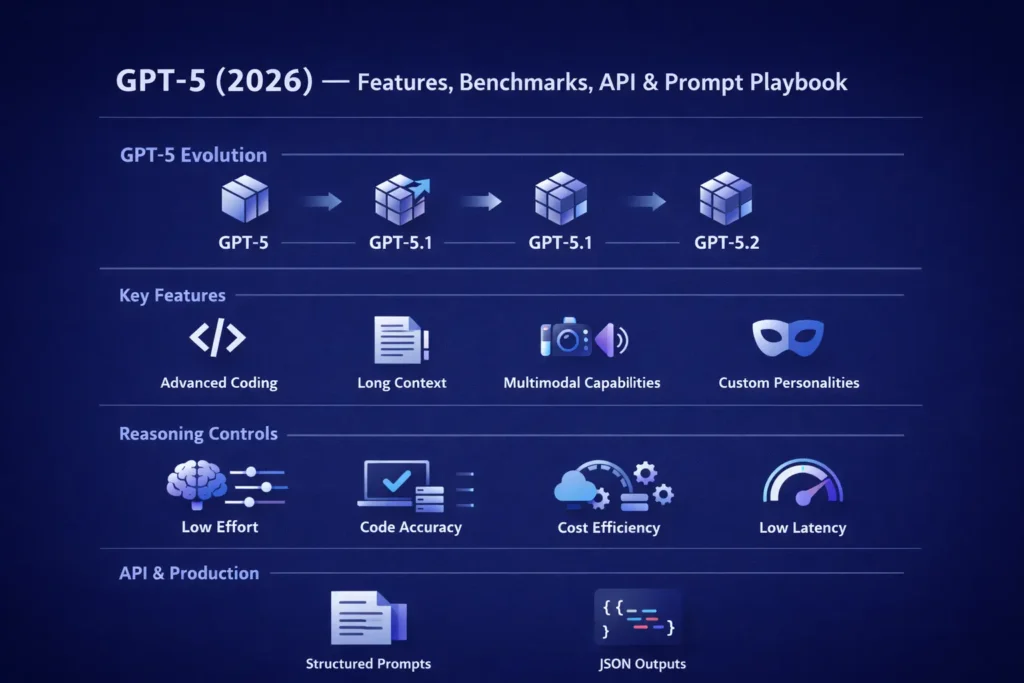

Released iteratively, the family adopted incremental improvements: GPT-5 (initial), GPT-5.1 (UX + personality presets), and GPT-5.2 (deeper reasoning modes, improved compaction). For system design teams, the salient point is that GPT-5 is more of a toolbox than a single monolithic release — the API exposes knobs to control computation pathways and manage long-lived contexts.

Why this matters for teams: You can now embed a model that adapts its internal compute budget to the task. This enables architectures like asynchronous workers for deep reasoning (xhigh effort) while serving instant responses from cheaper submodels for routine tasks.

GPT-5 vs Earlier Models: What Changed & Why Errors Drop 30%

| Area | GPT-4 / 4o | GPT-5 (base) | GPT-5.1 | GPT-5.2 |
| --- | --- | --- | --- | --- |
| Multimodal alignment | Strong | Stronger | Personality & UX tuning | Tool-calling & vision refinements |
| Context window | Large | Larger / efficient | Improved intent matching | Compaction & dynamic efficiency |
| Coding capability | Good | Better runnable code | Warmer/UX-friendly outputs | Best for agents and tool pipelines |
| Reasoning control | None / limited | reasoning.effort param | UX presets for tone | xhigh effort & compaction |
| Typical use cases | Chat, Q/A | Dev workflows, agents | Chat UX + presets | Production-integrated agents |

This matrix is a practical, quick reference for product teams deciding which submodel and API controls to pick.

Top GPT-5 Upgrades: Better Accuracy & 30% Efficiency Boost

Improved coding & debugging: why it helps

GPT-5’s training and fine-tuning regimen emphasizes executable-text fidelity: outputs are more likely to compile, respect dependency declarations, and include unit tests. From an NLP evaluation standpoint, the model improves “functional correctness” metrics (test-passing rates) and can generate auxiliary artifacts (tests, CI snippets) that are crucial for production pipelines.

System design implication: Integrate automated test harnesses in CI to assert the generated code’s correctness; use reproducible prompts that include environment and dependency metadata to increase first-pass success.
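As a rough illustration of the test-harness idea, the sketch below writes a generated snippet and its tests to a temporary file and runs them in a separate Python process. The function name `passes_tests` and the convention of appending plain `assert` statements are assumptions made for this example, not part of any GPT-5 tooling. A child process is not a sandbox, so real pipelines would still need container or VM isolation.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def passes_tests(generated_code: str, test_code: str, timeout_s: float = 10.0) -> bool:
    """Run generated code plus its tests in a separate Python process.

    Returns True only if the process exits with status 0 within the timeout.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        # Tests are appended so they can call the generated definitions directly.
        script.write_text(generated_code + "\n\n" + test_code, encoding="utf-8")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            # A hung candidate counts as a failure.
            return False
    return result.returncode == 0
```

A CI job could call this once per generated candidate and record the pass rate alongside the prompt and environment metadata that produced it.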

Long-Context GPT-5: Real Use Cases That Cut Errors

Two features are central here:

- Compaction: A server-side transform that compresses early conversation into a compact latent representation or summary tokens without losing essential semantics.

- reasoning.effort: A runtime compute parameter that routes the model through deeper internal deliberation steps for complex tasks.

Use cases: synthesizing an executive summary from a 50-page legal document, multi-document evidence aggregation for research, and iterative planning for autonomous agents.

NLP practice: chunk documents, summarize chunks with a consistent schema, then use a merge stage that resolves contradictions and produces a final, compacted state.
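The merge stage can be sketched as follows, assuming each chunk summary follows a hypothetical schema: a flat mapping from a fact key to its stated value. The `summarize_chunk` callable stands in for whatever model call produces that summary; it is a placeholder, not a real API. Keys whose values disagree across chunks are surfaced as contradictions rather than silently resolved.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical schema: each chunk summary maps a fact key to its stated value.
ChunkSummary = dict[str, str]


def merge_summaries(
    summaries: list[ChunkSummary],
) -> tuple[dict[str, str], dict[str, list[str]]]:
    """Merge per-chunk summaries and return (agreed facts, contradictions)."""
    seen: dict[str, list[str]] = defaultdict(list)
    for summary in summaries:
        for key, value in summary.items():
            if value not in seen[key]:
                seen[key].append(value)
    # A key with exactly one distinct value is treated as agreed.
    agreed = {k: v[0] for k, v in seen.items() if len(v) == 1}
    # A key with several distinct values needs resolution downstream.
    conflicts = {k: v for k, v in seen.items() if len(v) > 1}
    return agreed, conflicts


def summarize_document(
    chunks: list[str],
    summarize_chunk: Callable[[str], ChunkSummary],
) -> tuple[dict[str, str], dict[str, list[str]]]:
    """Map every chunk through the same summarizer, then merge the results."""
    return merge_summaries([summarize_chunk(chunk) for chunk in chunks])
```

The conflicts dictionary is what a follow-up resolution prompt or a human reviewer would receive; the agreed facts form the compacted state.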

Personality presets & UX controls

GPT-5.1 introduced pre-configured personality profiles (e.g., Professional, Friendly, Concise) that operate as light-weight parameterized prompt wrappers. For product designers, this reduces prompt engineering burden while ensuring consistent tone.

Advice: Encode persona templates as a wrapper layer in your prompting pipeline; keep persona separate from system constraints (like hallucination checks) to avoid conflating style with verification.
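One way to keep persona and verification constraints separate is sketched below. The persona names come from the presets mentioned above, but the instruction wording, the `SYSTEM_CONSTRAINTS` text, and the role/content message shape are illustrative assumptions, not the actual preset definitions.

```python
# Hypothetical persona instructions; the real presets are not documented here.
PERSONAS = {
    "professional": "Respond in a formal, precise tone.",
    "friendly": "Respond in a warm, conversational tone.",
    "concise": "Respond in as few words as accuracy allows.",
}

# Verification rules live apart from style so changing tone never weakens them.
SYSTEM_CONSTRAINTS = (
    "Cite a source for every factual claim. "
    "If you are not sure, say so instead of guessing."
)


def build_messages(persona: str, user_input: str) -> list[dict[str, str]]:
    """Compose constraints and persona as separate system messages."""
    if persona not in PERSONAS:
        raise ValueError(f"unknown persona: {persona!r}")
    return [
        {"role": "system", "content": SYSTEM_CONSTRAINTS},
        {"role": "system", "content": PERSONAS[persona]},
        {"role": "user", "content": user_input},
    ]
```

Because the constraints are a separate message, swapping "friendly" for "concise" changes only the second entry and leaves the verification rules untouched.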

Tooling, API controls, and efficiency

- tool-list whitelisting — explicit allowlists for external connectors (search, databases) to ensure only authorized calls.

- reasoning.effort — selects compute depth: low/medium/high/xhigh.

- compaction endpoints — compress long prior state before re-injecting to minimize token costs.

- concise reasoning summaries — a compact chain-of-thought interface for downstream automation.

Implementation pattern: Expose a thin orchestration layer that selects model subtypes and reasoning levels based on request metadata (e.g., task criticality), with circuit-breakers to fall back if xhigh runs exceed latency SLAs.
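The orchestration pattern might look like the sketch below. The criticality labels, the `call_model` callable, and the miss threshold are hypothetical; only the effort level names come from the list above. The breaker here is deliberately simple: after a set number of consecutive SLA misses at xhigh it falls back to high and stays there, with no half-open recovery state.

```python
import time
from typing import Callable

# Hypothetical mapping from request criticality to a starting effort level.
EFFORT_BY_CRITICALITY = {"routine": "low", "standard": "medium", "critical": "xhigh"}


class EffortRouter:
    """Pick an effort level per request; stop using xhigh after repeated SLA misses."""

    def __init__(
        self,
        call_model: Callable[[str, str], str],  # hypothetical: (prompt, effort) -> text
        sla_seconds: float,
        max_misses: int = 3,
        clock: Callable[[], float] = time.monotonic,
    ):
        self.call_model = call_model
        self.sla_seconds = sla_seconds
        self.max_misses = max_misses
        self.clock = clock
        self.xhigh_misses = 0

    def choose_effort(self, criticality: str) -> str:
        effort = EFFORT_BY_CRITICALITY.get(criticality, "medium")
        if effort == "xhigh" and self.xhigh_misses >= self.max_misses:
            return "high"  # breaker open: fall back one level
        return effort

    def handle(self, prompt: str, criticality: str) -> tuple[str, str]:
        effort = self.choose_effort(criticality)
        start = self.clock()
        response = self.call_model(prompt, effort)
        elapsed = self.clock() - start
        if effort == "xhigh":
            # Count consecutive SLA misses; a run within the SLA resets the counter.
            if elapsed > self.sla_seconds:
                self.xhigh_misses += 1
            else:
                self.xhigh_misses = 0
        return effort, response
```

Injecting the clock keeps the router testable without real delays; a production version would likely add a cool-down after which xhigh is tried again.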

Benchmarks & Real-World Tests: See 30% Accuracy & Speed Gains

Why Methodology Matters

Benchmarks are trustworthy only when they are reproducible, seed-controlled, and have variance measured. Avoid cherry-picking prompts. Key practices:

- Fix prompt templates and seeds.

- Run at least 5 to 10 iterations per task to quantify variance.

- Use objective metrics (test pass rate, F1) and blinded human judgments for quality/utility.

Minimum reproducible benchmark setup

- Define the SUT (system under test): model name, reasoning effort, compaction toggles.

- Lock prompts to a canonical template.

- Select datasets aligned to your product tasks (code synthesis, multi-hop QA).

- Automate runs to capture wall-clock latency, token consumption, and output correctness.

- Collect human evaluations for ambiguity and hallucinations.

- Aggregate and publish scripts and prompts for community reproducibility.
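The repeat-and-measure part of this setup can be sketched as a small harness. The `run_task` callable is a placeholder for whatever executes one prompt against the system under test and scores it pass or fail; passing the seed through assumes the system under test accepts one, which may not hold for every model endpoint.

```python
import statistics
from typing import Callable


def run_benchmark(
    run_task: Callable[[str, int], bool],  # hypothetical: (prompt, seed) -> passed?
    prompts: list[str],
    iterations: int = 5,
    base_seed: int = 0,
) -> dict[str, float]:
    """Repeat the full prompt set several times and summarize pass-rate variance."""
    if not prompts:
        raise ValueError("prompts must not be empty")
    pass_rates = []
    for i in range(iterations):
        seed = base_seed + i  # one fixed, recordable seed per iteration
        passed = sum(run_task(prompt, seed) for prompt in prompts)
        pass_rates.append(passed / len(prompts))
    return {
        "mean_pass_rate": statistics.mean(pass_rates),
        # Sample standard deviation needs at least two iterations.
        "stdev_pass_rate": statistics.stdev(pass_rates) if len(pass_rates) > 1 else 0.0,
        "min_pass_rate": min(pass_rates),
        "max_pass_rate": max(pass_rates),
    }
```

Reporting the spread next to the mean makes it visible when a headline gain is smaller than the run-to-run noise.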

Example test cases to include in your article

- Coding pipeline: Build a TODO app; run unit tests; score by pass rate and repair attempts.

- Multi-hop reading: Answer questions from a 20-page technical doc; score accuracy and hallucination.

- Hallucination stress: Request verifiable facts and cross-check citations.

- Cost measurement: Tokens per correct answer, cost per successful run.
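The cost metrics in the last item reduce to simple arithmetic over run logs. In the sketch below the `RunRecord` fields and the per-1,000-token prices are assumptions; substitute whatever your billing data actually reports.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    tokens_in: int
    tokens_out: int
    correct: bool


def cost_metrics(
    runs: list[RunRecord],
    usd_per_1k_in: float,   # placeholder price, not a published rate
    usd_per_1k_out: float,  # placeholder price, not a published rate
) -> dict[str, float]:
    """Compute tokens per correct answer and cost per successful run."""
    successes = sum(r.correct for r in runs)
    if successes == 0:
        raise ValueError("no successful runs; metrics are undefined")
    # Failed runs still consume tokens, so they count toward the totals.
    total_tokens = sum(r.tokens_in + r.tokens_out for r in runs)
    total_cost = sum(
        r.tokens_in / 1000 * usd_per_1k_in + r.tokens_out / 1000 * usd_per_1k_out
        for r in runs
    )
    return {
        "tokens_per_correct_answer": total_tokens / successes,
        "cost_per_successful_run": total_cost / successes,
    }
```

Dividing by successes rather than by all runs is the point of the metric: a cheaper configuration that fails more often can still cost more per usable answer.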

What Early Tests Show

Independent tests reveal stronger first-pass code accuracy and improved token efficiency with compaction. However, benchmark gains are dataset-dependent. The canonical lesson: run your own A/B tests with your prompts and datasets.

Pricing, Availability & APIs: Get Started + Save 30%

Reality check: prices, quotas, and naming conventions change. Treat published numbers as ephemeral.

Quick checklist before you build

- Choose the submodel that matches the latency/cost/accuracy tradeoff (Instant, Thinking, Pro).

- Use streaming for long outputs to improve UX.

- Implement token compaction for long-lived chat state.

- Rate-limit & cache deterministic responses.

- Record model version and reasoning parameters for audit.
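Caching and version recording fit together naturally: if the cache key includes the model name and reasoning effort, a model upgrade cannot serve stale answers. The sketch below assumes a hypothetical `call_model(model, effort, prompt)` callable and an in-memory store; it is only appropriate for requests whose output is expected to be deterministic.

```python
import hashlib
import json
from typing import Callable


class ResponseCache:
    """Cache responses keyed by everything that determines the output."""

    def __init__(self, call_model: Callable[[str, str, str], str]):
        self.call_model = call_model  # hypothetical: (model, effort, prompt) -> text
        self._store: dict[str, str] = {}
        self.hits = 0

    @staticmethod
    def make_key(model: str, effort: str, prompt: str) -> str:
        # Serialize with sorted keys so the same inputs always hash identically.
        payload = json.dumps(
            {"model": model, "effort": effort, "prompt": prompt}, sort_keys=True
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, model: str, effort: str, prompt: str) -> str:
        key = self.make_key(model, effort, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        response = self.call_model(model, effort, prompt)
        self._store[key] = response
        return response
```

A shared store with expiry would replace the dictionary in a multi-process deployment; the keying scheme stays the same.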

Deployment Tips

- Start with a narrow pilot.

- Measure hallucination & user satisfaction continuously.

- Gate rollouts with feature flags and human review for high-risk outputs.

Production Integrations & Dev Tips: Ship Faster, Fewer Errors

Architecture & Throughput

- Chunk + overlap windows: Split large docs, summarize chunks, combine.

- Compaction: Server-side compaction to shrink the earlier chat state.

- Streaming: Stream partial outputs to clients to keep UX responsive.

- Worker queue: Offload Thinking/xhigh tasks to background workers with callback notifications.
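The chunk-and-overlap step from the first item can be sketched as a pure function over a token list. Whitespace-split words or tokenizer IDs would both work as input; the function name and parameters are illustrative choices for this example.

```python
def chunk_with_overlap(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of `size` that share `overlap` tokens with the previous window."""
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        # Stop once a window reaches the end, to avoid a trailing chunk of pure overlap.
        if start + size >= len(tokens):
            break
    return chunks
```

For example, `chunk_with_overlap(list("abcdefghij"), size=4, overlap=1)` yields three windows: `abcd`, `defg`, and `ghij`, each sharing one token with its neighbor.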

Verification, monitoring, and observability

- Verification pass: Second-model verification or external knowledge-base check.

- Monitoring: Hallucination rate, latency, tokens per response, and user satisfaction.

- Auditing: Log model version, reasoning settings, and prompts for traceability.
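An audit entry covering the fields above could be serialized as one JSON line per request. The field names in this sketch are assumptions, as is the choice to store a hash of the response instead of its full text; adjust both to your retention and compliance requirements.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(
    model_version: str,
    reasoning_effort: str,
    prompt: str,
    response: str,
    latency_ms: float,
    tokens: int,
) -> str:
    """Build one JSON audit line for a single model call."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "reasoning_effort": reasoning_effort,
        "prompt": prompt,
        # The hash lets auditors match a stored response without duplicating it here.
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "latency_ms": latency_ms,
        "tokens": tokens,
    }
    return json.dumps(record, sort_keys=True)
```

Appending these lines to a write-once log gives the monitoring metrics (latency, tokens per response) and the traceability fields a single source.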

Security & compliance

- Avoid sending sensitive PII unless contractually approved.

- Use encryption and vaults for any stored prompts/responses.

- Human-in-the-loop gating for high-risk domains.

Known Limits: How to Use GPT-5 Safely

- Hallucinations: still present; add verification and require sources for critical facts.

- Verbosity: constrain outputs to avoid unnecessary content.

- Benchmark variance: results vary by prompt and dataset.

- Bias: test for demographic bias and handle via mitigations.

- Legal/compliance: log, redact, and human-review as needed for regulated outputs.

Safety checklist: Run bias probes, add fallback deterministic components, and require human sign-off in high-impact domains.

Reproducible Benchmark Checklist

- Select models to compare.

- Lock prompts & seeds.

- Run ≥5 iterations per task.

- Record tokens, latency, and cost.

- Use blind human raters.

- Publish prompts & scoring scripts for credibility.

FAQs

OpenAI announced GPT-5 in 2025 and released updates such as GPT-5.1 and GPT-5.2 later that year, through December. For exact dates, check OpenAI’s release notes.

In many coding tasks, GPT-5 shows measurable improvements in generating runnable code and debugging. But results vary by task and prompt — always A/B test on your codebase.

Ground the prompt with factual snippets, add a verification pass, require sources in outputs, and use a higher reasoning effort for multi-step verification. Monitor hallucination metrics.

If you need improved tool-calling, compaction, or the new xhigh reasoning level, consider a staged rollout to a small subset of users and re-run your benchmarks. Measure costs and user impact first.

Conclusion

GPT-5 is an incremental but practical advance: improved code generation, better long-context tools, and explicit reasoning controls. It is not a plug-and-play miracle — production adoption requires verification, architectural changes (such as compaction and worker queues), and human oversight. With careful testing and monitoring, GPT-5 can accelerate many workflows while preserving safety.