Introduction

GPT-4.1 Mini is the latency- and compute-optimized member of the GPT-4.1 model family. It retains the family's instruction-following and code-synthesis strengths while trading some capacity for lower inference latency and lower token cost. Crucially, the whole family supports context windows of roughly 1,000,000 tokens. That makes single-shot or few-shot reasoning possible over documents measured in hundreds of thousands of words. Use Mini as your default inference engine for high-throughput applications (conversational agents, code assistants, RAG pipelines). Escalate to full GPT-4.1 for tasks that require peak multi-step reasoning or the most demanding creative work. Where risk is material, keep an escalation or fallback channel.

GPT-4.1 Mini: Who Should Really Be Using It?

From an NLP-engineering perspective, GPT-4.1 Mini is the pragmatic inference model for production systems that prioritize throughput, latency, and token efficiency while retaining robust instruction-following and code generation. If your system’s success metrics are dominated by p95 latency, requests-per-second, and monthly token spend, Mini is the right candidate for an A/B rollout.

Use Mini when:

- You need low-latency interactive agents (UI chat, code helpers) where response time drives engagement.

- You run retrieval-augmented generation (RAG) systems and prefer to consolidate more retrieved context into a single forward pass (fewer RPCs).

- Your app issues many micro-inference steps (agentic workflows). Mini’s lower per-call cost and latency reduce the cost and time of multi-step plans.

- You need highly scalable base inference for non-critical outputs and want to reserve larger siblings for flagged high-risk items.

Don’t use Mini when:

- The task is high-stakes and the final answer requires the strongest available reasoning from the largest model (e.g., final legal opinions, high-impact scientific claims).

- You rely on top-end multi-hop reasoning across many implicit assumptions. The full GPT-4.1 can produce somewhat better reasoning in such cases.

Why: Mini gives up some top-end capability in exchange for lower compute and latency. This is an intentional capacity/performance tradeoff that is common in production model design. In practice, hybrid routing (Mini by default, escalating when needed) usually gives the best cost/accuracy balance.

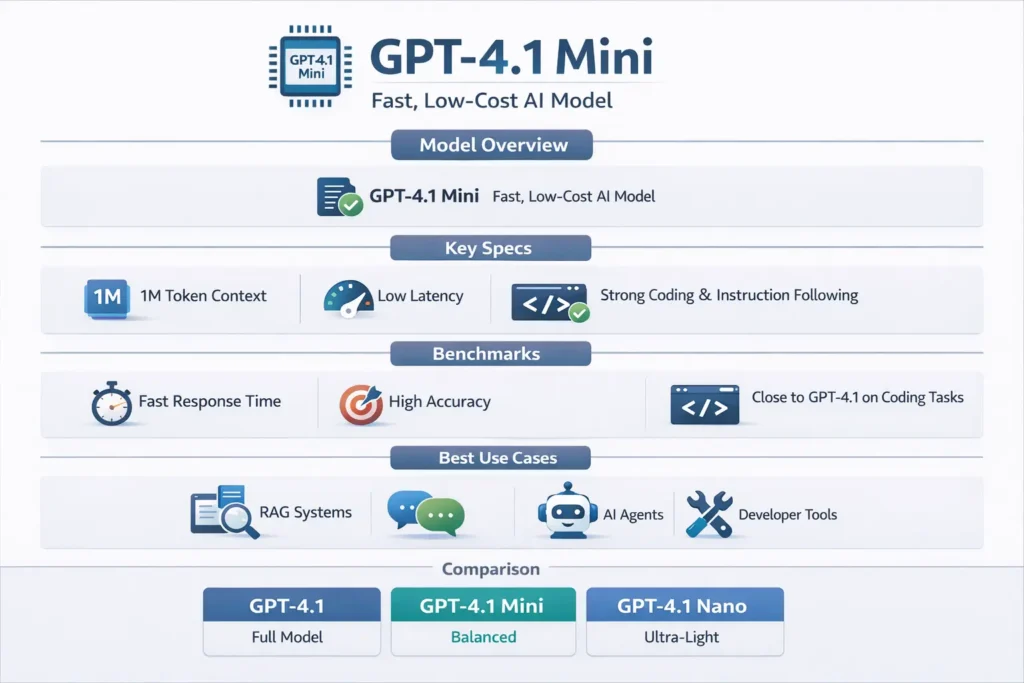

GPT-4.1 Mini: What It Is and Its Key Specs at a Glance

Model family: GPT-4.1 (variants: full, Mini, Nano) — an instruction-tuned transformer series.

Context window (capability): ~1,000,000 tokens (model-level receptive field; actual API/request limits may be lower).

Knowledge cutoff: Static snapshot (circa mid-2024 for the family — always verify your deployment’s docs).

Strengths (NLP terms): Instruction-following, code generation, token-efficient summarization, tool-calling compatibility, large-context integration.

Architectural intuition: OpenAI has not published Mini's architecture or parameter count. A reasonable mental model is a capacity-reduced variant, possibly produced through distillation or parameter-efficient techniques. Such a variant keeps instruction-following fidelity while cutting per-token compute, for example through reduced transformer depth/width, quantization, or optimized attention kernels. The practical consequence is lower FLOPs per token and lower latency for the same prompt length.

How the family reaches a 1M-token window: OpenAI has not documented the mechanism. Publicly known long-context techniques fall into two groups:

- Positional schemes that scale beyond earlier window sizes, such as rotary (RoPE) variants and ALiBi-like biases.
- Attention optimizations, such as sparse, chunked, or memory-efficient attention, that make very long sequences tractable.

Whatever the implementation, the result is that a single forward pass can condition on far more sequence context than earlier models allowed.

Practical constraints: “1M tokens” is the model's capability, not necessarily the limit your API path accepts. Infrastructure (API gateways, request body size, SDK limits) can impose stricter maxima. Compute and latency also scale with tokens, so sending the full million on every call is rarely efficient.
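A cheap pre-flight check can catch oversized requests before they hit a gateway limit. The sketch below rests on two assumptions:

- It estimates tokens with a rough ~4 characters-per-token rule of thumb for English text. Swap in your provider's tokenizer for exact counts.
- The `request_limit_tokens` parameter stands for whatever effective limit your own deployment enforces.

The function names are hypothetical.

```python
import math

# Heuristic assumption: ~4 characters per token for English prose.
# Replace with your provider's tokenizer for exact counts.
CHARS_PER_TOKEN = 4.0
MODEL_CONTEXT_TOKENS = 1_000_000  # family-level capability (approximate)


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character length (heuristic, not exact)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_budget(prompt: str, max_output_tokens: int,
                request_limit_tokens: int = MODEL_CONTEXT_TOKENS,
                safety_margin: float = 0.9) -> bool:
    """True if the estimated prompt plus reserved output fits under the limit.

    request_limit_tokens should be the effective limit of *your* deployment
    (gateway, SDK, plan), which may be lower than the model's capability.
    safety_margin leaves headroom because the estimate is approximate.
    """
    needed = estimate_tokens(prompt) + max_output_tokens
    return needed <= int(request_limit_tokens * safety_margin)


if __name__ == "__main__":
    doc = "x" * 400_000  # ~100k tokens under the 4-chars heuristic
    print(fits_budget(doc, max_output_tokens=2_000))             # True
    print(fits_budget(doc, 2_000, request_limit_tokens=50_000))  # False
```

Reserving output tokens up front matters because the completion counts against the same context budget as the prompt.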

Summary: think of Mini as the scaled-down transformer variant designed to be your production workhorse: cheaper per token, faster per token, and still strong on standard NLP tasks (classification, generation, summarization, code), with the family’s large context capability intact.

GPT-4.1 Mini: What a 1M-Token Window Really Means

Token-level intuition: A token is a subword unit, so 1,000,000 tokens is on the order of hundreds of thousands of words. For English text, that works out to roughly 600k–800k words, depending on the tokenizer and the text. Practically, you can feed entire long-form corpora (books, collections of manuals, months of chat logs) into one model context.

What This Capability Enables:

- Single-call long-document summarization: Instead of iterative chunk + synthesize pipelines, you can, in principle, place massive documents into one forward pass for global compression and summarization.

- Large RAG contexts: Embed a much larger set of retrieved documents inline, reducing the number of retrieval calls and orchestration complexity.

- Long-session memory: Keep a long user history in-context for personalization without fetching external state on each turn (but be mindful of cost).

Practical Tips and Pitfalls:

- Don’t dump everything. More context can dilute signal. Use retrieval quality metrics (embedding cosine scores) to select the most relevant passages; quality beats quantity.

- Chunk & synthesize often wins. Even with 1M tokens, staged summarization (chunk -> extract -> synthesize) tends to produce clearer outputs because it imposes structure on the model’s reasoning.

- Add IDs & metadata. Pass chunk IDs and source metadata (doc name, URL, timestamp) so the model can return traceable citations.

- Compression & summaries. Pre-compress repeated or low-value text (e.g., logs) into semantic summaries to reduce token usage.

- API constraints & engineering. Check the API’s content-length limits and gateway timeouts — model capability does not nullify operational limits.

- Verification loop. For critical outputs, perform a verification pass (e.g., a smaller model checking key facts, unit tests for generated code).
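The "don't dump everything" and "add IDs & metadata" tips can be combined into one selection step. The sketch below makes these assumptions:

- Embeddings are already computed by whatever embedding model you use.
- The `Chunk` type and the `k`/`min_score` defaults are hypothetical placeholders to tune against your own retrieval-quality metrics.

The code keeps the top-K passages by cosine similarity, drops weak matches, and renders each survivor with its chunk ID and source so the model can cite it.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    chunk_id: str            # stable ID the model can cite (hypothetical format)
    source: str              # doc name / URL / timestamp metadata
    text: str
    embedding: list[float]   # precomputed by your embedding model (assumed)


def cosine(a, b) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def select_chunks(query_emb, chunks, k=5, min_score=0.3):
    """Keep the top-k chunks by cosine similarity, dropping weak matches."""
    scored = [(cosine(query_emb, c.embedding), c) for c in chunks]
    scored = [(s, c) for s, c in scored if s >= min_score]
    scored.sort(key=lambda sc: sc[0], reverse=True)
    return [c for _, c in scored[:k]]


def format_context(chunks) -> str:
    """Render chunks with IDs and metadata so answers can cite them."""
    return "\n\n".join(
        f"[{c.chunk_id}] (source: {c.source})\n{c.text}" for c in chunks
    )
```

Filtering by a minimum score, not just taking the top-K, keeps low-relevance passages from diluting the context when few good matches exist.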

Memory and coherence considerations: very long contexts can still cause attention-dilution problems. The model may under-use material buried in the middle of the context and weight recent tokens more heavily. Two strategies help:

- Salient-context boosting: repeat key facts near the end of the prompt.
- Hierarchical prompting: supply a summary plus detailed chunks.
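A minimal sketch of a hierarchical prompt with salient-context boosting follows. The section labels, the bracketed chunk-ID convention, and the function name are assumptions for illustration, not a documented prompt format. The prompt is built in this order:

1. A global summary first.
2. The detailed excerpts next.
3. The key facts restated just before the question.

```python
def build_long_context_prompt(question: str, summary: str,
                              chunks: list[tuple[str, str]],
                              key_facts: list[str]) -> str:
    """Hierarchical prompt: overview, then details, then key facts and the
    question repeated near the end (salient-context boosting).

    chunks: (chunk_id, text) pairs; the bracketed-ID convention is assumed.
    """
    parts = ["## Document overview", summary.strip(), "## Detailed excerpts"]
    for chunk_id, text in chunks:
        parts.append(f"[{chunk_id}]\n{text.strip()}")
    # Restate the most important facts close to the question.
    parts.append("## Key facts (restated)")
    parts.extend(f"- {fact}" for fact in key_facts)
    parts.append("## Question")
    parts.append(question.strip())
    parts.append("Answer using the excerpts above and cite chunk IDs in brackets.")
    return "\n\n".join(parts)
```

Placing the question and restated facts last puts them in the most recent part of the context, which is where the text above suggests the model attends most reliably.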

GPT-4.1 Mini: Real‑World Benchmarks & Surprising Performance

- Instruction-following & code: Mini tends to perform close to full GPT-4.1 on most unit-test-style code tasks and instruction-following benchmarks (pass@k, unit-test success rates). If you measure functional correctness with automated unit tests and test harnesses, Mini typically tracks near the full model for many practical code-generation tasks.

- Latency & throughput: Mini reduces inference latency (mean and tail) and per-token compute substantially; this drives cost-efficiency at scale.

- Multi-step reasoning: On benchmarks requiring deep multi-hop reasoning, the full GPT-4.1 often retains an edge; Mini is sometimes slightly less consistent for very long chain-of-thought reasoning.

GPT-4.1 Mini: What You Should Track in Your Benchmarks

Task accuracy

- For code: automated unit tests, pass@1 / pass@k metrics.

- For QA: Exact Match (EM), F1.

- For summarization: ROUGE-L, BERTScore, human evaluation.

Latency under load

- Track mean (µ), p95, and p99 latencies for realistic payloads.

Token cost per session

- (input tokens × input price) + (output tokens × output price), since input and output are usually priced separately.

Robustness

- Adversarial prompts, instruction-suppression tests, prompt-injection resilience.

Long-context retention

- Construct tests where the model must reference or use facts introduced 100k+ tokens earlier in the context, then measure recall and consistency.
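Several of the metrics listed above are short enough to compute in-house. The sketch below has three parts:

- The unbiased pass@k estimator introduced with the HumanEval benchmark (Chen et al., 2021): from n samples per problem, c of which pass the tests, pass@k = 1 − C(n−c, k) / C(n, k).
- SQuAD-style Exact Match and token-level F1.
- A nearest-rank percentile for p95/p99 latency.

The helper names are hypothetical.

```python
import math
import re
import string
from collections import Counter

_PUNCT = set(string.punctuation)


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k from n samples, c of which pass the unit tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - math.comb(n - c, k) / math.comb(n, k)


def _normalize(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(pred: str, gold: str) -> float:
    return float(_normalize(pred) == _normalize(gold))


def token_f1(pred: str, gold: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    p, g = _normalize(pred).split(), _normalize(gold).split()
    common = sum((Counter(p) & Counter(g)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(p), common / len(g)
    return 2 * precision * recall / (precision + recall)


def percentile(values, q: float) -> float:
    """Nearest-rank percentile (q in 0..100), e.g. q=95 for p95 latency."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]
```

For example, with 10 generated samples of which 3 pass, `pass_at_k(10, 3, 1)` gives 0.3, matching the plain pass rate as expected for k = 1.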

Real Test Categories & What to Expect

- Code generation: Mini produces high-quality function bodies and test stubs. Combine it with CI unit tests for production safety. Performance nearly matches the full model for common patterns.

- Edits & diffs: For code edits, diffs, and PR summarization, Mini is fast, consistent, and cost-effective.

- RAG long-context: Mini simplifies system architecture by allowing larger retrieved payloads inline. However, retrieval quality (embedding accuracy + selection heuristics) remains the gating factor.

- Conversational UIs: Users of conversational products often prefer snappy replies, favoring lower latency over marginally more insightful but slower responses.

GPT-4.1 Mini: A Test Recipe That Reveals Hidden Performance

Steps:

- Select representative tasks: code completion, API doc Q&A, long-document summarization (200k-token doc), tool-calling.

- Build deterministic test suites (unit tests for code, golden Q&A pairs for docs).

- Run A/B: route 20% of traffic to Mini and 80% to the baseline for a two-week window.

- Measure: pass@1, pass@k, EM/F1, p95/p99 latency, token cost per session, user satisfaction metrics.

- Tune prompts for Mini (shorten system message, tighten output schema), then re-run.
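For the 20/80 split to be meaningful over two weeks, each user should stay in the same arm for the whole window. A common way to do that is deterministic hash bucketing. This is a sketch, not any particular experimentation platform's API:

- The experiment name and the arm labels are hypothetical.
- SHA-256 is used only as a well-mixed hash.

```python
import hashlib


def assign_arm(user_id: str, experiment: str = "gpt41-mini-rollout",
               treatment_share: float = 0.20) -> str:
    """Deterministically bucket a user into 'mini' or 'baseline'.

    Hashing (experiment, user_id) keeps each user in the same arm for the
    whole test window; changing `experiment` reshuffles the buckets.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return "mini" if bucket < treatment_share else "baseline"
```

Because assignment depends only on the hash, no state needs to be stored, and re-running the analysis later reproduces the same split.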

GPT-4.1 Mini: Benchmarks You’ll Want to Share Publicly

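When publishing benchmarks, include:

- A dataset description (size, domain).
- Prompt templates.
- The inference setup: model snapshot name and API parameters such as temperature, max tokens, and seed. For self-hosted stacks, also give hardware and quantization.
- The date of the run.
- Evaluation metrics.

This transparency is essential for reproducibility.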

Practical Takeaways

- Phased rollout with automated tests is the safest migration path.

- Prompt and retrieval tuning often closes most accuracy gaps between Mini and the larger sibling.

- Escalation policies (confidence thresholds, human review) mitigate risk for critical outputs.

GPT-4.1 Mini: Pricing Secrets & Cost Comparison Revealed

Important caveat: prices change. Always cite the provider pricing page before publishing.

Representative Cost Reasoning

Token cost scales linearly with the tokens processed (input + output), while compute cost depends on model size and per-token execution. For budgeting:

- Measure tokens per session: Average input tokens + average output tokens.

- Multiply by sessions per month to get monthly tokens.

- Apply the pricing rates to compute cost. Input and output tokens are usually priced differently.

Example

- Avg input tokens per session = T_input

- Avg output tokens per session = T_output

- Sessions per month = S

- Price per 1M input tokens = P_in ($)

- Price per 1M output tokens = P_out ($)

Then:

- Monthly input tokens = S × T_input

- Monthly output tokens = S × T_output

- Monthly cost = (Monthly_input_tokens / 1e6) × P_in + (Monthly_output_tokens / 1e6) × P_out

Concrete Illustrative Example

- Avg session tokens: 1,500

- Sessions/day: 10,000 → Monthly (30d): 300,000 sessions

- Monthly tokens: 300,000 × 1,500 = 450,000,000 tokens (450M)

With illustrative Mini prices of P_in = $0.40 / 1M and P_out = $1.60 / 1M, and outputs making up 40% of total tokens:

- Input cost = 450M × 0.60 × $0.40 / 1M = $108

- Output cost = 450M × 0.40 × $1.60 / 1M = $288

- Total ≈ $396/month (note: these numbers are only examples)
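The monthly-cost formula above translates directly into a small helper, which makes it easy to plug in your own measurements. The function name is hypothetical, and the prices below are the same illustrative figures, not quoted rates. The usage lines reproduce the worked example: 1,500 tokens per session split 60/40, giving 900 input and 600 output tokens.

```python
def monthly_cost(sessions_per_month: int,
                 input_tokens_per_session: float,
                 output_tokens_per_session: float,
                 price_in_per_1m: float,
                 price_out_per_1m: float) -> float:
    """Monthly cost = (S*T_input/1e6)*P_in + (S*T_output/1e6)*P_out."""
    monthly_in = sessions_per_month * input_tokens_per_session
    monthly_out = sessions_per_month * output_tokens_per_session
    return monthly_in / 1e6 * price_in_per_1m + monthly_out / 1e6 * price_out_per_1m


# Illustrative example: 10,000 sessions/day for 30 days, 900 in / 600 out tokens.
cost = monthly_cost(10_000 * 30, 900, 600, price_in_per_1m=0.40, price_out_per_1m=1.60)
print(f"${cost:,.2f}/month")  # prints $396.00/month
```

Running the same helper with a larger model's prices, or with the share of traffic you expect to escalate, gives a quick estimate of what hybrid routing saves.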

Compare this with full GPT-4.1, which may cost 3–6× more per token depending on pricing. Further savings come from routing, compression, and caching.

Cost Tactics

- Cache repeated contexts: Use cached-input pricing or cache frequent prompts to reduce redundant token billing.

- Hybrid routing: Route the “easy” queries to Mini and escalate only low-confidence cases.

- Compact representations: Prefer compact JSON or tight data schemas to reduce token usage.

- Token budgeting practice: Reduce verbosity in system messages, and avoid unnecessarily long bios or function signatures in prompts.

- Rate limits & circuit breakers: Throttle bursts to avoid runaway costs.
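The caching and compact-representation tactics fit together in a client-side response cache. The sketch makes these choices:

- It keys entries on a canonical, compact JSON serialization of the request (`separators=(",", ":")` drops the spaces `json.dumps` adds by default, and `sort_keys=True` makes the key order-independent).
- `call_fn` stands in for your own API wrapper and is hypothetical.

This is separate from provider-side cached-input pricing, which the provider applies to repeated prompt prefixes on its side.

```python
import hashlib
import json


class ResponseCache:
    """In-memory cache for identical requests (client-side sketch)."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, messages: list, **params) -> str:
        # Compact, key-sorted JSON gives one canonical string per request.
        payload = json.dumps({"model": model, "messages": messages, **params},
                             separators=(",", ":"), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, messages: list, call_fn, **params):
        """Return a cached result, or call `call_fn` (your wrapper) and store it."""
        k = self.key(model, messages, **params)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        result = call_fn(model=model, messages=messages, **params)
        self._store[k] = result
        return result
```

Caching is only safe where a repeated answer is acceptable, typically low-temperature requests over content that has not changed. In production you would also add expiry and a size bound.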

GPT-4.1 Mini: Best Use Cases & Architectures You Can’t Miss

Where Mini shines

- Developer tools & coding assistants: Fast code completions, refactors, PR descriptions. Combine with unit tests for safety.

- RAG with big corpora: Legal manuals, product documentation, research archives where larger inline context is valuable.

- Agent micro-steps: Planner/fetcher/summarizer microservices where many small model calls occur. Mini reduces cost per micro-step.

- High-throughput chatbots: Default model for conversational UIs that need responsive replies.

GPT-4.1 Mini: Architecture Patterns That Actually Work

Hybrid routing (recommended)

- Front door: GPT-4.1 Mini for baseline responses.

- Escalation: switch to full GPT-4.1 for low-confidence, high-risk queries.

- Fallback: human review for safety-critical outputs.

RAG + Mini single-call

- Vector DB (FAISS, Pinecone) retrieves top-K passages.

- Construct a focused prompt that includes retrieved passages (with chunk IDs).

- Call Mini for synthesis, then optionally run a verify pass.

Agentic workflow (many small calls)

- Use Mini for each micro-step (plan, fetch, summarize, act).

- Keep an escalation policy or human-in-loop for final decisions.
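The hybrid-routing pattern reduces to a small decision function. This sketch makes several assumptions:

- It treats either low confidence or a high-risk flag as grounds to escalate, which is one reading of the escalation rule.
- How you score confidence (a verifier pass, a self-rating, heuristics) is left to you.
- The 0.7 threshold is a placeholder to tune on your evaluation set.
- `call_model`, `score_confidence`, and the risk predicates are hypothetical hooks.
- "gpt-4.1-mini" and "gpt-4.1" are used as the model identifiers.

```python
def route(confidence: float, high_risk: bool, safety_critical: bool,
          threshold: float = 0.7) -> str:
    """Decide who handles a request after Mini's first pass."""
    if safety_critical:
        return "human_review"          # fallback: a human signs off
    if high_risk or confidence < threshold:
        return "gpt-4.1"               # escalation to the full model
    return "gpt-4.1-mini"              # front door answer is kept


def handle(query, call_model, score_confidence, is_high_risk,
           is_safety_critical, threshold=0.7):
    """Mini answers first; escalate or queue for review per `route`.

    All callables are hypothetical hooks into your own stack.
    """
    draft = call_model("gpt-4.1-mini", query)
    target = route(score_confidence(query, draft), is_high_risk(query),
                   is_safety_critical(query), threshold)
    if target == "gpt-4.1":
        return target, call_model("gpt-4.1", query)
    # For "gpt-4.1-mini" the draft is final; for "human_review" it is queued.
    return target, draft
```

Logging the `target` for every request gives you the escalation counts the monitoring section recommends tracking.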

Observability & Logging

- Token and cost dashboards per endpoint.

- Unit test harnesses for code outputs.

- Periodic human QA and model-incident postmortems.

GPT-4.1 Mini: Migration Checklist for Switching from Older Models

Inventory & goals

- Catalog endpoints and user flows that will use the model.

- Set KPIs: latency, cost, accuracy, user satisfaction.

Unit Tests

- Build automated test suites for critical features: code generation tests, QA pairs, summarization tests. These form your ground truth.

Sandbox & Smoke Tests

- Run Mini on a small dataset to measure delta in accuracy and latency. Collect logs and failure cases.

Rollout

- Route 10–20% of traffic to Mini for one to two weeks with representative traffic. Compare metrics (pass@k, EM/F1, p95/p99, token cost).

Implement Fallbacks

- For low-confidence outputs (< threshold), route to full GPT-4.1 or human review. Implement automatic triggers on hallucination detection or unit test failures.

Monitor & Iterate

- Track SLOs, complaints, and token spend. Adjust prompts and routing thresholds, and re-tune retrieval.

Full Rollout & Deprecation

- Expand traffic to Mini only once KPIs are met. Decommission older endpoints carefully and publish migration metrics to stakeholders.

Tooling checklist

- CI pipelines for unit tests.

- Observability stacks (dashboards, alerts).

- Cost calculators and token usage alerts.

GPT-4.1 Mini: Monitoring, Safety & Rate-Limits You Must Know

Monitoring Essentials

- Token & cost dashboards: Segment by feature and endpoint.

- Quality metrics: Automated unit tests for code, periodic human QA for sensitive outputs.

- Latency & reliability: Track µ, p95, p99 latencies; error rates and retries.

- User signals: Feedback buttons, escalation counts, complaint tracking.

Safety & Guardrails

- Automated filters: Detect PII leaks, sexual content, medical/legal advice.

- Human-in-loop: Manual review for high-risk outcomes.

- Confidence thresholds: Route low-confidence outputs to higher-capability models or humans.

- SLOs & rollback: Set safety SLOs (e.g., <1% hallucination rate in extraction tasks) and have a rollback plan ready for when an SLO is breached.

Rate-Limits & Throttling Design

- Respect API provider quotas.

- Implement client-side throttles, exponential backoff, and circuit breakers in agent workflows to prevent cascading failures.

- Monitor token burn rate and set emergency thresholds to prevent runaway charges.
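Client-side backoff and a circuit breaker keep agent loops from hammering a rate-limited API and from cascading failures into every downstream step. The sketch below combines two techniques:

- Exponential backoff with "full jitter" (a random delay up to the current cap).
- A simple consecutive-failure breaker with a half-open trial call.

Assumptions to note:

- The class and function names are hypothetical.
- `retry_on` should be narrowed to your SDK's rate-limit and timeout exception types.
- `clock` and `sleep` are injectable so the logic can be tested without waiting.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are being skipped."""


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; after `reset_after`
    seconds it lets one trial call through (half-open)."""

    def __init__(self, max_failures=5, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.reset_after:
            # Half-open: permit a trial call; one more failure re-opens.
            self.opened_at = None
            self.failures = self.max_failures - 1
            return True
        return False

    def record_success(self):
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()


def call_with_backoff(fn, breaker, retries=4, base_delay=0.5, max_delay=8.0,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call fn() with exponential backoff + full jitter, guarded by a breaker."""
    for attempt in range(retries + 1):
        if not breaker.allow():
            raise CircuitOpenError("circuit open; skipping call")
        try:
            result = fn()
        except retry_on:
            breaker.record_failure()
            if attempt == retries:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # full jitter spreads out retries
        else:
            breaker.record_success()
            return result
```

Jitter matters in agentic workflows because many micro-steps failing at once would otherwise all retry on the same schedule.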

GPT-4.1 Mini: Family Comparison Table You’ll Want to See

| Feature | GPT-4.1 (full) | GPT-4.1 Mini | GPT-4.1 Nano |
|---|---|---|---|
| Context window | 1M tokens | 1M tokens | 1M tokens |
| Best for | Deep reasoning, high-stakes | Low latency; coding; RAG | Micro-tasks; trivial prompts |
| Cost | Highest | Mid (best cost/latency) | Lowest |
| Latency | Higher | Low | Very low |
| Use case | Research / final decisions | Production chat, agents | Bulk preprocessing |

FAQs

Q: Is GPT-4.1 Mini good enough for coding tasks?
A: Yes. Mini is optimized for instruction-following and code tasks. For mission-critical code, combine it with unit tests and escalation to full GPT-4.1.

Q: Can I feed very long documents into the 1M-token window?
A: Technically yes, but don't just paste entire books. Use semantic retrieval and chunking so the model sees the right parts.

Q: How much will I save by switching to Mini?
A: It depends on your workload. Mini's per-token price is several times lower than full GPT-4.1's, so cost reductions of 2× or more at comparable throughput are plausible. Run an A/B test to know for sure.

Q: What is the knowledge cutoff?
A: The GPT-4.1 family has a knowledge cutoff of around June 2024; check the docs for your deployment.

Q: Do I need to fine-tune Mini?
A: Often prompt engineering is enough. Fine-tuning can help for specialized behaviors but has cost and maintenance tradeoffs. Check OpenAI’s fine-tuning docs.

Conclusion

GPT-4.1 Mini is a pragmatic, production-ready model for high-throughput, latency-sensitive NLP applications. It preserves strong instruction-following and code generation while improving cost and response times. The recommended approach:

- Inventory endpoints & set KPIs.

- Build unit tests and benchmark harnesses.

- Run a 10–20% A/B rollout to Mini for one to two weeks.

- Tune prompts & retrieval to close any accuracy gaps.

- Implement escalation policies (confidence-based routing).