Gemini 1 Nano — Complete Guide

This pillar guide explains Gemini 1 Nano in plain but technically accurate NLP terms. You’ll learn what it is from an architectural and inference perspective, how it executes on phones and edge NPUs, how the image modes (Nano Banana / Nano Banana Pro) differ, what to measure and publish as benchmarks, best practices for developers, workflows for product and creative teams, and the model’s limitations and privacy considerations.

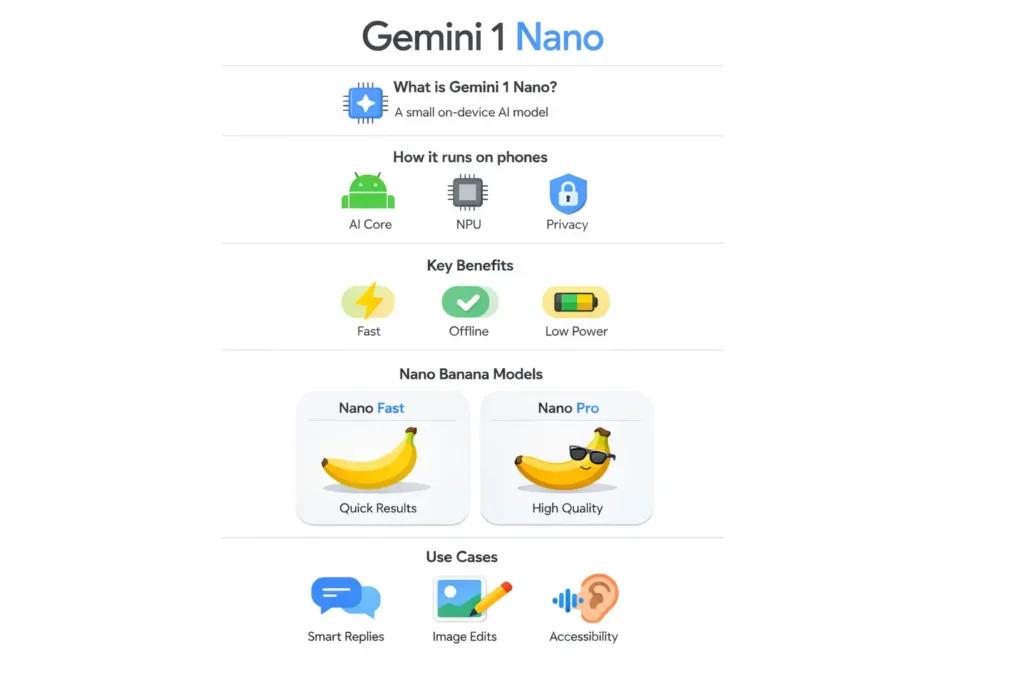

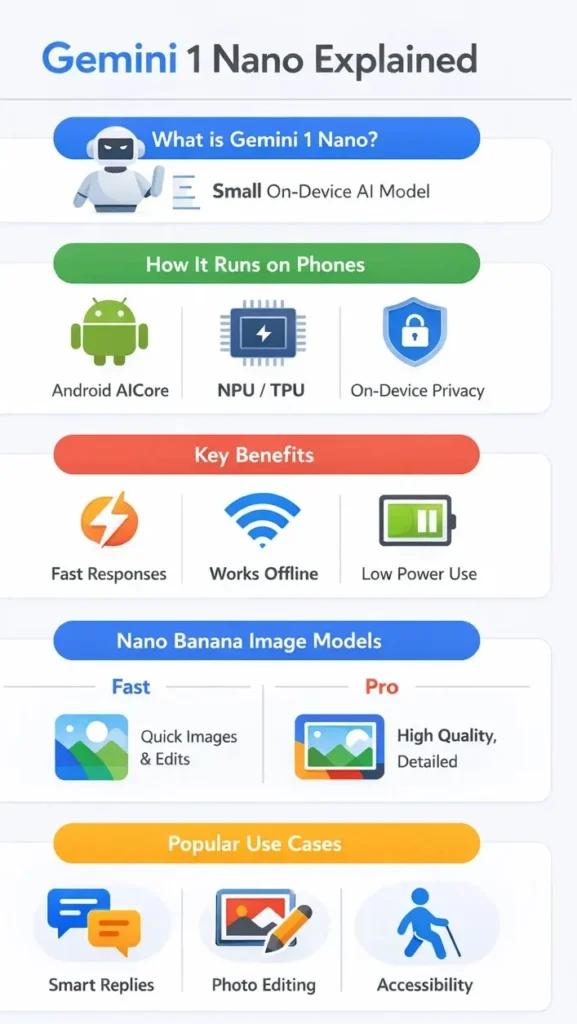

What is Gemini 1 Nano?

Gemini 1 Nano is a compact transformer-based model configuration in Google’s Gemini family, engineered for on-device inference. From an NLP perspective, Nano is a heavily distilled and quantized transformer stack that preserves the core sequence-modeling machinery (tokenization, positional encoding, self-attention) but sacrifices raw parameter count and context window to meet the latency, memory, and power budgets of mobile NPUs/TPUs. It is multimodal: it accepts text and image inputs for short-form tasks (captioning, edits, summarization), but its architecture and weights are optimized for fast sparse/dense matrix evaluation and operator fusion on mobile runtimes (TFLite/NNAPI/Vulkan).

Key design techniques usually used to get models into the Nano class include: pruning, knowledge distillation, mixed-precision quantization (e.g., 8-bit or 4-bit integer formats, PTQ/QAT), activation/weight clustering, operator fusion, and runtime-specific kernels that target Android AICore or device NPUs. The result: sub-second cold starts for simple text tasks, low energy per token, and usable multimodal edits on-device.
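Of the techniques listed above, knowledge distillation is the easiest to show in a few lines. The sketch below computes the standard soft-target distillation loss: the KL divergence between a large teacher’s and a compact student’s temperature-softened output distributions, scaled by T². It is a generic illustration, not Google’s actual Nano training recipe, and the logits and temperature are made-up values.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the large model
    q = softmax(student_logits, temperature)  # compact student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl


# Hypothetical logits over a 4-token vocabulary.
teacher = [4.0, 1.5, 0.2, -1.0]
student_close = [3.8, 1.4, 0.3, -0.9]
student_far = [-1.0, 0.2, 1.5, 4.0]

# A student that mimics the teacher gets a lower loss than one that disagrees.
print(distillation_loss(teacher, student_close) < distillation_loss(teacher, student_far))  # True
```

In practice this term is usually mixed with the ordinary cross-entropy loss on ground-truth labels, weighted by a tunable coefficient.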

Why Gemini 1 Nano Matters

- Latency: On-device inference reduces round-trip time and eliminates network jitter for many short tasks (e.g., smart replies, local summarization).

- Privacy: Keeping model execution local reduces the frequency of sending raw user data to cloud services (but does not automatically remove telemetry).

- Cost & Scalability: Offloading trivial operations to the device reduces server compute burden and cloud inference costs.

- User Experience: Faster, predictable interactions enable new UX patterns (instant message suggestions, real-time camera edits).

- Developer Productivity: Integration with Android’s AICore and ML Kit simplifies access and lifecycle management for apps.

Key Specs & How it’s Optimized For Devices

Note: Exact numerical specs (parameter count, quantization scheme, context length) may change across releases; always cite the model card or official docs when you publish.

Architectural Shape:

- Reduced number of transformer layers vs. Pro/Ultra.

- Narrower hidden sizes and fewer attention heads.

- Shorter context window (e.g., an effective 512–2048 tokens for stable output).

- Mixed-precision weights: often 8-bit or 4-bit integer weights, while activations may stay in float16/float32 where the hardware supports it.

- Distillation losses and targeted fine-tuning to preserve key on-device capabilities within a smaller capacity.
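To see why fewer layers, narrower hidden sizes, and low-bit weights matter so much on a phone, a back-of-envelope estimate helps. The sketch uses the common approximation of about 12·d² parameters per transformer block (4·d² for the Q/K/V/output projections plus 8·d² for a feed-forward layer with a 4× expansion) plus a vocab × d embedding table. Both configurations are hypothetical and are not Nano’s published specs.

```python
def transformer_params(num_layers, d_model, vocab_size, ffn_mult=4):
    """Approximate parameter count for a decoder-only transformer.

    Ignores biases, norms, and positional parameters (small relative to the rest)
    and assumes the output head shares weights with the embedding table.
    """
    attention = 4 * d_model * d_model          # Q, K, V, and output projections
    ffn = 2 * ffn_mult * d_model * d_model     # up- and down-projection
    embeddings = vocab_size * d_model          # token embedding table
    return num_layers * (attention + ffn) + embeddings


def weight_megabytes(num_params, bits_per_weight):
    """Storage for the weights alone at a given precision (1 MB = 1e6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


# Hypothetical configurations for illustration only.
configs = {
    "larger": dict(num_layers=32, d_model=4096, vocab_size=32000),
    "compact": dict(num_layers=18, d_model=2048, vocab_size=32000),
}

for name, cfg in configs.items():
    n = transformer_params(**cfg)
    sizes = {bits: round(weight_megabytes(n, bits)) for bits in (16, 8, 4)}
    print(f"{name}: {n / 1e9:.2f}B params, MB at 16/8/4 bits: {sizes}")
```

Halving the hidden size cuts per-layer parameters by roughly 4×, and each halving of bit width halves weight storage again, which is why both levers are used together.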

Optimization Strategy:

- Quantization: Post-training quantization (PTQ) with calibration, or quantization-aware training (QAT) to maintain accuracy under low-bit arithmetic.

- Operator fusion: Combining adjacent linear + activation ops into single kernels to reduce memory movement.

- Pruning & sparsity: Remove redundant weights or impose structured sparsity to exploit NPU sparse-kernel optimizations.

- Model compression & packaging: Use TFLite/ONNX conversion plus custom kernels for NNAPI/Vulkan to reduce binary size and runtime overhead.

- Runtime tuning: Use batch size 1, handle dynamic shapes efficiently, and defer computation lazily to reduce latency.
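The quantization bullet can be made concrete with the simplest post-training scheme: symmetric, per-tensor integer quantization, where the scale is calibrated from the largest absolute value observed. This is a generic textbook sketch with made-up weights; production toolchains typically add per-channel scales and more careful calibration.

```python
def calibrate_scale(values, num_bits=8):
    """Symmetric scale: map the largest |value| onto the largest signed integer."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for INT8, 7 for INT4
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Round to the nearest step (Python uses round-half-to-even) and clamp to range."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map integers back to approximate real values."""
    return [q * scale for q in q_values]


weights = [0.42, -1.27, 0.03, 0.88, -0.51, 1.10]   # hypothetical FP32 weights

for bits in (8, 4):
    scale = calibrate_scale(weights, bits)
    q = quantize(weights, scale, bits)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"INT{bits}: q={q}, max error={max_err:.4f}")
```

Because the scale comes from the maximum magnitude, nothing is clipped and the rounding error per weight is at most half a step; the INT4 step is much coarser, which is why QAT is often preferred at 4 bits.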

Multimodal Handling:

- A compact vision encoder (e.g., a small ViT or convolutional trunk) that produces embeddings fused with text token embeddings via cross-attention layers or late fusion adapters.

- Small image decoders for edit-style outputs; heavier decoding tasks typically fall back to cloud models.
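The late-fusion option can be pictured as a small learned projection that maps vision-encoder embeddings into the text embedding space, after which the projected "image tokens" are prepended to the text tokens. The sketch uses plain Python lists, tiny hypothetical dimensions, and fixed toy weights; it shows the data flow only and is not Nano’s actual fusion mechanism.

```python
def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def fuse_late(image_embeddings, text_embeddings, projection):
    """Project each image embedding to the text width, then prepend to the text sequence."""
    image_tokens = [matvec(projection, e) for e in image_embeddings]
    return image_tokens + text_embeddings


# Hypothetical sizes: vision width 3, text width 2.
projection = [[0.5, 0.0, 0.5],     # 2 x 3 adapter weights (would be learned)
              [0.0, 1.0, 0.0]]
image_patches = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]   # two patch embeddings
text_tokens = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]   # three token embeddings

sequence = fuse_late(image_patches, text_tokens, projection)
print(len(sequence), len(sequence[0]))  # 5 2
```

The fused sequence then flows through the transformer like ordinary tokens. The cross-attention alternative instead keeps image embeddings separate and lets text layers attend to them, which avoids lengthening the sequence at the cost of extra attention layers.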

How Gemini 1 Nano Runs on Phones

AICore & Android Integration

Android exposes a system-managed surface (AICore / ML Kit) that allows apps to request model inference without bundling and maintaining the model binary directly. AICore handles model dispatching, runtime selection (NNAPI driver, vendor NPU), versioning, and permission policies. This abstraction simplifies lifecycle concerns: model updates, security patches, and test harnesses are handled by system channels.

Hardware Acceleration

- NPUs / TPUs / DSPs: On Pixel devices and many modern Android phones, vendor chips provide dedicated tensor accelerators with high multiply-add throughput and low energy per operation. These chips implement low-precision kernels natively (INT8/INT4), which Nano leverages.

- Fallbacks: When a device lacks a capable NPU, runtimes fall back to CPU or GPU. Performance/latency will vary dramatically.

- Operator availability: Not every operator (e.g., rotary embeddings, certain attention variants) has optimized kernels on every vendor. Model builders must design with common kernel availability in mind.
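The fallback and operator-availability points combine into a simple selection rule: prefer the fastest backend whose kernel set covers every operator in the model graph, otherwise move to the next one. The backend names, operator names, and capability sets below are hypothetical stand-ins; on Android this decision is made by AICore and the vendor drivers, not by app code like this.

```python
from dataclasses import dataclass, field


@dataclass
class Backend:
    name: str
    available: bool
    supported_ops: set = field(default_factory=set)


def select_backend(model_ops, backends):
    """Return the first backend (in preference order) that is present and covers all ops."""
    for backend in backends:
        missing = model_ops - backend.supported_ops
        if backend.available and not missing:
            return backend.name
    return None  # nothing on-device can run the graph; the caller may escalate to the cloud


# Hypothetical graph and device capabilities, in preference order NPU -> GPU -> CPU.
model_ops = {"matmul", "softmax", "layer_norm", "rotary_embedding"}
backends = [
    Backend("npu", True, {"matmul", "softmax", "layer_norm"}),  # lacks a rotary kernel
    Backend("gpu", True, {"matmul", "softmax", "layer_norm", "rotary_embedding"}),
    Backend("cpu", True, {"matmul", "softmax", "layer_norm", "rotary_embedding"}),
]

print(select_backend(model_ops, backends))  # gpu
```

This all-or-nothing rule is a simplification: real runtimes such as TFLite delegates can partition a graph, running supported ops on the accelerator and the rest on the CPU, at the cost of extra data transfers between them.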

Runtime Considerations

- Cold start vs warm start: The first inference after model load includes deserialization and kernel initialization; caching warmed execution contexts reduces repeated overhead.

- Memory mapping & paging: The model must be memory-mapped efficiently to avoid excessive page faults. Use small initial load footprints and lazy-load segments where possible.

- Thermal & battery: Extended decoding or iterative image generation will heat devices and potentially trigger frequency throttling. For energy-sensitive UX, favor bursty short operations and hybrid flows to the cloud for heavy jobs.
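Caching a warmed execution context is essentially memoization of the expensive load step. The sketch below simulates it: `load_context` and the model ID `"nano-text-v1"` are hypothetical stand-ins for deserializing the model and initializing kernels, and the cache ensures that cost is paid once per model rather than on every request.

```python
import time


class ContextCache:
    """Keeps warmed execution contexts so only the first request pays the cold-start cost."""

    def __init__(self, loader):
        self._loader = loader
        self._contexts = {}
        self.cold_starts = 0
        self.warm_starts = 0

    def get(self, model_id):
        if model_id in self._contexts:
            self.warm_starts += 1
            return self._contexts[model_id]
        self.cold_starts += 1
        context = self._loader(model_id)  # expensive: deserialize + kernel init
        self._contexts[model_id] = context
        return context

    def evict(self, model_id):
        """Drop a context, e.g. under memory pressure or after a model update."""
        self._contexts.pop(model_id, None)


def load_context(model_id):
    """Hypothetical loader; the sleep stands in for deserialization and kernel setup."""
    time.sleep(0.05)
    return {"model_id": model_id, "ready": True}


cache = ContextCache(load_context)
for _ in range(3):
    cache.get("nano-text-v1")
print(cache.cold_starts, cache.warm_starts)  # 1 2
```

Warmed contexts hold a meaningful amount of RAM, so an app would typically call `evict` when the OS signals memory pressure, trading a later cold start for fewer page faults and kills.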

Privacy & Telemetry

- On-device execution reduces exposure of raw data but does not guarantee zero telemetry. System services, crash logs, and optional analytics can still leak metadata. For high-compliance apps (e.g., medical), perform threat modeling and legal review. Provide user-facing transparency in settings.

Gemini 1 Nano: Image Generation & Editing Explained

“Nano Banana” is the consumer-facing name for the fast, on-device-friendly image generation mode. Architecturally, think of Nano Banana as a lightweight diffusion or autoregressive image head coupled to the Nano multimodal encoder. In the cloud, the same family supports higher-capacity decoders (Nano Banana Pro / Thinking) that run with larger diffusion schedules, higher-resolution latent spaces, and better text rendering capabilities.

Fast (Nano Banana)

- Purpose: Rapid prototyping, social edits, style transfers, thumbnail generation.

- Architecture: Smaller UNet-like or transformer decoder with fewer parameters and a shorter denoising schedule.

- Tradeoffs: Faster per-image time, lower energy, more artifacts in fine detail (hands, small text), potentially less color fidelity.

Pro (Nano Banana Pro / Thinking)

- Purpose: Production-grade images where text readability and fine detail matter (posters, packaging).

- Architecture: Larger decoder, more denoising steps, higher-resolution latents and attention capacity.

- Tradeoffs: Slower; typically cloud-executed. Significantly better text rendering, anatomy, and complex scene coherence.

Common Artifacts & Mitigation

- Text & labels: Use Pro for images with legible text. Alternatively, post-generate text layers in a vector editor.

- Hands & fine anatomy: Add explicit constraints in prompts (e.g., “realistic hands with five fingers, natural finger positions”) and use Pro for final renders.

- Color shifts/skin tones: Include color anchors in prompts: “preserve natural skin tone; sample from reference image.”

- Postprocessing: Apply denoising, super-resolution, or manual touch-ups in an external editor.
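The prompt-level mitigations above are easier to apply consistently with a small helper that appends the relevant constraints and routes text-heavy or final requests to Pro. The function, its flags, and the mode strings are hypothetical; the constraint phrases are the ones suggested in the list.

```python
HANDS_CONSTRAINT = "realistic hands with five fingers, natural finger positions"
SKIN_TONE_ANCHOR = "preserve natural skin tone; sample from reference image"


def build_image_request(prompt, has_people=False, has_reference=False,
                        needs_legible_text=False, is_final=False):
    """Return (mode, prompt) with artifact mitigations appended.

    The mode strings are illustrative labels for the Fast/Pro split, not API values.
    """
    constraints = []
    if has_people:
        constraints.append(HANDS_CONSTRAINT)
        if has_reference:
            constraints.append(SKIN_TONE_ANCHOR)
    full_prompt = prompt
    if constraints:
        full_prompt = f"{prompt}. Constraints: {' | '.join(constraints)}"
    # Legible text and final renders go to Pro; drafts stay on the fast mode.
    mode = "nano-banana-pro" if (needs_legible_text or is_final) else "nano-banana"
    return mode, full_prompt


mode, text = build_image_request("Portrait of a barista at a counter",
                                 has_people=True, has_reference=True)
print(mode)  # nano-banana
print(text)
```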

Benchmarks & Real-World Performance

Readers want numbers. If you can’t run tests, publishing a transparent methodology is still valuable. Below is a reproducible checklist and suggested table fields.

Gemini 1 Nano: What to Measure

- Cold start latency: Time from API call to first token/reply on a specified device/OS.

- Warm start latency: Time after the model is already loaded.

- Token throughput: Tokens/sec (text) on Pixel 9/10 and a midrange device.

- Image generation time: Seconds per 512×512 or 1024×1024 image for Nano Banana vs Nano Banana Pro.

- Energy draw: % Battery consumed over X images or Y minutes (specify screen on/off, brightness).

- Memory footprint: Peak RAM usage during inference.

- Perplexity/quality proxies: Task-specific metrics (ROUGE for summarization, BLEU for translation; FID/IS for image quality, if available).

- Stability across context: Degradation curves as we increase context length (512→1024→2048).
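For the latency and throughput items, report medians and a tail percentile rather than single runs (the example table uses medians over 50 samples). A minimal aggregation helper using only Python’s standard library might look like this; the sample values are made up.

```python
import statistics


def summarize_latencies(samples_ms):
    """Median and p90 latency from repeated runs (milliseconds)."""
    ordered = sorted(samples_ms)
    # quantiles(n=10) returns 9 cut points; the last one is the 90th percentile.
    p90 = statistics.quantiles(ordered, n=10)[-1]
    return {"n": len(ordered), "median_ms": statistics.median(ordered), "p90_ms": p90}


def tokens_per_second(num_tokens, decode_seconds):
    """Decode throughput; assumes decode_seconds excludes time-to-first-token,
    which is reported separately as latency."""
    return num_tokens / decode_seconds


# Hypothetical cold-start samples from one device/OS build.
cold_start_ms = [310, 335, 298, 322, 340, 318, 305, 330, 312, 326]
print(summarize_latencies(cold_start_ms))
print(tokens_per_second(256, 0.9))
```

Publishing `n` alongside each figure, plus the device, OS build, and thermal state, is what makes the numbers reproducible.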

Example Benchmark Table

| Metric | Pixel Model X (Tensor G4) | Midrange Device Y | Notes |
|---|---|---|---|
| Cold start latency (text) | 320 ms | 780 ms | median, 50 samples |
| Warm latency (first token) | 45 ms | 120 ms | model resident |
| Token throughput (tokens/sec) | 280 | 95 | 32-token prompts |
| 512² image generation (Nano Banana) | 4.2 s | 9.8 s | single-step pipeline |
| 1024² image (Pro, cloud) | 8–15 s | N/A | cloud-only |
| Battery drop (30 min image loop) | 6% | 11% | screen on, manual loop |
| Peak RAM use | 420 MB | 1.1 GB | includes a visual encoder |

Best Practices For Developers

- Graceful fallback: If NPU/driver is unavailable, fall back to GPU or cloud with user-friendly messaging.

- Cache & reuse: Persist warmed model contexts for frequent tasks to reduce latency.

- Privacy-first: Offer clear toggles for on-device only processing, telemetry opt-out, and local data deletion.

- Monitoring: Track cold/warm start ratios and edge device failures to triage model shipping issues.
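The monitoring bullet can start as a simple per-device tally of cold starts, warm starts, and failures. The class, event fields, and device names below are hypothetical; in a real app these counters would only be reported through whatever analytics the user has consented to, in line with the privacy-first toggle.

```python
from collections import defaultdict


class InferenceMonitor:
    """Aggregates start types and failures per device model for triage."""

    def __init__(self):
        self._stats = defaultdict(lambda: {"cold": 0, "warm": 0, "failed": 0})

    def record(self, device_model, start_type=None, failed=False):
        stats = self._stats[device_model]
        if failed:
            stats["failed"] += 1
        elif start_type in ("cold", "warm"):
            stats[start_type] += 1
        else:
            raise ValueError(f"unknown start_type: {start_type!r}")

    def cold_ratio(self, device_model):
        s = self._stats[device_model]
        total = s["cold"] + s["warm"]
        return s["cold"] / total if total else 0.0

    def failure_rate(self, device_model):
        s = self._stats[device_model]
        total = s["cold"] + s["warm"] + s["failed"]
        return s["failed"] / total if total else 0.0


monitor = InferenceMonitor()
for event in ["cold", "warm", "warm", "warm"]:
    monitor.record("device-a", event)
monitor.record("device-b", failed=True)
monitor.record("device-b", "cold")
print(monitor.cold_ratio("device-a"), monitor.failure_rate("device-b"))  # 0.25 0.5
```

A device model with an unusually high cold-start ratio often points to contexts being evicted too aggressively; a high failure rate usually points to a driver or operator-support gap.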

Gemini 1 Nano Use Cases & Workflows

Productivity & Everyday

- Smart replies & composition assistance: Local short-form suggestions with contextual privacy.

- Recorder summarization: On-device summarization of voice notes when permitted.

- Search & inline help: On-device query refinement and suggestions in app UIs.

Creators

- Rapid prototyping: Generate multiple thumbnail ideas locally before sending the best one to the cloud for final polish.

- In-app editing: Fast filters, background replacement, and color grading.

- Thumbnail & social content pipeline: Use Nano Banana for drafts, Nano Banana Pro for final uploads.

Accessibility

- Alt-text generation: Local, instantaneous rich image descriptions for users with low vision.

- Real-time simplification: Readable simplified summaries for complex text in offline contexts.

Hybrid Pattern

- Local-first: Try Nano for speed & privacy.

- Cloud escalation: If the task requires longer context or higher-fidelity outputs, transparently escalate to cloud Pro/Ultra.

- Human-in-loop: For high-stakes outputs, include human verification or citation checks.
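The hybrid pattern reduces to a routing function: run on-device when the request fits Nano’s limits, escalate otherwise, and flag high-stakes outputs for review. In this sketch the token estimate (roughly 4 characters per token), the local context limit, and the `fake_local`/`fake_cloud` callables are all hypothetical placeholders, not real AICore or Gemini API calls.

```python
from dataclasses import dataclass
from typing import Callable

LOCAL_CONTEXT_LIMIT = 2048  # hypothetical token budget; use the figure from the model card


@dataclass
class Result:
    text: str
    where: str          # "on-device" or "cloud"
    needs_review: bool  # True when a human should verify before use


def estimate_tokens(text: str) -> int:
    """Rough heuristic (~4 characters per token for English); not a real tokenizer."""
    return max(1, len(text) // 4)


def route(prompt: str,
          run_local: Callable[[str], str],
          run_cloud: Callable[[str], str],
          high_fidelity: bool = False,
          high_stakes: bool = False,
          local_available: bool = True) -> Result:
    """Local-first routing with cloud escalation and a human-review flag."""
    fits_locally = estimate_tokens(prompt) <= LOCAL_CONTEXT_LIMIT
    if local_available and fits_locally and not high_fidelity:
        return Result(run_local(prompt), "on-device", high_stakes)
    # Escalate for long context, high-fidelity output, or no usable local runtime.
    return Result(run_cloud(prompt), "cloud", high_stakes)


def fake_local(prompt: str) -> str:  # stand-in for an on-device call
    return "local: " + prompt[:20]


def fake_cloud(prompt: str) -> str:  # stand-in for a cloud Pro/Ultra call
    return "cloud: " + prompt[:20]


print(route("Summarize this voice note.", fake_local, fake_cloud).where)  # on-device
print(route("x" * 20000, fake_local, fake_cloud).where)                   # cloud
```

Telling the user when a request has been escalated, which is what makes the escalation transparent, belongs in the calling UI code.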

Gemini 1 Nano Limitations, Privacy & Safety

Limitations

- Capacity constraints: Nano cannot substitute for cloud models on deep reasoning, long-form synthesis, or detailed multi-step code generation.

- Hallucinations: Smaller models still hallucinate facts — verify important outputs.

- Multimodal fidelity limits: Nano Banana may not perfectly render tiny text or complex layouts.

Privacy & compliance

- On-device is not an automatic compliance solution. Investigate telemetry, OS-level logs, and any optional crash reporting.

- Permissions: Be explicit about permission usage for the microphone/gallery.

- Data residency & audits: For regulated industries, maintain auditable logs of where data was processed.
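For the data-residency point, one lightweight approach is to append a structured record for each request stating where it was processed, without storing the user content itself. The function names and record fields below are hypothetical; adapt them to your own compliance requirements.

```python
import json
import time
import uuid


def audit_record(task, location, model_label, clock=time.time):
    """Build one audit entry; deliberately excludes prompts and outputs."""
    if location not in ("on-device", "cloud"):
        raise ValueError("location must be 'on-device' or 'cloud'")
    return {
        "id": str(uuid.uuid4()),
        "timestamp": clock(),
        "task": task,            # e.g. "summarize_recording"
        "location": location,    # where the data was processed
        "model": model_label,    # hypothetical label, e.g. "nano" or "cloud-pro"
    }


def append_jsonl(path, record):
    """Append-only JSON Lines file: one record per line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


entry = audit_record("summarize_recording", "on-device", "nano",
                     clock=lambda: 1700000000.0)
print(json.dumps(entry, indent=2))
```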

Safety

- Bias & fairness: Distillation and pruning can amplify biases in surprising ways; test across languages and demographics.

- Malicious use: Consider abuse vectors (deepfakes, fabricated certificates) and include usage limits and abuse detection.
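Usage limits are commonly implemented as a token bucket: each user gets a budget that refills at a fixed rate, and generation requests beyond it are refused or queued. The capacity and refill rate below are arbitrary example values, and the clock is injectable so the behavior can be tested deterministically.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` requests per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        # Refill proportionally to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Fake clock for a deterministic demo: bursts of 3 generations, 1 refill every 10 s.
t = [0.0]
bucket = TokenBucket(capacity=3, rate=0.1, clock=lambda: t[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
t[0] = 10.0
print(bucket.allow())                      # True
```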

Comparison table: Nano vs Flash vs Pro

| Feature / Metric | Gemini 1 Nano (on-device) | Gemini Flash (fast cloud/edge) | Gemini Pro/Ultra (cloud) |
|---|---|---|---|
| Typical use | Low-latency on-device tasks, quick multimodal edits | Fast cloud responses for medium complexity | Long-form, deep reasoning, highest-quality images |
| Latency | Very low (local) | Low to moderate | Higher (but stable) |
| Context length | Short/medium | Medium | Longest |
| Image fidelity | Good (fast styles) | Good–Very good | Best |
| Battery impact | Runs on device; can increase battery drain | Minimal device impact | Minimal device impact |
| Privacy | Stronger (local processing) | Moderate | Lower (data sent to cloud) |
| Deploy complexity | Low for apps using AICore | Moderate | Moderate-high (auth, infra) |

Pros & Cons of Gemini 1 Nano

Pros

- Near-instant, low-latency responses for many tasks.

- Stronger local privacy footprint for user content.

- Enables offline or intermittent-network features.

- Lowers cloud cost for trivial tasks.

- Simple integration via AICore / ML Kit for Android.

Cons

- Less capable than cloud Pro/Ultra for deep reasoning or highest-fidelity images.

- Device performance varies widely by model and driver.

- Extended heavy use increases battery draw and thermal throttling risk.

- Telemetry may still occur; on-device ≠ zero telemetry.

Gemini 1 Nano FAQs

Q: Can Gemini 1 Nano work offline?

A: In many cases, yes. Gemini Nano can run locally on supported devices and handle tasks offline, such as summarizing recordings or editing photos. However, exact offline features depend on your phone model, OS version, and region. Check your device settings and the app’s permissions.

Q: Which devices support Gemini 1 Nano?

A: Google rolls out Nano support gradually, and newer Pixel phones with Tensor chips get priority. Support varies by device, OS version, and region; always check Pixel product pages and the Android developer docs for the current list.

Q: What is the difference between Nano Banana and Nano Banana Pro?

A: Nano Banana is the fast image generation mode for quick images and edits. Nano Banana Pro (also known as the Thinking/Pro image model) provides better detail, clearer text, and higher-fidelity outputs. Use Fast for prototypes and Pro for final images.

Q: Should I use Gemini 1 Nano in my app?

A: Yes, for low-latency, privacy-sensitive features (like local summarization and instant replies). For high-stakes or high-quality generation, use a hybrid approach: local Nano for quick responses and cloud Pro/Ultra for final outputs and reviews.

Conclusion

Gemini 1 Nano is a distilled, quantized transformer variant tuned for on-device multimodal inference. From an NLP engineering standpoint, its value proposition is speed, battery-efficient inference, and improved privacy for short-form tasks. For developers, integrate Nano via Android AICore or ML Kit and design graceful cloud fallbacks for heavier jobs. For creators, use Nano Banana for rapid ideation and Nano Banana Pro for production-grade results. Always publish a transparent benchmark methodology, including device/OS details, and present clear, user-facing privacy controls.