Perplexity PPLX Models — Complete 2025 Guide

If you build tools, products, or research workflows that need fresh, sourceable answers, this guide is for you. It explains what the Perplexity PPLX Models are, how the pplx-api works, real-world use cases, integration tips, practical benchmarks to run, known limits, and a suggested pilot plan. Where Perplexity makes claims, I direct you to independent commentary and documentation so you can verify them before production.

What are Perplexity PPLX Models?

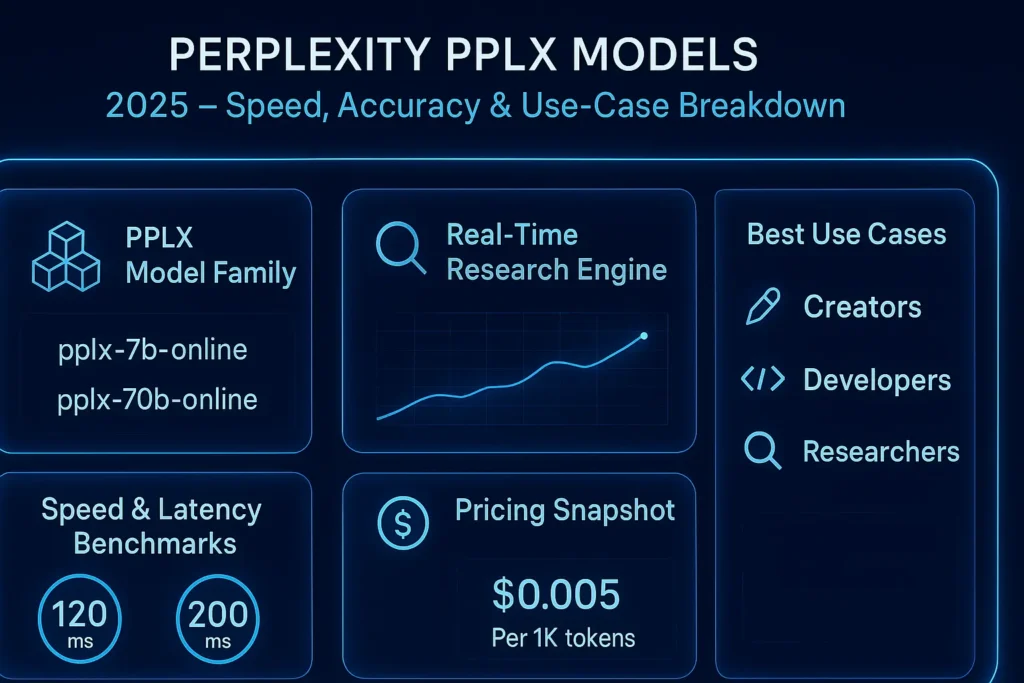

Perplexity PPLX Models are a family of online large language models offered by Perplexity AI that blend a foundation LLM (7B or 70B scale) with externally retrieved web content at inference time. The two headline instances you’ll see are pplx-7b-online and pplx-70b-online. Conceptually, PPLX is an implementation of retrieval-augmented generation (RAG) where retrieved fragments — small web snippets or cached pages — are injected into the model input so the generation is grounded in recent, sourceable material.

From an NLP framing: think of PPLX as a dual system — a retrieval layer that produces short, high-signal evidence fragments, and a sequence model that conditions on those fragments plus its parametric knowledge to produce fluent, citation-aware outputs.

Why “online” matters: an online model can ingest recent pages, news, and updates at inference time, so it can answer questions about events after its training cutoff and link to sources for traceability. Practically, this reduces “staleness” and turns the LLM into a bridge between parametric knowledge and real-world documents.

Quick verdict — who should care about Perplexity PPLX Models

Who should care

- Researchers and analysts who need fresh and cited answers.

- Developers building chatbots, search tools, or summarizers where traceability and citations matter.

- Enterprises that want a hosted API with low-latency, web-grounded responses.

Who might NOT prefer PPLX

- Teams that require strictly offline-only inference (no web data).

- Use cases where legal/copyright risk from web-sourced content is unacceptable without heavy review.

- Ultra-sensitive pipelines requiring deterministic outputs with zero external grounding.

PPLX model lineup & specs

| Model | Base | Best for | Notes |
| --- | --- | --- | --- |
| pplx-7b-online | 7B-class foundation (efficient) | Fast, cost-efficient, real-time Q&A | Good latency for short queries and high volume. |
| pplx-70b-online | 70B-class foundation (higher capacity) | Complex reasoning, deep research | More context window and capacity; better for multi-step tasks. |
| pplx-7b-chat / pplx-70b-chat | Chat-tuned variants | Conversational apps | Dialogue-optimized for back-and-forth interaction. |

Note: Perplexity publishes product posts and docs describing the family and pplx-api — always link to their canonical docs for precise parameter lists and up-to-date availability.
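To make the integration shape concrete, here is a minimal sketch of how a single-turn request to pplx-api might be assembled. It assumes an OpenAI-style chat-completions endpoint with bearer-token auth; the URL, the payload fields, and the `build_request` helper are assumptions for illustration, so confirm them against Perplexity's current API reference before relying on them. The function only builds the request and does not send it.

```python
import json
import urllib.request

# Assumption: an OpenAI-style chat-completions endpoint. Verify in Perplexity's docs.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_request(api_key: str, model: str, question: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn question."""
    payload = {
        "model": model,  # e.g. "pplx-7b-online" or "pplx-70b-online"
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("YOUR_API_KEY", "pplx-7b-online", "What changed in the news today?")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to unit-test the payload and to swap model names when availability changes.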

How Perplexity PPLX Models work

At an abstract level, PPLX implements Retrieval-Augmented Generation (RAG) with engineering optimizations for speed and traceability. The typical pipeline:

- User query → retrieval: Perplexity’s backend identifies relevant web pages (or cached fragments). Retrieval is tuned to return concise, high-recall snippets.

- Fragment injection: Retrieved fragments are packaged into the model input as extra context. The fragments are typically short (to control token budget) and prioritized.

- Model generation: The underlying LM (7B or 70B) conditions on both its internal weights and the injected fragments to produce an answer that cites supporting material.

- Citation surfacing: Where possible, Perplexity returns links or snippet attributions so answers are traceable back to the source content.
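The fragment-injection and citation-surfacing steps can be illustrated with a small sketch. This is not Perplexity's internal implementation, which is not public; the `Fragment` type, the prompt wording, and the `[n]` marker convention are all assumptions chosen to show the general RAG pattern.

```python
import re
from dataclasses import dataclass


@dataclass
class Fragment:
    """A retrieved snippet and the page it came from (hypothetical structure)."""
    url: str
    text: str


def build_prompt(query: str, fragments: list[Fragment]) -> str:
    """Label each fragment with a numeric marker and append the question."""
    lines = ["Answer using only the sources below. Cite sources as [n]."]
    for i, frag in enumerate(fragments, start=1):
        lines.append(f"[{i}] ({frag.url}) {frag.text}")
    lines.append(f"Question: {query}")
    return "\n".join(lines)


def cited_urls(answer: str, fragments: list[Fragment]) -> list[str]:
    """Map [n] markers in an answer back to fragment URLs, ignoring invalid markers."""
    urls = []
    for match in re.findall(r"\[(\d+)\]", answer):
        index = int(match) - 1
        if 0 <= index < len(fragments) and fragments[index].url not in urls:
            urls.append(fragments[index].url)
    return urls
```

Because markers are resolved against the fragments that were actually injected, an answer that cites `[5]` when only two fragments were supplied yields no URL rather than a fabricated one.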

From an NLP lens, key concepts at play:

- Context window management: fragments + prompt must fit the model’s token window; careful truncation & scoring is crucial.

- Prompt engineering: templates that label fragments and instruct the LLM to “cite” and “summarize” reduce hallucinations.

- Evidence weighting: retrieval score + snippet quality should inform how the model prioritizes sources in generation.

- Post-processing: citation normalization and link validation reduce fabricated URLs.
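Context window management and evidence weighting can be sketched together as a greedy selection: keep the highest-scoring fragments that still fit a token budget. The whitespace-based token estimate and the `select_fragments` helper are assumptions for illustration; a real pipeline would use the model's own tokenizer and likely a more careful ranking.

```python
def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words. Swap in a real tokenizer if available."""
    return len(text.split())


def select_fragments(scored: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily keep the highest-scoring fragments that fit within the token budget."""
    chosen = []
    used = 0
    for _score, text in sorted(scored, key=lambda pair: pair[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen
```

Greedy selection is simple and predictable, but it can skip a long, highly relevant fragment in favor of several short ones; truncating long fragments before scoring is one way to soften that.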

Important caveat: community reports suggest the “online” behavior sometimes uses cached/indexed data rather than a live browser on each call. That’s often acceptable (freshness is high), but if you need minute-by-minute accuracy, include tests that reflect your freshness requirement.

Latency, throughput & pricing — what to expect

Latency & performance claims

Perplexity markets PPLX for low-latency inference suitable for production applications. Real-world latency will depend on model choice (7B vs 70B), fragment quantity, token lengths, and network conditions. Always benchmark in your target environment — vendor claims are useful but not a substitute for empirical testing.

Pricing & free credit

Perplexity uses usage-based API pricing. At certain times, they have offered credits for Pro subscribers; these promotions change often. Always check Perplexity’s pricing docs when budgeting. In production, include a buffer for spikes, and track your cost-per-use (tokens per response + requests).
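For budgeting, the arithmetic is worth writing down. The sketch below assumes a pricing model with per-token rates plus an optional flat per-request fee; every price in the usage example is a placeholder, not a Perplexity rate, so substitute the figures from the current pricing page.

```python
def cost_per_request(
    prompt_tokens: int,
    completion_tokens: int,
    price_in_per_million: float,
    price_out_per_million: float,
    per_request_fee: float = 0.0,
) -> float:
    """Estimated dollars for one call: token charges plus an optional flat fee."""
    token_cost = (
        prompt_tokens / 1_000_000 * price_in_per_million
        + completion_tokens / 1_000_000 * price_out_per_million
    )
    return token_cost + per_request_fee


def monthly_budget(requests_per_month: int, cost_per_req: float, spike_buffer: float = 0.2) -> float:
    """Projected monthly spend with a fractional buffer for traffic spikes."""
    return requests_per_month * cost_per_req * (1 + spike_buffer)


if __name__ == "__main__":
    # Placeholder prices for illustration only.
    per_call = cost_per_request(1000, 500, 1.0, 2.0, per_request_fee=0.005)
    print(f"per call: ${per_call:.4f}")
    print(f"monthly:  ${monthly_budget(100_000, per_call):.2f}")
```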

How to benchmark PPLX

A robust benchmark measures several axes: freshness, factuality, latency, hallucination rate, and cost per useful result. Below is a repeatable plan.

Benchmark goals

- Freshness: Does the model answer recent events?

- Factuality: Are the returned facts correct against the ground truth?

- Latency: Median & p95 latencies for typical calls.

- Hallucination rate: Frequency of fabricated claims or links.

- Cost per useful result: Dollars per verified answer.

Suggested dataset & tasks

- Freshness: Ask about items from the last 24–72 hours (news headlines, earnings, regulatory updates).

- Factuality: Ask about facts (product specs, historical dates).

- Reasoning: Multi-step prompts (e.g., “Plan a 3-step migration for X with risks and rollback”).

- Source quality: Request sources and verify that top-cited links actually contain the claimed evidence.

Measurement approach

- Use the same prompt across models (pplx-7b-online, pplx-70b-online, and your offline LLM baselines).

- Run 50+ queries per task to collect stable stats.

- Capture median latency, p95 latency, token counts, and error rates.

- Use human raters to mark correctness (binary) and annotate hallucinations.

- Add automated checks: fetch cited URLs and confirm snippet presence.
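The latency part of this approach can be captured with a small harness. The sketch below times any callable you pass in (for example, a thin wrapper around your API client, which is an assumption here) and reports the median and a nearest-rank p95.

```python
import statistics
import time
from typing import Callable


def measure_latencies(call: Callable[[str], object], prompts: list[str]) -> list[float]:
    """Time one call per prompt and return the latencies in seconds."""
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        call(prompt)
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: list[float]) -> dict[str, float]:
    """Return the median and the nearest-rank 95th percentile."""
    if not latencies:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return {"median": statistics.median(ordered), "p95": ordered[rank - 1]}
```

With only 50 samples the p95 is determined by the few slowest calls, so treat it as a rough signal and collect more runs before drawing conclusions about tail latency.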

Example metrics to compute

- Accuracy (human): percentage of answers rated correct.

- Citation precision: % of cited URLs that corroborate the claim.

- Median latency & p95 latency.

- Cost per correct answer.
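These metrics are simple ratios once the ratings are collected. Here is a minimal sketch, assuming each rated answer is recorded with a binary correctness label, citation counts, and a per-call cost; the `RatedAnswer` record is a hypothetical structure, not part of any Perplexity tooling.

```python
from dataclasses import dataclass


@dataclass
class RatedAnswer:
    correct: bool            # human rater's binary judgement
    cited_urls: int          # number of URLs the answer cited
    corroborating_urls: int  # cited URLs that actually support the claim
    cost_usd: float          # cost of producing this answer


def score(results: list[RatedAnswer]) -> dict[str, float]:
    """Compute accuracy, citation precision, and cost per correct answer."""
    if not results:
        raise ValueError("need at least one rated answer")
    n_correct = sum(r.correct for r in results)
    total_cited = sum(r.cited_urls for r in results)
    total_corroborating = sum(r.corroborating_urls for r in results)
    total_cost = sum(r.cost_usd for r in results)
    return {
        "accuracy": n_correct / len(results),
        "citation_precision": total_corroborating / total_cited if total_cited else 0.0,
        "cost_per_correct": total_cost / n_correct if n_correct else float("inf"),
    }
```

Note that cost per correct answer divides the total spend, including the cost of wrong answers, by the number of correct ones, which is what makes it comparable across models with different accuracy.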

Why this matters: Vendor metrics are useful, but independent testing with your prompts and traffic profile reveals real behavior for your use case.

Strengths, limitations & community signals

Strengths

- Freshness: Useful for recent events because of fragment injection.

- Citation-friendly: When configured, PPLX returns sources, making answers traceable.

- Speed: Engineered for low-latency inference relative to typical hosted 70B-class endpoints.

Limitations / Risks

- Stability: Some community reports mention occasional 5xx errors; build retries and fallbacks.

- Hallucinated sources: The model can sometimes provide fabricated or misleading URLs — always validate citations for critical outputs.

- Cache vs true live browsing: “Online” may rely on nearline caches or indexed snapshots; test for the freshness granularity you require.
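For the stability risk, a retry-with-fallback wrapper is a common pattern. The sketch below assumes your HTTP client wrapper raises a `ServerError` on 5xx responses; that exception class and the `call_with_retries` helper are hypothetical names for illustration, and the fallback could be a cached answer or an offline model.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ServerError(Exception):
    """Hypothetical exception raised by your client wrapper for 5xx responses."""


def call_with_retries(
    call: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry on ServerError with exponential backoff, then use the fallback."""
    for attempt in range(max_attempts):
        try:
            return call()
        except ServerError:
            # Back off before the next attempt; skip the wait after the final failure.
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return fallback()
```

Injecting `sleep` keeps the function testable without real delays; in production you may also want to add jitter so that many clients do not retry in lockstep.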

Community and press coverage

Perplexity’s PPLX launch and pplx-api have attracted independent coverage and early adopter commentary. Cross-check vendor docs against independent posts when you evaluate claims.

Safety, legal & copyright considerations

Web-sourced content introduces risks you must mitigate.

Copyright & reuse

- Web fragments may be copyrighted. If you republish or monetize derivative content, verify licensing or transform content sufficiently, and track attribution requirements.

Misinformation & fabricated links

- The model may hallucinate claims or sources. Programmatic verification (fetch the top-cited URL and check the text) reduces risk.

Data privacy

- Avoid sending sensitive PII to third-party APIs without reviewing Perplexity’s data handling policy and contractual safeguards.

Mitigations

- Add citation validation layers (confirm links return expected content).

- Maintain human-in-the-loop for regulated outputs.

- Keep logs and evidence snapshots for audits.
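A citation validation layer can start very small. The sketch below fetches a cited URL with the standard library and checks whether a claimed snippet appears in the page after whitespace and case normalization. The helper names are assumptions, and the substring check is deliberately crude: it runs against raw HTML, so markup inside a sentence can cause false negatives.

```python
import urllib.request
from typing import Callable


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it as text (best effort)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def citation_supported(
    url: str,
    snippet: str,
    fetch: Callable[[str], str] = fetch_text,
) -> bool:
    """Return True if the snippet appears in the fetched page; False on fetch failure."""
    try:
        page = fetch(url)
    except (OSError, ValueError):
        # Unreachable host, HTTP error, or malformed URL: treat as unsupported.
        return False
    return normalize(snippet) in normalize(page)
```

A failed check is a signal to route the answer to human review rather than proof that the citation is fabricated, since pages change and paraphrased claims will not match verbatim.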

FAQs Perplexity PPLX Models

1: “Online” means the model is augmented with web data. Perplexity retrieves web fragments relevant to your query and feeds them into the model, so answers are grounded in recent external content. This helps with freshness and sourceable responses.

2: pplx-7b-online is lighter and faster — good for simple, high-volume queries. pplx-70b-online is larger and better at complex reasoning and longer context. Choose based on latency vs capability tradeoffs.

3: Perplexity has usage-based pricing. Perplexity Pro users receive a recurring $5 monthly credit for certain API features — check Perplexity’s billing docs for the latest terms. Free users can still access API features but may not get the Pro credit.

4: No — sometimes the model can return low-quality or even fabricated links. For critical use, validate citations programmatically and via human review. Community threads discuss source hallucinations, so plan accordingly.

5: With caution. Use source validation, human review, and legal checks on content reuse. For highly regulated outputs, consider an additional approval step.

Conclusion Perplexity PPLX Models

Perplexity’s PPLX models are a strong option for real-time research and fast, web-grounded answers, especially for creators, developers, and teams who need speed along with traceable sources. With its expanding model family, low-latency API, and retrieval-backed generation, PPLX is positioning itself as a serious alternative to traditional LLMs. By understanding how each model performs, where it excels, and how to integrate it into your workflows, and by benchmarking and validating citations before production, you can judge where it fits in your 2025 stack.