Perplexity PPLX API vs Leonardo XL — Intelligence Meets Creativity

Perplexity PPLX Models / API vs Leonardo Diffusion XL: which AI truly powers your content factory? PPLX delivers real-time, sourced answers, while Leonardo creates professional visuals. This guide covers the hybrid workflow that combines them to save hours and scale production, so developers, marketers, and solopreneurs can make smarter AI decisions quickly.

By 2026, successful AI systems will be modular stacks rather than monolithic oracles. Teams pick the right specialized engine for each axis of product value: accuracy and provenance (fresh, verifiable answers) or visual quality and style reproducibility (high-fidelity images). This comparison clarifies where Perplexity’s PPLX family (retrieval-augmented, online language models and the PPLX API) outperforms, and where Leonardo Diffusion XL dominates, so you can make an engineering-grade decision for real products.

This pillar guide is written in NLP/ML terms for engineers, ML product leads, and technical content strategists. It covers architecture, sampling and inference behavior, evaluation metrics, integration patterns, cost logic, and safety/governance considerations, and closes with concrete, production-ready patterns for combining both modalities.

How Perplexity PPLX API Delivers Fresh, Grounded Answers

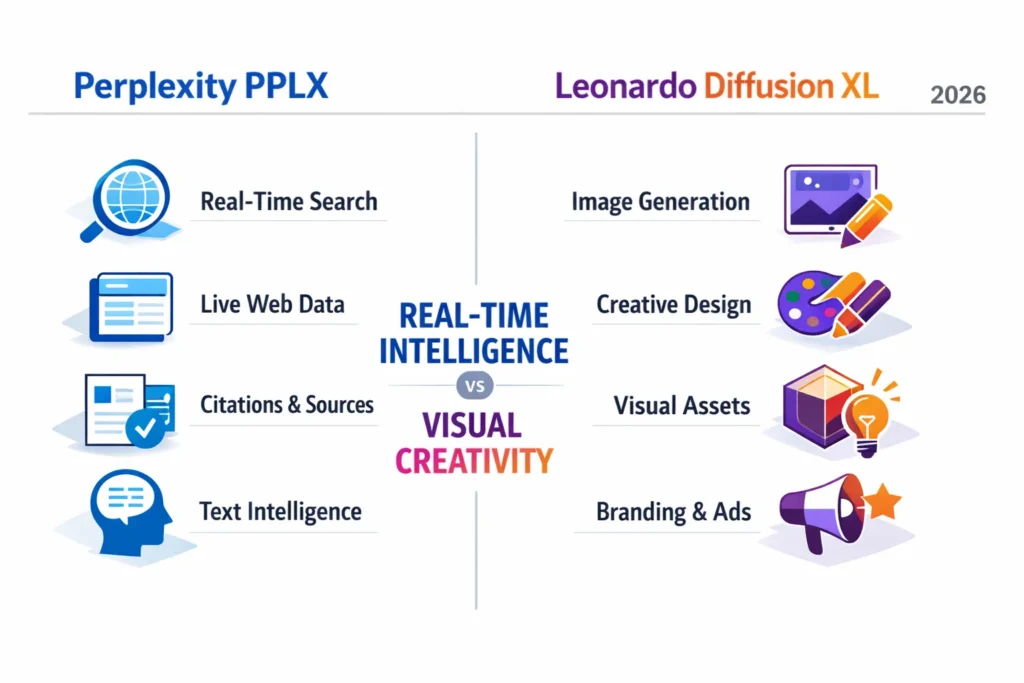

- Perplexity PPLX Models / API: Retrieval-augmented language models that search, fetch, and synthesize live web data for grounded answers. Best for search engines, research platforms, knowledge systems, and any application that needs freshness and provenance.

- Leonardo Diffusion XL: High-quality text→image diffusion model optimized for prompt alignment, visual fidelity, and stable style reproduction. Best for design teams, marketing assets, game art, and production creative pipelines.

- Key dichotomy: PPLX = information & grounded reasoning. Leonardo = visual generation & style control.

- Best practice: Use both in a multi-modal stack — PPLX to generate captions, descriptions, and contextual metadata; Leonardo to synthesize the final images.

What are Perplexity PPLX Models & the PPLX API?

Conceptual role. PPLX models are retrieval-augmented generative systems: that is, the LLM is tightly coupled with a real-time retrieval subsystem, which sources, ranks, and then conditions generation on fresh web evidence. Architecturally, this is a form of Retrieval-Augmented Generation (RAG) or Augmented LLM pipeline optimized for online, low-latency production.

Core components (logical):

- Retriever/web search module — issues queries to live search endpoints and curated sources.

- Ranker/filter — applies heuristics and learned scoring to pick high-precision passages.

- Context assembly — constructs a retrieval context (few–to–many passages) and injects it into the model prompt.

- Generator (PPLX model) — consumes context + query to produce grounded output with provenance signals (citations, snippets).

- Postprocessor/citation annotator — formats output, attaches source links, and optionally executes answer verification checks.

Why this matters: By design, PPLX reduces hallucination risk because every fact can be traced to a recent web artifact. Instead of being purely parametric memory, the model behaves like a sophisticated answer engine.

Representative PPLX variants (2026 nomenclature)

- pplx-7b-online — lightweight, efficient, good for low-cost lookups and high QPS.

- pplx-70b-online — deeper reasoning, higher context capacity, better for complex synthesis.

- PPLX API — production endpoints exposing search-grounded text generation primitives, streaming tokens, and provenance attachments.
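As a rough illustration of what calling a search-grounded endpoint can look like, the sketch below builds (but does not send) a chat-style request. The endpoint URL, the payload fields, and the `build_request` helper are assumptions based on the common OpenAI-style chat completions layout; check the current PPLX API reference before relying on any of them.

```python
import json
import urllib.request

# Assumed endpoint; verify against the current API reference.
API_URL = "https://api.perplexity.ai/chat/completions"

def build_request(question: str, api_key: str, model: str = "pplx-7b-online") -> urllib.request.Request:
    """Build (without sending) a chat-style request for a grounded answer."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Answer concisely and cite sources."},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_request("What changed in the latest Python release?", api_key="YOUR_KEY")
print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes it easy to unit-test prompts and to swap the model name per route (for example, the lighter variant for high-QPS lookups).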

How Perplexity PPLX works — An Engineer’s step-by-step

- Query submission: User asks a natural language question.

- Query expansion/retrieval call: The system translates intent into retrieval queries.

- Candidate retrieval: The search subsystem returns ranked documents/snippets from live sources.

- Passage selection & context truncation: Select highest-value fragments under token limits (freshness thresholds applied).

- Conditioned generation: PPLX model generates an answer conditioned on those passages, plus a generation template instructing the model to cite or summarize.

- Post-generation verification: Optional factuality checks, source scoring, or contradiction detectors are applied.

- Response with provenance: Final answer delivered with citations and metadata.

Key properties: Deterministic retrieval reduces unsupported inference; freshness depends on retrieval index / live search latency; quality tradeoffs come down to retriever precision and model calibration on citation behavior.
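The passage selection and conditioned generation steps can be sketched as follows. This is a minimal illustration, not Perplexity's implementation: the `Passage` fields, the whitespace token estimate, the freshness cutoff, and the prompt template are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    url: str
    text: str
    score: float      # relevance score from the ranker (higher is better)
    age_days: int     # how old the source is

def select_passages(passages, max_tokens=60, max_age_days=30):
    """Keep fresh passages, best score first, until the token budget is spent."""
    fresh = [p for p in passages if p.age_days <= max_age_days]
    chosen, used = [], 0
    for p in sorted(fresh, key=lambda p: p.score, reverse=True):
        cost = len(p.text.split())  # crude whitespace token estimate
        if used + cost <= max_tokens:
            chosen.append(p)
            used += cost
    return chosen

def build_prompt(question, passages):
    """Number each passage so the generator can cite it as [1], [2], ..."""
    sources = "\n".join(f"[{i}] {p.text} ({p.url})" for i, p in enumerate(passages, 1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{sources}\n\nQuestion: {question}"
    )

candidates = [
    Passage("https://example.com/a", "Release 2.0 shipped on Monday with a new scheduler.", 0.92, 2),
    Passage("https://example.com/b", "An older post describing release 1.0 features.", 0.95, 400),
    Passage("https://example.com/c", "The scheduler reduces queue latency in early tests.", 0.71, 5),
]
selected = select_passages(candidates)
print(build_prompt("What is new in release 2.0?", selected))
```

Note that the highest-scoring candidate is dropped because it fails the freshness threshold, which is the trade-off the "freshness thresholds applied" step describes.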

Why traditional offline LLMs fall short for freshness

Traditional LLMs rely on static pretraining corpora; they lack built-in mechanisms to access the web. Consequences:

- Staleness — knowledge cutoff leads to incorrect time-sensitive answers.

- Hallucination — in the absence of evidence, models invent plausible but incorrect facts.

- No provenance — no reliable link to source artifacts for verification or audits.

PPLX solves these via retrieval + on-the-fly conditioning.

What is Leonardo Diffusion XL?

Model class. Leonardo Diffusion XL is a text-conditioned diffusion model optimized for production image generation. It is a denoising probabilistic architecture trained to invert a forward noising process and conditioned via text embeddings (often CLIP or a transformer encoder).

Core Technical Properties:

- Diffusion backbone — UNet-like denoiser with attention, multi-scale features, and up/downsampling.

- Text conditioning — encoder to map prompt tokens into conditioning vectors, often passed via cross-attention into UNet.

- Classifier-free guidance — enables tradeoff between fidelity & adherence to prompt by interpolating conditional and unconditional scores.

- High resolution & consistency — training strategies and dataset curation aimed at fewer artefacts (hands, faces, proportion).

- Prompt alignment — specialized prompt encoder + prompt engineering guidelines reduce variance and improve reproducibility across runs.
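The classifier-free guidance bullet above comes down to one line of arithmetic. The sketch below uses plain Python lists as stand-ins for the denoiser's noise predictions; the function name and the toy values are illustrative, and real pipelines apply the same formula to tensors at every sampling step.

```python
def guided_noise(eps_uncond, eps_cond, scale):
    """Classifier-free guidance: start at the unconditional prediction and
    move toward (and, for scale > 1, past) the conditional one."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

eps_uncond = [0.10, -0.20, 0.05]   # toy noise prediction without the prompt
eps_cond = [0.30, -0.10, 0.00]     # toy noise prediction with the prompt

print(guided_noise(eps_uncond, eps_cond, 0.0))  # ignores the prompt
print(guided_noise(eps_uncond, eps_cond, 1.0))  # plain conditional prediction
print(guided_noise(eps_uncond, eps_cond, 7.5))  # extrapolates for stronger prompt adherence
```

Higher scales usually tighten prompt adherence at the cost of diversity and, past some point, image quality, which is why the guidance scale is worth exposing as a tunable parameter.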

Why it’s different: Leonardo is purely generative for images; there is no retrieval or factual grounding mechanism. It optimizes visual realism, repeatability, and short prompt robustness.

Diffusion Primer

- Forward process: Iteratively add Gaussian noise to images.

- Reverse process: Learn a denoiser that, given a noisy image and timestep, predicts a less noisy image; repeated denoising yields a sample from the learned data distribution.

- Conditional sampling: Condition denoiser on text embeddings; use guidance scale to steer the sample toward stronger textual alignment.

- Sampling speed: Classic DDIM/ancestral sampling vs optimized samplers (e.g., DPM++/Euler a) — trade speed vs fidelity.
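To make the forward process concrete, here is a small sketch of the closed-form noising step used in DDPM-style models. The linear beta schedule and its endpoints are common textbook defaults, assumed here for illustration; they are not Leonardo's actual settings.

```python
import math
import random

def alpha_bar_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_sample(x0, alpha_bar, rng):
    """Closed-form forward step: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    return [
        math.sqrt(alpha_bar) * v + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for v in x0
    ]

alpha_bars = alpha_bar_schedule()
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                             # stand-in for pixel or latent values
x_early = noise_sample(x0, alpha_bars[10], rng)   # still close to x0
x_late = noise_sample(x0, alpha_bars[-1], rng)    # almost pure Gaussian noise
print(alpha_bars[10], alpha_bars[-1])
```

The reverse process trains a denoiser to undo these steps; fewer sampler steps mean fewer denoiser calls, which is where the speed versus fidelity trade-off comes from.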

Head-to-Head: Feature Matrix

| Feature | Perplexity PPLX API | Leonardo Diffusion XL |
| --- | --- | --- |
| Primary function | Real-time retrieval-grounded language generation | Text-to-image diffusion generation |
| Output | Text answers with citations | High-res images/assets |
| Freshness | ✅ Online retrieval (live) | ❌ Static training corpus |
| Provenance | ✅ Source links/citations | ⚠ Limited (training corpus provenance not live) |
| Typical latency | Low for text (tens–hundreds ms) | Higher (seconds; GPU cost) |
| Cost model | Token/inference / QPS | Per-image compute; credit tiers |
| Best for | Search, news, research, knowledge | Design, marketing, game art, mockups |
| Hallucination risk | Lower (with a strong retriever) | Not applicable (visual artifacts are different) |
| Creative output | Limited | Core competency |
| Integrations | Embeddable in knowledge stacks | Creative pipelines, asset management |

Performance, Cost & Latency Tradeoffs

PPLX:

- Latency: Optimized for short interactions; network round-trip for retrieval adds small overhead. Architect for caching (query result caches, snippet caches) to minimize repeated retrieval cost.

- Cost: token-centric. Cost scales with query volume and context length. Use smart context selection and passage summarization to lower per-request tokens.

- Scaling patterns: Multi-region retriever caches, async prefetching for common queries, rate limiting, and batching at the API gateway.
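A query result cache with a time-to-live is one of the cheaper wins listed above. The sketch below is a single-process, in-memory illustration; `TTLCache`, `normalize`, and `fake_retrieve` are hypothetical names, and a production setup would more likely use a shared store with the same key and expiry logic.

```python
import time

class TTLCache:
    """Tiny in-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]   # stale: drop it and report a miss
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)

def normalize(query):
    """Cache key: lowercase and collapse whitespace so trivial variants share an entry."""
    return " ".join(query.lower().split())

def cached_retrieve(query, cache, retrieve):
    key = normalize(query)
    hit = cache.get(key)
    if hit is not None:
        return hit
    result = retrieve(query)
    cache.set(key, result)
    return result

calls = []
def fake_retrieve(query):          # stand-in for a live search call
    calls.append(query)
    return [f"snippet for {query}"]

cache = TTLCache(ttl_seconds=300)
cached_retrieve("Latest GPU prices", cache, fake_retrieve)
cached_retrieve("latest   gpu prices", cache, fake_retrieve)
print(len(calls))  # the second call is served from the cache
```

The TTL is the freshness knob: short values suit news-like queries, longer ones suit reference lookups where the underlying sources change slowly.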

Leonardo:

- Latency: Image sampling is compute-intensive; latency depends on sampler steps and hardware. Use optimized samplers, lower step counts, or latent diffusion to reduce runtime.

- Cost: per-image compute (credits, GPU time). Higher resolution and larger batch sizes increase cost.

- Scaling patterns: on-demand GPU pools, progressive generation (thumbnail → final), caching rendered assets for repeat requests.

Evaluation Metrics — Text & Image

Text / PPLX:

- Factuality: Entailment-based checks, question-answer consistency, human evaluation.

- Citation precision/recall: Fraction of claims with correct source attribution.

- ROUGE / BLEU / BERTScore: Useful for specific summarization tasks; of limited value for open-ended QA.

- Human metrics: Trustworthiness, helpfulness, clarity.
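Citation precision and recall can be computed from a small labeled set. The definitions in this sketch are one reasonable choice, stated as assumptions: precision is the share of emitted citations that actually support their claim, and recall is the share of claims that carry at least one correct citation. The `cited` and `supporting` field names are hypothetical.

```python
def citation_scores(claims):
    """claims: list of dicts with 'cited' and 'supporting' sets of source ids.

    precision = correct citations / all citations emitted
    recall    = claims with at least one correct citation / all claims
    """
    total_cited = sum(len(c["cited"]) for c in claims)
    correct_cited = sum(len(c["cited"] & c["supporting"]) for c in claims)
    supported_claims = sum(1 for c in claims if c["cited"] & c["supporting"])
    precision = correct_cited / total_cited if total_cited else 0.0
    recall = supported_claims / len(claims) if claims else 0.0
    return precision, recall

labeled = [
    {"cited": {"s1"}, "supporting": {"s1", "s2"}},   # correct citation
    {"cited": {"s3"}, "supporting": {"s2"}},         # wrong source cited
    {"cited": set(), "supporting": {"s4"}},          # claim left uncited
    {"cited": {"s2", "s5"}, "supporting": {"s2"}},   # one right, one wrong
]
precision, recall = citation_scores(labeled)
print(precision, recall)  # 0.5 0.5
```

The `supporting` sets have to come from human annotation or an entailment model, so the quality of this metric is bounded by the quality of those labels.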

Images / Leonardo:

- FID / CLIP score / IS: Distributional & alignment metrics.

- Perceptual quality: Human A/B tests, technical QA for artifacts (hands, text in images).

- Style consistency: Intra-asset variance and style drift metrics.
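For the alignment and style consistency bullets, a CLIP-style score is the cosine similarity between an image embedding and a prompt embedding. The sketch below assumes the embeddings were already computed by some encoder and uses short toy vectors; the `style_drift` definition (one minus mean pairwise similarity) is a hypothetical metric for illustration, not a standard one.

```python
import math
from itertools import combinations

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def alignment_score(image_emb, text_emb):
    """CLIP-style alignment: cosine similarity of image and prompt embeddings."""
    return cosine(image_emb, text_emb)

def style_drift(image_embs):
    """1 minus the mean pairwise cosine similarity across a set of assets."""
    pairs = list(combinations(image_embs, 2))
    return 1.0 - sum(cosine(a, b) for a, b in pairs) / len(pairs)

prompt_emb = [0.9, 0.1, 0.0]
batch = [[0.8, 0.2, 0.0], [0.85, 0.1, 0.05], [0.1, 0.9, 0.2]]  # third asset is off-style
print([round(alignment_score(e, prompt_emb), 3) for e in batch])
print(round(style_drift(batch), 3))
```

Tracking drift per campaign or per prompt template makes it easier to spot when a template change or a model update has shifted the house style.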

When choosing metrics, align with the product KPI (factual accuracy for knowledge products; brand fidelity for marketing assets).

Real-world Use Cases — where Each Wins

Perplexity PPLX wins when:

- Live news summarization and timeline generation.

- Research assistants that need citations and up-to-date references.

- Market intelligence tools and competitor monitoring dashboards.

- Compliance and legal Q&A systems that require evidence traces.

Leonardo Diffusion XL wins when:

- Producing consistent brand visuals at scale.

- Rapid prototyping of game concept art.

- Marketing campaigns need many variant assets with a stable style.

- Product mockups and editorial illustrations with predictable prompt behavior.

Combined, multi-modal patterns:

- PPLX writes image briefs, alt text, and captions (grounded in context) → Leonardo generates assets from those briefs → PPLX synthesizes release notes, social copy, or image metadata with citations and legal checks.

- Retrieval of brand assets + style prompt templates passed to Leonardo to ensure brand compliance.

Integration Patterns & Architecture Recommendations

“Answer + Asset.”

- User requests a product explainer (text + image).

- PPLX produces a grounded explanation + recommended visual brief (structured JSON: scene, style, focal objects, shot parameters).

- Pass the brief to the Leonardo API to render images.

- PPLX produces final social copy and alt text referencing sources.
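The structured brief in this pattern is the contract between the text and image steps, so it helps to validate it before rendering. The sketch below is one possible shape: the `VisualBrief` fields, the `parse_brief` helper, and the prompt format are assumptions, and the actual call to an image API is deliberately left out.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class VisualBrief:
    scene: str
    style: str
    focal_objects: list
    negative_prompts: list = field(default_factory=list)
    seed: int = 0
    source_urls: list = field(default_factory=list)  # provenance carried from the text step

    def to_prompt(self) -> str:
        """Flatten the brief into a single text prompt for the image model."""
        return f"{self.scene}, featuring {', '.join(self.focal_objects)}, {self.style}"

def parse_brief(raw_json: str) -> VisualBrief:
    """Validate the text model's JSON output before it reaches the renderer."""
    data = json.loads(raw_json)
    missing = {"scene", "style", "focal_objects"} - set(data)
    if missing:
        raise ValueError(f"brief is missing fields: {sorted(missing)}")
    return VisualBrief(**data)

raw = json.dumps({
    "scene": "a desk with an open laptop at sunrise",
    "style": "flat vector illustration, brand palette",
    "focal_objects": ["laptop", "coffee mug"],
    "negative_prompts": ["text", "watermark"],
    "seed": 42,
})
brief = parse_brief(raw)
print(brief.to_prompt())
print(json.dumps(asdict(brief), indent=2))
```

Storing the serialized brief next to the rendered asset gives you the seed, prompt, and sources needed to reproduce or audit the image later.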

“Editorial pipeline.”

- Editorial CMS calls PPLX for article drafts and cited facts.

- Draft + editorial brief passed to Leonardo for hero images.

- Human in the loop reviews both textual facts and imagery for brand safety.

“Automated freshness + asset regeneration.”

- When a fact referenced in an image caption changes, trigger a regeneration workflow that updates the copy (via PPLX) and optionally the asset (via Leonardo), while maintaining provenance logs.
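One way to detect that a referenced fact has changed is to store a hash of the fact with each asset and compare it against the latest retrieved value. The sketch below is a simplified illustration; the asset record layout, the action names, and the log format are all hypothetical.

```python
import hashlib
from datetime import datetime, timezone

def fingerprint(text: str) -> str:
    """Stable hash of a fact's normalized text (lowercased, whitespace collapsed)."""
    return hashlib.sha256(" ".join(text.lower().split()).encode("utf-8")).hexdigest()

def check_for_regeneration(asset, latest_fact, log):
    """Compare the stored fact hash with the latest retrieved fact.

    Returns the actions to run; appends a provenance entry when something changed.
    """
    new_hash = fingerprint(latest_fact)
    if new_hash == asset["fact_hash"]:
        return []
    actions = ["update_copy"]
    if asset["fact_shown_in_image"]:
        actions.append("regenerate_image")
    log.append({
        "asset_id": asset["id"],
        "old_hash": asset["fact_hash"],
        "new_hash": new_hash,
        "actions": actions,
        "checked_at": datetime.now(timezone.utc).isoformat(),
    })
    asset["fact_hash"] = new_hash
    return actions

provenance_log = []
asset = {
    "id": "hero-001",
    "fact_hash": fingerprint("Plan starts at $10/month"),
    "fact_shown_in_image": True,
}
# Whitespace-only difference: treated as unchanged.
print(check_for_regeneration(asset, "Plan starts at  $10/month", provenance_log))
# Price changed: copy and image both need refreshing.
print(check_for_regeneration(asset, "Plan starts at $12/month", provenance_log))
```

Exact hashing only catches literal changes; paraphrased sources that state the same fact would need a semantic comparison step before triggering a costly re-render.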

Operational concerns: caching, provenance logging, cost alarms, A/B testing, and legal review for image copyright and dataset provenance.

Safety, Governance & IP Considerations

PPLX (text)

- Provenance requirements — always surface sources for claims; store retrieval snapshots for audits.

- Moderation — filters for toxic content, defamation checks; human review for high-risk answers.

- Compliance — data retention, right to be forgotten, regulatory constraints.

Leonardo (images)

- Copyright & dataset provenance — Maintain policies for acceptability and attribution. Ensure licensing terms for commercial usage are explicit.

- Content policy & filters — Explicit generation constraints to block disallowed content (adult, illicit).

- Deepfake risk — Safeguards for generating realistic likenesses; require consent flows.

Developer checklist — productionizing PPLX + Leonardo

- Design interfaces: JSON schema for briefs (scene, style, seed, negative prompts).

- Implement retrieval caching: TTLs and cache keys for common queries.

- Control costs: sampling steps, batch rendering, and token pruning.

- Logging & observability: record prompts, retrieval snapshots, image seeds, and final outputs.

- Human pipelines: QA queues for high-risk content; editorial approval gates.

- Evaluation harness: A/B experiments with KPIs mapped to factual accuracy and brand fidelity.

- Security & rate limits: protect APIs and enforce quotas.

- Legal & licensing: store consent and usage rights for generated assets.

Cost modeling — simple Examples

- Text-heavy app (PPLX): 1M queries/month × an average of 200 tokens per query = 200M tokens/month; multiply by the vendor's per-token price to get the monthly cost. Use caching for repeat queries to reduce cost by X%.

- Image-heavy app (Leonardo): 100k images/month × average compute per image → GPU/credit cost. Use progressive rendering and reuse assets to reduce per-asset cost.

Exact numbers depend on vendor pricing; always instrument and simulate consumption.
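The two estimates above can be turned into a small calculator for scenario planning. Every price and rate in this sketch is a placeholder chosen to keep the arithmetic easy to follow, not a real vendor figure, and the function names are illustrative.

```python
def monthly_text_cost(queries, tokens_per_query, price_per_million_tokens, cache_hit_rate=0.0):
    """Token cost after removing queries served from cache."""
    billable_queries = queries * (1.0 - cache_hit_rate)
    return billable_queries * tokens_per_query / 1_000_000 * price_per_million_tokens

def monthly_image_cost(images, price_per_image, reuse_rate=0.0):
    """Render cost after removing requests served from already-rendered assets."""
    return images * (1.0 - reuse_rate) * price_per_image

# Placeholder prices and rates; substitute your vendor's actual pricing.
text = monthly_text_cost(1_000_000, 200, price_per_million_tokens=1.00, cache_hit_rate=0.25)
images = monthly_image_cost(100_000, price_per_image=0.01, reuse_rate=0.5)
print(f"text: ${text:,.2f}/month, images: ${images:,.2f}/month")
```

With these placeholder inputs, the text side works out to 150 per month (750k billable queries × 200 tokens at 1.00 per million tokens) and the image side to 500 per month (50k rendered images at 0.01 each), which shows how cache hit rate and asset reuse feed directly into the bill.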

Evaluation + Monitoring — Metrics you Must Track

PPLX:

- Citation accuracy rate

- Factual contradiction rate (automated detectors)

- Latency P95 and error rate

Leonardo:

- Artifact rate (human-reported)

- CLIP alignment improvement across prompt tuning

- Rendering time distribution & failed render rate
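Several of the metrics above are simple aggregates over request logs. The sketch below computes a P95 latency and a failure rate from toy data using only the standard library; the sample values are made up, and the "inclusive" quantile method is one of several reasonable conventions.

```python
import statistics

def p95(values):
    """95th percentile using the stdlib's inclusive quantile method."""
    return statistics.quantiles(values, n=100, method="inclusive")[94]

def rate(events, total):
    """Share of events over total, guarding against an empty window."""
    return events / total if total else 0.0

latencies_ms = [120, 135, 150, 160, 180, 210, 240, 300, 450, 1200]  # toy sample
print(p95(latencies_ms))           # 862.5
print(rate(events=3, total=200))   # e.g. failed renders over total renders
```

The single slow request dominates the P95 here, which is exactly why tail percentiles are tracked alongside averages.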

How Modern Teams Combine Both

- Marketing team: PPLX drafts product positioning and A/B text variants; Leonardo generates asset variants; analytics measures conversion lift.

- Game studio: PPLX synthesizes lore snippets and item descriptions; Leonardo creates concept art and UI skins; humans curate and pipeline to art teams.

- Knowledge product: PPLX powers search & explanations; Leonardo creates illustrative diagrams or visual summaries for complex concepts; both outputs are stored in CMS with provenance.

Pros & cons

Perplexity PPLX

Pros: real-time facts, lower hallucination risk (with strong retriever), fast for text, provenance.

Cons: limited for creative images, context length & long-term memory constraints, reliant on retriever quality.

Leonardo Diffusion XL

Pros: production-grade images, style reproducibility, prompt robustness.

Cons: no factual web retrieval, sample variance, computation cost, and dataset provenance concerns.

Final Verdict — Which one should you choose?

- If your product’s core value is accurate, up-to-date information with traceable sources, choose Perplexity PPLX.

- If your product’s core value is high-quality, reproducible visual content, choose Leonardo Diffusion XL.

- For most modern products, the optimal architecture is a hybrid stack: PPLX for text intelligence and provenance; Leonardo for visual generation — integrated via structured briefs, approval gates, and shared asset metadata.

FAQs

Can Perplexity PPLX replace Leonardo Diffusion XL?

No. They solve fundamentally different problems: PPLX is for text and real-time data; diffusion models are for image generation. Choose based on your product KPI.

Can Leonardo Diffusion XL answer factual questions?

No. It generates images only and does not provide factual reasoning or live web retrieval.

Which one should a startup choose?

It depends. If you’re building a knowledge-centric product, PPLX. If you’re building a creative asset pipeline, Leonardo. Often, early startups use both: PPLX for copy and SEO, Leonardo for landing creative.

Can the two be used together?

Yes. Hybrid workflows where PPLX generates structured briefs and Leonardo consumes them for asset generation are a high-impact pattern.

Conclusion:

Perplexity PPLX Models / API and Leonardo Diffusion XL are built for entirely different strengths. PPLX excels at real-time, accurate, source-grounded intelligence, making it ideal for search, research, and knowledge-driven products. Leonardo Diffusion XL specializes in high-quality, consistent visual generation for design, marketing, and creative workflows. In 2026, the smartest teams don’t choose between them—they combine both to deliver accurate information and compelling visuals in a single, powerful AI stack.