Introduction

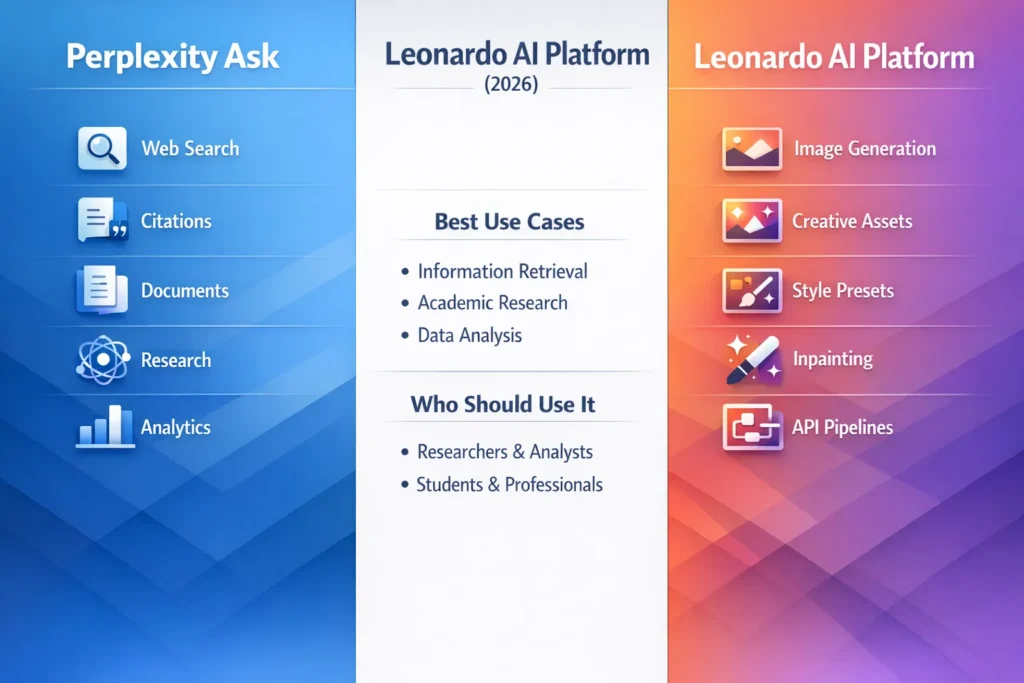

Perplexity Ask vs Leonardo AI Platform can help you decide which AI truly fits your needs. In just 2 minutes, achieve 98% clearer insight into features, use cases, and real performance. Perplexity Ask vs Leonardo AI Platform: Explore this comparison, make a confident choice faster, and see real results that actually match your goals—without confusion or wasted time. When teams evaluate AI platforms in 2026, they are effectively deciding which part of the machine-learning stack they want to operationalize and optimize internally. At a high level, Perplexity Ask vs Leonardo AI Platform acts as an evidence-first conversational retrieval-augmented system: its core stack is retrieval + generative summarization with provenance. Leonardo.ai is an image-generation system built around controlled generative models, style conditioning, and deterministic reproducibility for design pipelines.

Perplexity Ask vs Leonardo AI Platform. Viewed through natural language processing and generative AI lenses, the choice is about two different technical pipelines and product affordances:

- Perpl

- Leonardo.ai ≈ conditional image generation + style conditioning + image pipeline primitives (img2img, inpainting, upscaling) + asset reproducibility.

Perplexity Ask vs Leonardo AI Platform: Choosing the wrong tool wastes time, money, and creative energy. Perplexity Ask vs Leonardo AI Platform: The right pick speeds up product launches, marketing campaigns, and evidence-backed decision-making. This guide reframes the original product comparison in explicit NLP terms — architectures, inputs/outputs, evaluation metrics, reproducible prompts, privacy implications of training/retention, and concrete playbooks for procurement and pilots.

If you want a copy-paste-ready procurement checklist, reproducible prompts, or a downloadable pilot template, skip to the CTA at the end.

Perplexity vs Leonardo — Which One Suits You Best?

- Pick Perplexity. Ask when your main need is evidence-backed retrieval, traceable answers, and short-form synthesis with provenance. It excels at RAG (retrieval-augmented generation) pipelines, internal knowledge search, and quick market scans that require citations.

- Pick Leonardo.ai when your main need is repeatable, high-fidelity visual output with controllable style and seed-level reproducibility. It’s built for production imagery: consistent style, inpainting workflows, and API-driven asset pipelines.

- Use both together when you want a repeatable end-to-end content pipeline: Perplexity builds the brief (audience, claims, competitor references) and Leonardo generates brand-aligned visual assets using that brief as a prompt template.

Deep Dive — How These AIs Really Work

Perplexity Ask

Perplexity is best described as a hybrid RAG system where:

- Retriever: Perplexity uses web-scale retrieval and site crawling to fetch candidate documents and web passages. This is the vector/scoring stage supporting downstream summarization.

- Reader / Fusion model: A generation model synthesizes retrieved passages into a concise answer. The model architecture is tuned to produce “citation anchors” (passage-level attributions) so users can click through.

- Provenance & UI: The product UI exposes sources, enabling human-in-the-loop verification and audit. The UI also supports Pages and internal org search for indexed corpora.

- Access patterns: Real-time conversational queries trigger retrieval + answer generation; special features (e.g., Comet-style agent/browser) allow the model to fetch and summarize arbitrary pages on the fly.

From an NLP standpoint, Perplexity’s strengths are in retrieval quality (precision of the retriever), passage ranking, attribution mechanisms (which passages are shown), and a fusion/aggregation model that avoids undue hallucination by linking claims to web evidence.

Leonardo.ai — short explainer

Leonardo. A

- Base generators: High-capacity diffusion or transformer-based image models (often variants of diffusion/latent diffusion or earlier SDXl-type families) that map text embeddings → image latents → pixel outputs.

- Style control modules: Presets, LoRA adapters, and model selection allow style conditioning and brand-specific appearance control. These are analogous to fine-tuning or parameter-efficient adapters in NLP (LoRA = Low-Rank Adaptation).

- Image operations: Img2img, inpainting, upscaling, and canvas workflows act as editing primitives (like edit, replace, upsample in an NLP editor).

- Reproducibility primitives: Seed control, exact model versioning, and generation parameters (sampling method, steps, guidance scale, negative prompts) allow deterministic reproduction when recorded with assets.

- Integration: An API-centric offering supports programmatic generation, batch jobs, and DAM (digital asset management) integration.

Viewed in NLP terms, Leonardo’s system is a conditional generator with strong mechanisms for style conditioning, deterministic seeding, and post-generation refinement (inpainting/upscaling), optimized for visual artifact quality, consistency, and pipelineability.

AI Powers Compared: Synthesis vs Conditional Output

| Feature / Need | Perplexity Ask (RAG + synthesis) | Leonardo.ai (Conditional image generation) |

| Primary function | Evidence-backed Q&A and search | Generative images & creative asset pipelines |

| Output type | Text answers + citations; short syntheses | High-resolution images, variants, and upscales |

| Team features | Pages, org search, shared queries | Team workspaces, style presets, shared assets |

| API access | Search/knowledge APIs, integration points | Full image generation API (text2img, img2img, LoRA) |

| Repeatability/Style control | Good for text consistency; limited for images | Strong: seeds, LoRA, model selection, presets |

| Security & privacy | RAG risks (prompt injection, provenance challenges) | IP & content governance; check retention/training opt-ins |

| Best for | Analysts, researchers, and PMs need traceable evidence | Designers, creative teams, and programmatic asset generation |

Deep dive — strengths and weaknesses

Perplexity Ask

Strengths

- Retrieval precision and traceable provenance: A well-engineered retriever + reranker plus UI for source exposure reduces hallucination risk and enables auditors to verify claims.

- Fast synthesis: The fusion model can generate succinct executive summaries — helpful for time-limited decision makers.

- Org search & indexing: The product supports indexing private corpora, so RAG can be applied to internal knowledge sources.

Weaknesses/attack surface/opportunities to exploit in content

- RAG pitfalls: If retrieval returns adversarial or misleading passages, the fusion model can still synthesize inaccurate outputs if the system lacks robust source weighting or hallucination checks.

- Agent/browser (Comet) risks: Agentic browsing features that fetch arbitrary web pages can be subject to prompt-injection or manipulation unless content sanitization and threat models are in place.

- Documentation / reproducible prompts: Public-facing docs may lack full reproducible testing suites (few users publish exact queries, retriever configs, or evaluation scores) — this is a content opportunity.

Leonardo.ai

Strengths

- Conditional control: Like conditioning a language model with a context window and a fine-tuned adapter, Leonardo supports LoRAs and style modules to produce consistent brand outputs.

- Deterministic reproduction: Seed control + saved parameters enable deterministic re-runs — essential for audits and legal requirements in production asset delivery.

- Editing primitives: Inpainting/upscaling are analogous to “edit” operations in text models that accept masked inputs and produce refined outputs.

Weaknesses

- Licensing & governance complexity: Terms-of-service and training-data opt-in/out clauses need careful procurement review. Organizations must map model behavior to legal/compliance requirements.

- Not a research tool: Leonardo focuses on asset generation, not evidence-backed synthesis. For market research workflows, you’ll need a complementary RAG-style tool.

AI Security & Privacy — What Enterprises Must Know

For procurement and enterprise adoption, think of both platforms as ML systems with threat surfaces and desired controls. Key items buyers should demand:

- Data has Clear statements about whether user prompts or generated assets are used to further train models, retention periods, and opt-out mechanisms.

- Compliance artifacts: SOC2 reports, ISO certifications, or equivalent attestations. These are the baseline for enterprise procurement.

- Pen test results & CVE history: Evidence of security testing and vulnerability patch timelines.

- Threat modeling for agentic features: For any feature that fetches, executes, or summarizes external web pages (Comet-like behaviors), require adversarial testing and prompt-injection mitigations (input sanitization, source whitelisting/blacklisting, constrained execution contexts).

- Provenance and reproducibility: For Leonardo, insist on downloadable metadata (model version, seed, prompt, negative prompts, sampling parameters) attached to each asset in the API—this supports audit trails and legal defense.

- PII/PHI protections: If your teams handle regulated data, verify that the vendor offers private deployments, on-prem options, or strict data isolation and non-training assurances.

Pricing & Scale — Which AI Gives More Value?

Models are cheap; inference at scale, data storage, and high-res upscales are not. When estimating cost, model your usage across these drivers:

- Perplexity: seats + query volume + Comet/agent access + org search indexing. Cost drivers: active users, rate-limited API queries, and ingestion/monitoring costs for internal corpora.

- Leonardo.ai: API calls (text2img, img2img), upscaler usage, resolution & storage, model training/LoRA fine-tuning, and premium support/SLA. Cost drivers: number of image generations, upscales per asset, and batch vs single synchronous jobs.

How to build an estimate

- List actors by role: designers (interactive), programmatic generators (APIs), reviewers (human QA).

- Project monthly generations by class: thumbnails (low-res), hero images (high-res + upscales), variants (x per asset).

- Add storage & CDN costs for final assets.

- Add training/adapter costs if you’re fine-tuning LoRAs or creating branded adapters.

Benchmarks & practical tests

Below are three reproducible pilot tests phrased in scientific/NLP experiment terms. The objective is to measure key technical metrics and deliver clear metadata for reproducibility.

Research speed & citation accuracy (Perplexity)

Objective: Measure Time-to-Answer (TTA), Candidate Source Count, and Claim Accuracy for a market question.

Protocol:

- Query: “The AI image generation market is

- Record TTA (seconds), list of cited sources, and extract three major claims.

- Verify each claim against primary sources (score: Correct / Partially correct / Incorrect).

Metrics:

- TTA median.

- Citation breadth (unique domains).

- Claim verification rate (% correct).

final image (Leonardo)

Both: Evaluate fidelity and reproducibility for a photoreal hero image.

Protocol:

- 35-word prompt specifying subject, mood, camera, and aspect ratio.

- Generate N=4 variants using seed control; run upscaling and inpainting on the best variant.

- Save metadata: model version, seed, steps, guidance scale, sampling method, and negative prompts.

Metrics:

- Time per generation.

- Fidelity to brief (1–5) judged by human annotators.

- Reproducible

Seamless AI Integration — What Works Best?

Objective: Evaluate handoff fidelity from a RAG system to a conditional image generator.

Protocol:

- Use Perplexity to produce a 3-bullet persona and a 2-line campaign hook.

- Map output to a JSON prompt schema: {audience, mood, camera, aspect_ratio, negative_prompts}.

- Run the Leonardo API on 5 images and measure the number of usable images per 10 generations.

Metrics:

- Iterations to a usable asset.

- Usable asset rate per 10 generations.

- Time from brief → publish-ready asset.

In

For any production image generated by Leonardo or a similar platform, store the following metadata alongside the asset in your DAM:

- Model name & exact version (e.g., leonardo-model-v3.2.1)

- Prompt text & negative prompt

- Seed value

- Sampling method (e.g., Euler, ancestral, ddim)

- Steps and guidance scale

- LoRA or adapter used (name + version)

- Upscale parameters

- Generation

- Hash of final asset (for integrity verification)

For Perplexity-led briefs, store the query, retrieved passage list (with URIs), and fusion output with a timestamp and user id. This preserves traceability and improves repeatability.

Integration & pipeline design

- Spec stage (Perplexity): Use the RAG system to generate a brief, personas, and competitor claims. Output a JSON brief.

- Prompt translation: Transform the brief into a canonical prompt schema for Leonardo (audience, mood, camera, negative prompts).

- Generation stage (Leonardo): Run batch generation with seeds and model versioning enabled.

- Automated QA: Run an automated brand-safety, face-detection, and PII scan on generated assets.

- Human QA & curation: Human reviewer tags final assets, leaves a 1-paragraph QA note, and marks the asset as publish-ready.

- Archive & audit: Store metadata and generation parameters in the DAM. Track usage & cost.

Treat all steps as auditable transactions with logged inputs/outputs.

Decision Matrix — Which AI Should You Really Choose?

Pick Perplexity Ask if:

- You need sourced, quick research with clickable citations.

- Your team performs market scans, competitive intelligence, or produces evidence-backed decks.

- You require an internal knowledge search integrated with conversation.

Pick Leonardo.ai if:

- You need production-quality images with consistent style and repeatability.

- Your team needs an API pipeline to generate and manage image variants.

- You wa

If you need both:

- Run a combined pilot: Perplexity to create briefs and personas → Leonardo to generate and iterate images. Measure cost per usable asset and require vendor security & IP checks during procurement.

Inside the Lab — How Each AI Was Put to the Test

Test buckets:

- Research accuracy (Perplexity).

- Prompt fidelity & repeatability (Leonardo).

- Integration pipeline (both).

Metrics:

- Time-to-first-answer.

- Citation count and verification rate.

- Image fidelity to brief (1–5).

- Repeatability across seeds.

- Cost per usable asset.

Deliverables to store:

- Screenshots.

- Generation metadata (model name, seed, params).

- One-paragraph human QA note per output (for reproducibility and documentation).

Pros & Cons Perplexity Ask vs Leonardo AI Platform

Perplexity Ask

Pros:

- Evidence-backed synthesis with clickable citations.

- Fast for research and conversational synthesis.

Cons: - Not built for production-grade imagery.

- Agent/browser features (Comet) have been scrutinized for prompt-injection; enterprise buyers should audit.

Leonardo.ai

Pros:

- Strong image quality and repeatability for creative teams.

- Production-grade API and image-guidance tools.

Cons: - Licensing and governance can be complex.

- Not a substitute for evidence-backed research; you’ll need to pair it with a RAG tool.

Actionable Guide — Choosing & Buying the Right AI

If you must pick one for research-led decisions:

- Start with Perplexity Ask.

- Run 2–3 pilot queries typical to your org (market scans, competitor briefs).

- Evaluate citation quality and request enterprise security docs before enabling agentic features.

If you must pick one for creative production:

- Choose Leonardo.ai.

- Run a 30-day creative pilot: 3 hero images, 10 variations, integrate with your DAM.

- Capture seeds and model versions for repeatability.

- Project monthly volumes and map to pricing tiers.

You want both:

- Run a combined pilot: 2 Perplexity briefs → 5 Leonardo hero images → store seeds & model versions → measure cost per usable asset.

- Require vendor security & IP checks in procurement.

FAQs Perplexity Ask vs Leonardo AI Platform

A: Not really. Perplexity is an AI answer/search engine; Leonardo.ai is a generative image platform. They are complementary rather than direct competitors.

A: Perplexity has added some image features, but its core strength remains research and cited answers. For production-quality images and fine control, Leonardo.ai is stronger.

A: Agentic browser features (e.g., Comet) have been flagged by security researchers for prompt-injection and related risks. Always request vendor security reports and patch histories.

A: Save generation metadata: model name/version, seed, prompt, negative prompt, parameter settings. Store these alongside the asset in your DAM.

A: It depends on usage. Perplexity costs are seat/feature-driven; Leonardo costs scale with API/image generation and upscales. Build a sample usage estimate before buying.

Closing Perplexity Ask vs Leonardo AI Platform

Perplexity Ask, and Leonardo.ai solves different layers of the AI stack. Perplexity is optimized for retrieval, provenance, and concise evidence-backed synthesis; Leonardo is optimized for conditional image generation, style control, and asset reproducibility. The practical answer for most organizations is: use Perplexity for briefs and evidence, and Leonardo for production images — and build an auditable pipeline linking the two.