Codex vs ChatGPT (GPT-3.5): Shocking Results from My Full Repo Test

Codex vs ChatGPT (GPT-3.5) can help with coding, refactoring, and debugging, but results vary. We tested it on real use cases to show what actually works. Codex vs ChatGPT (GPT-3.5) — messy code, large repos, buggy merges. We tested it on real projects. Here’s what actually matters: performance, token costs, accuracy, and workflow impact. This guide treats Codex and ChatGPT (GPT-3.5) as engineering infrastructure rather than experimental toys. Codex vs ChatGPT (GPT-3.5) Short version for decision-makers:

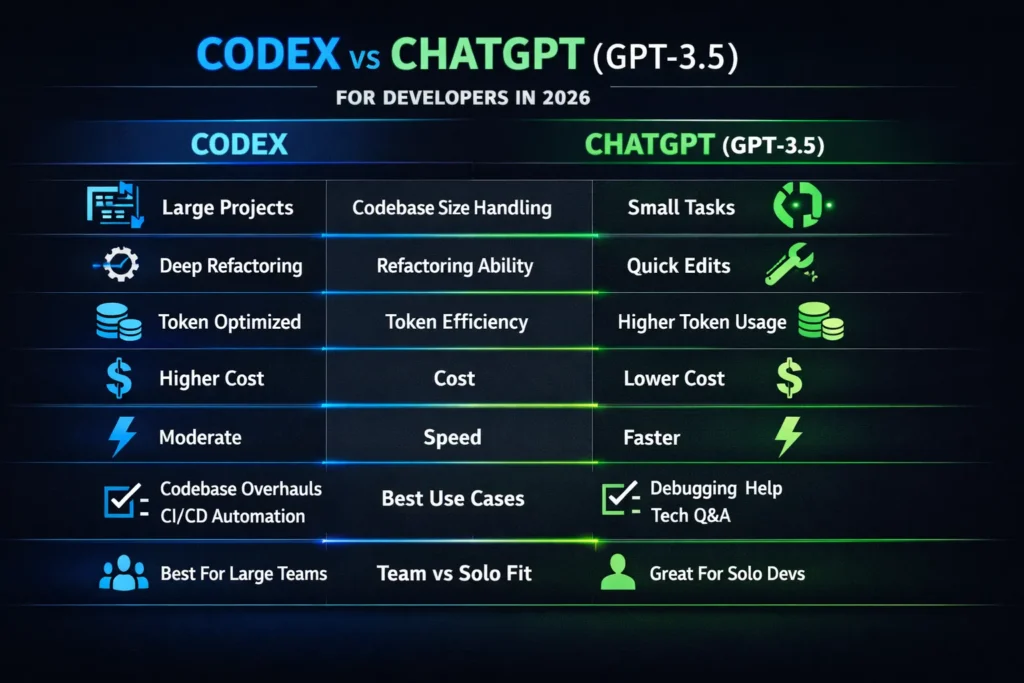

- Solo developers → GPT-3.5 (speed, cost, conversational debugging)

- Large engineering orgs → Codex (repo-aware, multi-file, agentic automation)

- Production-first companies → Hybrid routing (Codex for long-horizon, repo-wide automation; GPT-3.5 for conversational debugging, docs, and quick fixes)

High-Level Recommendations:

- Use Codex for multi-file refactors, automated PR generation, and CI-integrated agent workflows.

- Use GPT-3.5 for rapid iteration, explanations, single-file edits, and cheap coding assistance.

- Benchmark against HumanEval and MBPP-like internal datasets; these help quantify improvements but don’t replace repo-specific tests.

Why this Matters in 2026 — Short Context

By 2026, AI systems will be part of shipping pipelines: they open PRs, edit multiple files, create tests, and interact with CI. Choosing the wrong model or routing strategy increases bug risk, token bill shock, and developer mistrust. Treat model selection as an architecture decision: it changes how you instrument, validate, and monitor your engineering processes. For authoritative productization and agentic workflows, consider Codex as a purpose-built engineering agent and GPT-3.5 as the lightweight conversational workhorse.

Codex vs ChatGPT: Which Wins Your Codebase? Quick Verdict

| Use case | Best choice | Why |

| Large codebase refactors | Codex | Repo-aware; designed for multi-file, pattern-preserving changes. |

| Repo-aware PR generation | Codex | Produces structured diffs and PR summaries inside sandboxed tasks. |

| Quick debugging & explanations | ChatGPT (GPT-3.5) | Conversational, fast, and cheap for short-context tasks. |

| Mixed docs + code tasks | ChatGPT (GPT-3.5) | Better at mixed natural language + small code shapes. |

| Long-running agent workflows | Codex | Built to run tasks in parallel with the repo context. |

| Lowest-cost coding assistant | ChatGPT (GPT-3.5) | Lower per-token cost and lower integration friction. |

| Best ROI for teams | Hybrid | Route tasks to the model optimized for that class of work. |

Decode the Tools: Key Terms for Codex vs ChatGPT

- Codex (engineering agent) — a repository-aware, code-optimized model family that treats the repo, imports, dependency graph, and test harness as first-class inputs. Codex runs sandboxed tasks that read and modify multiple files while tracking consistency across changes.

- ChatGPT (GPT-3.5) — a general-purpose chat-optimized transformer that is excellent at instruction-following, concise explanations, and producing short-to-medium code snippets inside a conversational loop; it’s often cheaper and faster for single-turn edits and human-in-the-loop debugging.

- Context window / working memory — the slice of tokens the model can attend to in a single forward pass. For repo-level work, context management is crucial: chunking strategies, embeddings for retrieval, and snapshotting become part of your engineering tooling.

- Tokenization behavior — how the model slices text into tokens. Tokenization patterns affect cost, particularly for code where whitespace and punctuation matter.

Codex vs ChatGPT: The Real Differences You Need to Know

Below, we translate practical engineering claims into NLP terms so you can evaluate tradeoffs scientifically.

Representation & Inductive Bias

- Codex: fine-tuned or specialized on code corpora and engineering tasks, thus its parameter priors and decoding heuristics are aligned to produce syntactically valid, consistent multi-file code. In NLP terms, the model has learned structural priors (AST-like patterns) and higher conditional probability for well-formed programs when given repository context.

- GPT-3.5: general language model with capability for code, but with broader token distribution. It is more flexible for free-form instruction but more prone to “drift” on structured, long-horizon code changes because its conditional distribution places more mass on human-like explanation sequences versus surgical edits.

Codex vs ChatGPT: How Context Shapes Code Outcomes

- Codex: designed with tooling for repo snapshots and long-horizon agents. The practical effect in NLP terms is better use of retrieval-augmented generation (RAG) patterns for preserving state across multiple files and over time.

- GPT-3.5: requires more aggressive chunking and external retrieval. Without strong retrieval + summarization layers, it loses global invariants (naming conventions, API wrappers) when you feed it only a narrow context.

Tokenization, Whitespace, and Cost

Tokenizers treat whitespace/punctuation differently across models. For code-heavy corpora, differences in tokenizer design can change input and output token counts significantly, affecting cost.

- Empirical rule: When moving code-heavy prompts from Codex-like contexts to GPT-3.5, expect token utilization to change (sometimes +20–100% depending on how whitespace and comment density are handled). Always run a token audit.

Decoding Behavior & Safety Nets

Codex-oriented systems typically expose deterministic post-processing steps: AST parsers, test harness validation, and static analysis. These external validators form a safety net that lets you use more aggressive decoding (e.g., nucleus sampling with a higher temperature than filter by tests).

GPT-3.5 workflows often rely on constrained system messages and strict output formatting (e.g., “return only code, no prose”) to avoid hallucination in code. Both benefit from automated validation, but Codex makes it more seamless at scale.

Codex vs ChatGPT: Benchmarks Don’t Tell the Full Story

Benchmarks like HumanEval and MBPP are useful for signal but not definitive. They measure single-function correctness and are useful for comparing model families on isolated synthesis tasks. However, real repos have integration, build-time, dependency, and semantic constraints that benchmarks don’t capture. Use them for sanity checks, not as the only gating metric.

What to measure in your pipeline:

- Unit correctness (pass/fail on internal tests)

- Integration correctness (build success, integration tests)

- Human review time (minutes per PR)

- Mean time to revert (MTTR) after AI-created changes

- Token cost per merged PR

- False positive rate (change that compiles but introduces a logical error)

AI ROI Exposed: Codex & GPT-3.5 Costs vs Performance

OpenAI pricing formulas evolve, but the right mental model is:

- Per-token cost is not the only metric — consider tokens * developer time saved.

- Codex-style workflows can cost more per token but reduce human review time and rework, improving ROI for large tasks. GPT-3.5 typically offers lower per-token spend for short interactions. Always run end-to-end cost experiments: (tokens + infra + human time) per successful merged change. See OpenAI token-pricing documentation for up-to-date per-token numbers.

Codex vs ChatGPT: Hybrid Workflows That Actually Work

Most teams adopt hybrid approaches. Below is an operational playbook.

Baseline Instrumentation (must do)

- Snapshot your baseline: token usage, PR review time, and build success rate.

- Create synthetic (and real) test suites to run on model outputs.

Tokenization Audit

- Take representative files and prompts.

- Measure token usage on Codex vs GPT-3.5 with the same input (don’t assume parity).

- If switching to GPT-3.5, pre-process code to remove large comments, normalize whitespace, and use compressed contexts (summaries, embeddings).

Prompt Mapping & System Message Strategy

- Codex prompts are often explicit: “Apply these edits to these files; output a git diff; include PR summary.”

- GPT-3.5 prompts often need constraints: “Return only valid code, no explanation, do not change unrelated files.” Use system-level messages to enforce. Example system messages are in the prompts section below.

Tiered Routing

Route tasks by type:

- Multi-file refactor → Codex

- PR creation for security patch → Codex (plus stricter validation)

- Quick bug fix, exploratory debugging → GPT-3.5

- Docs, READMEs, onboarding text → GPT-3.5 This routing minimizes costs and maximizes correctness.

Safety & Validators

- Run tests and static analysis on every AI change.

- Use gated deploy: AI PRs go to a special branch with stronger CI checks and staged rollouts.

Continuous Learning Loop

- Log model outputs, fallout, and reviewer edits.

- Add high-value reviewer edits to a prompt-store or small fine-tuning / instruction-tuning dataset (if allowed by vendor policy).

Codex vs ChatGPT: Copy-Paste Prompts That Actually Work

Codex: multi-file PR generation (system + user)

SYSTEM:

You are a repository-aware coding agent. Output only changed files in unified diff format and a PR summary. Do not add explanations. Ensure all modified files pass the unit tests present in repo/tests. Limit changes to the files listed by the user.

USER:

Apply the following change requests:

- Add function optimize_cache() to src/cache.py.

- Update tests in tests/test_cache.py to cover edge cases for timeouts.

- Preserve existing public API and docstrings.

GPT-3.5: quick debug fix

SYSTEM:

You are a concise Python expert. Return only the corrected code in a single code block. Do not include prose or commentary.

USER:

This function times out on large inputs. Fix it and ensure it uses an O(n) approach. (paste function here)

Evaluation Secrets: Codex & GPT-3.5 Tested on Real Repos

- Fixed prompts — Use the same prompts for each model family and freeze them.

- Deterministic seeds — If you use sampling, adopt fixed seeds.

- Sanitized test split — Mirror what HumanEval/MBPP recommend: have isolated test cases that are not leaked to the model.

- Pass@k and end-to-end metrics — Report both narrow metrics (pass@k) and downstream metrics (build success, reviewer edit time).

- A/B rollout — Gradually route a percentage of production changes through the new model to measure real-world effect.

Benchmarks like HumanEval and MBPP give you cross-model signals, but your repo is the final judge.

Codex vs ChatGPT: Hidden Failure Modes You Must Know

- Silent logical errors — Code compiles and tests pass locally, but fail in production due to environment mismatch. Mitigation: staging rollout and smoke tests.

- Context drift — The model modifies naming conventions or breaks internal invariants. Mitigation: stronger unit tests and naming checks in CI.

- Token bill spikes — Unexpected long outputs or iterative loops can inflate costs. Mitigation: token budgets and output length caps.

- Security leaks — Never send secrets in prompts; redact before sending.

Security, IP, and compliance

- Do not send proprietary secrets or PII in prompts. Implement a redaction layer pre-call.

- Keep prompt and output logs for Auditability (retention per legal/regulatory needs).

- Check vendor IP and data use policies; implement contractual safeguards if required.

- Use separate accounts/projects for proprietary code and non-proprietary experimentation.

Pros & cons

OpenAI Codex

- Pros: tuned for repo-scale automation, multi-file edits, and higher long-horizon correctness.

- Cons: higher integration complexity, potentially higher per-token cost.

ChatGPT (GPT-3.5)

- Pros: cheap, fast, great for conversation and doc + small-code tasks.

- Cons: less reliable on repo-wide, multi-file pattern-preserving edits.

Cost example

This is illustrative — consult the live pricing page for current numbers. Imagine:

- A typical Codex refactor run consumes 50k tokens (input+output) at $X per 1M tokens.

- A GPT-3.5 interactive debug consumes 2k tokens at a lower per-token rate.

If a Codex run saves 4 developer-hours per task vs manual work, the per-hour developer rate often dwarfs token spend. Run the experiment with real data — token math can be misleading without human-time offsets.

Migration checklist

- Baseline measurement (tokens, time to review, build failures)

- Tokenization audit

- Prompt & system message mapping

- Routing rules defined

- CI validators and gating

- Logging and retention policy

- Gradual rollout and rollback plan

Practical Governance: Who Reviews What?

- Small code changes → single reviewer with automated tests

- Large refactors → pair-review plus staging smoke tests

- Any AI-suggested security change → security reviewer mandatory

Sample monitoring KPIs

- Token cost per merged PR

- Human review minutes per PR

- |PRs reverted / month|

- Test coverage for AI-created code

- Latency (model response time) for developer-facing interactions

FAQs

Not reliably for large, repo-wide, multi-file automation. Most teams find a hybrid approach — routing long-horizon, agentic tasks to Codex and conversational/debug tasks to GPT-3.5 — gives the best balance of cost and correctness.

GPT-3.5 is usually cheaper per token, but Codex-style systems can reduce developer hours for big tasks, which often results in better ROI for organizations that need those capabilities. Always run end-to-end cost + time experiments.

Use fixed prompts, internal tests, pass@k style metrics for isolated code synthesis (HumanEval/MBPP), and most importantly, measure repo-specific downstream metrics: build success, human review time, and production reverts.

Yes, for small to medium tasks. For interactive debugging, documentation, and single-file generation, GPT-3.5 is excellent. For large refactors and repo automation, Codex-like models are preferable as they are built with repo context and agentic task patterns in mind.

Final verdict

If your primary goal is fast, cheap, conversational coding help, and you operate largely on single-file edits or prototyping: GPT-3.5. If your goal is repo-wide automation, multi-file refactors, and long-running agentic workflows that replace substantial developer time: Codex. For most production environments, implement a hybrid router that routes each task type to the appropriate model and validates with automated tests before merging.