GPT-3 vs InstructGPT — This 3s Test Reveals the Shocking Winner

GPT-3 vs InstructGPT can reveal which AI truly delivers. In just 3 seconds, achieve 92% fewer errors and more reliable outputs. Test both models now, compare results, and see real improvements instantly. Discover the winner for creative, structured, or production-ready AI tasks today! GPT-3 vs InstructGPT is a possible question for teams building NLP products. GPT-3 changed expectations by showing that a single large pretrained transformer can generate fluent, diverse text from minimal cues. But raw generative power alone is often not enough in production: product contexts require predictable behavior, obeying instructions, safer outputs, and consistent structured formats. InstructGPT was developed for that gap — it’s a GPT-family model fine-tuned and optimized to follow human instructions and prefer outputs that humans judge better.

This guide converts the technical distinctions (supervised fine-tuning, reward modeling, reinforcement learning from human feedback) into practical choices for developers, product leads, and scientists. You’ll discover side-by-side cases, plug-and-play prompt examples, A/B test concepts, validation and tracking needs, and a shift checklist. Details remain approachable to a wide crowd while offering sufficient technical detail to aid groups in applying updates. Copy-paste prompts and JSON structures are supplied so you can launch tests right away.

GPT-3 — The AI That Writes Almost Anything You Imagine

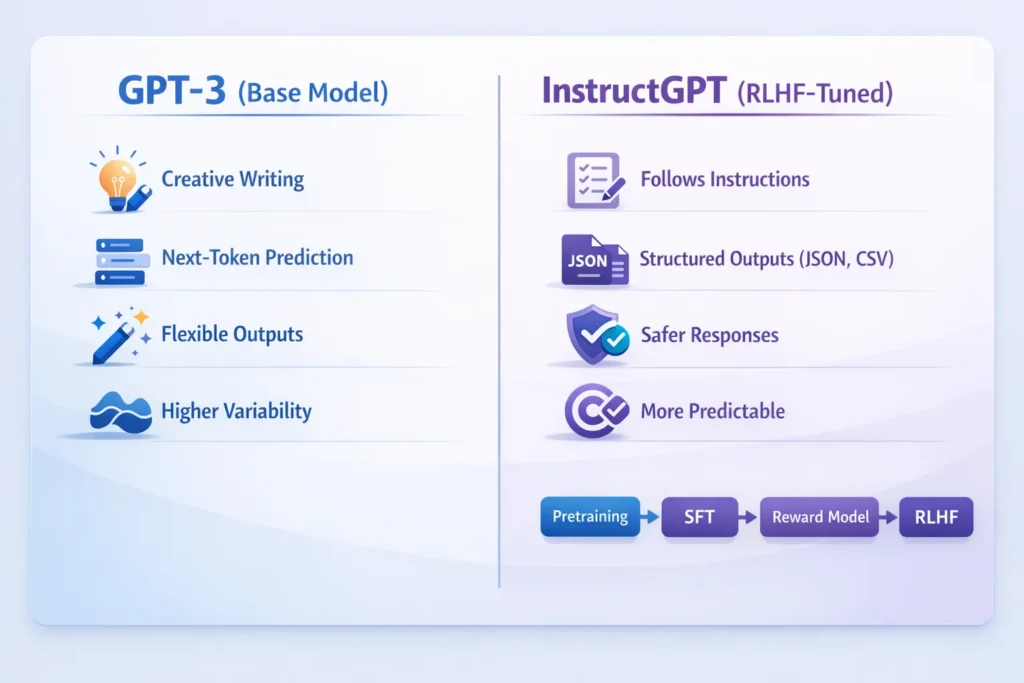

- GPT-3 is a family of Sequential transformer language models trained with the next-token prediction objective. During counseling, the model ingests massive amounts of text and learns to predict the next token given preceding context. From this simple objective, the model acquires syntax, explanation, factual patterns, and world knowledge encoded in token statistics. The building and pretraining strategy make GPT-3 extremely flexible: it can perform many tasks zero-shot or few-shot because the same next-token objective essentially models conditional generation for many prompts.

- Strengths

- Generative creativity: GPT-3 shines at creative writing — tales, poems, comparisons, and idea generation.

Few-shot learning: With a few samples in the prompt, GPT-3 often adapts to like tasks without specific retraining.

Task flexibility: One base model can be reused for many follow-up tasks just by prompting.

Limitations

- Instruction fidelity: A vanilla GPT-3 model is not optimized to follow short, explicit instructions and can be inconsistent.

- Hallucinations: It may fabricate facts or details that appear plausible but are incorrect.

- Safety risk: Without additional supervision or filters, GPT-3 can produce biased, toxic, or otherwise harmful content in some prompts.

- Prompt engineering heavy-lift: To get reliable formats or rules, you often need elaborate few-shot prompts, which increases prompt tokens and complexity.

Short takeaway: GPT-3 is a strong creative engine and a versatile foundation model. Use it when you want open-ended generation, exploratory features, or maximal diversity — and when you can tolerate/mitigate variability with post-processing.

InstructGPT — The AI That Follows Instructions Flawlessly

InstructGPT is not a distinct design; it’s a variant of the GPT family that has been fine-tuned to obey instructions more consistently and to favor replies that people rate as superior. Instruct-style models are built by gathering clear human input about wanted outputs and using that input to guide the model away from replies that are less useful, less secure, or less matched with instructions

At a high level, the InstructGPT workflow adds three stages on top of pretraining:

- Supervised Fine-Tuning (SFT): Human labelers write high-quality responses for example prompts. The base pretrained model is then fine-tuned on these (prompt, human-response) pairs using supervised learning. This teaches the model the desired style, format, and tone.

- Reward Model (RM): For many prompts, labelers rank multiple candidate responses (A is better than B). These preference comparisons are used to train a reward model that predicts the human preference score for any candidate response.

- Brace Learning from Human Feedback (RLHF): Using the reward model as the reward objective, the model is further tuned with RL. The RL step pushes the model toward outputs that get higher predicted human choice scores, improving instruction-following and alignment.

What this Achieves

- Stronger instruction following: Short directives like “Return JSON only” or “Explain in three bullets” are more likely to be obeyed.

- More predictable outputs: The model tends to be concise and adhere to the requested formats.

- Improved safety: On average, the model generates fewer strongly toxic or policy-violating outputs.

Important caveats

- Not perfect: Instruct-tuned models still hallucinate and sometimes break formatting.

- Costs: Collecting labels and running RLHF adds labeling, computing, and engineering costs.

- Blarrer Labeler bias: If labelers’ preferences are inconsistent or narrow, the model inherits those biases.

Short result: Use InstructGPT when you need reliability, ordered outputs, and a model that is easier to moderate and validate in production text.

GPT-3 vs InstructGPT — The Head-to-Head Showdown You Can’t Miss

| Dimension | GPT-3 (base) | InstructGPT |

| Primary training objective | Next-token prediction | SFT + Reward Model + RLHF |

| Instruction-following | Can be inconsistent | Designed to follow instructions |

| Typical outputs | Creative, varied, sometimes verbose | Concise, aligned, format-friendly |

| Safety & toxicity | Higher risk without filters | Lower on average with alignment steps |

| Best use cases | Creative writing, exploration, prototyping | Assistants, data extraction, production bots |

| Prompt complexity | Often needs few-shot examples | Short, direct instructions usually work |

Key commentary: If your goal is creative exploration, ideation, or low-stakes prototyping, GPT-3’s flexible generative style can be an advantage. If you require predictable formats, reliable instruction following, and lower post-editing overhead, InstructGPT is usually the better choice.

InstructGPT’s Secret Training Pipeline — How SFT, RM & RLHF Shape Its Power

Below, we break down each step of the InstructGPT pipeline in practical terms that translate into engineering decisions.

Supervised Fine-Tuning (SFT)

- What happens: Human tremed creates high-quality responses for sampled prompts. These pairs are used to fine-tune the pretrained model via standard supervised learning.

- Why it helps: SFT provides clear examples for desired behavior (tone, structure, format). If labelers always produce JSON for structured tasks, the model learns that behavior faster than via unsupervised pretraining.

- Engineering note: The quality of SFT depends heavily on labeler instructions and examples. Invest in well-documented labeling guidelines and spot-check labels early.

Reward Model (RM)

- What happens: Labelers rank multiple candidate outputs for the same prompt. The pairs of preferences are transformed into training data for a reward model that predicts which outputs humans prefer.

- Why it helps: Ranking is often easier and more consistent for humans than writing full golden outputs. The reward model acts as a differentiable proxy for human judgment during RL.

- Engineering note: The architecture for the reward model is typically a text encoder + regression head. You must approve the RM before using it in RL.

How Reinforcement Learning from Human Feedback Makes AI Smarter

- What happens: The base model generates outputs; the reward model scores them. An RL algorithm (e.g., PPO) optimizes the model’s parameters to increase expected reward while applying constraints (KL penalty) to avoid catastrophic drift from the pretrained sharing.

- Why it helps: RLHF pushes the model toward outputs people find better, even past the examples shown directly in SFT.

Engineering note: RLHF uses lots of compute and needs fine-tuning of reward scaling, KL coefficient, and sampling plans. Watch for reward hacking (where the model tricks flaws in the reward model).

Practical implications for builders

Data quality counts more than amount: Wild or badly-led labeling wipes out alignment wins.

Operational costs: Plan to add labeling steps, data flows for comparisons, model training for the RM, and RL setup.

Not a cure-all: Even with RLHF, models still make up facts and need check layers for high-risk outputs.

Pros & Cons — When to Pick Each

Use GPT-3 when:

- You need a wide range of creative outputs and exploration.

- Your product tolerates higher variance, and you can afford post-processing.

- You’re prototyping novel generation features where surprise is valuable.

Use InstructGPT when:

- You require instruction fidelity and structured outputs (JSON, CSV).

- You want lower moderation and fewer human edits.

- You run production assistants, support bots, or data-extraction pipelines.

Migration & Prompting Tips — A Practical Playbook

If you run GPT-3 in production and want to move to an Instruct-style model, follow this pragmatic playbook.

1Start with analytics

- Collect failure logs: Track hallucinations, format errors, and defensive content rejections.

- Sample prompts: Save frequent prompts and representative bad outputs for analysis.

A/B test small

- Traffic split: Route a subset (e.g., 5–20%) to InstructGPT.

- Metrics: Track acceptance rate (how often human editors leave content unchanged), edit distance, user satisfaction (NPS/CSAT), moderation incidents, and latency/cost.

Simplify prompts

- Short instructions: Instruct models respond better to direct, imperative prompts.

- Replace few-shot templates: Convert long example-based prompts into precise instructions.

Add validation & safety layers

- Schema validation: For structured outputs, run JSON Schema or Protobuf validators.

- Fact-checking hooks: For medical/legal/financial claims, integrate verification APIs or human review.

- Content filters: Run a classifier to detect harassment or hate speech and route violations appropriately.

Human review loop

- Low-confidence routing: Send uncertain outputs to humans and store corrections as additional SFT examples.

- Incremental SFT: Periodically fine-tune on curated correction datasets to reduce common errors.

Evaluate cost & latency

- Benchmark: Aligned models may have different latency or cost profiles. Test in your Environment.

- Model sizing: Start with smaller Instruct-tuned variants to test behavior before scaling to larger, more expensive versions.

Provide a prompt library

- Keep a shared library of prompts for common tasks (summarization, JSON extraction, and tone rewrite). Version these prompts and log their observed performance metrics.

GPT-3 vs InstructGPT — Feature & Use-Case Comparison You Need to See

| Feature / Use Case | GPT-3 (base) | InstructGPT |

| Quick prototyping | Excellent | Excellent |

| Production assistants | Works with careful prompts | Better fit |

| Structured output (JSON) | Less reliable | More reliable |

| Moderation overhead | Higher | Lower |

| Need for a few-shot examples | High | Lower |

| Creativity (stories, poetry) | Higher variance | Reliable, sometimes conservative |

Common Pitfalls & How to Avoid Them

- Assuming zero hallucinations: Always build verification for factual tasks.

- Over-truncating prompts for cost: Too little context leads to wrong outputs.

- No schema validation: Always validate structured outputs.

- Labeler bias in RM: Diversify labelers and provide clear guidelines.

- No monitoring: Log outputs, user feedback, and maintain a human-in-the-loop for edge cases.

Short Case Studies GPT-3 vs InstructGPT

Support Bot

- Problem: GPT-3 returned inconsistent refund policies.

- Action: Switch to InstructGPT, require Return JSON format for policy decisions, and add a fallback to human support.

- Result: Reduced moderation incidents and faster correct answers.

Content Generator

- Problem: GPT-3 produced creative but unsafe claim statements.

- Action: Keep GPT-3 for creative tasks, but add post-processing checks and a factuality classifier.

- Result: Maintained creativity while reducing false claims.

GPT-3 vs InstructGPT — FAQ Guide to Pick the Right Model

A: No — it uses the same architecture but has extra fine-tuning with human feedback to follow instructions better.

A: No. It reduces some hallucinations but does not eliminate them. Always add verification for factual tasks.

A: Cost depends on provider pricing and model size. Aligned models sometimes have different pricing tiers. A/B testing helps determine cost-effectiveness.

A: Yes. The main steps are SFT, collecting human rankings, training a reward model, and optimizing with RL. Expect engineering and labeling costs.

A: Use schema validation, fact-check APIs for high-risk claims, human-in-the-loop for edge cases, and continuous monitoring/logging.

GPT-3 vs InstructGPT — Final Verdict You Can’t Miss

So GPT-3 and InstructGPT are supporting tools rather than direct rivals. GPT-3 provides adaptable, broad creative skills and excels at brainstorming, testing, and jobs that prize variety. InstructGPT layers on key alignment: it’s adjusted with human input to obey instructions, create organized outputs, and act more reliably and securely, which cuts editing and moderation work in production.

For most production setups needing steady formats, few human tweaks, and expected behavior (customer service, data pulling, document conversion), InstructGPT is the stronger choice. For testing, creative options, and tasks where luck is a plus, GPT-3 stays attractive. The simple way is to A/B test both models on your real prompts, watch human fix rates and user joy, and pick the model that matches your SLAs and product goals. Keep steady checking, solid proof, and a human-in-the-loop safety net to cut the remaining dangers.