Introduction — Perplexity AI Review (2025)

In the modern information ecosystem, professionals increasingly expect search and summarization systems to do more than return links: they want concise, traceable answers, provenance, and the ability to integrate private data with the live web. Perplexity AI positions itself precisely at that intersection. From an NLP perspective, Perplexity is best understood as a production-ready retrieval-augmented generation (RAG) system, combined with pragmatic engineering for provenance, observability, and UX, that prioritizes sourced factuality over unconstrained creativity.

What Perplexity AI is

At its core, Perplexity AI is an applied NLP pipeline that combines large language models (LLMs) with real-time web retrieval, wrapped in engineering that emphasizes traceability (citations), freshness (live indexing), and usability (a search-like interface and Deep Research orchestration).

Key conceptual components from an NLP perspective:

- Retriever: A dense (embedding-based) and/or sparse (BM25-like) retrieval layer that turns the query into vector or token-space matches against a document index.

- Indexer & freshness: A continuously updated web index and domain-specific ingestion (for user-uploaded docs) that ensures the retrieval layer pulls recent signals.

- Ranker / re-ranker: A learned re-ranking module or heuristics that orders candidate contexts by relevance before synthesis.

- RAG synthesis engine (generator/reader): An autoregressive decoder (a transformer) that conditions on retrieved contexts to produce a synthesized, concise answer, oftentimes accompanied by footnotes or numbered citations.

- Citation generator: Logic that chooses spans from source documents and attaches links; sometimes this involves span extraction and alignment heuristics.

- Workflow orchestrator: “Deep Research” that decomposes complex queries into sub-questions, issues parallel retrievals, merges results, and synthesizes a consolidated report.

- Private namespaces: A capability to upload PDFs, spreadsheets, and docs, which are vectorized and indexed separately for enterprise or Pro use.

- Controls: Model switching, temperature/creativity settings, and UI controls that limit hallucination propensity and balance precision/recall.

For anyone with NLP training, these map to canonical primitives: tokenization → embedding projection → approximate nearest neighbor (ANN) search → context window management and prompt construction → decoding → provenance annotation.

Architecture & how it works (technical walk-through)

1. Query processing and intent understanding

When a user types a question, the system performs intent classification and normalization. It applies tokenization and lightweight parsing to decide whether the query is:

- A simple Factual QA (quick retrieval),

- A multi-part research task (triggering Deep Research), or

- A request to search private documents (switching to private namespace retrieval).

Intent detection drives which retrieval pipeline (fast quick-search vs multi-query deep-search) the orchestrator invokes.

2. Retrieving and indexing

Perplexity uses real-time web retrieval. Practically, this means:

- A hybrid retrieval stack (dense embeddings + traditional lexical search) for robust recall,

- An ANN index (HNSW, Faiss, or similar) to serve vector nearest-neighbor lookups,

- Periodic index refreshes and crawling to ensure freshness,

- Private vector indexes for uploaded documents, isolated with access controls for teams.

Embedding encoders transform queries and documents into a shared semantic space. The encoder choice (sentence-transformer, cross-encoder for rerank) shapes retrieval quality. Dense retrievers excel at semantic matches (paraphrase-level) while lexical components capture exact-match signals.

3. Re-ranking & context selection

Before synthesis, candidate passages are re-ranked. Techniques used in production-grade RAG include:

- A cross-encoder reranker that scores (query, passage) pairs,

- Heuristics for penalising duplicate sources,

- Span-level trimming to keep the eventual context within the model’s token budget.

This stage is crucial for citation accuracy: if the re-ranker prioritizes shallow or irrelevant contexts, the generator may produce confident but unsupported claims.

4. Prompt engineering & synthesis

Perplexity’s synthesis stage constructs a “retrieval-augmented prompt” that concatenates top-ranked passages in a coherent order with guardrails (instructions) that push the decoder to:

- Produce concise answers,

- Attach citations for claims,

- Avoid fabrication when evidence is absent,

- Optionally return a chain-of-thought internal to the product (but not exposed publicly).

This is where model-switching comes into play in Pro tiers — you can select decoders with different creative tendencies or safety profiles.

5. Citation alignment & provenance

Aside from producing citations, a high-quality system should align individual claims to specific spans in sources (span-level grounding). Perplexity’s value proposition lies in surfacing clickable references immediately adjacent to its synthesized answer, letting humans verify claims. But citation fidelity remains a central evaluation axis (more on that below).

6. Deep Research orchestration

When a query is complex, the orchestrator fragments the top-level intent into sub-queries, dispatches them in parallel, and consolidates the synthesized micro-reports. This is analogous to decompositional planning in NLP pipelines and allows users to receive a richer, multi-angle summary.

Features

- Real-time web retrieval: Live crawling and indexing mean answers can reflect current events.

- Citation-backed answers: Each answer normally includes numbered references to sources.

- Deep Research: Automatic query decomposition + multi-query orchestration for multi-faceted topics.

- Model switching: In Pro, choose different underlying models (favouring creativity, precision, or speed).

- File uploads & private search: Vectorize, index, and search personal or team documents.

- Collaborative Spaces: Save queries, share research artifacts, and build team knowledge bases.

- API access: Enterprise- and developer-friendly endpoints to embed RAG capabilities.

- UX choices: Clean interface that looks and feels like a search engine but returns synthesized answers.

How it behaves in practice

Quick factual check (single-shot RAG)

- Query entered → retriever returns top 5 passages → re-ranker orders passages → prompt constructed → decoder synthesizes short answer + citations. Use-case: verifying a stat or finding a date.

Deep Research (multi-stage)

- Complex topic entered → orchestrator decomposes into subquestions → parallel retrieval & synthesis runs → merged report produced with sections and citations. Use-case: background briefing for a journalist.

Private-data augmented search

- Team uploads financial reports → vectorizes them → user asks a query that requires integrating internal metrics with public market reports → system returns a synthesis referencing both private docs and external sources. Use-case: competitive intelligence.

Pricing & plans

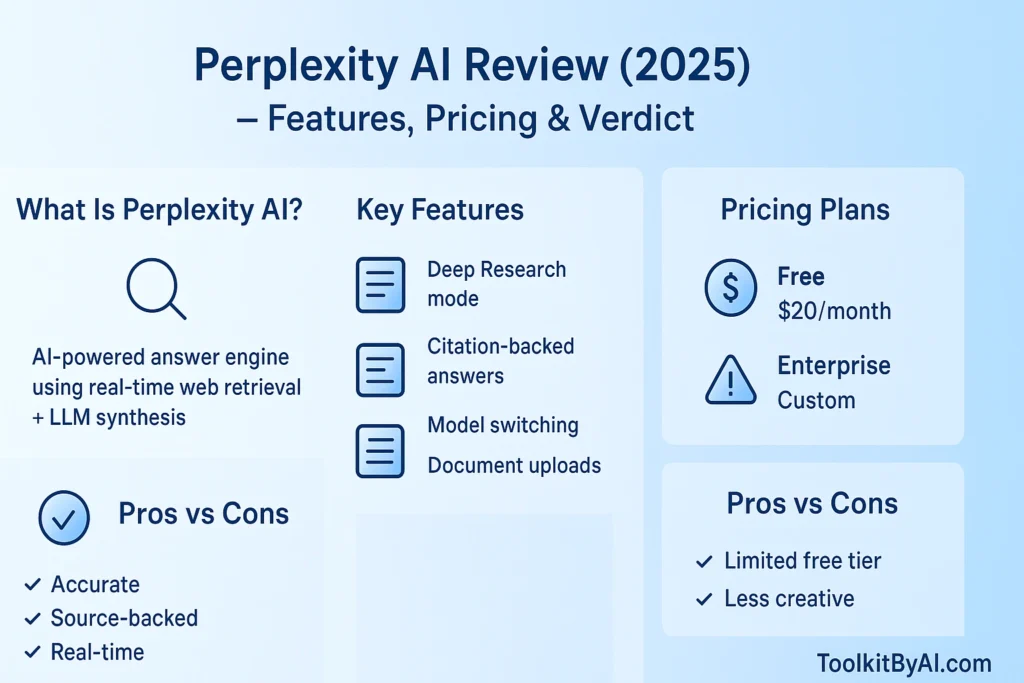

Typical structure (indicative):

- Free: Basic access, limited Deep Research runs, standard model.

- Pro (~$20/month): Unlimited quick searches, more Deep Research capacity, premium model access, file uploads, and team tools.

- API / Enterprise: Usage-based API, SLAs, admin controls, custom pricing.

Note: actual regional prices, promotions, and enterprise negotiations vary — always confirm current pricing on the official Perplexity site.

Evaluation, metrics & reliability

Perplexity optimizes for precision and provenance. But measured performance requires multiple evaluation axes:

- Retrieval metrics: Recall@k, MRR — how often top-k contains supporting evidence.

- Summarization metrics: ROUGE and ROUGE-L can measure overlap with human references; however, for factual QA, these are insufficient.

- Factuality / Hallucination metrics: Claim-level verification, FEVER-style entailment checks, or human adjudication to confirm whether claims are supported by cited sources.

- Citation quality: Proportion of claims with correct supporting links; whether links point to the exact supporting span or just a homepage.

- Latency & freshness: Time-to-answer and recency of indexed content.

Human evaluation remains the gold standard: testers should check source support, detect hallucinated facts, and measure how often the system equivocates when evidence is missing.

Known empirical weaknesses:

- Citation granularity: Sometimes links point to general pages instead of a specific passage.

- Paywall blindness: Information behind paywalls or unindexed archives may be absent.

- Hallucination under prompt pressure: If retrieval is weak, the decoder can produce plausible-sounding but unsupported claims.

- Regional variability: Crawling coverage or latency can vary by geography.

Pros & Cons

Pros

- Strong RAG engineering and evidence-first UX.

- Good for tasks needing traceability and up-to-date info.

- Deep Research offers built-in decomposition and multi-query orchestration.

- Private document indexing supports enterprise workflows.

Cons

- Not optimized for unconstrained creative composition compared to tuned generative models.

- Citation fidelity varies; span-level grounding is sometimes coarse.

- Dependence on public web means blind spots for paywalled or private sources.

- Free tier limits and possible latency for certain regions.

Real-world use-cases

- Journalists — backgrounders, quick fact checks, and sources for attribution.

- Researchers — literature surveys that require recent preprints and news.

- Market research & competitive intelligence teams — blending internal and external evidence.

- Content creators who need verified stats and sources before writing.

- Product teams need concise, sourced answers during decision-making.

Less ideal: purely creative writers who need imaginative generation and long-form stylistic work without an evidence-first constraint.

Privacy, safety, and governance

From an NLP governance perspective, important considerations include:

- Access controls for private indexes and explicit data partitioning.

- Audit logs and provenance traces to support reproducibility and compliance.

- Safety filters to detect toxic or unsafe outputs before they reach the user.

- User consent & compliance for personal data ingestion.

- Mechanisms to contest or correct incorrect citations (human-in-the-loop editing).

If you plan to ingest sensitive or regulated data, evaluate contract terms and technical safeguards carefully.

Integration & developer notes

- API access typically supports RAG queries and returns answers + citation metadata; integrate conservatively and surface citations in your UI.

- Use embedding and vector-store options to pre-index custom corpora (e.g., domain manuals, support tickets).

- Rate-limit and token-budget: design flows that respect cost-per-token and caching opportunities.

- Monitor retriever quality via offline evaluation tasks and add relevance feedback loops to improve recall.

Limitations & research directions

Perplexity’s architecture is strong in many respects but invites improvement in areas that the larger research community is actively pursuing:

- Better span-level grounding: Automatically aligning each atomic claim to a document span is still brittle.

- Evaluating truth under uncertainty: Richer uncertainty quantification (calibrated confidence scores tied to provable evidence) is necessary.

- Multi-hop reasoning with provenance: Explaining intermediate inference steps while preserving source fidelity.

- Paywalled content handling: Secure connectors to institutional repositories while respecting licensing.

- Fine-grained hallucination detection: Automated detectors that flag suspect claims in produced text.

Verdict — who should adopt Perplexity in 2025?

If your primary need is verifiable, up-to-date answers with clear sources — for journalism, research, or business intelligence — Perplexity is one of the strongest specialist tools in 2025. It is intentionally designed as a research engine: Pair it with a separate drafting/creative tool if you need a long-form narrative with stylistic polish.

FAQs

Each answer typically comes with numbered references linking to original web sources, so you can click through and verify.

Yes — in Pro/team tiers, you can upload files (PDFs, CSVs, Word docs) and search across both your uploads and the web.

Yes — for occasional queries and lightweight research, the free plan works. But if you need heavier-duty use (lots of deep research, team collaboration, premium models) you’ll likely need the Pro plan.

Not exactly. It complements them by offering synthesised, conversational answers with sources, which saves you click-through time. But for broad browsing or highly exploratory link surfing, traditional search still has its place.

Because Perplexity searches the web in real time, its answers can include very recent news, statistics, or papers — making it more current than tools relying solely on static training data.

Additional Considerations & Tips

- When using Perplexity for academic work, verify citations — automated systems can still err or fabricate references; always confirm key facts from primary sources.

- For content pipelines: use Perplexity to gather facts and citations, then pass those verified facts into a creative model for drafting to combine accuracy with style.

- If you need enterprise-level data ingestion, governance plan: consent, ACLs, logging, and retention policies.

- Test system behaviour for your locale (Islamabad/Pakistan) with pilot queries to confirm latency and regional crawling coverage.

Final verdict

Perplexity AI (2025) is a specialist, evidence-first RAG service: outstanding for research, verification, and team workflows that need provenance and up-to-date results. It is not a replacement for creative-first LLMs, but it is an excellent partner when accuracy and traceability matter. If you prioritize verifiable answers and a research-centric workflow, Perplexity should be in your toolkit — pair it with a drafting engine when you need narrative polish.