Gemini 1.5 Flash Preview vs Gemini 2.0 Flash — Full 2026 Comparison & Benchmarks

Gemini 1.5 Flash Preview vs Gemini 2.0 Flash — the battle of speed, reasoning, and multimodal AI. Developers and teams need real benchmarks, code handling, and migration insights to decide if upgrading is worth the effort. This guide reveals performance differences, cost considerations, and who should pick which model. Large language model (LLM) environments continue to evolve rapidly. For NLP engineers, data analysts, and product teams, the differences between winer model families matter not only for raw accuracy but for latency profiles, cost skill, inference pipelines, and multimodal composition. Google’s Gemini family — and in particular the Flash line — is positioned for use cases that require low-latency, high-throughput inference. However, within that Flash family, the operational tradeoffs and representational adjustment between Gemini 1.5 Flash Preview and Gemini 2.0 Flash are bid.

Gemini 1.5 Flash Preview vs Gemini 2.0 Flash — Full 2026 Comparison & Benchmarks

- Gemini 1.5 Flash Preview is advanced for throughput and token-cost efficiency. It is a sober choice for truthful pipelines: fast summarization, short Q&A, high-volume distribution, and scenarios where latency per request must be minimized.

- Gemini 2.0 Flash is a skill upgrade: stronger semantic image, improved reasoning chains, more robust code generation, and better multimodal adjustment. It trades a modest upgrade in per-call compute/cost for measurable gains in downstream task skill and reduced error propagation in multi-step prompts.

Conclusion: Use 1.5 for high-volume, low-complexity tasks; use 2.0 for hard reasoning, code fusion, and multimodal workflows that require more reliable semantic unity.

Gemini 1.5 Flash Preview vs Gemini 2.0 Flash — Structured Testing

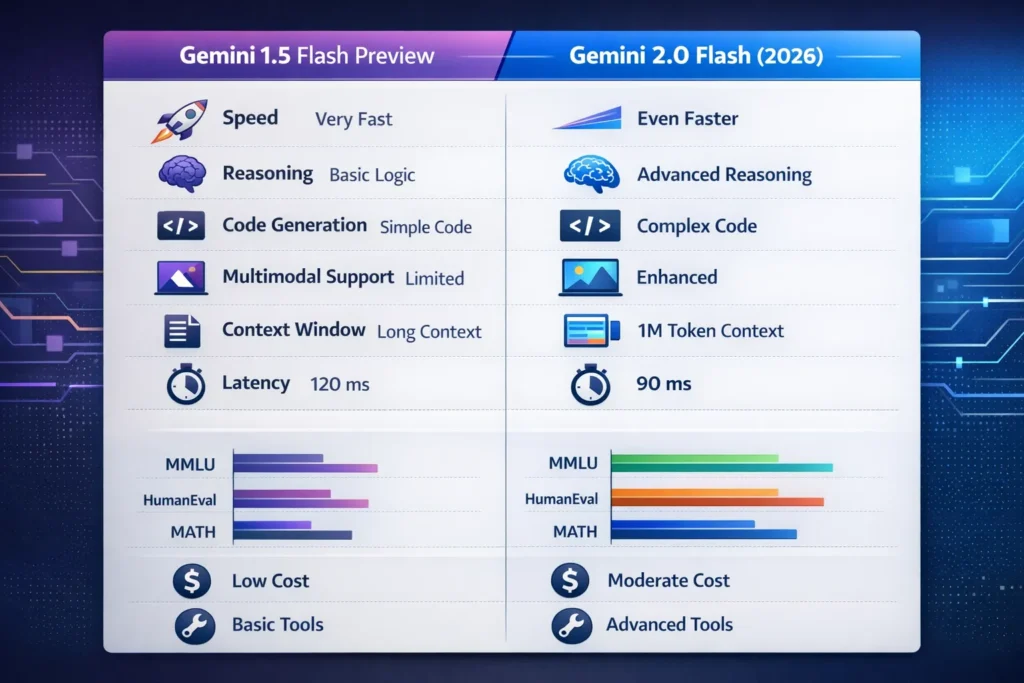

| Feature / Dimension | Gemini 1.5 Flash Preview | Gemini 2.0 Flash |

| Primary Optimization | Latency & low cost | Balanced: capability + latency |

| Release Window | Early preview (2024) | 2.0 — general availability late 2024 — 2025 |

| Intended Use Cases | High throughput, simple tasks | Reasoning, code, multimodal large-context |

| Token / Context Handling | Long context support (older optimizations) | Optimized for 1M token contexts |

| Multimodal | Basic image+text | Advanced multimodal alignment |

| Reasoning & Code | Basic reasoning; simple code | Stronger reasoning & code generation |

| Tooling & Integrations | Minimal | Enhanced tools, previews, plug-ins |

| Latency | Very low | Lower median latency with better tail behavior |

| Cost | Lower per token | Slightly higher per call, but more efficient for complex tasks |

| Lifecycle | Older variants are being deprecated | Current focus / active support |

Release, Availability & Lifecycle

From an operational view, release timing and lifecycle are not mere marketing details — they directly drive risk in production systems:

- Deprecations and model IDs: Cloud vendors (Vertex AI, Cloud APIs) may retire specific model IDs. If your production code hardcodes a 1.5 Flash Model ID, you must plan for replacement and compatibility tests.

- Support windows: 2.0 Flash received broader support and integration investments; this affects bug fixes, expanded tooling, and optimized runtimes.

- Long-term planning: Treat preview lineages (like 1.5 Preview) as transitory optimizations. Plan for migration windows and run compatibility matrices early.

Operational checklist:

- Inventory every service and endpoint using model IDs.

- Track vendor lifecycle announcements.

- Add automated alerts for model-deprecation notices and run periodic smoke tests.

Architecture & Core Capabilities

Google does not publish full model internals, but for NLP practitioners it,’s useful to reason about architectural tradeoffs and training/optimization choices that likely explain observed differences.

Representation & capacity

- 1.5 Flash is engineered for efficient representations that minimize compute per forward pass: smaller activation footprints, aggressive quantization, and optimized attention sparsity patterns.

- 2.0 Flash increases representational capacity and uses improved pretraining curricula, more diverse multimodal data, and targeted fine-tuning on code and reasoning tasks. This yields higher-quality latent representations and better generalization on complex tasks.

Attention, context windows, and Long-Range Modeling

- Both families support long context, but 2.0 Flash includes architectural and software-level optimizations for 1M-token-level contexts (memory-compression, blockwise attention, or hybrid sparse-dense attention for efficiency).

- When modeling very long documents, representation drift and context truncation strategies are critical — 2.0 aims to retain higher fidelity across longer windows.

Multimodal components

- 1.5’s multimodal capability is basic — good for captioning and short image-text tasks.

- 2.0’s multimodal pipeline includes cross-modal fusion layers and joint embedding spaces tuned to reduce modality mismatch, improving tasks like visual QA, document understanding with images, and image-grounded code commentary.

Inference Optimizations

- 2.0 benefits from advanced kernel fusions, improved operator scheduling, and better quantization that keeps compute cost low while raising output fidelity.

- These changes affect tail latency as well as throughput — a big win in production where p95/p99 latency matters.

Benchmarks & Evaluation Methodology — How to Measure Meaningfully

Benchmarks are only useful when the measurement protocol aligns with production realities.

Recommended Metrics

- Accuracy metrics: MMLU (knowledge reasoning), HumanEval (code generation), MATH (math reasoning), BLEU/ROUGE for specific tasks where applicable.

- Latency percentiles: p50, p95, p99; measure end-to-end (including network, serialization).

- Token economics: cost per token, cost per useful response.

- Robustness: hallucination rate, factuality tests, adversarial prompts.

- Multimodal fidelity: cross-modal alignment scores, retrieval-to-answer accuracy when images are present.

Benchmark Design Best Practices

- Representative prompt sampling: Use logs to stratify prompts by length, complexity, and domain.

- Separate throughput from effective latency: Don’t conflate model compute speed with network/adapter overhead.

- Use multiple seeds & environments: Evaluate under different batching and concurrency conditions.

Interpreting Results

- Gains on MMLU or HumanEval generally indicate better reasoning Representations, but translate to product improvements only when the benchmark tasks mirror your user traffic.

- Latency improvements of 10–30% may not impact user satisfaction if network jitter dominates; measure end-to-end.

Feature Breakdown: Multimodal, Context, Tooling

Multimodal Behavior

- Cross-modal grounding: 2.0 improves joint understanding across images and text, reducing misalignment in responses that require referencing visual context.

- Practical tip: When using images in prompts, add explicit grounding tokens and short descriptions to reduce ambiguity.

Context Windows and Retrieval-Augmented Workflows

- 1M-token contexts open new capabilities: entire books, long codebases, or extensive user histories can be passed in one request. But you must manage memory usage and retrieval relevance.

- RAG + Flash: Combine retrieval indexes with chunked embedding search to keep the context focused and reduce noise.

Tooling & Developer Ergonomics

- 2.0 Flash integrates better with tool abstractions (tooling for retrieval, browsing, and code execution), making it easier to build deterministic toolchains.

Head-to-Head: Practical Operational Dimensions

Below is a concise operational head-to-head that helps translate NLP tradeoffs into engineering decisions.

- Latency: 1.5 = very low; 2.0 = lower median and improved tail behavior.

- Accuracy: 2.0 > 1.5 on reasoning, code, and multimodal tasks.

- Cost: 2.0 slightly higher per call; effective cost advantage depends on task complexity (higher accuracy often reduces post-process costs).

- Context: 2.0 offers superior long-context performance and more stable semantics over long windows.

- Tooling: 2.0 has richer integrations for tools and pipeline orchestration.

Pricing & Efficiency — Real-World Considerations

Pricing varies across providers and depends on token usage, context size, and throughput. From an NLP viewpoint:

- Token cost: Flash models aim to be cheaper per token than higher-capability base models, but 2.0’s enhanced capability can reduce the number of iterations required to produce a correct output, offsetting the higher per-call price.

- Engineering cost: Migration effort, prompt tuning, test coverage, and wrapper logic incur time and dollars.

- Throughput vs. latency: Use adaptive batching and autoscaling; monitor p95/p99 and adjust batch sizes to trade latency for throughput.

Practical rule: instrument cost-to-quality metrics (e.g., cost per correct answer) rather than cost per token alone.

Migration Guidance & Lifecycle Planning

Step-by-step Migration Plan

- Inventory: catalog all endpoints, prompt templates, and pipeline expectations for 1.5.

- Define KPIs: set acceptance criteria — e.g., δ accuracy ≥ X%, p95 latency ≤ Y ms, cost per request ≤ Z.

- Create a representative test corpus: include edge cases, long-context prompts, and multimodal examples.

- Run controlled A/B tests: route 5–10% traffic to 2.0 for a defined period; log semantic metrics.

- Measure differences: analyze hallucination rates, token growth, and error types.

- Canary & rollback: deploy gradually with circuit breakers and fallbacks to cheaper endpoints when errors spike.

- Tune prompts: perform prompt engineering and small prompt-sensitivity adjustments; sometimes, minimal tuning suffices.

- Finalize migration: shift traffic once KPIs are met and observability is in place.

Observability & Testing

- Semantic tests: Automated checks for answer consistency across paraphrases and longer contexts.

- Performance tests: P50/p95/p99, token counts, throughput under concurrent load.

- Cost analytics: Cost per successful transaction, cost per retained user, etc.

Pros & Cons

Gemini 1.5 Flash Preview

Pros: Low latency, low cost, great for high-throughput simple tasks.

Cons: Lower reasoning capability, older tooling, potential deprecation.

Gemini 2.0 Flash

Pros: Superior reasoning, robust code generation, improved multimodal alignment, optimized long-context handling.

Cons: Slightly higher cost; requires testing for edge cases and prompt adjustments.

FAQs

A: Not always. From an NLP and production standpoint, “better” depends on your objective function. If your product objective prioritizes raw cost-per-short-reply and you have simple prompt sharing, 1.5 Flash may be more cost-effective. If accuracy, robust reasoning, long-context coherence, or multimodal fidelity matter, 2.0 Flash is the superior choice. Run a targeted A/B with model workloads to quantify the tradeoffs for your KPIs.

A: Yes — Gemini 2.0 Flash adds optimizations designed to handle very large context windows (1M tokens) more reliably than earlier Flash variants. However, end-to-end performance depends on your runtime, batch sizing, and any retrieval/embedding pre-processing you perform. Validate with classic large-document workflows.

A: Some older 1.5 Flash model IDs have been object or scheduled for retirement by cloud providers. Gemini 2.0 Flash has been the active focus of union since late 2024 and through 2025, but providers may still deprecate specific variants as newer releases or runtime optimizations appear. Always consult your cloud vendor’s lifecycle proof and subscribe to deprecation feeds.

A: In many cases, only prompt tuning and small template adjustments are required. However, because 2.0 changes error profiles and the way it interprets multi-step instructions, you should plan for regression testing. In practice, many teams find minor prompt rewrites plus a short validation pass are sufficient; for edge cases and deterministic toolchains, more extensive prompt mapping may be necessary.

Conclusion

The shift from Gemini 1.5 Flash Preview to Gemini 2.0 Flash isn’t just a simple upgrade—it’s a real choice that hits on output quality, speed tweaks, budget math, and what your users are okay with. For NLP teams: pick wisely, set up metrics that tie model smarts straight to your business goals, and do proper A/B tests that mimic real-world traffic. If your app needs better logic, coding chops, or handling images/text together, go for 2.0 Flash. But if you’re running huge volumes of quick, short-prompt jobs with super-strict speed limits, 1.5 might still work until it’s phased out.